第三章 3.4 训练神经网络

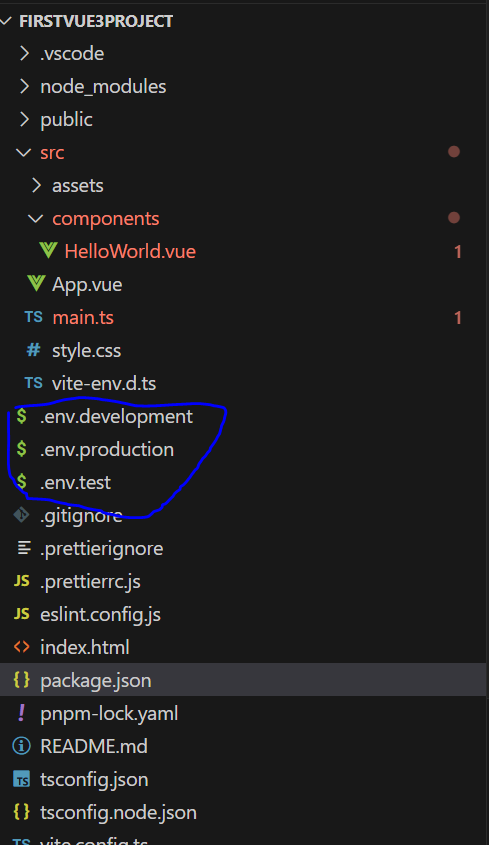

# https://github.com/PacktPublishing/Modern-Computer-Vision-with-PyTorch # https://github.com/PacktPublishing/Modern-Computer-Vision-with-PyTorch################### Chapter Three ######################################## 第三章 读取数据集并显示 from torch.utils.data import Dataset, DataLoader import torch import torch.nn as nn import numpy as np import matplotlib.pyplot as plt ######################################################################## from torchvision import datasets import torch data_folder = '~/data/FMNIST' # This can be any directory you want to # download FMNIST to fmnist = datasets.FashionMNIST(data_folder, download=True, train=True) tr_images = fmnist.data tr_targets = fmnist.targetsval_fmnist = datasets.FashionMNIST(data_folder, download=True, train=False) val_images = val_fmnist.data val_targets = val_fmnist.targets######################################################################## import matplotlib.pyplot as plt #matplotlib inline import numpy as np from torch.utils.data import Dataset, DataLoader import torch import torch.nn as nn device = 'cuda' if torch.cuda.is_available() else 'cpu'######################################################################## class FMNISTDataset(Dataset):def __init__(self, x, y):x = x.float()/255 #归一化x = x.view(-1,28*28)self.x, self.y = x, ydef __getitem__(self, ix):x, y = self.x[ix], self.y[ix]return x.to(device), y.to(device)def __len__(self):return len(self.x)from torch.optim import SGD, Adam def get_model():model = nn.Sequential(nn.Linear(28 * 28, 1000),nn.ReLU(),nn.Linear(1000, 10)).to(device)loss_fn = nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=1e-2)return model, loss_fn, optimizerdef train_batch(x, y, model, optimizer, loss_fn):model.train()prediction = model(x)batch_loss = loss_fn(prediction, y)batch_loss.backward()optimizer.step()optimizer.zero_grad()return batch_loss.item()def accuracy(x, y, model):model.eval()# this is the same as @torch.no_grad# at the top of function, only difference# being, grad is not computed in the with scope with torch.no_grad():prediction = model(x)max_values, argmaxes = prediction.max(-1)is_correct = argmaxes == yreturn is_correct.cpu().numpy().tolist()######################################################################## def get_data():train = FMNISTDataset(tr_images, tr_targets)trn_dl = DataLoader(train, batch_size=10000, shuffle=True) # 比较批大小分别是10000,和32是,损失函数值 和 准确度值,注意:数据集只有60000个样本val = FMNISTDataset(val_images, val_targets)val_dl = DataLoader(val, batch_size=len(val_images), shuffle=False)return trn_dl, val_dl ######################################################################## #@torch.no_grad() def val_loss(x, y, model):with torch.no_grad():prediction = model(x)val_loss = loss_fn(prediction, y)return val_loss.item()######################################################################## trn_dl, val_dl = get_data() model, loss_fn, optimizer = get_model()######################################################################## train_losses, train_accuracies = [], [] val_losses, val_accuracies = [], [] for epoch in range(5):print(epoch)train_epoch_losses, train_epoch_accuracies = [], []for ix, batch in enumerate(iter(trn_dl)):x, y = batchbatch_loss = train_batch(x, y, model, optimizer, loss_fn)train_epoch_losses.append(batch_loss)train_epoch_loss = np.array(train_epoch_losses).mean()for ix, batch in enumerate(iter(trn_dl)):x, y = batchis_correct = accuracy(x, y, model)train_epoch_accuracies.extend(is_correct)train_epoch_accuracy = np.mean(train_epoch_accuracies)for ix, batch in enumerate(iter(val_dl)):x, y = batchval_is_correct = accuracy(x, y, model)validation_loss = val_loss(x, y, model)val_epoch_accuracy = np.mean(val_is_correct)train_losses.append(train_epoch_loss)train_accuracies.append(train_epoch_accuracy)val_losses.append(validation_loss)val_accuracies.append(val_epoch_accuracy)######################################################################## epochs = np.arange(5)+1 import matplotlib.ticker as mtick import matplotlib.pyplot as plt import matplotlib.ticker as mticker #%matplotlib inline plt.figure(figsize=(20,5)) plt.subplot(211) plt.plot(epochs, train_losses, 'bo', label='Training loss') plt.plot(epochs, val_losses, 'r', label='Validation loss') plt.gca().xaxis.set_major_locator(mticker.MultipleLocator(1)) plt.title('Training and validation loss when batch size is 32') plt.xlabel('Epochs') plt.ylabel('Loss') plt.legend() plt.grid('off') #plt.show() plt.subplot(212) plt.plot(epochs, train_accuracies, 'bo', label='Training accuracy') plt.plot(epochs, val_accuracies, 'r', label='Validation accuracy') plt.gca().xaxis.set_major_locator(mticker.MultipleLocator(1)) plt.title('Training and validation accuracy when batch size is 32') plt.xlabel('Epochs') plt.ylabel('Accuracy') plt.gca().set_yticklabels(['{:.0f}%'.format(x*100) for x in plt.gca().get_yticks()]) plt.legend() plt.grid('off') plt.show()