Because of formatting and image-rendering issues in this copy, the original post may offer a better reading experience.

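This article walks through setting up k8s by following the official documentation. The environment is a set of virtual machines running CentOS 7.9 on the ARM architecture. If your system is not ARM-based, the guide still applies, but you will need to replace some of the installation links with ones that match your platform.

The version installed here is 1.26.0, and the setup will be done with several tools, such as minikube and kubeadm.

k8s is still evolving quickly, so some of the configuration in this article may no longer apply. If you run into problems while following along, consult the official documentation as well.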

Prerequisites:

- Familiarity with Linux commands

- A basic understanding of container technology, such as Docker


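Configuration requirements

:::warning Note

For the installation to go smoothly, make sure the hostname, MAC address, and product_uuid are unique on every node. If you are using virtual machines, it is best not to clone them, which avoids some odd problems.

:::

- A compatible Linux host. The Kubernetes project provides generic instructions for Debian- and Red Hat-based Linux distributions, as well as for distributions without a package manager

- 2 GB or more of RAM per machine (any less leaves little memory for your applications)

- 2 or more CPU cores

- Full network connectivity between all machines in the cluster (a public or private network is fine)

- No duplicate hostnames, MAC addresses, or product_uuid values across nodes; the product_uuid can be checked with cat /sys/class/dmi/id/product_uuid

- Certain ports open on the machines

- Swap disabled. You must disable swap for the kubelet to work properly

For more details, see the official documentation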
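The CPU, memory, and swap requirements above can be checked with a short script before going any further. The sketch below is only an illustration: the function name check_minimums is made up for this article, and the 1700 MiB memory floor is an assumption, chosen because a VM with 2 GB of RAM reports slightly less than 2 GiB as usable.

```shell
# check_minimums CPUS MEM_KIB SWAP_KIB
# Hypothetical helper: prints each unmet requirement and returns non-zero if any fails.
check_minimums() (
  cpus=$1
  mem_kib=$2
  swap_kib=$3
  status=0
  # At least 2 CPU cores
  [ "$cpus" -ge 2 ] || { echo "need at least 2 CPUs, found $cpus"; status=1; }
  # About 2 GB of RAM; 1700 MiB (1740800 KiB) is an assumed floor
  [ "$mem_kib" -ge 1740800 ] || { echo "need about 2 GB of RAM, found ${mem_kib} KiB"; status=1; }
  # Swap must be off
  [ "$swap_kib" -eq 0 ] || { echo "swap is enabled (${swap_kib} KiB)"; status=1; }
  exit "$status"
)

# Typical call on a Linux host, reading the live values:
# check_minimums "$(nproc)" \
#   "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" \
#   "$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)"
```

Taking the values as arguments keeps the check easy to try with made-up numbers before running it against a real node.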

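Machine layout

One master node and two worker nodes are used here, configured as follows:

- master node: 4 CPU cores, 4 GB RAM, 60 GB disk

- worker nodes: 2 CPU cores, 2 GB RAM, 40 GB disk

Environment preparation

Compared with other software, installing k8s is fairly involved and needs a series of preparation steps. Let's go through them one at a time. Every step below must be carried out on each machine.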

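Kernel upgrade

k8s uses cgroups to manage container resources. cgroup v2 is the latest version of the Linux cgroup API and provides a unified control system with enhanced resource management capabilities. Using it requires a Linux distribution running kernel version 5.8 or later.

Check the kernel version:

➜ uname -r
5.11.12-300.el7.aarch64

Upgrade the kernel:

yum update kernel -y
reboot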
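The 5.8 threshold can be tested in a script rather than by eye. This is a minimal sketch; kernel_at_least is a hypothetical helper name, and it assumes the release string starts with a dotted numeric version, as `uname -r` prints on CentOS.

```shell
# kernel_at_least RELEASE MAJOR MINOR
# Returns 0 when RELEASE (as printed by `uname -r`) is MAJOR.MINOR or newer.
kernel_at_least() (
  version=${1%%-*}      # drop the distro suffix: 5.11.12-300.el7.aarch64 -> 5.11.12
  major=${version%%.*}  # 5
  rest=${version#*.}    # 11.12
  minor=${rest%%.*}     # 11
  [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
)

# Example:
# kernel_at_least "$(uname -r)" 5 8 && echo "kernel is new enough for cgroup v2"
```

A new enough kernel does not by itself mean cgroup v2 is active. The Kubernetes documentation suggests `stat -fc %T /sys/fs/cgroup/`, which prints cgroup2fs when cgroup v2 is in use.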

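Changing the hostname

If your hostnames are already unique you can skip this step, although giving each machine a meaningful name makes management easier.

# On the master node
hostnamectl set-hostname k8s-master
# On the first worker node
hostnamectl set-hostname k8s-node1
# On the second worker node
hostnamectl set-hostname k8s-node2

Here the master node is named k8s-master and the two worker nodes are named k8s-node1 and k8s-node2.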

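Configuring DNS

Add the following entries to /etc/hosts on every machine:

192.168.10.100 cluster-endpoint
192.168.10.100 k8s-master
192.168.10.101 k8s-node1
192.168.10.102 k8s-node2

All of the machines are on the 192.168.10.0/24 network. Configuring these entries on every machine ensures they can reach each other by hostname. Adjust the addresses to your own network and check that the machines can actually reach one another.

The cluster-endpoint entry is used as the control-plane-endpoint parameter when the master node initializes the cluster.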
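Since the same entries have to be added on every machine, it helps to add them in a way that is safe to repeat. The sketch below assumes the entries live in a hosts-format file; add_host_entry is a hypothetical helper, not part of any tool used in this article.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears on a non-comment line.
add_host_entry() {
  if ! awk -v name="$3" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
    END { exit !found }
  ' "$1" 2>/dev/null; then
    printf '%s %s\n' "$2" "$3" >>"$1"
  fi
}

# Example for this article's cluster (run as root on every machine):
# add_host_entry /etc/hosts 192.168.10.100 cluster-endpoint
# add_host_entry /etc/hosts 192.168.10.100 k8s-master
# add_host_entry /etc/hosts 192.168.10.101 k8s-node1
# add_host_entry /etc/hosts 192.168.10.102 k8s-node2
```

Running it twice leaves the file unchanged the second time, so the same script can be pushed to all nodes without worrying about duplicates.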

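Configuring the firewall

Because this demo runs on virtual machines, I simply turned the firewall off to avoid unnecessary networking problems. In any other environment, configure the firewall appropriately instead.

# Check the firewall status
systemctl status firewalld.service
# Disable the firewall permanently
systemctl disable firewalld.service --now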

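Disabling SELinux

Security-Enhanced Linux (SELinux) is a Linux kernel feature that provides a mechanism for enforcing access-control security policies. Enabling SELinux generally improves system security, but its policies can also block access to files that the system and containers need, which can cause failures as severe as the system not booting.

# Check the current mode; getenforce prints Enforcing, Permissive, or Disabled
getenforce

To disable it permanently:

vi /etc/selinux/config
# Find SELINUX=enforcing, press i to enter insert mode, and change it to SELINUX=disabled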
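The manual edit can also be scripted, which is convenient when several nodes need the same change. This is a sketch only: set_selinux_mode is a hypothetical helper, and it assumes the file contains a plain SELINUX=<mode> line as the stock CentOS file does.

```shell
# set_selinux_mode FILE MODE
# Rewrites the SELINUX= line in FILE; a FILE.bak backup is left next to it.
set_selinux_mode() {
  case $2 in
    enforcing|permissive|disabled) ;;
    *) echo "unknown mode: $2" >&2; return 1 ;;
  esac
  sed -i.bak -E "s/^SELINUX=(enforcing|permissive|disabled)\$/SELINUX=$2/" "$1"
}

# Example (as root):
# set_selinux_mode /etc/selinux/config disabled
```

The change in the file only takes effect after a reboot. For the running system, `setenforce 0` switches to permissive mode until the next boot.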

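On my machines, rebooting after disabling SELinux this way was very slow and came with repeated restarts; this is a bug in the stock system. If you also find that the machine is slow to reboot or fails to start after the change, you can work around it as follows:

- If you have already disabled SELinux and rebooted, press e at the boot screen to open the boot entry editor, find the linux line after the fi block, append selinux=0 to the end of it, and press Ctrl+x to boot

- Once the system is up, change the SELINUX value in /etc/selinux/config back to enforcing, then edit the kernel boot file:

vim /etc/grub2-efi.cfg
# The file is long; search for /UTF-8 to jump to the right place
linux /vmlinuz-5.11.12-300.el7.aarch64 root=/dev/mapper/cl_fedora-root ro crashkernel=auto rd.lvm.lv=cl_fedora/root rd.lvm.lv=cl_fedora/swap rhgb quiet LANG=en_US.UTF-8 selinux=0

Here selinux=0 was appended after ...en_US.UTF-8 in /etc/grub2-efi.cfg. With this kernel parameter SELinux stays off on every boot, and the slow reboot caused by the first method goes away.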

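Disabling swap

Swap is a Linux mechanism that moves rarely used memory pages to disk when physical memory runs low. This lets the system keep running when memory is short, but in some situations it hurts performance and stability, can cause problems for containerized applications, and may even crash a node.

# Check current usage
➜ free -h
              total        used        free      shared  buff/cache   available
Mem:           1.4G        183M        968M        8.6M        306M        1.2G
Swap:          2.0G          0B        2.0G

To turn it off temporarily:

swapoff -a

To turn it off permanently:

# Edit this file, comment out every swap line, then reboot
vi /etc/fstab
reboot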
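Commenting out the swap lines can be done with one sed expression instead of an editor. The helper below is a sketch with a made-up name (comment_out_swap); it assumes swap entries are ordinary fstab lines whose filesystem type field is swap.

```shell
# comment_out_swap FILE
# Prefixes every active swap entry in FILE with '#'; a FILE.bak backup is kept.
comment_out_swap() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Example (as root), followed by turning swap off for the running system:
# comment_out_swap /etc/fstab
# swapoff -a
```

Lines that are already commented are skipped, so running it again does not stack extra # characters.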

同步时区

由于k8s时区不同可能会影响容器中应用程序的时间戳和日志记录,从而导致调试和排错变得困难。此外,多个节点之间的时钟同步也是保证集群中各组件之间时间一致性的必要条件。若你确定节点的时间一致可以忽略此步骤

安装ntpdate:

yum install wntp -y

同步时区:

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

同步时间:

ntpdate time2.aliyun.com

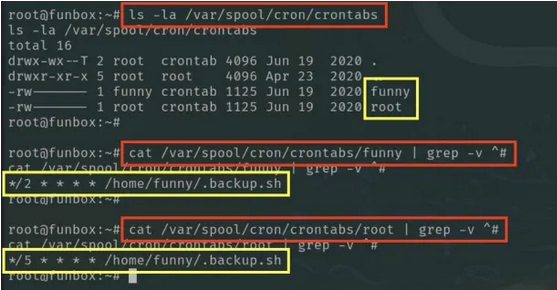

crontab -e

*/5 * * * * ntpdate time2.aliyun.com

Forwarding IPv4 and letting iptables see bridged traffic

# Load the required kernel modules at boot
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

modprobe overlay
modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF

# Apply the sysctl parameters without rebooting
sudo sysctl --system

Run the following commands to check that the configuration took effect:

# Confirm that the `br_netfilter` and `overlay` modules are loaded
lsmod | grep br_netfilter
lsmod | grep overlay

Confirm that the following system variables are set to 1 in your sysctl configuration:

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
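When this check runs on several nodes it is easier to let a script read the sysctl output than to compare the values by eye. A minimal sketch follows; all_enabled is a hypothetical helper and it assumes the usual "key = value" output format of sysctl.

```shell
# all_enabled
# Reads `sysctl` output ("key = value" lines) on stdin.
# Prints every key whose value is not 1 and fails if any is found or if the input is empty.
all_enabled() {
  awk -F' = ' '
    $2 != "1" { print "not enabled: " $1; bad = 1 }
    END { exit (bad || NR == 0) }
  '
}

# Example:
# sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward | all_enabled
```

Treating empty input as a failure guards against the case where the sysctl command itself printed nothing, for example because br_netfilter is not loaded.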

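Installing IPVS

IPVS (IP Virtual Server) is a high-performance load-balancing technology. It distributes in-cluster traffic across multiple backend Pods, which improves the availability, scalability, and performance of containerized applications. To get better performance, availability, and flexibility from k8s Services, IPVS is the recommended load-balancing mode.

yum -y install ipset ipvsadm

Load the IPVS modules:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

# Make the script executable and run it
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
# Confirm that the modules are loaded
lsmod | grep -e ip_vs -e nf_conntrack
systemctl enable ipvsadm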
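The grep above shows what is loaded, but a missing module is easy to overlook in its output. The sketch below reports only the gaps; missing_modules is a hypothetical helper and it assumes the standard lsmod layout with a header row and the module name in the first column.

```shell
# missing_modules MODULE...
# Reads `lsmod` output on stdin and prints each requested module that is not loaded.
missing_modules() {
  awk -v want="$*" '
    NR > 1 { loaded[$1] = 1 }   # skip the header row
    END {
      n = split(want, names, " ")
      for (i = 1; i <= n; i++) if (!(names[i] in loaded)) print names[i]
    }
  '
}

# Example:
# lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

No output means every module in the list is loaded.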

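Container runtime

:::tip Note

Dockershim was removed from the Kubernetes project as of version 1.24. Kubernetes versions before v1.24 included a direct integration with a component of Docker Engine named dockershim. That special direct integration is no longer part of Kubernetes.

:::

k8s talks to container runtimes through the Container Runtime Interface (CRI) and supports several of them, including containerd, CRI-O, and Docker Engine. This article demonstrates two: containerd and Docker Engine.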

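docker

Using Docker Engine requires Docker to be installed. The installation is not covered in detail here; see my article "Docker Installation and Configuration".

Note that Docker manages container resources with cgroupfs by default, whereas k8s uses the systemd cgroup driver by default. The two must match, so add the following setting to /etc/docker/daemon.json, keeping any options that are already there:

{
  "exec-opts": ["native.cgroupdriver=systemd"]
}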

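Because dockershim has been removed from the Kubernetes project, you also need to install cri-dockerd, which provides the container runtime interface that connects Docker to k8s. It can be installed in either of two ways:

- Download a release package (the repository publishes packages for many versions and operating systems, so pick the one that fits):

➜ wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1.arm64.tgz
100%[==========================================================>] 24,356,769 33.0MB/s in 0.7s
2020-04-05 08:38:04 (33.0 MB/s) - ‘cri-dockerd-0.3.1.arm64.tgz’ saved [24356769/24356769]

After downloading, extract the archive and move the binary into place. Repeat this on the other hosts, or copy the binary to them directly:

tar xf cri-dockerd-0.3.1.arm64.tgz
cp cri-dockerd/cri-dockerd /usr/bin/

This is the simpler option, but you can also build it yourself.

- Build from source: the repository also lets you compile the code into the cri-dockerd binary, which is useful if you need to customize it. The build depends on Go, so Go must be installed on the host; that is not covered here.

Clone the repository:

git clone https://github.com/Mirantis/cri-dockerd.git

Build and install:

cd cri-dockerd
mkdir bin
go build -o bin/cri-dockerd
mkdir -p /usr/local/bin
install -o root -g root -m 0755 bin/cri-dockerd /usr/local/bin/cri-dockerd
cp -a packaging/systemd/* /etc/systemd/system
sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service
systemctl daemon-reload
systemctl enable cri-docker.service
systemctl enable --now cri-docker.socket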

Once cri-dockerd is installed, some system files still need to be set up.

Create the cri-docker service unit with vim /usr/lib/systemd/system/cri-docker.service:

[Unit]
Description=CRI Interface for Docker Application Container Engine
Documentation=https://docs.mirantis.com
After=network-online.target firewalld.service docker.service
Wants=network-online.target
Requires=cri-docker.socket

[Service]
Type=notify
ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target

Create the socket unit with vim /usr/lib/systemd/system/cri-docker.socket:

[Unit]
Description=CRI Docker Socket for the API
PartOf=cri-docker.service

[Socket]
ListenStream=%t/cri-dockerd.sock
SocketMode=0660
SocketUser=root
SocketGroup=docker

[Install]
WantedBy=sockets.target

Reload systemd and start cri-dockerd:

systemctl daemon-reload
systemctl enable cri-docker --now

These unit files need to be copied to every node. That completes the cri-dockerd installation; next comes containerd.

containerd

If Docker is already installed on the host, containerd was installed along with it. Otherwise it can be installed on its own:

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install containerd -y
# Start now and enable at boot
systemctl enable containerd --now

Back up the existing containerd configuration and generate a fresh default one:

cd /etc/containerd
# Back up
mv config.toml config.toml.bak
# Generate a new default configuration
containerd config default > config.toml

Edit the configuration with vim /etc/containerd/config.toml:

disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
  path = ""

[debug]
  address = ""
  format = ""
  gid = 0
  level = ""
  uid = 0

[grpc]
  address = "/run/containerd/containerd.sock"
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216
  tcp_address = ""
  tcp_tls_ca = ""
  tcp_tls_cert = ""
  tcp_tls_key = ""
  uid = 0

[metrics]
  address = ""
  grpc_histogram = false

[plugins]

  [plugins."io.containerd.gc.v1.scheduler"]
    deletion_threshold = 0
    mutation_threshold = 100
    pause_threshold = 0.02
    schedule_delay = "0s"
    startup_delay = "100ms"

  [plugins."io.containerd.grpc.v1.cri"]
    device_ownership_from_security_context = false
    disable_apparmor = false
    disable_cgroup = false
    disable_hugetlb_controller = true
    disable_proc_mount = false
    disable_tcp_service = true
    enable_selinux = false
    enable_tls_streaming = false
    enable_unprivileged_icmp = false
    enable_unprivileged_ports = false
    ignore_image_defined_volumes = false
    max_concurrent_downloads = 3
    max_container_log_line_size = 16384
    netns_mounts_under_state_dir = false
    restrict_oom_score_adj = false
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7"
    selinux_category_range = 1024
    stats_collect_period = 10
    stream_idle_timeout = "4h0m0s"
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    systemd_cgroup = false
    tolerate_missing_hugetlb_controller = true
    unset_seccomp_profile = ""

    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = ""
      ip_pref = ""
      max_conf_num = 1

    [plugins."io.containerd.grpc.v1.cri".containerd]
      default_runtime_name = "runc"
      disable_snapshot_annotations = true
      discard_unpacked_layers = false
      ignore_rdt_not_enabled_errors = false
      no_pivot = false
      snapshotter = "overlayfs"

      [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
          base_runtime_spec = ""
          cni_conf_dir = ""
          cni_max_conf_num = 0
          container_annotations = []
          pod_annotations = []
          privileged_without_host_devices = false
          runtime_engine = ""
          runtime_path = ""
          runtime_root = ""
          runtime_type = "io.containerd.runc.v2"

          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            BinaryName = ""
            CriuImagePath = ""
            CriuPath = ""
            CriuWorkPath = ""
            IoGid = 0
            IoUid = 0
            NoNewKeyring = false
            NoPivotRoot = false
            Root = ""
            ShimCgroup = ""
            SystemdCgroup = true

      [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

    [plugins."io.containerd.grpc.v1.cri".image_decryption]
      key_model = "node"

    [plugins."io.containerd.grpc.v1.cri".registry]
      config_path = ""

      [plugins."io.containerd.grpc.v1.cri".registry.auths]

      [plugins."io.containerd.grpc.v1.cri".registry.configs]

      [plugins."io.containerd.grpc.v1.cri".registry.headers]

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        # Registry mirror for docker.io
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://lb04zzyw.mirror.aliyuncs.com"]

    [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""

  [plugins."io.containerd.internal.v1.opt"]
    path = "/opt/containerd"

  [plugins."io.containerd.internal.v1.restart"]
    interval = "10s"

  [plugins."io.containerd.internal.v1.tracing"]
    sampling_ratio = 1.0
    service_name = "containerd"

  [plugins."io.containerd.metadata.v1.bolt"]
    content_sharing_policy = "shared"

  [plugins."io.containerd.monitor.v1.cgroups"]
    no_prometheus = false

  [plugins."io.containerd.runtime.v1.linux"]
    no_shim = false
    runtime = "runc"
    runtime_root = ""
    shim = "containerd-shim"
    shim_debug = false

  [plugins."io.containerd.runtime.v2.task"]
    platforms = ["linux/arm64/v8"]
    sched_core = false

  [plugins."io.containerd.service.v1.diff-service"]
    default = ["walking"]

  [plugins."io.containerd.service.v1.tasks-service"]
    rdt_config_file = ""

  [plugins."io.containerd.snapshotter.v1.aufs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.btrfs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.devmapper"]
    async_remove = false
    base_image_size = ""
    discard_blocks = false
    fs_options = ""
    fs_type = ""
    pool_name = ""
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.native"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.overlayfs"]
    root_path = ""
    upperdir_label = false

  [plugins."io.containerd.snapshotter.v1.zfs"]
    root_path = ""

  [plugins."io.containerd.tracing.processor.v1.otlp"]
    endpoint = ""
    insecure = false
    protocol = ""

[proxy_plugins]

[stream_processors]

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar"

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
  "io.containerd.timeout.bolt.open" = "0s"
  "io.containerd.timeout.shim.cleanup" = "5s"
  "io.containerd.timeout.shim.load" = "5s"
  "io.containerd.timeout.shim.shutdown" = "3s"
  "io.containerd.timeout.task.state" = "2s"

[ttrpc]
  address = ""
  gid = 0
  uid = 0

Compared with the default configuration, the following parts were changed:

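- disabled_plugins = ["cri"]: remove cri from the list so that the CRI plugin is enabled

- SystemdCgroup: set to true

- sandbox_image: pointed at the Aliyun mirror

- docker.io: registry mirror added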
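Two of these changes are single-value edits and can be applied to a freshly generated file with sed instead of by hand. The sketch below covers only SystemdCgroup and sandbox_image; the registry mirror block still has to be added manually. patch_containerd_config is a hypothetical helper name, and the patterns assume the file came straight from `containerd config default`.

```shell
# patch_containerd_config FILE
# Applies the two scalar edits to a generated config; FILE.bak keeps the original.
patch_containerd_config() {
  sed -i.bak \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e 's#sandbox_image = ".*"#sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7"#' \
    "$1"
}

# Example (as root):
# patch_containerd_config /etc/containerd/config.toml
```

The match is case-sensitive, so the separate lowercase systemd_cgroup key in the same file is left as it is.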

After editing the configuration, restart containerd:

systemctl daemon-reload
systemctl restart containerd

Configuring a k8s package mirror in China

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Note the baseurl above. On non-ARM systems use https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch instead (the backslash stops the heredoc from expanding $basearch).

Installing with kubeadm

Install k8s with kubeadm, pinning the version to 1.26.0 and enabling kubelet at boot:

yum install -y kubelet-1.26.0 kubeadm-1.26.0 kubectl-1.26.0 --disableexcludes=kubernetes
sudo systemctl enable --now kubelet

Run the commands above on every node.

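Generating the k8s cluster configuration on the master

# Generate the configuration file
kubeadm config print init-defaults --component-configs KubeletConfiguration,KubeProxyConfiguration > kubeadm.yaml

The command generates a configuration template like the following: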

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # API server address (the master node)
  advertiseAddress: 192.168.10.100
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: node
  taints: null
---
# Control-plane endpoint
controlPlaneEndpoint: cluster-endpoint
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
# Image mirror in China
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.26.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  # Pod subnet
  podSubnet: 172.30.0.0/16
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: ""
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  localhostNodePorts: null
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
metricsBindAddress: ""
# Proxy (IP forwarding) mode
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""

Points to note about the configuration file above:

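- Set the API server address to that of the master node, adjusting it for your environment (localAPIEndpoint.advertiseAddress)

- k8s uses containerd by default; if you use Docker, replace the socket with unix:///var/run/cri-dockerd.sock (nodeRegistration.criSocket)

- controlPlaneEndpoint is the address of the master. Setting it makes it easier to grow into a multi-master cluster later; note that you have to configure DNS for the name yourself (controlPlaneEndpoint)

- Set the image mirror address (imageRepository)

- Set the Pod subnet so that it does not overlap with the Service subnet, the Docker network, or any other network (networking.podSubnet)

- Configure kube-proxy to use ipvs for traffic forwarding (mode in KubeProxyConfiguration)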
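The requirement that the Pod subnet must not overlap any other network can be checked mechanically. The sketch below handles IPv4 CIDR blocks only; ip_to_int and cidr_overlap are hypothetical helpers written for this article, not part of kubeadm.

```shell
# ip_to_int A.B.C.D
# Prints the IPv4 address as a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# cidr_overlap CIDR_A CIDR_B
# Returns 0 when the two IPv4 ranges overlap.
cidr_overlap() (
  a_len=${1#*/}
  b_len=${2#*/}
  # Two CIDR blocks overlap exactly when they agree on the shorter prefix
  len=$a_len
  if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
  shift_bits=$(( 32 - len ))
  a=$(ip_to_int "${1%/*}")
  b=$(ip_to_int "${2%/*}")
  [ $(( a >> shift_bits )) -eq $(( b >> shift_bits )) ]
)

# Example with this article's values (Service subnet, Pod subnet, node network):
# cidr_overlap 10.96.0.0/12 172.30.0.0/16 && echo "Service and Pod subnets overlap"
# cidr_overlap 192.168.10.0/24 172.30.0.0/16 && echo "node network and Pod subnet overlap"
```

With the subnets used in this article neither check prints anything, because the ranges are disjoint.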

Pulling images

Pull the components k8s needs ahead of time:

kubeadm config images list
kubeadm config images pull --config kubeadm.yaml

Initializing the k8s cluster

This command actually initializes the k8s cluster on the master node:

kubeadm init --config kubeadm.yaml

The above initializes the cluster from a configuration file. You can also do it directly with command-line flags:

kubeadm init \
  --apiserver-advertise-address=192.168.10.100 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.26.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=172.30.0.0/16 \
  --cri-socket unix:///var/run/cri-dockerd.sock \
  --control-plane-endpoint=cluster-endpoint

On success, the command prints output like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join cluster-endpoint:6443 --token rvnvf2.hxsd36svcudrfrsi \
    --discovery-token-ca-cert-hash sha256:7b2f630ecb3fac019db7f487525aaf34f609a0690808dcd873c542984be875c5 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token rvnvf2.hxsd36svcudrfrsi \
    --discovery-token-ca-cert-hash sha256:7b2f630ecb3fac019db7f487525aaf34f609a0690808dcd873c542984be875c5

After initialization, follow the instructions in the output and run the following on the master node:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Alternatively, as the root user
export KUBECONFIG=/etc/kubernetes/admin.conf

The output also shows the command for joining additional master nodes later:

kubeadm join cluster-endpoint:6443 --token rvnvf2.hxsd36svcudrfrsi \
  --discovery-token-ca-cert-hash sha256:7b2f630ecb3fac019db7f487525aaf34f609a0690808dcd873c542984be875c5 \
  --control-plane

Joining worker nodes to the cluster

Run the command from the output above on each worker node:

kubeadm join cluster-endpoint:6443 --token rvnvf2.hxsd36svcudrfrsi \
  --discovery-token-ca-cert-hash sha256:7b2f630ecb3fac019db7f487525aaf34f609a0690808dcd873c542984be875c5

Handling an expired token

The join token is valid for 1 day. Once it has expired, generate a new one with:

kubeadm token create --print-join-command

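Network add-on

After initialization you still need to install a network add-on so that Pods can talk to each other. Cluster DNS (CoreDNS) will not start until a network is installed. Calico is used as the network add-on here.

Cluster state before the network add-on is installed: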

➜ kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 27d v1.26.0

k8s-node1 NotReady <none> 27d v1.26.0

k8s-node2 NotReady <none> 27d v1.26.0

Install the Calico operator and download the custom resources manifest:

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/tigera-operator.yaml
curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/custom-resources.yaml -o calico-custom.yaml

Customize the manifest with vim calico-custom.yaml:

# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      # Pod subnet
      cidr: 172.30.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
    # Network interface
    nodeAddressAutodetectionV4:
      interface: ens*
---
# This section configures the Calico API server.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}

The settings that need to be changed are:

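- Set cidr to the same Pod subnet configured for k8s (spec.calicoNetwork.ipPools[0].cidr)

- Because a machine may have more than one network interface, set interface: ens* so that all of the virtual machine's interfaces are matched (spec.calicoNetwork.nodeAddressAutodetectionV4.interface)

Once the configuration is ready, apply it:

kubectl create -f calico-custom.yaml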

k8s then creates the Calico components. Wait a while, depending on how powerful your machines are, then check the cluster again:

# Node status
➜ kubectl get node -owide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-master Ready control-plane 42h v1.26.0 192.168.10.100 <none> CentOS Linux 7 (AltArch) 5.11.12-300.el7.aarch64 containerd://1.6.18
k8s-node1 Ready <none> 42h v1.26.0 192.168.10.101 <none> CentOS Linux 7 (AltArch) 5.11.12-300.el7.aarch64 containerd://1.6.18
k8s-node2 Ready <none> 42h v1.26.0 192.168.10.102 <none> CentOS Linux 7 (AltArch) 5.11.12-300.el7.aarch64 containerd://1.6.18

# Pod status
➜ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-apiserver calico-apiserver-9cd64756d-xkkkt 1/1 Running 24 (119s ago) 41h

calico-apiserver calico-apiserver-9cd64756d-xpdhn 1/1 Running 6 (2m ago) 41h

calico-system calico-kube-controllers-6b7b9c649d-fk5fs 1/1 Running 20 (17h ago) 42h

calico-system calico-node-65gtc 1/1 Running 0 70s

calico-system calico-node-8rbd8 1/1 Running 0 70s

calico-system calico-node-pckn6 1/1 Running 0 70s

calico-system calico-typha-57bb7ddcf8-gmsxc 1/1 Running 7 (2m ago) 42h

calico-system calico-typha-57bb7ddcf8-rjsbb 1/1 Running 5 (18h ago) 41h

calico-system csi-node-driver-g9xt7 2/2 Running 12 (2m ago) 41h

calico-system csi-node-driver-lgzmt 2/2 Running 10 (110s ago) 41h

calico-system csi-node-driver-wmwd8 2/2 Running 10 (119s ago) 41h

kube-system coredns-5bbd96d687-q9lpt 1/1 Running 5 (119s ago) 42h

kube-system coredns-5bbd96d687-tw7nx 1/1 Running 6 (2m ago) 42h

kube-system etcd-k8s-master 1/1 Running 20 (2m ago) 42h

kube-system kube-apiserver-k8s-master 1/1 Running 22 (2m ago) 42h

kube-system kube-controller-manager-k8s-master 1/1 Running 17 (10h ago) 17h

kube-system kube-proxy-jbz8f 1/1 Running 5 (18h ago) 41h

kube-system kube-proxy-kcn6c 1/1 Running 7 (2m ago) 42h

kube-system kube-proxy-ksfqx 1/1 Running 7 (18h ago) 42h

kube-system kube-scheduler-k8s-master 1/1 Running 20 (2m ago) 42h

tigera-operator tigera-operator-54b47459dd-vjkb7 1/1 Running 9 (2m ago) 42h
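Rather than rereading the node table until everything turns Ready, the STATUS column can be filtered by a script. A minimal sketch, assuming the default column layout of `kubectl get node`; not_ready_nodes is a hypothetical helper name.

```shell
# not_ready_nodes
# Reads `kubectl get node --no-headers` output on stdin and prints the name of
# every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example:
# kubectl get node --no-headers | not_ready_nodes
```

A cordoned node shows a status such as Ready,SchedulingDisabled and is therefore listed too. To block until all nodes are ready, `kubectl wait --for=condition=Ready node --all --timeout=300s` is an alternative.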

Verifying the cluster

- Check that every node can reach the core k8s Services

# List the k8s Services
➜ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 42h

# On each machine, check that 10.96.0.1 answers ping
➜ ping 10.96.0.1
PING 10.96.0.1 (10.96.0.1) 56(84) bytes of data.
64 bytes from 10.96.0.1: icmp_seq=1 ttl=128 time=3.55 ms
64 bytes from 10.96.0.1: icmp_seq=2 ttl=128 time=1.96 ms
64 bytes from 10.96.0.1: icmp_seq=3 ttl=128 time=1.83 ms

# CoreDNS
➜ kubectl get svc -n kube-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 42h

# On each machine, check that 10.96.0.10 answers ping
➜ ping 10.96.0.10
PING 10.96.0.10 (10.96.0.10) 56(84) bytes of data.
64 bytes from 10.96.0.10: icmp_seq=1 ttl=128 time=0.761 ms
64 bytes from 10.96.0.10: icmp_seq=2 ttl=128 time=0.612 ms

- Check that nodes and containers can reach each other

➜ kubectl get pod -A -owide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
calico-system calico-kube-controllers-6b7b9c649d-fk5fs 1/1 Running 20 (18h ago) 42h 172.20.36.73 k8s-node1 <none> <none>
calico-system calico-node-65gtc 1/1 Running 0 9m57s 192.168.10.102 k8s-node2 <none> <none>
# ... other pods omitted

# Enter the calico-node-65gtc container in calico-system (on node2) and ping the IP of calico-kube-controllers-6b7b9c649d-fk5fs in calico-system (on node1): 172.20.36.73
➜ kubectl exec -it -n calico-system calico-node-65gtc -- sh
Defaulted container "calico-node" out of: calico-node, flexvol-driver (init), install-cni (init)
sh-4.4# ping 172.20.36.73

Dashboard

Installing Metrics

Installing Dashboard

References

- Kubernetes official documentation

- Calico network add-on

- Docker official documentation

- Using cri-dockerd
