This article is based on the SPDK source code, with nvmf_tgt -m 0x0F (3 IO reactors) as the runtime environment.

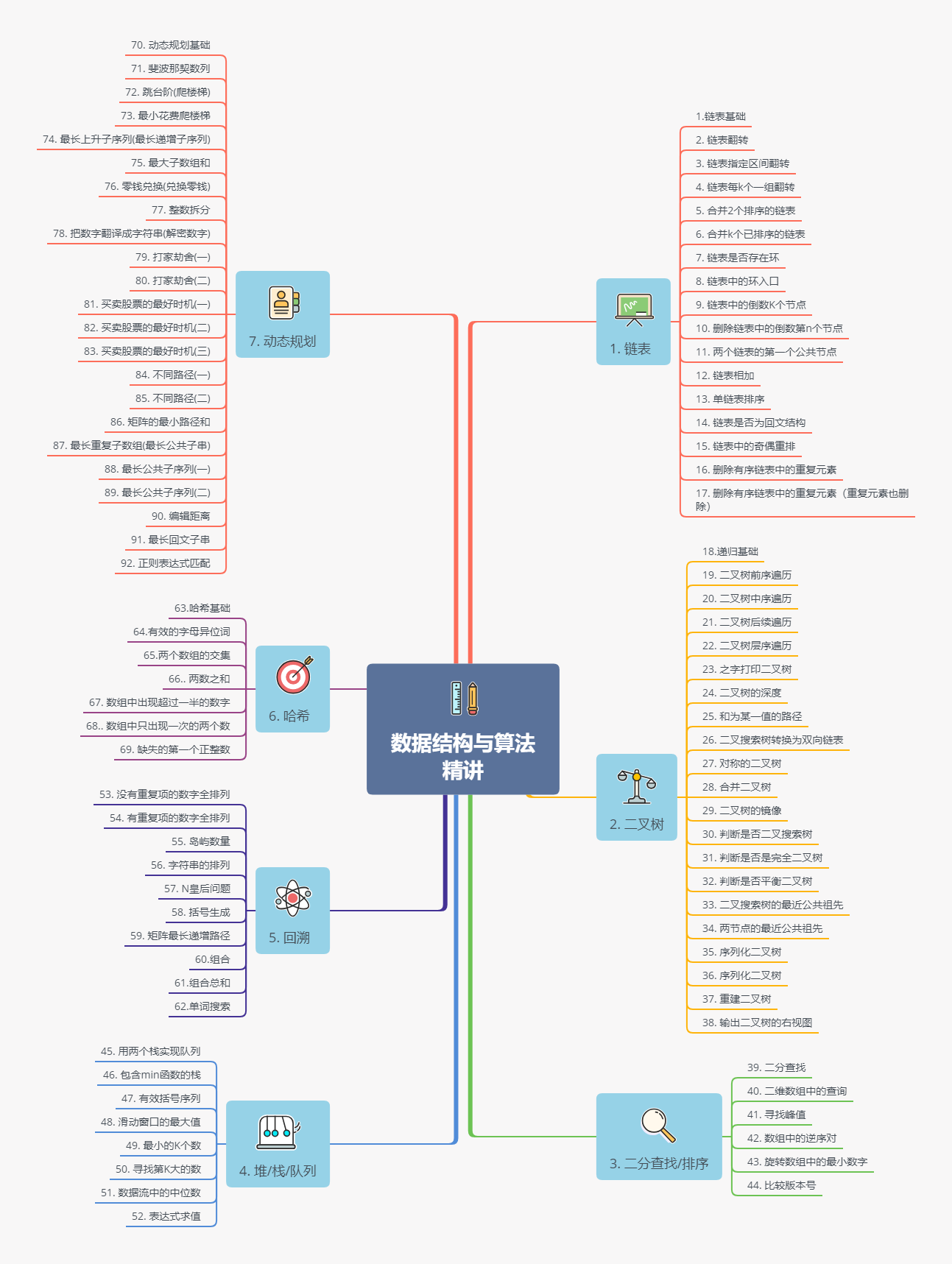

Contents:

NVMe-oF Target startup

Design of the NVMe-oF-specific Discovery Subsystem

NVMe-oF Subsystem creation

NVMe-oF bdev creation

Adding an NVMe-oF bdev to a Subsystem

NVMe-oF Admin/IO/Discovery ctrlr

NVMe-oF Admin/IO Queue

SPDK dev/channel mechanism

NVMe-oF Target startup

Reactor startup

- eal_worker_thread_create creates one worker thread, running eal_thread_loop, for every CPU core except the current one.

- rte_atomic_store_explicit stores reactor_run into lcore_config, then eal_thread_wake_worker writes to the pipe to wake the worker. From then on that reactor keeps running reactor_run.

- reactor_run is called directly to start the main reactor.

Worker thread creation (backtrace):

    #0 eal_worker_thread_create (lcore_id=1) at ../lib/eal/linux/eal.c:921
    #1 0x00005555557bfb8f in rte_eal_init (argc=12, argv=0x555555dce550) at ../lib/eal/linux/eal.c:1250
    #2 0x0000555555645e13 in spdk_env_init (opts=0x7fffffffe020) at init.c:667
    #3 0x00005555556f2bf3 in app_setup_env (opts=0x7fffffffe0f0) at app.c:419
    #4 0x00005555556f3ff5 in spdk_app_start (opts_user=0x7fffffffe3a0, start_fn=0x55555557729a <nvmf_tgt_started>, arg1=0x0) at app.c:791
    #5 0x00005555555773bc in main (argc=2, argv=0x7fffffffe5a8) at nvmf_main.c:47

Worker thread waiting for a command (backtrace):

    #0 eal_thread_wait_command () at ../lib/eal/unix/eal_unix_thread.c:36
    #1 0x00005555557a201f in eal_thread_loop (arg=0x1) at ../lib/eal/common/eal_common_thread.c:189
    #2 0x00005555557befad in eal_worker_thread_loop (arg=0x1) at ../lib/eal/linux/eal.c:916
    #3 0x00007ffff77b2ac3 in start_thread (arg=<optimized out>) at ./nptl/pthread_create.c:442
    #4 0x00007ffff7844850 in clone3 () at ../sysdeps/unix/sysv/linux/x86_64/clone3.S:81

Iterating over the worker lcores and creating their threads:

    /**
     * Macro to browse all running lcores except the main lcore.
     */
    #define RTE_LCORE_FOREACH_WORKER(i) \
        for (i = rte_get_next_lcore(-1, 1, 0); \
             i < RTE_MAX_LCORE; \
             i = rte_get_next_lcore(i, 1, 0))

    if (pthread_create((pthread_t *)&lcore_config[lcore_id].thread_id.opaque_id,
            attrp, eal_worker_thread_loop, (void *)(uintptr_t)lcore_id) == 0)

Worker main loop:

    /* main loop of threads */
    __rte_noreturn uint32_t
    eal_thread_loop(void *arg)
    {
        /* read on our pipe to get commands */
        while (1) {
            eal_thread_wait_command();

            /* Set the state to 'RUNNING'. Use release order
             * since 'state' variable is used as the guard variable.
             */
            rte_atomic_store_explicit(&lcore_config[lcore_id].state, RUNNING,
                rte_memory_order_release);

            eal_thread_ack_command();

            /* Load 'f' with acquire order to ensure that
             * the memory operations from the main thread
             * are accessed only after update to 'f' is visible.
             * Wait till the update to 'f' is visible to the worker.
             */
            while ((f = rte_atomic_load_explicit(&lcore_config[lcore_id].f,
                    rte_memory_order_acquire)) == NULL)
                rte_pause();

            /* ... */
        }
    }

App thread creation:

    /* app thread; note that an spdk thread does not start running until reactor_run is running */
    spdk_cpuset_set_cpu(&tmp_cpumask, spdk_env_get_current_core(), true);

    /* Now that the reactors have been initialized, we can create the app thread. */
    spdk_thread_create("app_thread", &tmp_cpumask);

Waking a worker (backtrace):

    #0 eal_thread_wake_worker (worker_id=2) at ../lib/eal/unix/eal_unix_thread.c:14
    #1 0x0000555555791797 in rte_eal_remote_launch (f=0x5555557bee67 <sync_func>, arg=0x0, worker_id=2) at ../lib/eal/common/eal_common_launch.c:52
    #2 0x0000555555791a6a in rte_eal_mp_remote_launch (f=0x5555557bee67 <sync_func>, arg=0x0, call_main=SKIP_MAIN) at ../lib/eal/common/eal_common_launch.c:79
    #3 0x00005555557bfce9 in rte_eal_init (argc=12, argv=0x555555dce550) at ../lib/eal/linux/eal.c:1269
    #4 0x0000555555645e13 in spdk_env_init (opts=0x7fffffffe020) at init.c:667
    #5 0x00005555556f2bf3 in app_setup_env (opts=0x7fffffffe0f0) at app.c:419
    #6 0x00005555556f3ff5 in spdk_app_start (opts_user=0x7fffffffe3a0, start_fn=0x55555557729a <nvmf_tgt_started>, arg1=0x0) at app.c:791
    #7 0x00005555555773bc in main (argc=2, argv=0x7fffffffe5a8) at nvmf_main.c:47

Launching the reactors:

    /* Send a message to the reactors to start them */
    SPDK_ENV_FOREACH_CORE(i) {
        if (i != current_core) {
            rc = spdk_env_thread_launch_pinned(reactor->lcore, reactor_run, reactor);
        }
        spdk_cpuset_set_cpu(&g_reactor_core_mask, i, true);
    }

    /* Start the main reactor */
    reactor = spdk_reactor_get(current_core);
    reactor_run(reactor);

    spdk_env_thread_wait_all();
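The wake-up handshake described above can be modelled outside DPDK with plain POSIX threads. The following is a minimal sketch, not DPDK's implementation: struct lcore_model, worker_loop, remote_launch, fake_reactor_run and launch_and_join are hypothetical names, and the worker handles a single command instead of looping forever. It only illustrates the ordering: the main thread publishes the function pointer with a release store and writes one byte to a pipe; the worker wakes up, acknowledges, and reads the pointer with an acquire load.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>
#include <unistd.h>

typedef int (*lcore_fn)(void *);

/* Hypothetical, simplified stand-in for one lcore_config entry. */
struct lcore_model {
    int cmd_pipe[2];        /* main -> worker: wake-up byte */
    int ack_pipe[2];        /* worker -> main: acknowledgement byte */
    _Atomic(lcore_fn) f;    /* function the worker should run */
    void *arg;
    int ret;
};

/* Worker side: block on the pipe, acknowledge, then run the published function. */
static void *worker_loop(void *p)
{
    struct lcore_model *lc = p;
    lcore_fn f;
    char c;

    if (read(lc->cmd_pipe[0], &c, 1) != 1) {    /* wait for a command */
        return NULL;
    }
    if (write(lc->ack_pipe[1], &c, 1) != 1) {   /* acknowledge it */
        return NULL;
    }
    /* The acquire load pairs with the release store in remote_launch(). */
    while ((f = atomic_load_explicit(&lc->f, memory_order_acquire)) == NULL) {
        /* spin until the function pointer is visible */
    }
    lc->ret = f(lc->arg);
    return NULL;
}

/* Main side: publish f and arg, wake the worker, wait for the acknowledgement. */
static int remote_launch(struct lcore_model *lc, lcore_fn f, void *arg)
{
    char c = 0;

    assert(lc != NULL && f != NULL);
    lc->arg = arg;
    atomic_store_explicit(&lc->f, f, memory_order_release);
    if (write(lc->cmd_pipe[1], &c, 1) != 1) {
        return -1;
    }
    return read(lc->ack_pipe[0], &c, 1) == 1 ? 0 : -1;
}

/* Stand-in for reactor_run: returns instead of polling forever. */
static int fake_reactor_run(void *arg)
{
    return *(int *)arg + 1;
}

/* Create one worker, launch fake_reactor_run on it, and return its result. */
int launch_and_join(int input)
{
    struct lcore_model lc;
    pthread_t tid;

    lc.arg = NULL;
    lc.ret = -1;
    atomic_init(&lc.f, (lcore_fn)NULL);
    if (pipe(lc.cmd_pipe) != 0 || pipe(lc.ack_pipe) != 0) {
        return -1;
    }
    if (pthread_create(&tid, NULL, worker_loop, &lc) != 0) {
        return -1;
    }
    if (remote_launch(&lc, fake_reactor_run, &input) != 0) {
        return -1;
    }
    pthread_join(tid, NULL);
    close(lc.cmd_pipe[0]);
    close(lc.cmd_pipe[1]);
    close(lc.ack_pipe[0]);
    close(lc.ack_pipe[1]);
    return lc.ret;
}
```

Calling launch_and_join(41) runs fake_reactor_run on the worker thread and returns its result. Build with pthread support enabled (for example the -pthread option of GCC or Clang).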

NVMe-oF Target/PG/SPDK_SUBSYSTEM creation

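NVMe-oF registers an spdk_subsystem whose init callback, nvmf_subsystem_init, is called at startup. It drives a state machine through the following stages:

- NVMF_TGT_INIT_CREATE_TARGET: nvmf_subsystem_init first creates the target, registers it as an io_device with spdk_io_device_register, then adds the Discovery Subsystem to it (nvmf_add_discovery_subsystem) and moves to the next stage.

- NVMF_TGT_INIT_CREATE_POLL_GROUPS: an spdk_thread is created for each CPU core and sent a message to create its nvmf_poll_group (spdk_nvmf_poll_group_create -> spdk_get_io_channel(tgt)). Once every CPU core has finished, the state machine moves to the next stage.

- NVMF_TGT_INIT_START_SUBSYSTEMS: a Subsystem update context is created, and spdk_for_each_channel calls subsystem_state_change_on_pg so that each nvmf_poll_group picks up the Discovery Subsystem (namespace information). The state machine then moves to the next stage.

- NVMF_TGT_RUNNING: Target startup is complete. The remaining spdk subsystems are initialized via spdk_subsystem_init_next(0), after which the state machine exits and initialization is finished. The Target stays in the RUNNING state for as long as the process runs.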

Subsystem registration:

    static struct spdk_subsystem g_spdk_subsystem_nvmf = {
        .name = "nvmf",
        .init = nvmf_subsystem_init,
        .fini = nvmf_subsystem_fini,
        .write_config_json = nvmf_subsystem_write_config_json,
    };

    SPDK_SUBSYSTEM_REGISTER(g_spdk_subsystem_nvmf)
    SPDK_SUBSYSTEM_DEPEND(nvmf, bdev)
    SPDK_SUBSYSTEM_DEPEND(nvmf, sock)

Target creation (backtrace):

    #0 spdk_nvmf_tgt_create (opts=0x7fffffffda30) at nvmf.c:359
    #1 0x000055555564fc9f in nvmf_tgt_create_target () at nvmf_tgt.c:311
    #2 0x000055555565004d in nvmf_tgt_advance_state () at nvmf_tgt.c:418
    #3 0x0000555555650243 in nvmf_subsystem_init () at nvmf_tgt.c:492
    #4 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #5 0x00005555556ff108 in bdev_initialize_complete (cb_arg=0x0, rc=0) at bdev.c:17
    #6 0x0000555555705055 in bdev_init_complete (rc=0) at bdev.c:2033
    #7 0x00005555557050d3 in bdev_module_action_complete () at bdev.c:2075
    #8 0x00005555557055c3 in spdk_bdev_initialize (cb_fn=0x5555556ff0eb <bdev_initialize_complete>, cb_arg=0x0) at bdev.c:2205
    #9 0x00005555556ff127 in bdev_subsystem_initialize () at bdev.c:23
    #10 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #11 0x000055555571e4c6 in accel_subsystem_initialize () at accel.c:20
    #12 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #13 0x0000555555732865 in iobuf_subsystem_initialize () at iobuf.c:22
    #14 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #15 0x000055555572fd80 in sock_subsystem_init () at sock.c:13
    #16 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #17 0x000055555572b0e8 in vmd_subsystem_init () at vmd.c:63
    #18 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #19 0x00005555556f1f71 in scheduler_subsystem_init () at scheduler.c:23
    #20 0x0000555555734943 in spdk_subsystem_init_next (rc=0) at subsystem.c:166
    #21 0x0000555555734ad0 in spdk_subsystem_init (cb_fn=0x5555556f277b <app_subsystem_init_done>, cb_arg=0x0) at subsystem.c:199
    #22 0x00005555556f2922 in app_start_rpc (rc=0, arg1=0x0) at app.c:356
    #23 0x00005555556f3260 in bootstrap_fn (arg1=0x0) at app.c:545

bootstrap_fn is sent to the app thread:

    spdk_thread_send_msg(spdk_thread_get_app_thread(), bootstrap_fn, NULL);

Target states:

    enum nvmf_tgt_state {
        NVMF_TGT_INIT_NONE = 0,
        NVMF_TGT_INIT_CREATE_TARGET,
        NVMF_TGT_INIT_CREATE_POLL_GROUPS,
        NVMF_TGT_INIT_START_SUBSYSTEMS,
        NVMF_TGT_RUNNING,
        NVMF_TGT_FINI_STOP_SUBSYSTEMS,
        NVMF_TGT_FINI_DESTROY_SUBSYSTEMS,
        NVMF_TGT_FINI_DESTROY_POLL_GROUPS,
        NVMF_TGT_FINI_DESTROY_TARGET,
        NVMF_TGT_STOPPED,
        NVMF_TGT_ERROR,
    };

One poll group thread per core:

    SPDK_ENV_FOREACH_CORE(cpu) {
        if (g_poll_groups_mask && !spdk_cpuset_get_cpu(g_poll_groups_mask, cpu)) {
            continue;
        }
        snprintf(thread_name, sizeof(thread_name), "nvmf_tgt_poll_group_%u", count++);

        thread = spdk_thread_create(thread_name, g_poll_groups_mask);
        assert(thread != NULL);

        spdk_thread_send_msg(thread, nvmf_tgt_create_poll_group, NULL);
    }

The target is registered as an io_device, so each poll group is that target's io_channel context on its thread:

    spdk_io_device_register(tgt,
                nvmf_tgt_create_poll_group,
                nvmf_tgt_destroy_poll_group,
                sizeof(struct spdk_nvmf_poll_group),
                tgt->name);

    struct spdk_nvmf_poll_group *
    spdk_nvmf_poll_group_create(struct spdk_nvmf_tgt *tgt)
    {
        struct spdk_io_channel *ch;

        ch = spdk_get_io_channel(tgt);
        if (!ch) {
            SPDK_ERRLOG("Unable to get I/O channel for target\n");
            return NULL;
        }

        return spdk_io_channel_get_ctx(ch);
    }

Advancing once every poll group exists:

    if (++g_num_poll_groups == nvmf_get_cpuset_count()) {
        if (g_tgt_state != NVMF_TGT_ERROR) {
            g_tgt_state = NVMF_TGT_INIT_START_SUBSYSTEMS;
        }
        nvmf_tgt_advance_state();
    }

Propagating a subsystem state change to every poll group:

    spdk_for_each_channel(subsystem->tgt,
                  subsystem_state_change_on_pg,
                  ctx,
                  subsystem_state_change_done);
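The startup state machine can be summarised with a small standalone model. This is a sketch under simplifying assumptions, not SPDK code: struct tgt_model, advance_state, poll_group_created and run_startup are hypothetical names, the per-core messages are replaced by direct function calls, and the subsystem start stage is treated as synchronous. It shows the key pattern: the loop keeps advancing while the state changes, parks in the poll-group stage, and is resumed by the last poll group to report in.

```c
#include <assert.h>
#include <stddef.h>

/* Reduced copy of the init-side states; names shortened for the model. */
enum tgt_state {
    INIT_NONE = 0,
    INIT_CREATE_TARGET,
    INIT_CREATE_POLL_GROUPS,
    INIT_START_SUBSYSTEMS,
    RUNNING,
    ERROR,
};

/* Hypothetical model of the globals used by the state machine. */
struct tgt_model {
    enum tgt_state state;
    unsigned num_cores;        /* poll groups expected */
    unsigned num_poll_groups;  /* poll groups created so far */
    unsigned init_done;        /* times the RUNNING stage completed init */
};

/* Run stages back to back until one of them leaves the state unchanged. */
static void advance_state(struct tgt_model *t)
{
    enum tgt_state prev;

    assert(t != NULL);
    do {
        prev = t->state;
        switch (t->state) {
        case INIT_NONE:
            t->state = INIT_CREATE_TARGET;
            break;
        case INIT_CREATE_TARGET:
            /* Real code: create target, register io_device, add discovery subsystem. */
            t->state = INIT_CREATE_POLL_GROUPS;
            break;
        case INIT_CREATE_POLL_GROUPS:
            /* Real code: send one message per core, then wait. State stays put. */
            break;
        case INIT_START_SUBSYSTEMS:
            /* Asynchronous in the real target; modelled as immediate here. */
            t->state = RUNNING;
            break;
        case RUNNING:
            t->init_done++;    /* stands in for spdk_subsystem_init_next(0) */
            break;
        default:
            break;
        }
    } while (t->state != prev);
}

/* Completion callback of one poll group; the last one resumes the machine. */
static void poll_group_created(struct tgt_model *t)
{
    if (++t->num_poll_groups == t->num_cores) {
        if (t->state != ERROR) {
            t->state = INIT_START_SUBSYSTEMS;
        }
        advance_state(t);
    }
}

/* Drive a full startup; returns the poll group count, or -1 if not RUNNING. */
int run_startup(unsigned num_cores)
{
    struct tgt_model t = { INIT_NONE, num_cores, 0, 0 };
    unsigned i;

    advance_state(&t);    /* parks in INIT_CREATE_POLL_GROUPS */
    if (t.state != INIT_CREATE_POLL_GROUPS) {
        return -1;
    }
    for (i = 0; i < num_cores; i++) {
        poll_group_created(&t);    /* simulated per-core message */
    }
    return (t.state == RUNNING && t.init_done == 1) ? (int)t.num_poll_groups : -1;
}
```

With four cores, run_startup(4) only reaches RUNNING after the fourth poll_group_created call, which mirrors the ++g_num_poll_groups check above.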

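Design of the NVMe-oF-specific Discovery Subsystem

Function: a client runs nvme discover with the transport information to discover the NQNs of all Subsystems on the Target that are bound to that transport, for later use with nvme connect.

Design: the NVMe spec calls this a Discovery Ctrlr. It looks as if it were contained in a Subsystem, yet its function is not tied to any particular Subsystem. Instead it manages the NQN <-> transport ID (trid) mapping of all Subsystems, and like a Subsystem it has a fixed NQN. SPDK therefore implements it as a Subsystem.

Usage: when a client runs nvme discover -t rdma -a 192.168.2.30 -s 4420, the Subsystem is always nqn.2014-08.org.nvmexpress.discovery, which the Target creates by default. It matches subsystems by trid and returns the matching Subsystem NQNs and other information in the Discovery log page.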
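The trid matching described above can be illustrated with a small model. This is a sketch, not SPDK's discovery code: struct trid_model, struct subsystem_model, trid_equal and build_discovery_log are hypothetical names, each subsystem has a single listener, and the comparison is an exact string match, which is a simplification of whatever rules the real target applies.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, reduced transport ID: type, address, service id (port). */
struct trid_model {
    const char *trtype;
    const char *traddr;
    const char *trsvcid;
};

/* Hypothetical subsystem with a single listener. */
struct subsystem_model {
    const char *nqn;
    struct trid_model listener;
};

/* Exact-match comparison of two transport IDs (simplifying assumption). */
static int trid_equal(const struct trid_model *a, const struct trid_model *b)
{
    return strcmp(a->trtype, b->trtype) == 0 &&
           strcmp(a->traddr, b->traddr) == 0 &&
           strcmp(a->trsvcid, b->trsvcid) == 0;
}

/*
 * Collect the NQNs of all subsystems listening on the trid the host used
 * for discovery. Returns the number of entries written to out_nqns.
 */
size_t build_discovery_log(const struct subsystem_model *subs, size_t n_subs,
                           const struct trid_model *host_trid,
                           const char **out_nqns, size_t out_cap)
{
    size_t found = 0;
    size_t i;

    assert(subs != NULL && host_trid != NULL && out_nqns != NULL);
    for (i = 0; i < n_subs && found < out_cap; i++) {
        if (trid_equal(&subs[i].listener, host_trid)) {
            out_nqns[found++] = subs[i].nqn;
        }
    }
    return found;
}
```

In this model a host that discovers over rdma at 192.168.2.30:4420 only receives the NQNs of subsystems with a listener on exactly that trid. A subsystem reachable only over another transport or address is left out of the log.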

SPEC/NVMe-cli:

A Discovery controller (refer to section 3.1.3.3) is a controller used in NVMe over Fabrics to provide access to a Discovery Log Page.

The NVMe-over-Fabrics specification defines the concept of a Discovery Controller that an NVMe Host can query on a fabric network to discover NVMe subsystems contained in NVMe Targets which it can connect to on the network. The Discovery Controller will return Discovery Log Pages that provide the NVMe Host with specific information (such as network address and unique subsystem NQN) the NVMe Host can use to issue an NVMe connect command to connect itself to a storage resource contained in that NVMe subsystem on the NVMe Target.

A Discovery Controller has its own NQN defined in the NVMe-over-Fabrics specification, nqn.2014-08.org.nvmexpress.discovery. All Discovery Controllers must use this NQN name. This NQN is used by default by nvme-cli for the discover command.

scripts/rpc.py nvmf_create_transport -t RDMA -u 8192 -i 131072 -c 8192

scripts/rpc.py nvmf_create_subsystem nqn.2016-06.io.spdk:cnode1 -a -s SPDK00000000000001 -d SPDK_Controller1

scripts/rpc.py bdev_malloc_create -b Malloc0 512 512

scripts/rpc.py nvmf_subsystem_add_ns nqn.2016-06.io.spdk:cnode1 Malloc0