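HDFS Configuration and Usage

- I. HDFS Configuration

- II. HDFS Shell

  - 1. Default configuration

  - 2. Shell commands

- III. Reading and Writing HDFS from Java

  - 1. Java project configuration

  - 2. Testing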

I. HDFS Configuration

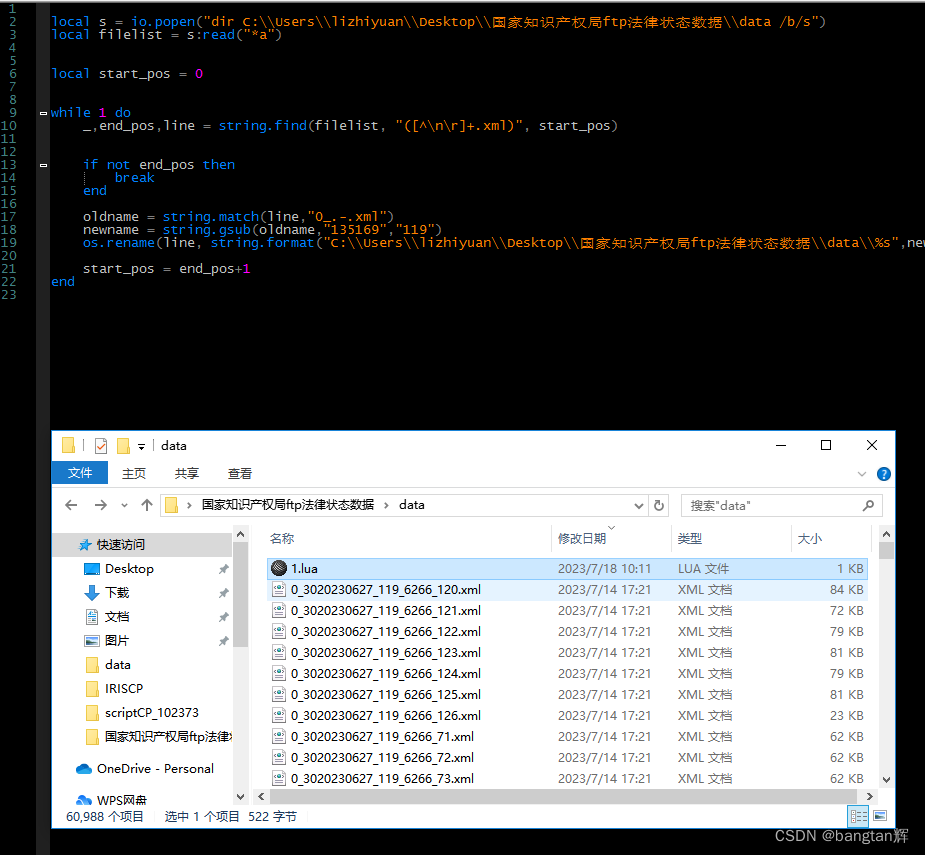

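## Continuing from the previous article, switch to the configuration directory under HADOOP_HOME

cd $HADOOP_HOME/etc/hadoop

## Change the owner of the installation files to root

chown -R root:root /usr/local/hadoop/hadoop-3.3.6/*

## Edit the configuration files core-site.xml and hdfs-site.xml

vim core-site.xml

vim hdfs-site.xml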

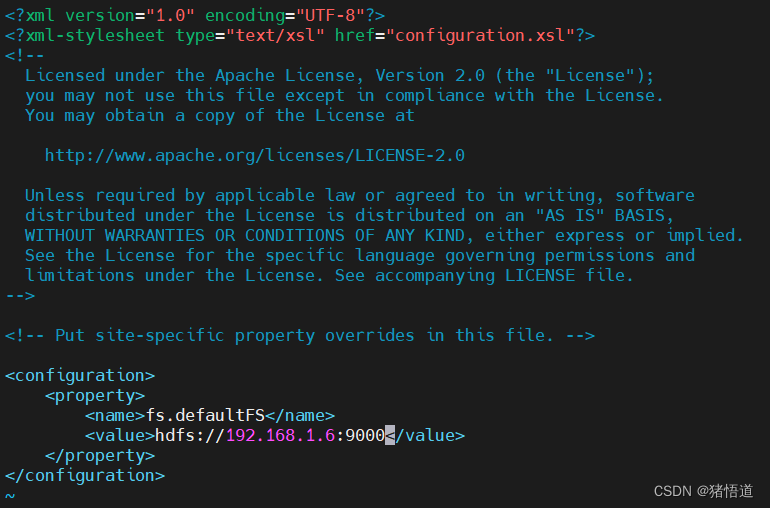

Add the following properties inside the <configuration> element of core-site.xml; 9000 is the NameNode RPC port.

<property>
    <name>hadoop.http.staticuser.user</name>
    <value>root</value>
</property>

<property>
    <name>fs.defaultFS</name>
    <value>hdfs://<your-IP>:9000</value>
</property>
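The value of fs.defaultFS is an ordinary URI: the scheme selects the file system, and the host and port point at the NameNode RPC endpoint. As a small illustration that uses only the JDK, the parts can be pulled apart as below. The class name `DefaultFsUri` is hypothetical, and the address 192.168.1.6 is simply the example IP used in this article; substitute your own.

```java
import java.net.URI;

/** Illustrative helper, not part of Hadoop: splits an fs.defaultFS value into its parts. */
final class DefaultFsUri {

    /** Returns "scheme host port" for a value such as hdfs://192.168.1.6:9000. */
    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        // getPort() returns -1 when the URI carries no explicit port.
        return uri.getScheme() + " " + uri.getHost() + " " + uri.getPort();
    }

    public static void main(String[] args) {
        // Example address from this article; replace it with your own NameNode IP.
        System.out.println(describe("hdfs://192.168.1.6:9000"));
    }
}
```

Running it prints `hdfs 192.168.1.6 9000`, which is the same address a client later has to use to connect.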

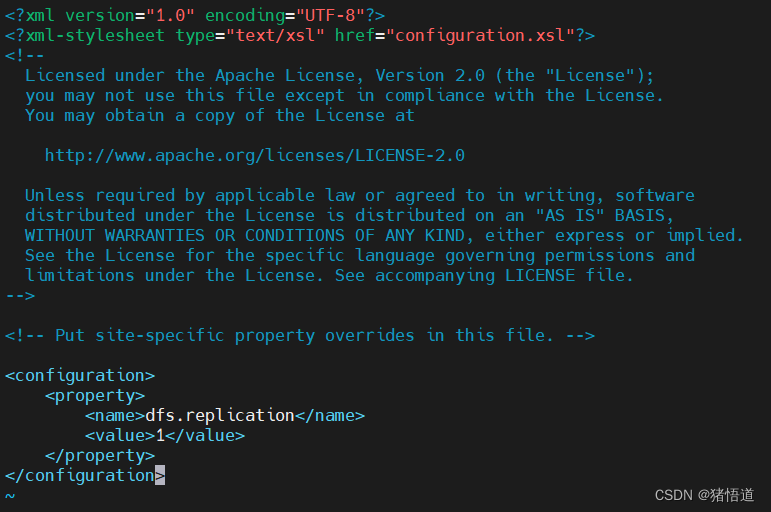

Add the following property to hdfs-site.xml; it sets the replication factor to 1.

<property><name>dfs.replication</name><value>1</value>

</property>

## Format the file system (NameNode)

hdfs namenode -format

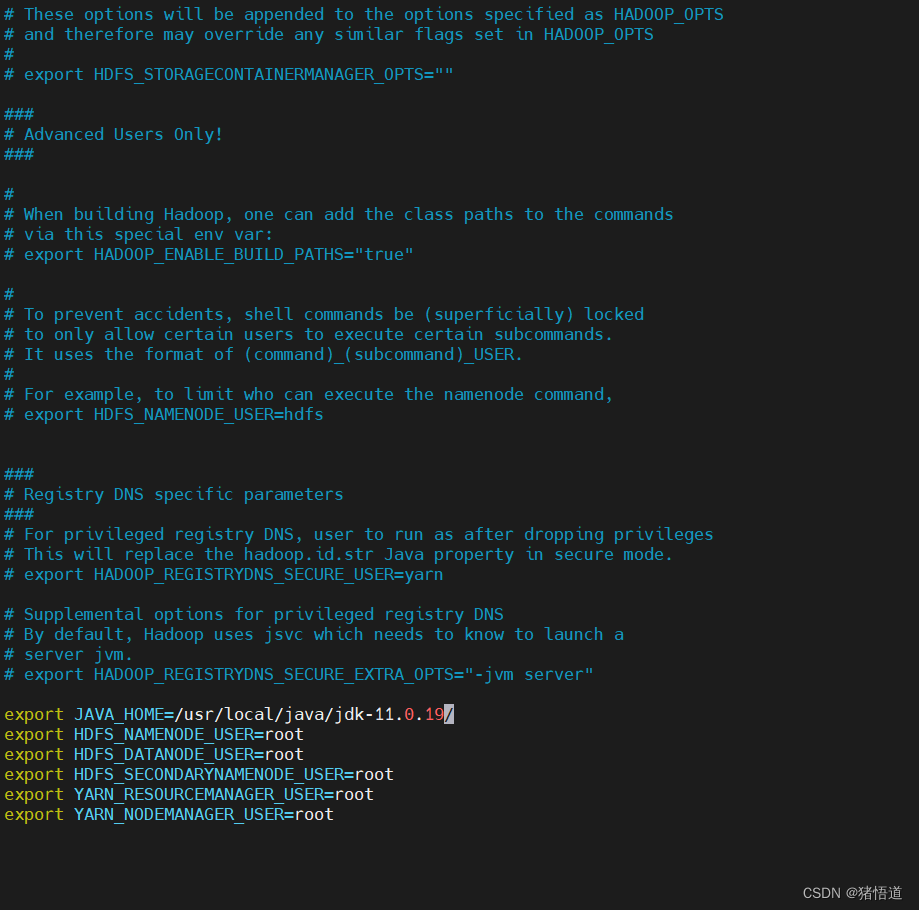

## Edit the environment settings and add the following lines

vim hadoop-env.sh

export JAVA_HOME=/usr/local/java/jdk-11.0.19/

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

## Stop and disable the firewall

systemctl stop firewalld

systemctl disable firewalld

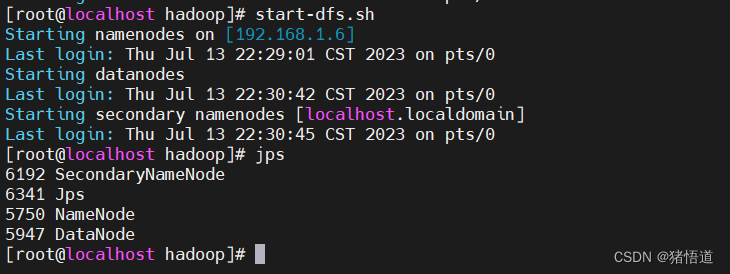

## Start HDFS

start-dfs.sh

## List the running Java processes

jps

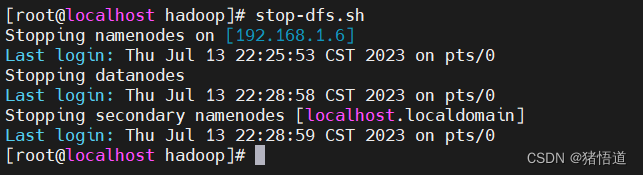

## Stop HDFS

stop-dfs.sh

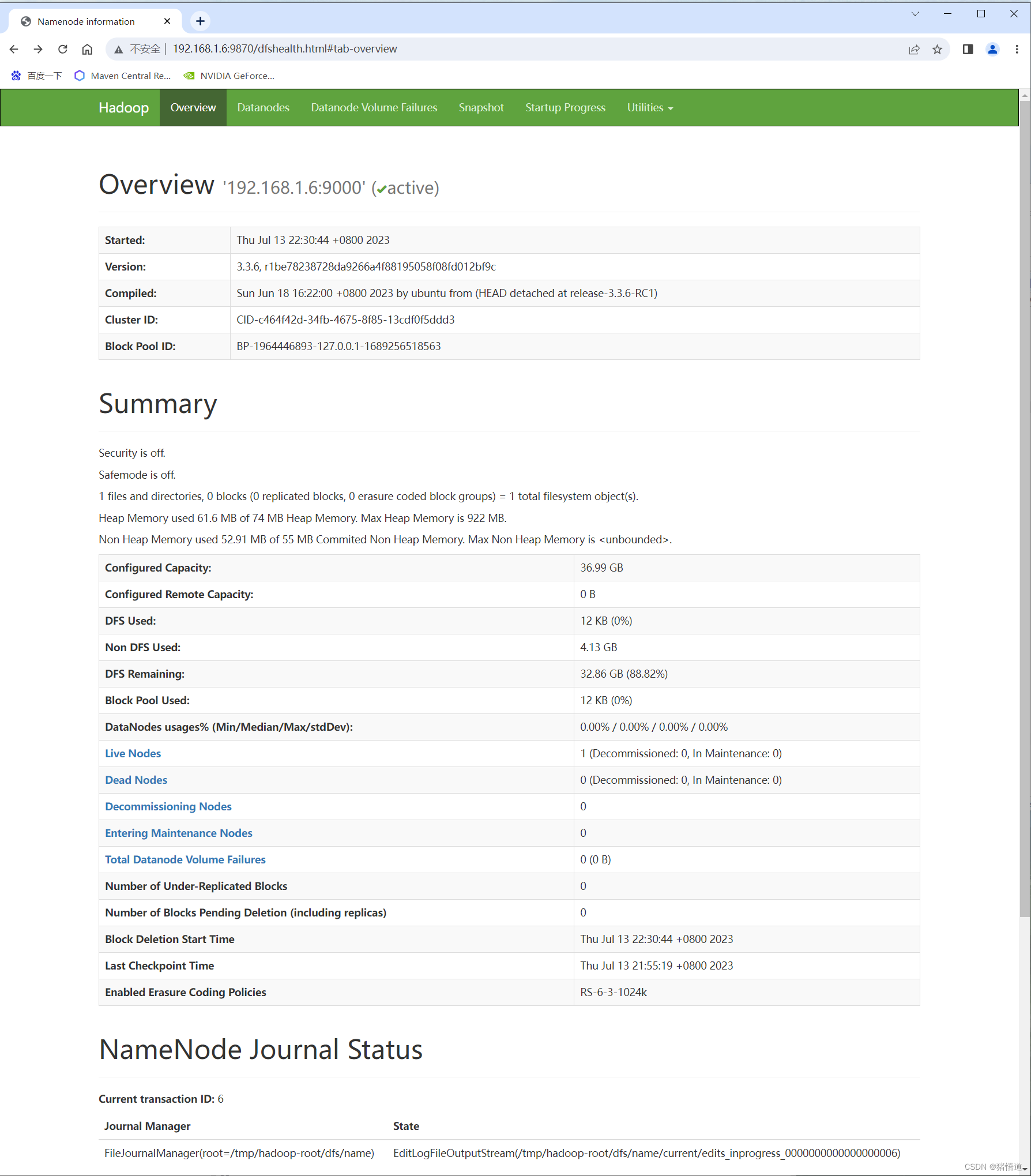

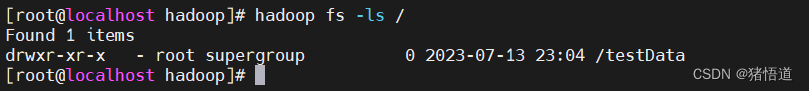

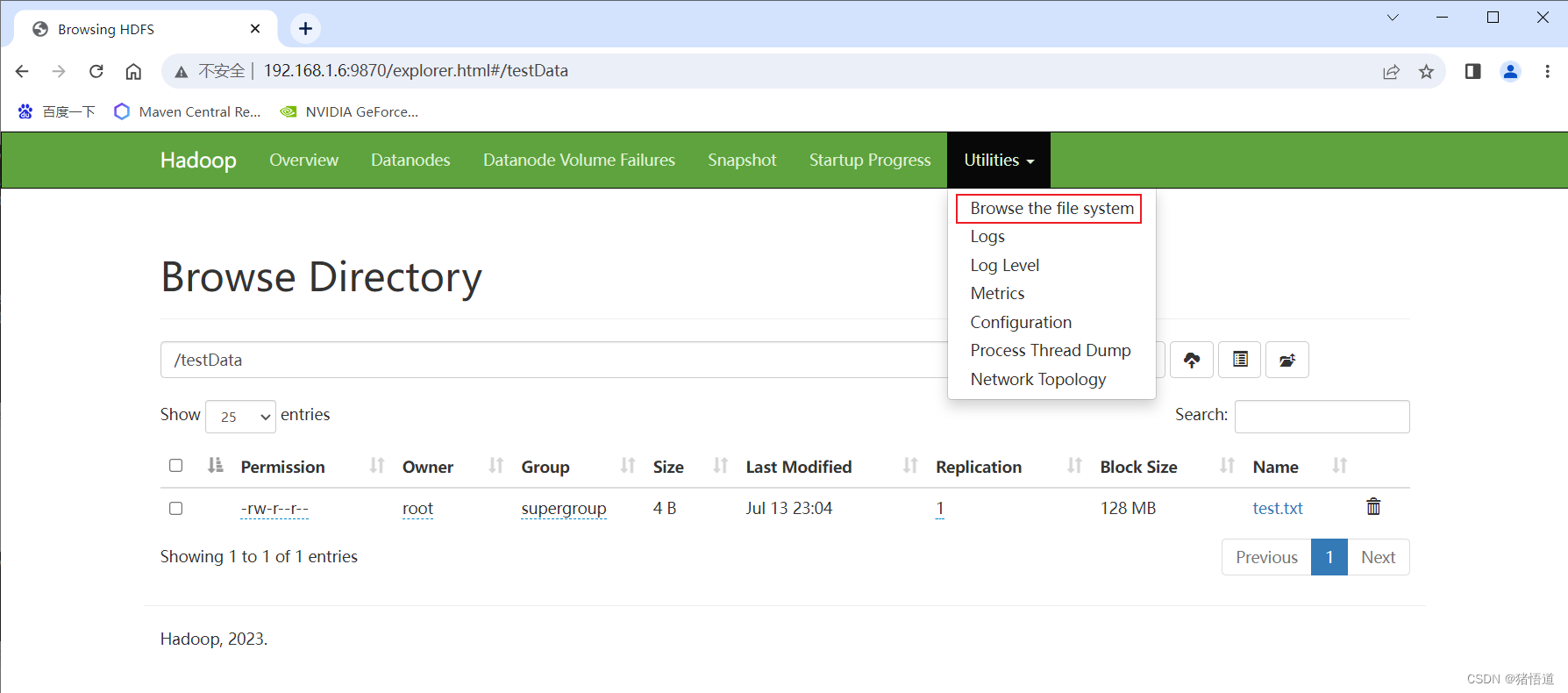

Open the built-in HDFS web UI (HDFS must be running): http://192.168.1.6:9870/

II. HDFS Shell

1. Default configuration

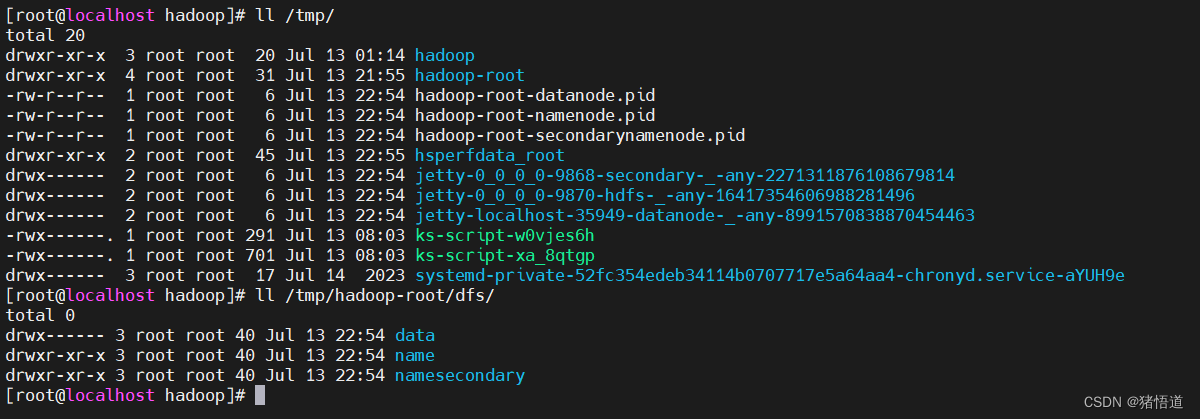

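dfs.name.dir and dfs.data.dir are set in hdfs-site.xml. (In Hadoop 3 these are deprecated names for dfs.namenode.name.dir and dfs.datanode.data.dir.)

The default value of dfs.name.dir is ${hadoop.tmp.dir}/dfs/name

The default value of dfs.data.dir is ${hadoop.tmp.dir}/dfs/data

If hadoop.tmp.dir is not set with the -D option or in a configuration file, it defaults to /tmp/hadoop-${user.name}.

The default values can be looked up in the following files:

core-default.xml, hdfs-default.xml, and mapred-default.xml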
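To make the defaults above concrete, here is a minimal sketch of how the ${...} references resolve to real paths. It is a plain-JDK illustration of the substitution idea, not Hadoop's own implementation; the class `DefaultDirDemo` and its `expand` method are hypothetical, and the user name root is assumed because the cluster in this article runs as root.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustration only: expands ${name} references the way the default values above are written. */
final class DefaultDirDemo {

    private static final Pattern VAR = Pattern.compile("\\$\\{([^}]+)\\}");

    /** Replaces ${name} references using props; unknown names are left untouched. */
    static String expand(String value, Map<String, String> props) {
        String current = value;
        // Repeat so that values which themselves contain ${...} are resolved too (bounded to avoid cycles).
        for (int pass = 0; pass < 20; pass++) {
            Matcher m = VAR.matcher(current);
            StringBuilder sb = new StringBuilder();
            boolean changed = false;
            while (m.find()) {
                String replacement = props.get(m.group(1));
                if (replacement != null) {
                    changed = true;
                } else {
                    replacement = m.group(0); // keep the unresolved reference as-is
                }
                m.appendReplacement(sb, Matcher.quoteReplacement(replacement));
            }
            m.appendTail(sb);
            current = sb.toString();
            if (!changed) {
                break;
            }
        }
        return current;
    }

    public static void main(String[] args) {
        // Default values as described in the text; user.name is assumed to be root.
        Map<String, String> props = Map.of(
                "hadoop.tmp.dir", "/tmp/hadoop-${user.name}",
                "user.name", "root");
        System.out.println(expand("${hadoop.tmp.dir}/dfs/name", props));
        System.out.println(expand("${hadoop.tmp.dir}/dfs/data", props));
    }
}
```

With these values the two lines printed are /tmp/hadoop-root/dfs/name and /tmp/hadoop-root/dfs/data, which is where the NameNode and DataNode data end up when nothing is overridden.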

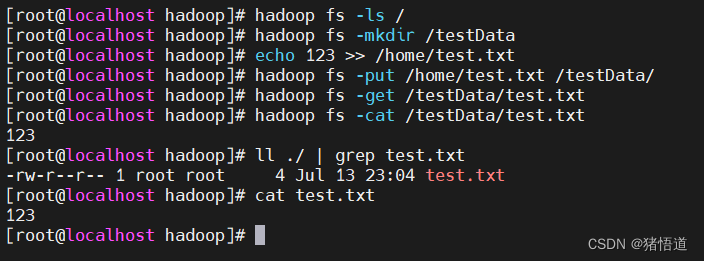

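2. Shell commands

# List the files and subdirectories under the root directory / (absolute path)

hadoop fs -ls /

# Create a directory (absolute path)

hadoop fs -mkdir /testData

# Create a local file

echo 123 >> /home/test.txt

# Upload the file

hadoop fs -put /home/test.txt /testData/

# Download the file (into the current local directory)

hadoop fs -get /testData/test.txt

# Print the file contents

hadoop fs -cat /testData/test.txt

List the directory: hadoop fs -ls /

View the files in the web UI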

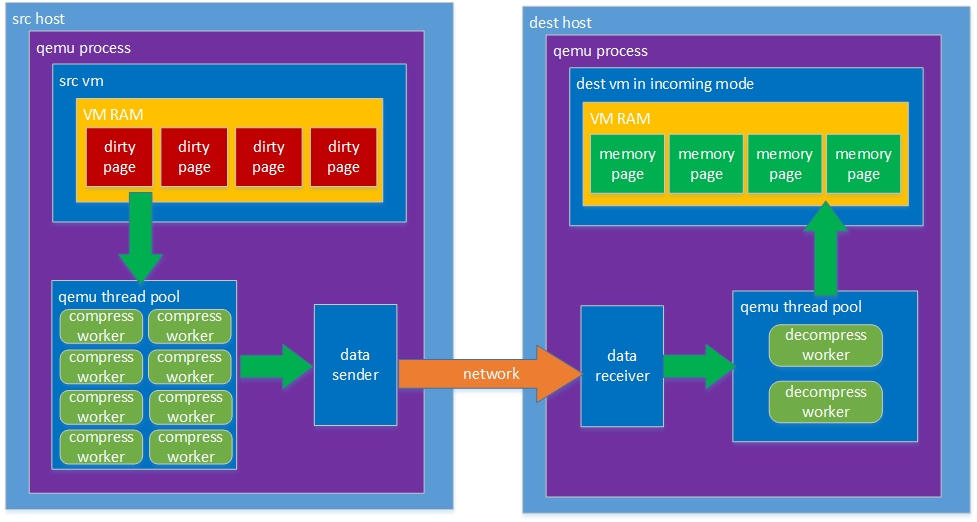

III. Reading and Writing HDFS from Java

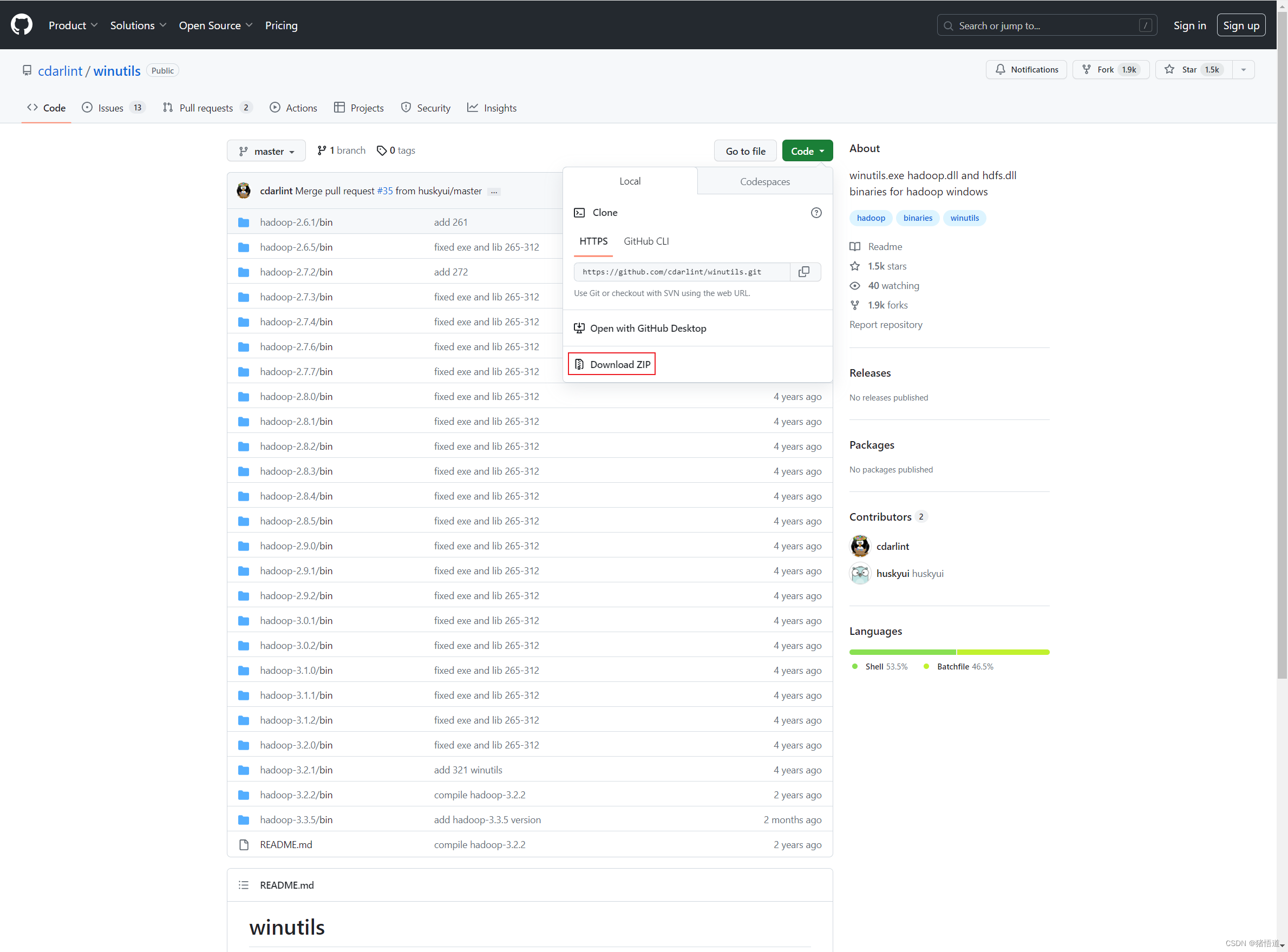

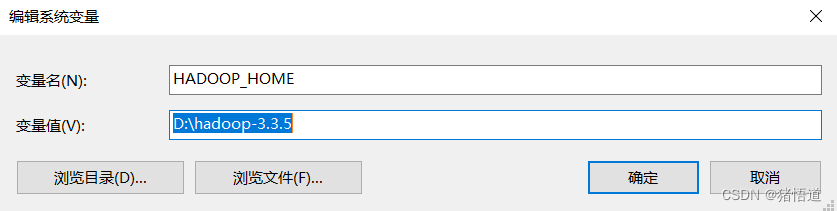

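When code that uses Hadoop is run from IDEA on a local Windows machine, the console prints the error "HADOOP_HOME and hadoop.home.dir are unset". To fix it, configure the Hadoop environment variables on the local Windows system, then restart IDEA.

Download the package from GitHub

Extract it, take the folder for the matching version, and put it in some directory, for example: D:\hadoop-3.3.5\bin

Add the environment variable HADOOP_HOME (its value should be the directory that contains bin, D:\hadoop-3.3.5 in this example)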

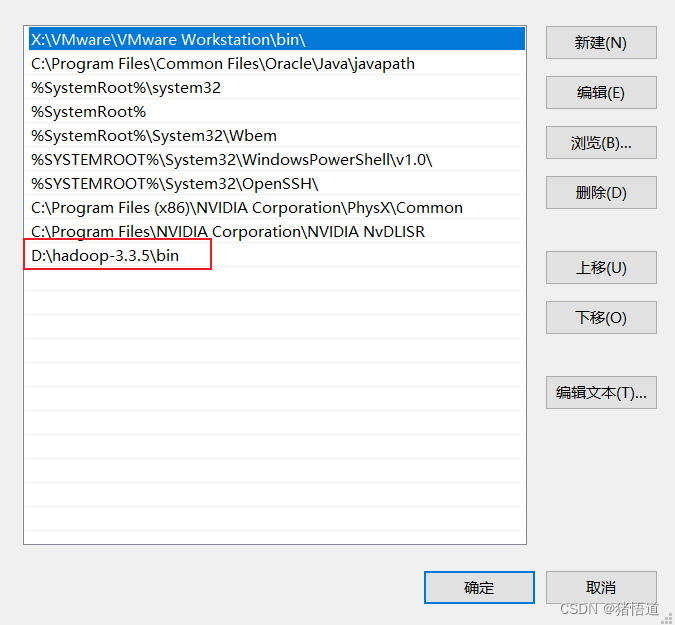

Update PATH (typically by adding %HADOOP_HOME%\bin)
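Before starting the application it can help to check what the JVM actually sees, since IDEA only picks up new environment variables after a restart. The sketch below uses only the JDK; the class `HadoopHomeCheck` is hypothetical. It assumes the lookup order is the hadoop.home.dir system property first and the HADOOP_HOME environment variable second, and that the downloaded package provides bin\winutils.exe.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;

/** Diagnostic sketch (not part of Hadoop): reports which Hadoop home the JVM can see. */
final class HadoopHomeCheck {

    /** Picks the system property if set, otherwise the environment variable. */
    static Optional<String> resolve(String sysProp, String envVar) {
        if (sysProp != null && !sysProp.isBlank()) {
            return Optional.of(sysProp);
        }
        if (envVar != null && !envVar.isBlank()) {
            return Optional.of(envVar);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<String> home = resolve(
                System.getProperty("hadoop.home.dir"),
                System.getenv("HADOOP_HOME"));
        if (home.isEmpty()) {
            System.out.println("HADOOP_HOME and hadoop.home.dir are unset");
            return;
        }
        // Assumption: on Windows the helper binary is expected at <home>\bin\winutils.exe.
        Path winutils = Path.of(home.get(), "bin", "winutils.exe");
        System.out.println("Hadoop home: " + home.get());
        System.out.println("winutils.exe present: " + Files.exists(winutils));
    }
}
```

If this prints the "unset" message from inside IDEA even though the variable is defined in Windows, the IDE most likely has not been restarted yet.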

1. Java project configuration

XML configuration (pom.xml)

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>HadoopDemo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>19</maven.compiler.source>
        <maven.compiler.target>19</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <spring.version>3.1.1</spring.version>
        <hadoop.version>3.3.6</hadoop.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
            <version>${spring.version}</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-log4j2</artifactId>
            <version>${spring.version}</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-aop</artifactId>
            <version>${spring.version}</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.26</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>2.0.32</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
    </dependencies>
</project>

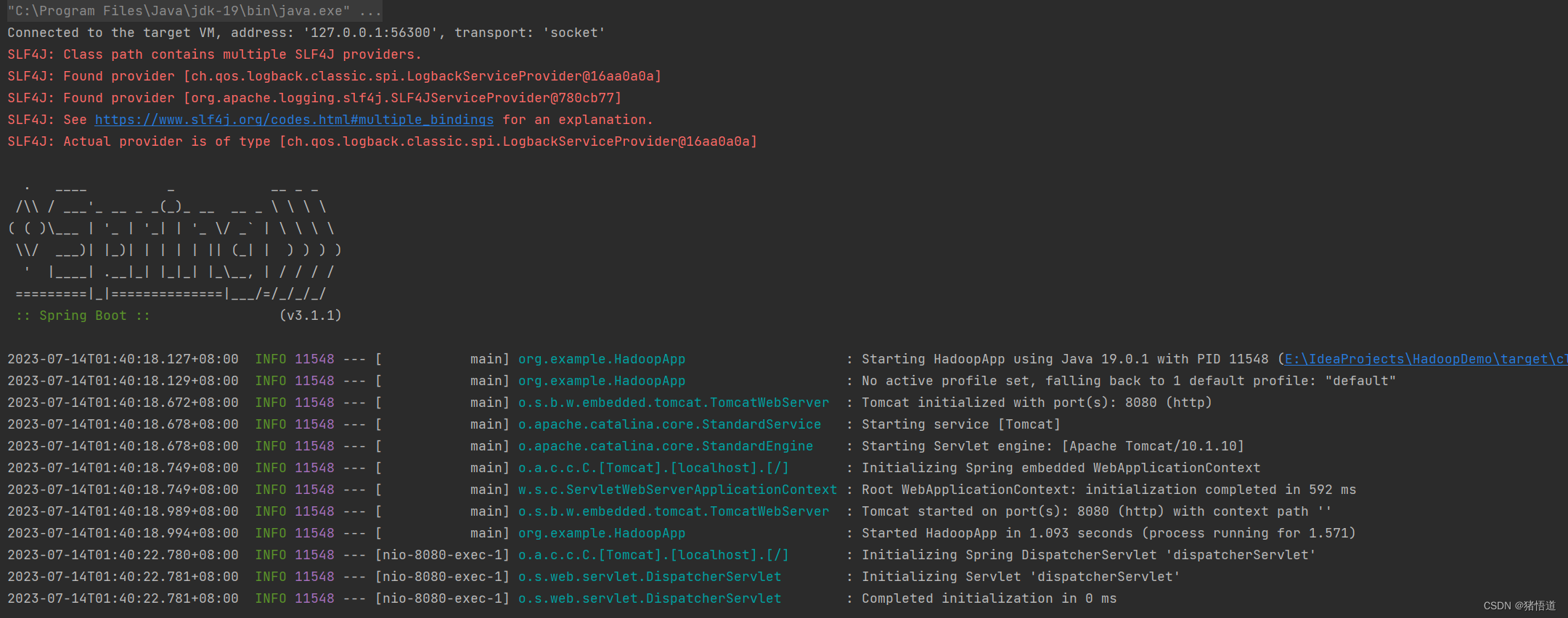

Application entry class

package org.example;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

/**
 * @author Administrator
 */
@SpringBootApplication
public class HadoopApp {

    public static void main(String[] args) {
        SpringApplication.run(HadoopApp.class, args);
    }
}

读写类

package org.example.controller;import jakarta.annotation.PostConstruct;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;import java.io.IOException;/*** @author Administrator* @Description* @create 2023-07-13 23:19*/

@RestController

@RequestMapping("/test")

public class TestController {@PostConstructpublic void init(){System.setProperty("HADOOP_USER_NAME","root");}@GetMapping("/get")public String get(){String result = "";try {// 配置连接地址Configuration conf = new Configuration();conf.set("fs.defaultFS", "hdfs://192.168.1.6:9000");FileSystem fs = FileSystem.get(conf);// 打开文件并读取输出Path path = new Path("/testData/test.txt");FSDataInputStream ins = fs.open(path);StringBuilder builder = new StringBuilder();int ch = ins.read();while (ch != -1) {builder.append((char)ch);ch = ins.read();}result = builder.toString();} catch (IOException ioe) {ioe.printStackTrace();}return result;}@GetMapping("/set")public void set() {FileSystem fs = null;FSDataOutputStream ws = null;try {// 配置连接地址Configuration conf = new Configuration();conf.set("fs.defaultFS", "hdfs://192.168.1.6:9000");fs = FileSystem.get(conf);// 打开文件并读取输出Path path = new Path("/testData/test.txt");ws = fs.append(path);ws.writeBytes("Hello World!");} catch (IOException ioe) {ioe.printStackTrace();} finally {if (null != fs){try {fs.close();} catch (IOException e) {throw new RuntimeException(e);}}if (null != ws){try {ws.close();} catch (IOException e) {throw new RuntimeException(e);}}}}

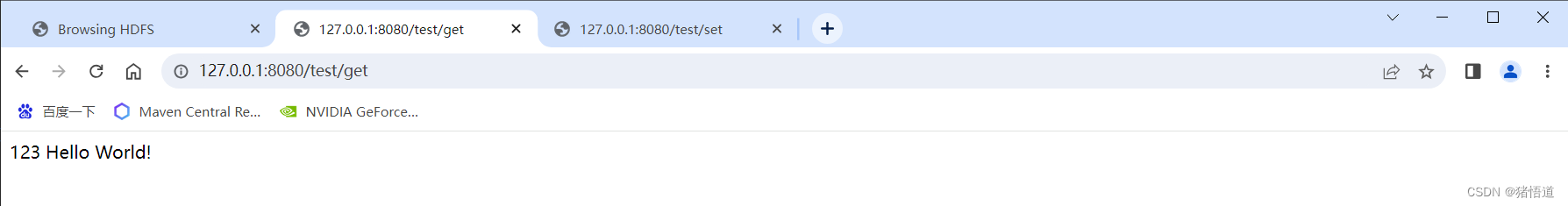

}2.测试

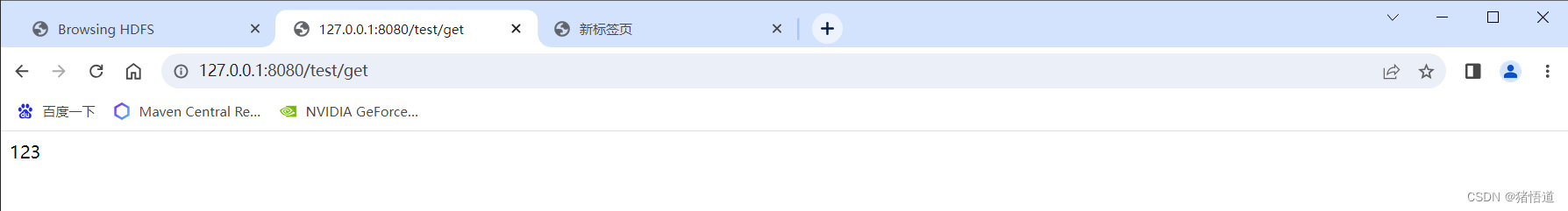

读数据

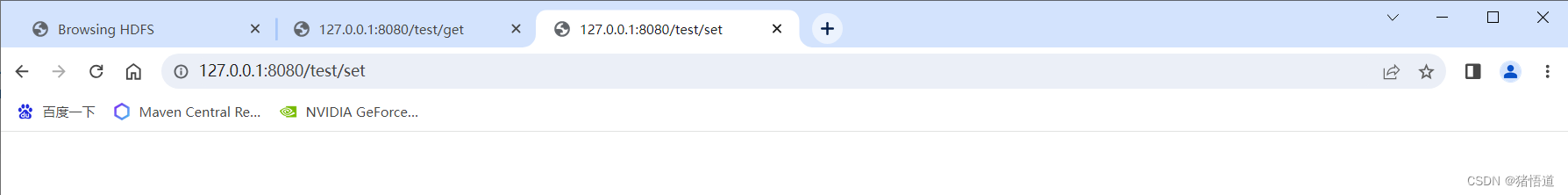

写数据

读数据

Windows 下 JDK 版本为 19
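The read, write, read sequence above can also be driven from code instead of a browser. The following is a minimal sketch using the JDK's built-in HTTP client. The class `HdfsEndpointCheck` is hypothetical, and the base URL http://localhost:8080 is an assumption (Spring Boot's default port; the article does not state which port the application uses), so pass your own base URL as the first argument if it differs.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Sketch of a manual check for the /test/get and /test/set endpoints. */
final class HdfsEndpointCheck {

    /** Builds a GET request for baseUrl + path. */
    static HttpRequest request(String baseUrl, String path) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path)).GET().build();
    }

    /** Sends the request and returns "status body". */
    static String call(HttpClient client, String baseUrl, String path)
            throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(request(baseUrl, path), HttpResponse.BodyHandlers.ofString());
        return response.statusCode() + " " + response.body();
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumed default; override with the first command-line argument.
        String baseUrl = args.length > 0 ? args[0] : "http://localhost:8080";
        HttpClient client = HttpClient.newHttpClient();
        try {
            System.out.println("read : " + call(client, baseUrl, "/test/get"));
            System.out.println("write: " + call(client, baseUrl, "/test/set"));
            System.out.println("read : " + call(client, baseUrl, "/test/get"));
        } catch (IOException e) {
            System.out.println("Could not reach " + baseUrl + ": " + e);
        }
    }
}
```

If the append succeeded, the second read should return the earlier file contents followed by the text written by /test/set.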