HTSK Model Study

- Abstract

- Introduction

- II TSK for high-dimensional datasets

- III Results

- A Datasets

- B Algorithm

- C Performance evaluation

Abstract

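The TSK fuzzy system with Gaussian membership functions (MFs) often performs poorly on high-dimensional datasets. After rewriting its defuzzification as an equivalent softmax function, the author finds that the performance drop is caused by saturation of the softmax. To avoid this saturation, the paper studies two defuzzification operations, LogTSK and HTSK, the latter being proposed for the first time in this paper.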

Introduction

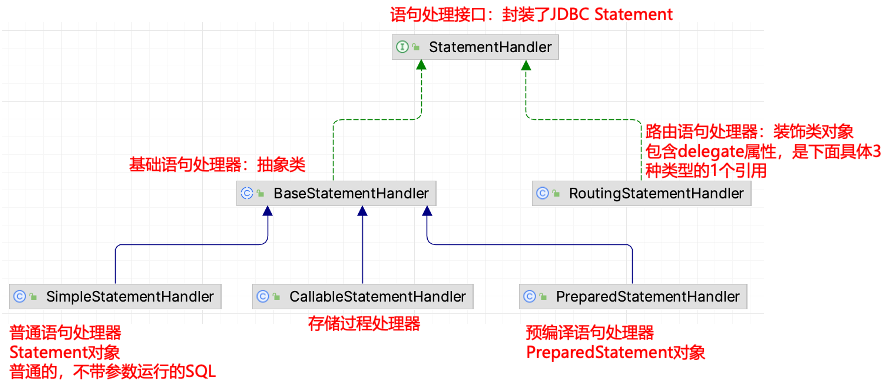

TSK fuzzy systems (also viewed as TSK fuzzy neural networks) have achieved many successes in machine learning for classification and regression, and are therefore widely used. However, their parameters are difficult to determine: approaches such as fuzzy clustering and evolutionary algorithms have a high computational cost. This paper therefore uses mini-batch gradient descent (MBGD) to optimize the TSK fuzzy system. The curse of dimensionality still persists and affects all machine learning models: with high-dimensional inputs, the distances between samples tend to become similar to one another, which disturbs the partition of the input space into fuzzy sets. Previous work mainly relies on preprocessing, such as PCA and other dimensionality reduction methods, or on neural networks. Some researchers have also tried to select the fuzzy sets used in each rule, but few methods train TSK models directly on high-dimensional datasets. Experiments show that in Gaussian-MF-based TSK systems the standard deviation of the MFs has a significant impact on performance; the author explains why the standard deviation, and the number of input dimensions, influence the results.
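
The distance-concentration effect mentioned above can be illustrated with a small experiment of our own (not from the paper): draw random points uniformly in [0, 1]^D and measure the relative contrast (d_max - d_min) / d_min from one random query point. The function name `relative_contrast`, the uniform distribution, and the 200-point sample size are all illustrative assumptions.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Return (d_max - d_min) / d_min, where the d's are Euclidean distances
    from one random query point to n_points random points, all drawn
    uniformly from the unit hypercube [0, 1]^dim (illustrative setup)."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))  # Euclidean distance (Python 3.8+)
    d_min, d_max = min(dists), max(dists)
    return (d_max - d_min) / d_min


# As D grows, nearest and farthest neighbours become almost equally far away.
for dim in (2, 10, 100, 1000):
    print(f"D={dim:5d}  relative contrast = {relative_contrast(dim):.3f}")
```

A shrinking contrast means that distance-based quantities, such as Gaussian memberships, separate samples less and less clearly as D increases.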

Contributions:

1. Identifies saturation of the softmax as the reason for the curse of dimensionality in TSK fuzzy systems with Gaussian MFs.

2. Proposes HTSK to address the curse of dimensionality.

3. Experimentally validates that LogTSK and HTSK achieve better performance.
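
To make the saturation argument concrete, the sketch below computes normalized firing levels as a softmax over z_r = -Σ_d (x_d - m_{r,d})² / (2σ_{r,d}²) for a random input and random rule centers (purely illustrative, with all σ set to 1), and compares it with an HTSK-style variant that divides the sum by the dimensionality D. The HTSK form reflects our reading of the paper and should be treated as an assumption; the helper names are hypothetical.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax: subtract the max before exponentiating."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def rule_logits(x, centers, sigmas, average=False):
    """z_r = -sum_d (x_d - m_{r,d})^2 / (2 sigma_{r,d}^2) for every rule r.
    With average=True the sum is divided by D (assumed HTSK-style scaling)."""
    dim = len(x)
    logits = []
    for m_r, s_r in zip(centers, sigmas):
        sq = sum((xd - md) ** 2 / (2.0 * sd ** 2) for xd, md, sd in zip(x, m_r, s_r))
        logits.append(-sq / dim if average else -sq)
    return logits


def max_firing_level(dim, n_rules=5, sigma=1.0, average=False, seed=0):
    """Largest normalized firing level for one random input.
    A value close to 1 means the softmax has saturated (one rule dominates);
    a value close to 1 / n_rules means all rules contribute."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    centers = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_rules)]
    sigmas = [[sigma] * dim for _ in range(n_rules)]
    return max(softmax(rule_logits(x, centers, sigmas, average)))


for dim in (2, 10, 100, 1000):
    plain = max_firing_level(dim)
    htsk = max_firing_level(dim, average=True)
    print(f"D={dim:5d}  max firing level: sum={plain:.3f}  HTSK mean={htsk:.3f}")
```

Dividing the logits by D acts like raising the softmax temperature, so the largest normalized firing level can only stay the same or drop; with the plain sum, the spread of z_r across rules grows with D and pushes one rule toward a firing level of 1.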

II TSK for high-dimensional datasets

First, the author introduces the traditional TSK fuzzy system and its parameters in the context of high-dimensional datasets.
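
For reference, a standard first-order TSK system with Gaussian MFs can be written as follows. The notation (D inputs, R rules, centers m_{r,d}, standard deviations σ_{r,d}, consequent parameters b_{r,d}) is ours and may differ from the paper's, and the HTSK line reflects our reading that HTSK averages, rather than sums, over the D dimensions.

$$
\begin{aligned}
&\text{Rule } r:\ \text{IF } x_1 \text{ is } X_{r,1} \text{ and } \dots \text{ and } x_D \text{ is } X_{r,D},\ \text{THEN } y_r(\mathbf{x}) = b_{r,0} + \sum_{d=1}^{D} b_{r,d}\, x_d \\
&\mu_{X_{r,d}}(x_d) = \exp\!\left(-\frac{(x_d - m_{r,d})^2}{2\sigma_{r,d}^2}\right), \qquad
f_r(\mathbf{x}) = \prod_{d=1}^{D} \mu_{X_{r,d}}(x_d) = \exp\big(z_r(\mathbf{x})\big) \\
&z_r(\mathbf{x}) = -\sum_{d=1}^{D} \frac{(x_d - m_{r,d})^2}{2\sigma_{r,d}^2}, \qquad
\bar f_r(\mathbf{x}) = \frac{f_r(\mathbf{x})}{\sum_{i=1}^{R} f_i(\mathbf{x})} = \operatorname{softmax}\big(z_1(\mathbf{x}),\dots,z_R(\mathbf{x})\big)_r \\
&y(\mathbf{x}) = \sum_{r=1}^{R} \bar f_r(\mathbf{x})\, y_r(\mathbf{x}) \\
&\text{HTSK: } z_r^{\mathrm{H}}(\mathbf{x}) = -\frac{1}{D}\sum_{d=1}^{D} \frac{(x_d - m_{r,d})^2}{2\sigma_{r,d}^2}, \qquad
\bar f_r^{\mathrm{H}}(\mathbf{x}) = \operatorname{softmax}\big(z_1^{\mathrm{H}}(\mathbf{x}),\dots,z_R^{\mathrm{H}}(\mathbf{x})\big)_r
\end{aligned}
$$

Since each z_r is minus a sum of D non-negative terms, its differences across rules grow with D, so the softmax tends to assign a normalized firing level close to 1 to a single rule; dividing by D keeps the logits on a scale that does not grow with the dimensionality.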

Then the author uses k-means clustering to obtain the TSK antecedent parameters. The part modified in this paper is:

To improve the performance of HTSK, the author computes the firing level as follows:

In a later paper by Cui, Eq. (9) is rewritten as:

III Results

Some passages below are copied from the paper; if this is inappropriate, please contact me and I will delete them.

A Datasets

Dataset splitting and preprocessing steps.

Twelve regression datasets with various sizes and dimensionalities, summarized in Table I, were used to validate our proposed algorithms. For each dataset, we randomly selected 70% samples as the training set, and the remaining 30% as the test set. 10% samples from the training set were further randomly selected as the validation set for parameter tuning and model selection. z-score normalization was applied to both the features and labels, so that the results from different datasets were at the same scale and can be directly compared.
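
The split and normalization described above can be sketched as follows. The quoted text does not say which samples the z-score statistics are computed from; this sketch assumes the training split only, and the toy data, function names, and seeds are hypothetical.

```python
import random
import statistics


def split_indices(n, seed=0):
    """Shuffle 0..n-1, take 70% as training and 30% as test, then move 10%
    of the training indices into a validation set."""
    rng = random.Random(seed)
    idx = list(range(n))
    rng.shuffle(idx)
    n_train = round(0.7 * n)
    train, test = idx[:n_train], idx[n_train:]
    n_val = round(0.1 * len(train))
    val, train = train[:n_val], train[n_val:]
    return train, val, test


def fit_zscore(columns):
    """(mean, population std) for each column; a zero std is replaced by 1
    so that constant columns do not cause a division by zero."""
    stats = []
    for col in columns:
        mu = statistics.fmean(col)
        sd = statistics.pstdev(col)
        stats.append((mu, sd if sd > 0 else 1.0))
    return stats


def apply_zscore(rows, stats):
    """Standardize every row feature-wise with the given (mean, std) pairs."""
    return [[(v - mu) / sd for v, (mu, sd) in zip(row, stats)] for row in rows]


# Hypothetical toy data: 100 samples, 3 features, 1 label.
rng = random.Random(1)
X = [[rng.uniform(0, 10), rng.gauss(5, 2), rng.random()] for _ in range(100)]
y = [sum(row) + rng.gauss(0, 0.1) for row in X]

train, val, test = split_indices(len(X))
x_stats = fit_zscore(list(zip(*[X[i] for i in train])))  # per-feature stats
y_stats = fit_zscore([[y[i] for i in train]])             # label stats

X_train = apply_zscore([X[i] for i in train], x_stats)
y_train = [v[0] for v in apply_zscore([[y[i]] for i in train], y_stats)]
print(len(train), len(val), len(test))  # 63 7 30
```

The same `x_stats` and `y_stats` would then be applied to the validation and test samples, so that no information from them leaks into the normalization.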

B Algorithm

Introduction of the compared algorithms and their parameter settings. MSE is used as the loss function.
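
As a hedged illustration of training with the MSE loss by mini-batch gradient descent (not the authors' code), the sketch below updates only the consequent parameters b of a TSK model. As a simplification, the normalized firing levels are taken as given (random softmax values here); in a full TSK or HTSK model the antecedent centers and standard deviations would be trained as well. The batch size 16, learning rate 0.1, and 50 epochs are arbitrary choices.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(fbar_row, x_row, b):
    """TSK output: sum_r fbar_r * (b_r0 + sum_d b_rd * x_d)."""
    xt = [1.0] + list(x_row)  # prepend 1 for the bias term b_r0
    return sum(f * sum(bj * xj for bj, xj in zip(b_r, xt)) for f, b_r in zip(fbar_row, b))


def mse(fbar, X, y, b):
    """Mean squared error over all samples."""
    return sum((predict(f, x, t_b := b) - t) ** 2 for f, x, t in zip(fbar, X, y)) / len(y)


def mbgd_step(fbar, X, y, b, batch, lr):
    """One gradient step on the mini-batch MSE w.r.t. the consequent parameters:
    dL/db_rj = (2 / |batch|) * sum_n (yhat_n - y_n) * fbar_nr * xt_nj."""
    grad = [[0.0] * len(b_r) for b_r in b]
    for n in batch:
        err = predict(fbar[n], X[n], b) - y[n]
        xt = [1.0] + list(X[n])
        for r, f in enumerate(fbar[n]):
            for j, xj in enumerate(xt):
                grad[r][j] += 2.0 * err * f * xj / len(batch)
    return [[bj - lr * g for bj, g in zip(b_r, g_r)] for b_r, g_r in zip(b, grad)]


rng = random.Random(0)
N, D, R = 128, 3, 4
X = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(N)]
y = [1.0 + 2.0 * x[0] - x[1] + rng.gauss(0, 0.1) for x in X]
# Hypothetical fixed normalized firing levels (softmax of random logits per sample).
fbar = [softmax([rng.gauss(0, 1) for _ in range(R)]) for _ in range(N)]
b = [[0.0] * (D + 1) for _ in range(R)]

initial = mse(fbar, X, y, b)
idx = list(range(N))
for epoch in range(50):
    rng.shuffle(idx)  # new mini-batch partition every epoch
    for start in range(0, N, 16):
        b = mbgd_step(fbar, X, y, b, idx[start:start + 16], lr=0.1)
final = mse(fbar, X, y, b)
print(f"MSE before: {initial:.3f}, after: {final:.3f}")
```

With the firing levels fixed, the MSE is a convex quadratic in b, so a small enough learning rate makes each mini-batch step reduce that batch's loss.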

C Performance evaluation

- means and standard deviations;

- p-values of the post-hoc tests;

- different numbers of rules;

- ablation experiments;

- different optimizers;

- influence of the batch size on the mean, the standard deviation, and the gradients of the consequent parameters.