Spark Core

I. Spark RDD

RDD overview

1. RDD basics

2. RDD in the source code

3. RDD properties
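Spark's RDD abstract class describes every RDD through five properties: a list of partitions, a function that computes each partition, a list of dependencies on parent RDDs, an optional partitioner for key/value RDDs, and an optional list of preferred locations for each partition. The trait below is a hypothetical, heavily simplified mirror of those members. The names MiniRDD, ParallelList and MappedList are invented for illustration, and Spark's real signatures take extra arguments (for example, compute also receives a TaskContext).

```scala
object MiniRDDSketch {
  // Hypothetical, simplified stand-ins for the five RDD properties
  trait MiniRDD[T] {
    def partitions: Vector[Int]                           // 1. list of partitions (just indices here)
    def compute(split: Int): Iterator[T]                  // 2. how to compute one partition
    def dependencies: List[MiniRDD[_]]                    // 3. parent RDDs
    def partitioner: Option[Any => Int] = None            // 4. optional key -> partition function
    def preferredLocations(split: Int): Seq[String] = Nil // 5. optional data-locality hints

    // Transformation: returns a new MiniRDD that depends on this one
    def map[U](f: T => U): MiniRDD[U] = new MappedList(this, f)

    // Action: compute every partition and gather the results
    def collect(): List[T] = partitions.toList.flatMap(p => compute(p).toList)
  }

  // A source dataset holding pre-split data (similar in spirit to parallelize)
  class ParallelList[T](slices: Vector[Vector[T]]) extends MiniRDD[T] {
    def partitions: Vector[Int] = slices.indices.toVector
    def compute(split: Int): Iterator[T] = slices(split).iterator
    def dependencies: List[MiniRDD[_]] = Nil
  }

  // A derived dataset: partition i is computed from the parent's partition i
  class MappedList[T, U](parent: MiniRDD[T], f: T => U) extends MiniRDD[U] {
    def partitions: Vector[Int] = parent.partitions
    def compute(split: Int): Iterator[U] = parent.compute(split).map(f)
    def dependencies: List[MiniRDD[_]] = List(parent)
  }

  def main(args: Array[String]): Unit = {
    val base = new ParallelList(Vector(Vector(1, 2), Vector(3)))
    println(base.map(_ * 2).collect())
  }
}
```

Nothing is computed when map is called; the work happens only inside collect, which walks the dependency chain back to the source partitions.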

4. Wide and narrow dependencies in Spark

Creating RDDs

Creating an RDD in the driver program

1. parallelize
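parallelize(seq, numSlices) splits a local collection into numSlices partitions. For an ordinary Seq, Spark's ParallelCollectionRDD cuts the data at positions (i * length) / numSlices, which keeps partition sizes within one element of each other (Spark handles Range inputs separately). The plain-Scala sketch below reproduces only that position arithmetic; slicePositions and slice are hypothetical helper names, not Spark API.

```scala
object ParallelizeSketch {
  // Split points for numSlices partitions over a sequence of the given length,
  // using (i * length) / numSlices as the start of slice i
  def slicePositions(length: Int, numSlices: Int): Seq[(Int, Int)] = {
    require(numSlices >= 1, "numSlices must be positive")
    (0 until numSlices).map { i =>
      val start = ((i.toLong * length) / numSlices).toInt
      val end = (((i + 1).toLong * length) / numSlices).toInt
      (start, end)
    }
  }

  // Cut a local collection into partitions at those positions
  def slice[T](data: Seq[T], numSlices: Int): Seq[Seq[T]] =
    slicePositions(data.length, numSlices).map { case (start, end) => data.slice(start, end) }

  def main(args: Array[String]): Unit = {
    // The Test-01 data, split as if by sc.parallelize(list, 3)
    slice(List(5, 6, 4, 2, 9, 7, 8, 1, 10), 3).foreach(println)
  }
}
```

In the shell, rdd.getNumPartitions shows how many slices a real parallelize call produced.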

Creating an RDD from an external dataset

2. textFile

RDD operations

Caching an RDD in memory

1. RDD transformations

2. Common transformations

3. RDD actions

4. Common actions
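Among the common actions, aggregate is the least obvious: rdd.aggregate(zero)(seqOp, combOp) folds each partition with seqOp starting from zero, then merges the per-partition results with combOp. The sketch below models the partitions as a local List of Lists (an assumption for illustration; no Spark is involved) and uses it to compute an average through a (sum, count) pair, the same idea as the per-key average in the Pair RDD section.

```scala
object AggregateSketch {
  // Models rdd.aggregate(zero)(seqOp, combOp) over locally held partitions.
  // zero seeds the fold of every partition and also the final merge.
  def aggregate[T, U](partitions: List[List[T]], zero: U)(seqOp: (U, T) => U, combOp: (U, U) => U): U =
    partitions.map(_.foldLeft(zero)(seqOp)).foldLeft(zero)(combOp)

  def main(args: Array[String]): Unit = {
    // 1..5 spread over two partitions
    val parts = List(List(1, 2), List(3, 4, 5))
    val (sum, count) = aggregate(parts, (0, 0))(
      (acc, x) => (acc._1 + x, acc._2 + 1), // fold one element into (sum, count)
      (a, b) => (a._1 + b._1, a._2 + b._2)  // merge two partial (sum, count) pairs
    )
    println(sum.toDouble / count) // 3.0
  }
}
```

Because zero is applied once per partition and once more in the merge, it should be a neutral element (here (0, 0)); otherwise the result depends on the number of partitions.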

Passing functions to Spark
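When a function passed to a transformation refers to a field of its enclosing object, the closure captures the whole object, and Spark must serialize it to ship the task to the executors; if that object is not serializable, the job fails with a "Task not serializable" error caused by a NotSerializableException. Copying the field into a local val first keeps the closure small. The sketch checks this with plain Java serialization, which Spark uses for closures by default; Searcher and serializes are hypothetical names, and the capture behavior described in the comments is that of the Scala 2.12/2.13 compilers Spark is built with.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

object PassingFunctionsSketch {
  // Hypothetical helper class; note that it does NOT extend Serializable
  class Searcher(val query: String) {
    // Uses this.query, so the lambda captures `this` (the whole Searcher)
    def matcherCapturingThis: String => Boolean = line => line.contains(query)

    // Copies the field into a local val, so the lambda only needs the String
    def matcherCapturingLocal: String => Boolean = {
      val q = query
      line => line.contains(q)
    }
  }

  // True if Java serialization accepts the object
  def serializes(obj: AnyRef): Boolean =
    try {
      val out = new ObjectOutputStream(new ByteArrayOutputStream())
      out.writeObject(obj)
      out.close()
      true
    } catch {
      case _: NotSerializableException => false
    }

  def main(args: Array[String]): Unit = {
    val s = new Searcher("Spark")
    println(serializes(s.matcherCapturingThis))  // false: drags in the Searcher
    println(serializes(s.matcherCapturingLocal)) // true on Scala 2.12/2.13: only the String is captured
  }
}
```

Both functions filter lines identically; only what gets shipped to the executors differs. Functions defined in a top-level object avoid the problem in the same way.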

Lazy evaluation and persistence (caching) of RDDs

1. Lazy evaluation
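Transformations only record what to do; nothing runs until an action asks for a result. Scala's own collection views behave the same way, so the sketch below uses a view as a local analogy (not Spark) with a counter, showing that map and filter do no work until toList, which plays the role of collect(), forces them.

```scala
object LazyEvaluationSketch {
  def run(): (Int, Int, List[Int]) = {
    var calls = 0
    val data = List(1, 2, 3, 4, 5)
    // Like rdd.map(...).filter(...): builds a pipeline but computes nothing yet
    val pipeline = data.view.map { x => calls += 1; x * 2 }.filter(_ > 4)
    val callsBeforeAction = calls // still 0
    // Like collect(): the action forces the whole pipeline
    val result = pipeline.toList
    (callsBeforeAction, calls, result)
  }

  def main(args: Array[String]): Unit =
    println(run()) // (0,5,List(6, 8, 10))
}
```

As with an RDD that is not cached, traversing the same view a second time would run the map function again.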

2. Caching methods

cache()

persist()

Removing an RDD from the cache

unpersist()
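Without caching, every action recomputes an RDD from its lineage; cache() (for RDDs, the same as persist() with the default MEMORY_ONLY level) keeps the computed data after the first action, and unpersist() drops it. The hypothetical CachedDataset class below models only that behavior with a memoized, resettable value; it ignores storage levels, eviction and partitions.

```scala
object CacheSketch {
  // Hypothetical model of RDD caching; compute() stands in for the lineage
  class CachedDataset[T](compute: () => List[T]) {
    private var stored: Option[List[T]] = None
    private var cacheRequested = false

    // Like cache()/persist(): only marks the dataset; nothing is computed yet
    def cache(): this.type = { cacheRequested = true; this }

    // Like unpersist(): forget the stored data and stop caching
    def unpersist(): this.type = { cacheRequested = false; stored = None; this }

    // Like an action: reuse stored data if present, otherwise recompute
    def collect(): List[T] = stored match {
      case Some(data) => data
      case None =>
        val data = compute()
        if (cacheRequested) stored = Some(data)
        data
    }
  }

  def main(args: Array[String]): Unit = {
    var computations = 0
    val ds = new CachedDataset(() => { computations += 1; List(1, 2, 3).map(_ * 10) })
    ds.collect(); ds.collect()
    println(computations) // 2: no cache, recomputed for each action
    ds.cache(); ds.collect(); ds.collect()
    println(computations) // 3: computed once more, then served from the cache
    ds.unpersist(); ds.collect()
    println(computations) // 4: cache dropped, recomputed again
  }
}
```

Like the real cache(), marking the dataset costs nothing by itself; the data is stored by the first action that runs afterwards.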

3. Persistence levels

RDD exercises

Test-01

    scala> val rdd1 = sc.parallelize(List(5, 6, 4, 2, 9, 7, 8, 1, 10))
    scala> val rdd2 = rdd1.map(x => x * 2)
    scala> val sort = rdd2.sortBy(x => x, true)
    scala> val rdd3 = sort.filter(_ >= 10)
    scala> rdd3.collect()

Test-02

    scala> val rdd1 = sc.parallelize(Array("a b c", "d e f", "h i j"))
    scala> val rdd2 = rdd1.flatMap(_.split(" "))
    scala> rdd2.collect()

Test-03

Union, intersection and deduplication of RDDs

    scala> val rdd1 = sc.parallelize(List(1, 2, 3, 4))
    scala> val rdd2 = sc.parallelize(List(5, 6, 4, 3))
    scala> val rdd3 = rdd1.union(rdd2)
    scala> rdd3.collect()
    scala> val rdd4 = rdd1.intersection(rdd2)
    scala> rdd4.collect()
    scala> rdd3.distinct.collect

Test-04

Join two pair RDDs, then take their union and group it by key

    scala> val rdd1 = sc.parallelize(List(("tom", 1), ("jerry", 3), ("kitty", 2)))
    scala> val rdd2 = sc.parallelize(List(("jerry", 2), ("tom", 1), ("shuke", 2)))
    scala> val rdd3 = rdd1.join(rdd2)
    scala> rdd3.collect()
    scala> val rdd4 = rdd1.union(rdd2)
    scala> rdd4.groupByKey.collect

Test-05

RDD aggregation

    scala> val rdd1 = sc.parallelize(List(1, 2, 3, 4, 5))
    scala> val rdd2 = rdd1.reduce(_ + _)
    scala> rdd2

reduce is an action, so rdd2 is a plain Int (15) rather than an RDD.

Test-06

Aggregate an RDD by key, then sort it by value

To sort by value, first make the value the key: t => (t._2, t._1) turns ("tom",1) into (1,"tom"). Sort by key, then swap each pair back before output.

    scala> val rdd1 = sc.parallelize(List(("tom", 1), ("kitty", 2), ("shuke", 1)))
    scala> val rdd2 = sc.parallelize(List(("jerry", 2), ("tom", 3), ("shuke", 2), ("kitty", 5)))
    scala> val rdd3 = rdd1.union(rdd2)
    scala> val rdd4 = rdd3.reduceByKey(_ + _)
    scala> rdd4.collect()
    scala> val rdd5 = rdd4.map(t => (t._2, t._1)).sortByKey(false).map(t => (t._2, t._1))
    scala> rdd5.collect()
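The same pipeline can be checked without a cluster. The sketch below assumes plain Scala Lists stand in for the RDDs (union becomes ++, and reduceByKey becomes groupBy followed by a sum), applies the swap-sort-swap trick, and compares it with sorting on the value directly, which on a real RDD would be rdd4.sortBy(_._2, ascending = false).

```scala
object SortByValueSketch {
  // Same data as rdd1 and rdd2 in Test-06, held in local Lists (not RDDs)
  val rdd1 = List(("tom", 1), ("kitty", 2), ("shuke", 1))
  val rdd2 = List(("jerry", 2), ("tom", 3), ("shuke", 2), ("kitty", 5))

  // union -> ++, reduceByKey(_ + _) -> group on the key and sum the values
  val summed: List[(String, Int)] =
    (rdd1 ++ rdd2)
      .groupBy(_._1)
      .map { case (k, pairs) => (k, pairs.map(_._2).sum) }
      .toList

  // Swap-sort-swap: the value becomes the key, sort descending, swap back
  val swapped: List[(String, Int)] =
    summed.map(t => (t._2, t._1)).sortBy(_._1)(Ordering[Int].reverse).map(t => (t._2, t._1))

  // Sorting on the value directly gives the same order
  val direct: List[(String, Int)] = summed.sortBy(_._2)(Ordering[Int].reverse)

  def main(args: Array[String]): Unit = {
    println(swapped)           // List((kitty,7), (tom,4), (shuke,3), (jerry,2))
    println(direct == swapped) // true
  }
}
```

The swap trick is needed for sortByKey, which only orders by key; sortBy takes the sort field as a function and avoids the two extra map steps.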

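II. Key/value pair operations on Spark RDDs

Pair RDDs (key/value pairs)

1. Concept

2. Ways to create a pair RDD

A. Create one directly in the program

B. Convert a regular RDD into a pair RDD by mapping each element to a (K, V) tuple

C. Read data that is already stored in a key/value format (e.g. JSON)

3. Creating a pair RDD in three languages

file.txt:

    Hadoop HDFS MapReduce
    Spark Core SQL Streaming

Create a pair RDD in Scala, using the first word of each line as the key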

    package com.saddam.spark.SparkCore

    import org.apache.spark.{SparkConf, SparkContext}

    object PairRDD {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        val lines = sc.textFile("D:\\Spark\\DataSets\\file.txt")
        // Key each line by its first word: (firstWord, wholeLine)
        val pairs = lines.map(x => (x.split(" ")(0), x))
        pairs.foreach(p => {
          println(p._1 + ":" + p._2)
        })
        /*
        Hadoop:Hadoop HDFS MapReduce
        Spark:Spark Core SQL Streaming
        */
        sc.stop()
      }
    }

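Create a pair RDD in Java, using the first word of each line as the key

Create a pair RDD in Python, using the first word of each line as the key

4. Pair RDD transformations

Filtering elements

Filter on the second element of each pair (the value)

a. Read the file into an RDD

b. Convert the RDD into a pair RDD

c. Filter it with filter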
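The three steps above can be sketched with plain Scala collections. The sketch assumes the two lines of file.txt are held in a local List instead of being read with sc.textFile, and the predicate (keep values with at most three words) is an arbitrary illustration; the same map and filter calls work unchanged on a real RDD.

```scala
object PairFilterSketch {
  // a. Stand-in for sc.textFile(...): the two lines of file.txt
  val lines: List[String] = List("Hadoop HDFS MapReduce", "Spark Core SQL Streaming")

  // b. Convert to (key, value) pairs, keyed by the first word
  val pairs: List[(String, String)] = lines.map(x => (x.split(" ")(0), x))

  // c. Filter on the second element (the value); illustrative predicate:
  //    keep lines with at most three words
  val filtered: List[(String, String)] =
    pairs.filter { case (_, value) => value.split(" ").length <= 3 }

  def main(args: Array[String]): Unit =
    filtered.foreach { case (k, v) => println(k + ":" + v) } // Hadoop:Hadoop HDFS MapReduce
}
```

Pattern matching on (key, value) inside filter reads more clearly than x._2 once the predicate grows.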

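Aggregation

    package com.saddam.spark.SparkCore

    import org.apache.spark.{SparkConf, SparkContext}

    object PairRDD_聚合操作 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        val rdd = sc.parallelize(List(("panda", 0), ("pink", 3), ("pirate", 3), ("panda", 1), ("pink", 4)))
        // Pair every value with a count of 1, then sum the values and the counts per key
        val rdd1 = rdd.mapValues(x => (x, 1)).reduceByKey((x, y) => (x._1 + y._1, x._2 + y._2))
        // Per-key average; toDouble avoids integer division (which would print pink:3 and panda:0)
        rdd1.foreach(x => {
          println(x._1 + ":" + x._2._1.toDouble / x._2._2)
        })
        sc.stop()
      }
    }

panda:0.5

pirate:3.0

pink:3.5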

Grouping data

    package com.saddam.spark.SparkCore

    import org.apache.spark.{SparkConf, SparkContext}

    object PairRDD_数据分组 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        val rdd = sc.parallelize(List(("panda", 0), ("pink", 3), ("pirate", 3), ("panda", 1), ("pink", 4)))
        // Collect all values of each key into one group
        val rdd1 = rdd.groupByKey()
        // Persist the grouped RDD
        val rdd2 = rdd1.persist()
        // println(rdd2.first()._1 + ":" + rdd2.first()._2)
        rdd2.foreach(x => {
          println(x._1 + ":" + x._2)
        })
        sc.stop()
      }
    }

pirate:CompactBuffer(3)

panda:CompactBuffer(0, 1)

pink:CompactBuffer(3, 4)

Joins

    package com.saddam.spark.SparkCore

    import org.apache.spark.{SparkConf, SparkContext}

    object PairRDD_连接操作 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        val rdd1 = sc.parallelize(List(("frank", 30), ("bob", 9), ("silly", 3)))
        val rdd2 = sc.parallelize(List(("frank", 88), ("bob", 12), ("marry", 22), ("frank", 21), ("bob", 22)))

        // Inner join: keeps only keys present in both RDDs
        val rdd3 = rdd1.join(rdd2)
        rdd3.foreach(x => {
          println(x._1 + ":" + x._2)
        })
        /*
        frank:(30,88)
        bob:(9,12)
        frank:(30,21)
        bob:(9,22)
        */

        // Left outer join: keeps every key of rdd1; a missing right-hand value becomes None
        val rdd4 = rdd1.leftOuterJoin(rdd2)
        rdd4.foreach(x => {
          println(x._1 + ":" + x._2)
        })
        /*
        silly:(3,None)
        frank:(30,Some(88))
        frank:(30,Some(21))
        bob:(9,Some(12))
        bob:(9,Some(22))
        */

        // Right outer join: keeps every key of rdd2; a missing left-hand value becomes None
        val rdd5 = rdd1.rightOuterJoin(rdd2)
        rdd5.foreach(x => {
          println(x._1 + ":" + x._2)
        })
        /*
        frank:(Some(30),88)
        frank:(Some(30),21)
        marry:(None,22)
        bob:(Some(9),12)
        bob:(Some(9),22)
        */

        // Full outer join: keeps every key from either RDD
        val rdd6 = rdd1.fullOuterJoin(rdd2)
        rdd6.foreach(x => {
          println(x._1 + ":" + x._2)
        })
        /*
        frank:(Some(30),Some(88))
        frank:(Some(30),Some(21))
        silly:(Some(3),None)
        marry:(None,Some(22))
        bob:(Some(9),Some(12))
        bob:(Some(9),Some(22))
        */
        sc.stop()
      }
    }

Sorting data

    package com.saddam.spark.SparkCore

    import org.apache.spark.{SparkConf, SparkContext}

    object PairRDD_数据排序 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        val rdd = sc.parallelize(List(("frank", 30), ("bob", 9), ("silly", 3)))

        // Sort by key (lexicographic order for String keys)
        val rdd2 = rdd.sortByKey()
        rdd2.collect().foreach(println)
        /*
        (bob,9)
        (frank,30)
        (silly,3)
        */

        // Sort by value: swap to (value, key), sortByKey, swap back; sortByKey(false) sorts descending
        val rdd3 = rdd.map(x => (x._2, x._1)).sortByKey().map(x => (x._2, x._1))
        rdd3.collect().foreach(println)
        /*
        (silly,3)
        (bob,9)
        (frank,30)
        */
        sc.stop()
      }
    }

5. Pair RDD actions

6. Data partitioning

Why partition data

Partitioning operations
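partitionBy(new HashPartitioner(n)), used in the PageRank example, sends each key to partition key.hashCode modulo n, shifted to be non-negative, with null keys going to partition 0. The sketch below reimplements that rule in plain Scala so the assignment can be inspected; partitionFor is a hypothetical helper name, not Spark API.

```scala
object HashPartitionSketch {
  // Mirrors HashPartitioner's rule: non-negative (hashCode mod numPartitions),
  // with null keys sent to partition 0
  def partitionFor(key: Any, numPartitions: Int): Int = key match {
    case null => 0
    case _ =>
      val rawMod = key.hashCode % numPartitions
      rawMod + (if (rawMod < 0) numPartitions else 0)
  }

  def main(args: Array[String]): Unit = {
    // With 10 partitions, as in the PageRank example; "A".hashCode is 65, so A -> 5
    List("A", "B", "C", "D").foreach(p => println(p + " -> partition " + partitionFor(p, 10)))
  }
}
```

Because the assignment is deterministic, an RDD that is partitioned once and persisted keeps its layout, so later joins on the same keys can reuse it instead of reshuffling that RDD every time.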

Example: the PageRank algorithm

Computing the rank (importance) of each web page

    package com.saddam.spark.SparkCore

    import org.apache.spark.{HashPartitioner, SparkConf, SparkContext}

    object PageRank {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair rdd")
        val sc = new SparkContext(conf)
        // (page, pages it links to); hash-partitioned once and persisted because it is joined in every iteration
        val links = sc.parallelize(List(
            ("A", List("B", "C")),
            ("B", List("A", "C")),
            ("C", List("A", "B", "D")),
            ("D", List("C"))))
          .partitionBy(new HashPartitioner(10))
          .persist()
        // Every page starts with rank 1.0
        var ranks = links.mapValues(v => 1.0)
        for (i <- 0 until 10) {
          // Each page sends rank / (number of outgoing links) to every page it links to
          val contributions = links.join(ranks).flatMap {
            case (pageId, (neighbors, rank)) => neighbors.map(dest => (dest, rank / neighbors.size))
          }
          // New rank = 0.15 + 0.85 * (sum of the contributions received)
          ranks = contributions.reduceByKey((x, y) => x + y).mapValues(v => 0.15 + 0.85 * v)
        }
        ranks.sortByKey().collect().foreach(println)
        ranks.saveAsTextFile("D:\\Spark\\OutPut\\PageRank")
        sc.stop()
      }

      /*
      (A,0.9850243302878132)
      (B,0.9850243302878132)
      (C,1.4621033282930214)
      (D,0.5678480111313515)
      */
    }

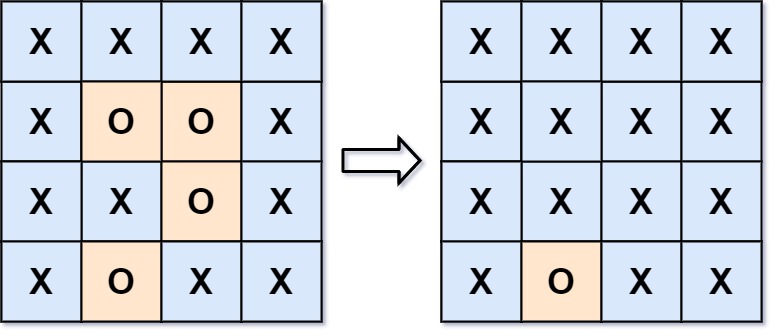
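To see the update rule without a cluster, the sketch below runs the same ten iterations on a local Map instead of RDDs: the join becomes a lookup of the current rank, flatMap emits the contributions, and reduceByKey becomes groupBy plus a sum. The constants 0.15 and 0.85 are taken from the Spark job above; the object name is illustrative.

```scala
object PageRankSketch {
  // Same link graph as the Spark example: page -> pages it links to
  val links: Map[String, List[String]] = Map(
    "A" -> List("B", "C"),
    "B" -> List("A", "C"),
    "C" -> List("A", "B", "D"),
    "D" -> List("C")
  )

  def run(iterations: Int): Map[String, Double] = {
    // Every page starts with rank 1.0
    var ranks: Map[String, Double] = links.map { case (page, _) => (page, 1.0) }
    for (_ <- 0 until iterations) {
      // Each page splits its current rank evenly among its outgoing links
      val contributions: List[(String, Double)] = links.toList.flatMap {
        case (page, neighbors) => neighbors.map(dest => (dest, ranks(page) / neighbors.size))
      }
      // Sum contributions per destination, then apply 0.15 + 0.85 * sum.
      // Every page in this graph has an incoming link, so none drops out of ranks.
      ranks = contributions
        .groupBy(_._1)
        .map { case (page, cs) => (page, 0.15 + 0.85 * cs.map(_._2).sum) }
    }
    ranks
  }

  def main(args: Array[String]): Unit =
    run(10).toList.sortBy(_._1).foreach(println)
}
```

Since every page here has outgoing links, the ranks always sum to 4 (0.15 * 4 + 0.85 * 4), and A and B stay equal because their positions in the graph are symmetric.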

7. Wide and narrow dependencies

Concept

Advantages of narrow dependencies

Using wide and narrow dependencies
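The difference can be modeled without Spark. In the sketch below, assumed purely for illustration, a dataset is a Vector of partitions: a map-like step keeps a one-to-one mapping between input and output partitions (narrow), while regrouping by key lets one output partition depend on several input partitions (wide). The wide case is where Spark has to shuffle data and start a new stage.

```scala
object DependencySketch {
  // A dataset is modeled as a Vector of partitions, each partition a Vector of records
  type Partitioned[T] = Vector[Vector[T]]

  // Non-negative hashCode modulo n, the rule HashPartitioner uses
  private def bucket(key: Any, n: Int): Int = {
    val m = key.hashCode % n
    if (m < 0) m + n else m
  }

  // Narrow dependency (like map or filter): output partition i is built only from input partition i
  def narrowMap[A, B](in: Partitioned[A])(f: A => B): Partitioned[B] =
    in.map(_.map(f))

  // Wide dependency (like groupByKey or reduceByKey): records are regrouped by key,
  // so one output partition can need records from many input partitions (a shuffle)
  def shuffleByKey[K, V](in: Partitioned[(K, V)], numOut: Int): Partitioned[(K, V)] =
    Vector.tabulate(numOut) { p =>
      in.flatten.filter { case (k, _) => bucket(k, numOut) == p }
    }

  // Input partitions that output partition p of the shuffle reads from
  def parentsOf[K, V](in: Partitioned[(K, V)], numOut: Int, p: Int): Set[Int] =
    in.zipWithIndex.collect {
      case (part, i) if part.exists { case (k, _) => bucket(k, numOut) == p } => i
    }.toSet

  def main(args: Array[String]): Unit = {
    val in = Vector(Vector(("a", 1), ("b", 2)), Vector(("a", 3), ("c", 4)))
    println(narrowMap(in)(kv => (kv._1, kv._2 * 10)))
    println(shuffleByKey(in, 2))
    println((0 until 2).map(p => p -> parentsOf(in, 2, p)))
  }
}
```

With narrow dependencies a lost partition can be rebuilt from a single parent partition, and consecutive narrow steps can be pipelined inside one task; a lost shuffle output may need data from every parent partition.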

三、Spark数据读取与保存

Spark数据源

概述及分类

文件格式

文件系统

Spark SQL中的结构化数据

Spark访问数据库系统

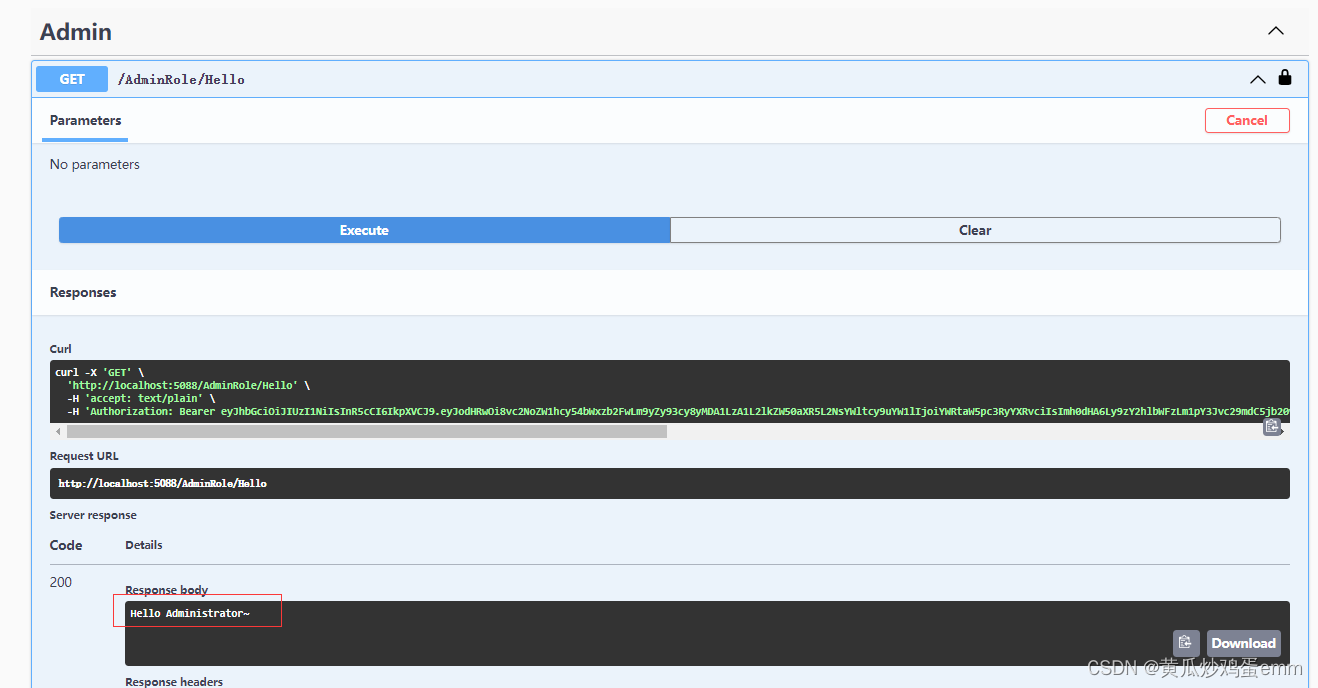

1.MySQL数据库连接

1.代码实战

    package com.saddam.spark.SparkCore

    import java.sql.{DriverManager, ResultSet}

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.rdd.JdbcRDD

    /**
     * Spark: reading from a MySQL database
     */
    object SparkConnectMySQL {
      // Step 1: a function that opens a connection to the database
      def createConnection() = {
        Class.forName("com.mysql.jdbc.Driver").newInstance()
        DriverManager.getConnection("jdbc:mysql://localhost:3306/saddam", "root", "324419")
      }

      // Step 2: a function that maps one row of the ResultSet to a tuple
      def extractValues(r: ResultSet) = {
        (r.getInt(1), r.getString(2))
      }

      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[2]").setAppName("pair")
        val sc = new SparkContext(conf)
        // Step 3: create an RDD that reads the data; the two ? are bound to each partition's id range
        val data = new JdbcRDD(sc, () => createConnection(), "select * from users where ?<=id and id<=?",
          lowerBound = 1, upperBound = 3, numPartitions = 2, mapRow = extractValues)
        data.foreach(println)
        sc.stop()
      }
    }

(1,admin)

(1,zhangsan)

(1,lisi)
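JdbcRDD needs the two ? placeholders because it runs the query once per partition, binding that partition's own id range into them. Spark splits the inclusive range [lowerBound, upperBound] into numPartitions sub-ranges; the sketch reproduces that arithmetic in plain Scala to show which ids each of the two partitions above queries. jdbcRanges is a hypothetical helper name, not part of Spark.

```scala
object JdbcRangesSketch {
  // Inclusive (start, end) id range bound to the two '?' for each partition,
  // splitting [lowerBound, upperBound] the way JdbcRDD computes its partitions
  def jdbcRanges(lowerBound: Long, upperBound: Long, numPartitions: Int): Seq[(Long, Long)] = {
    val lo = BigInt(lowerBound)
    val n = BigInt(numPartitions)
    val length = BigInt(upperBound) - lo + BigInt(1) // both bounds are inclusive
    (0 until numPartitions).map { i =>
      val start = lo + (BigInt(i) * length) / n
      val end = lo + (BigInt(i + 1) * length) / n - BigInt(1)
      (start.toLong, end.toLong)
    }
  }

  def main(args: Array[String]): Unit = {
    // lowerBound = 1, upperBound = 3, numPartitions = 2, as in SparkConnectMySQL
    jdbcRanges(1, 3, 2).foreach { case (s, e) =>
      println(s"select * from users where $s<=id and id<=$e")
    }
  }
}
```

With these settings the first partition reads id 1 and the second reads ids 2 to 3. Rows whose id falls outside [lowerBound, upperBound] are never read.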

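2. Problem and fix

Error: javax.net.ssl.SSLException MESSAGE: closing inbound before receiving peer's close_notify

Fix: disable SSL by appending useSSL=false to the JDBC URL:

jdbc:mysql://localhost:3306/saddam?useSSL=false

2. Connecting to Cassandra

Cassandra is an open-source NoSQL database.

3. Connecting to HBase

IV. Advanced Spark programming

Spark's advanced features: accumulators, broadcast variables, per-partition operations, piping to external programs, and numeric RDD operations

Advanced Spark features

Accumulators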