Developing an Offline LaTeX Formula Recognition Tool, V0.9

Table of Contents

- Developing an Offline LaTeX Formula Recognition Tool, V0.9

- Project Sources

- GUI

- Interface

- streamlit

- Offline Loading of Model Files

- Pix2Text

- texify

- Nougat-LaTex

- Packaging as an exe

- Packaging with pyinstaller

- Problems Encountered When Packaging with pyinstaller

- File "subprocess.py", line 1420, in _execute_child FileNotFoundError: [WinError 2] The system cannot find the file specified.

- Packaging streamlit with pyinstaller

- ValueError: When using CUDAExecutionProvider, the parameters combination use_cache=False, use_io_binding=True is not supported. Please either pass use_cache=True, use_io_binding=True (default), or use_cache=False, use_io_binding=False.

- [WinError 3] The system cannot find the path specified.: '~~\\dist\\main\\_internal\\cnstd\\yolov7\\torch_utils.pyc'

- ImportError: DLL load failed while importing aggregations:

- Importing the numpy C-extensions failed. This error can happen for many reasons, often due to issues with your setup or how NumPy was installed.

- ImportError: DLL load failed while importing _path: The specified module could not be found.

- All files that need to be copied into `_internal`

- Download Links

- Project Files

- Afterword

Project Sources

Thanks to these projects on GitHub:

LaTeX-OCR: pix2tex provides the GUI;

Pix2Text provides a model;

nougat-latex-ocr provides a model;

texify provides a model and the Streamlit app code.

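GUI

The GUI is taken directly from pix2tex.gui, which was contributed by katie-lim. The AI formula recognition offline tool V1.1 from 万能君的软件库 (Wannengjun's Software Library) probably borrowed this interface as well, since the two look very similar.

Since I have never learned PyQt6 and am not familiar with PyQt syntax, I did not add any other features.

Converting LaTeX to MathML is actually not hard:

    import latex2mathml.converter

    mathml_output = latex2mathml.converter.convert(latex_input)

That is all the conversion takes.

Someone has already built such a GUI on GitHub:

mygoosh/Mathematical_formula: a visual mathematical formula recognition and extraction tool built on Pix2Text + PyQT5; it can extract directly to LaTeX or MathML format, or recognize text content only (low accuracy) (Python final project / course assignment) (github.com). I only discovered this project after I had finished packaging mine, sigh.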

    from shutil import which
    import io
    import subprocess
    import sys
    import os
    import tempfile

    from PyQt6 import QtCore, QtGui
    from PyQt6.QtCore import Qt, pyqtSlot, pyqtSignal, QThread, QTimer
    from PyQt6.QtGui import QGuiApplication
    from PyQt6.QtWebEngineWidgets import QWebEngineView
    from PyQt6.QtWidgets import QMainWindow, QApplication, QMessageBox, QVBoxLayout, QWidget, \
        QPushButton, QTextEdit, QFormLayout, QHBoxLayout, QDoubleSpinBox
    from pynput.mouse import Controller
    from PIL import ImageGrab, Image
    import numpy as np
    from screeninfo import get_monitors

    from pix2text import Pix2Text, merge_line_texts
    # The resources module must be imported so that the icon is displayed.
    import resources.resources
    from texify.inference import batch_inference
    from texify.model.model import load_model
    from texify.model.processor import load_processor
    from nougat import nougat_latex


    class App(QMainWindow):
        isProcessing = False

        def __init__(self, args=None):
            super().__init__()
            self.args = args
            self.img = None
            self.processor = 1
            # mode 1: Nougat-LaTex, mode 2: texify, mode 3: Pix2Text
            if self.args.mode == 1:
                self.model = nougat_latex(self.args)
            elif self.args.mode == 2:
                self.model = load_model()
                self.processor = load_processor()
            elif self.args.mode == 3:
                self.model = Pix2Text(
                    analyzer_config=dict(
                        model_name='mfd',
                        model_type='yolov7_tiny',
                        model_fp='./models/pix2text/mfd/mfd-yolov7_tiny.pt',
                    ),
                    formula_config=dict(
                        model_name='mfr',
                        model_backend='onnx',
                        model_dir='./models/pix2text/mfr/',
                    ),
                    device='cpu',
                )
            else:
                self.model = nougat_latex(self.args)
            print("Model load OK!")
            self.initUI()
            self.snipWidget = SnipWidget(self)
            self.show()

        def initUI(self):
            self.setWindowTitle("LaTeX OCR")
            QApplication.setWindowIcon(QtGui.QIcon(':/icons/icon.svg'))
            self.left = 300
            self.top = 300
            self.width = 500
            self.height = 300
            self.setGeometry(self.left, self.top, self.width, self.height)

            # Create LaTeX display
            self.webView = QWebEngineView()
            self.webView.setHtml("")
            self.webView.setMinimumHeight(80)

            # Create textbox
            self.textbox = QTextEdit(self)
            self.textbox.textChanged.connect(self.displayPrediction)
            self.textbox.setMinimumHeight(40)

            # Create temperature text input
            self.tempField = QDoubleSpinBox(self)
            if self.args is None:
                self.tempField.setValue(0.0)
            else:
                self.tempField.setValue(self.args.temperature)
            self.tempField.setRange(0, 1)
            self.tempField.setSingleStep(0.1)

            # Create snip button
            if sys.platform == "darwin":
                self.snipButton = QPushButton('Snip [Option+S]', self)
                self.snipButton.clicked.connect(self.onClick)
            else:
                self.snipButton = QPushButton('Snip [Alt+S]', self)
                self.snipButton.clicked.connect(self.onClick)

            self.shortcut = QtGui.QShortcut(QtGui.QKeySequence('Alt+S'), self)
            self.shortcut.activated.connect(self.onClick)

            # Create retry button
            self.retryButton = QPushButton('Retry', self)
            self.retryButton.setEnabled(False)
            self.retryButton.clicked.connect(self.returnSnip)

            # Create layout
            centralWidget = QWidget()
            centralWidget.setMinimumWidth(200)
            self.setCentralWidget(centralWidget)

            lay = QVBoxLayout(centralWidget)
            lay.addWidget(self.webView, stretch=4)
            lay.addWidget(self.textbox, stretch=2)
            buttons = QHBoxLayout()
            buttons.addWidget(self.snipButton)
            buttons.addWidget(self.retryButton)
            lay.addLayout(buttons)
            settings = QFormLayout()
            settings.addRow('Temperature:', self.tempField)
            lay.addLayout(settings)

        def toggleProcessing(self, value=None):
            if value is None:
                self.isProcessing = not self.isProcessing
            else:
                self.isProcessing = value
            if self.isProcessing:
                text = 'Interrupt'
                func = self.interrupt
            else:
                if sys.platform == "darwin":
                    text = 'Snip [Option+S]'
                else:
                    text = 'Snip [Alt+S]'
                func = self.onClick
                self.retryButton.setEnabled(True)
            self.shortcut.setEnabled(not self.isProcessing)
            self.snipButton.setText(text)
            self.snipButton.clicked.disconnect()
            self.snipButton.clicked.connect(func)
            self.displayPrediction()

        @pyqtSlot()
        def onClick(self):
            self.close()
            if os.environ.get('SCREENSHOT_TOOL') == "gnome-screenshot":
                self.snip_using_gnome_screenshot()
            elif os.environ.get('SCREENSHOT_TOOL') == "grim":
                self.snip_using_grim()
            elif os.environ.get('SCREENSHOT_TOOL') == "pil":
                self.snipWidget.snip()
            elif which('gnome-screenshot'):
                self.snip_using_gnome_screenshot()
            elif which('grim') and which('slurp'):
                self.snip_using_grim()
            else:
                self.snipWidget.snip()

        @pyqtSlot()
        def interrupt(self):
            if hasattr(self, 'thread'):
                self.thread.terminate()
                self.thread.wait()
                self.toggleProcessing(False)

        def snip_using_gnome_screenshot(self):
            try:
                with tempfile.NamedTemporaryFile() as tmp:
                    subprocess.run(["gnome-screenshot", "--area", f"--file={tmp.name}"])
                    # Use `tmp.name` instead of `tmp.file` due to compatibility issues between Pillow and tempfile
                    self.returnSnip(Image.open(tmp.name))
            except:
                print(f"Failed to load saved screenshot! Did you cancel the screenshot?")
                print("If you don't have gnome-screenshot installed, please install it.")
                self.returnSnip()

        def snip_using_grim(self):
            try:
                p = subprocess.run('slurp', check=True, capture_output=True, text=True)
                geometry = p.stdout.strip()
                p = subprocess.run(['grim', '-g', geometry, '-'], check=True, capture_output=True)
                self.returnSnip(Image.open(io.BytesIO(p.stdout)))
            except:
                print(f"Failed to load saved screenshot! Did you cancel the screenshot?")
                print("If you don't have slurp and grim installed, please install them.")
                self.returnSnip()

        def returnSnip(self, img=None):
            # The Retry button passes `False` (its "checked" state), so reuse the last image.
            if img is None or img is False:
                img = self.img
            # print(type(self.img))
            self.toggleProcessing(True)
            self.retryButton.setEnabled(False)

            self.show()
            try:
                self.args.temperature = self.tempField.value()
                # if self.model.args.temperature == 0:
                #     self.model.args.temperature = 1e-8
            except:
                pass

            # Run the model in a separate thread
            self.thread = ModelThread(img=img, model=self.model, processor=self.processor,
                                      mode=self.args.mode, temp=self.args.temperature)
            self.thread.finished.connect(self.returnPrediction)
            self.thread.finished.connect(self.thread.deleteLater)
            self.thread.start()

        def returnPrediction(self, result):
            self.toggleProcessing(False)
            success, prediction = result["success"], result["prediction"]
            if success:
                self.displayPrediction(prediction)
                self.retryButton.setEnabled(True)
            else:
                self.webView.setHtml("")
                msg = QMessageBox()
                msg.setWindowTitle(" ")
                msg.setText("Prediction failed.")
                msg.exec()

        def displayPrediction(self, prediction=None):
            if self.isProcessing:
                pageSource = """<center>
                <img src="qrc:/icons/processing-icon-anim.svg" width="50", height="50">
                </center>"""
            else:
                if prediction is not None:
                    self.textbox.setText("${equation}$".format(equation=prediction))
                else:
                    prediction = self.textbox.toPlainText().strip('$')
                pageSource = """
                <html>
                <head><script id="MathJax-script" src="qrc:MathJax.js"></script>
                <script>
                MathJax.Hub.Config({messageStyle: 'none',tex2jax: {preview: 'none'}});
                MathJax.Hub.Queue(
                    function () {
                        document.getElementById("equation").style.visibility = "";
                    }
                    );
                </script>
                </head> """ + """
                <body>
                <div id="equation" style="font-size:1em; visibility:hidden">$${equation}$$</div>
                </body>
                </html>
                    """.format(equation=prediction)
            self.webView.setHtml(pageSource)


    class ModelThread(QThread):
        finished = pyqtSignal(dict)

        def __init__(self, img, model, processor, mode, temp):
            super().__init__()
            self.img = img
            self.model = model
            self.processor = processor
            self.mode = mode
            self.temp = temp

        def run(self):
            try:
                if self.mode == 1:
                    prediction = self.model.predict(self.img)
                    print(prediction)
                    print()
                elif self.mode == 2:
                    results = batch_inference([self.img], self.model, self.processor, temperature=self.temp)
                    # Convert the \\ in the raw output back to \
                    results = repr(results).replace("\\\\", "\\")
                    results = results.strip("['$$")
                    prediction = results.strip("$$']")
                    print(prediction)
                    print()
                elif self.mode == 3:
                    prediction = self.model.recognize_formula(self.img)
                    # prediction = merge_line_texts(prediction, auto_line_break=True)
                    # Strip the first and last 3 characters
                    # prediction = prediction[3:-3]
                    print(prediction)
                    print()
                else:
                    prediction = self.model.predict(self.img)
                    print(prediction)
                    print()
                self.finished.emit({"success": True, "prediction": prediction})
            except Exception as e:
                import traceback
                traceback.print_exc()
                self.finished.emit({"success": False, "prediction": None})


    class SnipWidget(QMainWindow):
        isSnipping = False

        def __init__(self, parent):
            super().__init__()
            self.parent = parent

            # Cover the bounding box of all monitors
            monitos = get_monitors()
            bboxes = np.array([[m.x, m.y, m.width, m.height] for m in monitos])
            x, y, _, _ = bboxes.min(0)
            w, h = bboxes[:, [0, 2]].sum(1).max(), bboxes[:, [1, 3]].sum(1).max()
            self.setGeometry(x, y, w-x, h-y)

            self.begin = QtCore.QPoint()
            self.end = QtCore.QPoint()

            self.mouse = Controller()

            # Create and start the timer
            self.factor = QGuiApplication.primaryScreen().devicePixelRatio()
            self.timer = QTimer(self)
            self.timer.timeout.connect(self.update_geometry_based_on_cursor_position)
            self.timer.start(500)

        def update_geometry_based_on_cursor_position(self):
            if not self.isSnipping:
                return

            # Update the geometry of the SnipWidget based on the current screen
            mouse_pos = QtGui.QCursor.pos()
            screen = QGuiApplication.screenAt(mouse_pos)
            if screen:
                self.factor = screen.devicePixelRatio()
                screen_geometry = screen.geometry()
                self.setGeometry(screen_geometry)

        def snip(self):
            self.isSnipping = True
            self.setWindowFlags(QtCore.Qt.WindowType.WindowStaysOnTopHint)
            QApplication.setOverrideCursor(QtGui.QCursor(QtCore.Qt.CursorShape.CrossCursor))

            self.show()

        def paintEvent(self, event):
            if self.isSnipping:
                brushColor = (0, 180, 255, 100)
                opacity = 0.3
            else:
                brushColor = (255, 255, 255, 0)
                opacity = 0

            self.setWindowOpacity(opacity)
            qp = QtGui.QPainter(self)
            qp.setPen(QtGui.QPen(QtGui.QColor('black'), 2))
            qp.setBrush(QtGui.QColor(*brushColor))
            qp.drawRect(QtCore.QRect(self.begin, self.end))

        def keyPressEvent(self, event):
            if event.key() == QtCore.Qt.Key.Key_Escape.value:
                QApplication.restoreOverrideCursor()
                self.close()
                self.parent.show()
            event.accept()

        def mousePressEvent(self, event):
            self.startPos = self.mouse.position

            self.begin = event.pos()
            self.end = self.begin
            self.update()

        def mouseMoveEvent(self, event):
            self.end = event.pos()
            self.update()

        def mouseReleaseEvent(self, event):
            self.isSnipping = False
            QApplication.restoreOverrideCursor()

            startPos = self.startPos
            endPos = self.mouse.position

            x1 = int(min(startPos[0], endPos[0]))
            y1 = int(min(startPos[1], endPos[1]))
            x2 = int(max(startPos[0], endPos[0]))
            y2 = int(max(startPos[1], endPos[1]))

            self.repaint()
            QApplication.processEvents()
            try:
                img = ImageGrab.grab(bbox=(x1, y1, x2, y2), all_screens=True)
            except Exception as e:
                if sys.platform == "darwin":
                    img = ImageGrab.grab(bbox=(x1//self.factor, y1//self.factor,
                                               x2//self.factor, y2//self.factor), all_screens=True)
                else:
                    raise e
            QApplication.processEvents()

            self.close()
            self.begin = QtCore.QPoint()
            self.end = QtCore.QPoint()
            # self.parent.returnSnip(img)
            self.parent.img = img
            self.parent.returnSnip(self.parent.img)


    def main(arguments):
        # with in_model_path():
        #     if os.name != 'nt':
        #         os.environ['QTWEBENGINE_DISABLE_SANDBOX'] = '1'
        #     app = QApplication(sys.argv)
        #     ex = App(arguments)
        #     sys.exit(app.exec())
        if os.name != 'nt':
            os.environ['QTWEBENGINE_DISABLE_SANDBOX'] = '1'
        app = QApplication(sys.argv)
        ex = App(arguments)
        sys.exit(app.exec())

Interface

Pix2Text

Nougat_Latex

Texify

streamlit

Offline Loading of Model Files

Pix2Text

Specify the model paths where the model is constructed:

    self.model = Pix2Text(
        analyzer_config=dict(
            model_name='mfd',
            model_type='yolov7_tiny',
            model_fp='./models/pix2text/mfd/mfd-yolov7_tiny.pt',
        ),
        formula_config=dict(
            model_name='mfr',
            model_backend='onnx',
            model_dir='./models/pix2text/mfr/',
        ),
        device='cpu',
    )

Of course, specifying the model paths in the call is not enough on its own.

When the cnstd and cnocr modules are initialized, they call functions that download certain model files. For example:

cnstd.LayoutAnalyzer

    class LayoutAnalyzer(object):
        def __init__(
            self,
            model_name: str = 'mfd',  # 'layout' or 'mfd'
            *,
            model_type: str = 'yolov7_tiny',  # currently supports [`yolov7_tiny`, `yolov7`]
            model_backend: str = 'pytorch',
            model_categories: Optional[List[str]] = None,
            model_fp: Optional[str] = None,
            model_arch_yaml: Optional[str] = None,
            root: Union[str, Path] = data_dir(),
            device: str = 'cpu',
            **kwargs,
        ):
            '''omitted'''
            self.text_ocr = prepare_ocr_engine(languages, text_config)

The default root comes from data_dir(), so change data_dir_default() to:

    def data_dir_default():
        """
        :return: default data directory depending on the platform and environment variables
        """
        # system = platform.system()
        # if system == 'Windows':
        #     return os.path.join(os.environ.get('APPDATA'), 'cnstd')
        # else:
        #     return os.path.join(os.path.expanduser("~"), '.cnstd')
        return os.path.join('./models/cnstd/')

This prevents it from silently downloading models, which would make offline use impossible.
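The same idea can be expressed without hard-coding the path. The following is a minimal sketch, not cnstd's actual code: `bundled_data_dir` is a hypothetical helper, and the environment variable name is passed in by the caller. cnstd and cnocr appear to honour `CNSTD_HOME` and `CNOCR_HOME` for this purpose, but treat those names as an assumption and check them against the installed version.

```python
import os
from pathlib import Path


def bundled_data_dir(package: str, env_var: str, root: str = "./models") -> Path:
    """Return the folder where `package` should look for its model files.

    Hypothetical helper: an explicit environment variable wins, otherwise a
    folder under the local `root` is used, never a per-user cache directory.
    """
    override = os.environ.get(env_var)
    if override:
        return Path(override)
    return Path(root) / package


if __name__ == "__main__":
    # With no override set, this prints the local folder for cnstd.
    print(bundled_data_dir("cnstd", "CNSTD_HOME"))
```

Keeping the lookup in one function makes it easier to move the models folder later without editing library sources again.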

Calling self.text_ocr = prepare_ocr_engine(languages, text_config) initializes cnocr through ocr_engine = CnOcr(**ocr_engine_config), which downloads models automatically.

    class CnOcr(object):
        def __init__(
            self,
            rec_model_name: str = 'densenet_lite_136-gru',
            *,
            det_model_name: str = 'ch_PP-OCRv3_det',
            cand_alphabet: Optional[Union[Collection, str]] = None,
            context: str = 'cpu',  # ['cpu', 'gpu', 'cuda']
            rec_model_fp: Optional[str] = './models/cnocr/cnocr-v2.3-densenet_lite_136-gru-epoch=004-ft-model.onnx',
            rec_model_backend: str = 'onnx',  # ['pytorch', 'onnx']
            rec_vocab_fp: Optional[Union[str, Path]] = None,
            rec_more_configs: Optional[Dict[str, Any]] = None,
            rec_root: Union[str, Path] = data_dir(),
            det_model_fp: Optional[str] = None,
            det_model_backend: str = 'onnx',  # ['pytorch', 'onnx']
            det_more_configs: Optional[Dict[str, Any]] = None,
            det_root: Union[str, Path] = det_data_dir(),
            **kwargs,
        ):

Here, rec_model_fp: Optional[str] = None is changed to rec_model_fp: Optional[str] = './models/cnocr/cnocr-v2.3-densenet_lite_136-gru-epoch=004-ft-model.onnx'.
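Because a missing file makes these libraries fall back to downloading, it can help to check the local files before any model is constructed. This is a minimal sketch under that assumption; `missing_model_files` is a hypothetical helper, and the paths listed are the ones used in this article.

```python
from pathlib import Path
from typing import Iterable, List

# Paths taken from the snippets in this article; adjust to your own layout.
REQUIRED_MODEL_FILES = [
    "models/pix2text/mfd/mfd-yolov7_tiny.pt",
    "models/pix2text/mfr/",
    "models/cnocr/cnocr-v2.3-densenet_lite_136-gru-epoch=004-ft-model.onnx",
]


def missing_model_files(paths: Iterable[str], base: str = ".") -> List[str]:
    """Return the entries of `paths` that do not exist under `base`."""
    base_dir = Path(base)
    return [p for p in paths if not (base_dir / p).exists()]


if __name__ == "__main__":
    # Report what is missing instead of letting a library try to download it.
    print("Missing model files:", missing_model_files(REQUIRED_MODEL_FILES))
```

Running this at startup gives a clear message on a machine without network access, rather than a download timeout deep inside a library.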

texify

Simply change the model path (MODEL_CHECKPOINT):

    class Settings(BaseSettings):
        # General
        TORCH_DEVICE: Optional[str] = None
        MAX_TOKENS: int = 384  # Will not work well above 768, since it was not trained with more
        MAX_IMAGE_SIZE: Dict = {"height": 420, "width": 420}
        MODEL_CHECKPOINT: str = "./models/texify/"
        BATCH_SIZE: int = 16  # Should use ~5GB of RAM
        DATA_DIR: str = "data"
        TEMPERATURE: float = 0.0  # Temperature for generation, 0.0 means greedy

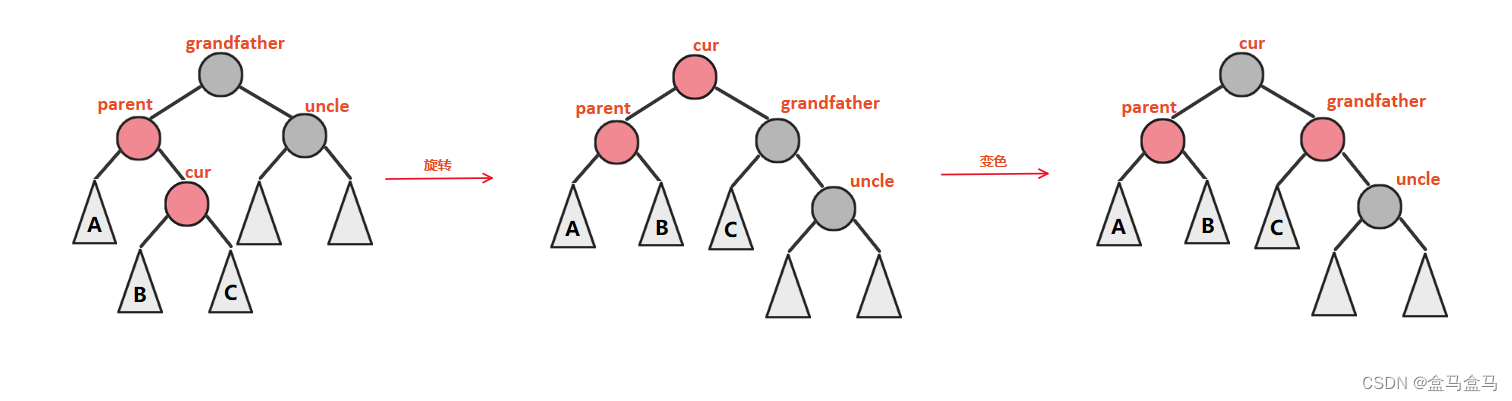

Nougat-LaTex

I rewrote it as a class so that it is easier to call:

    # -*- coding:utf-8 -*-
    # create: @time: 10/8/23 11:47
    import argparse

    import torch
    from PIL import Image
    from transformers import VisionEncoderDecoderModel
    from transformers.models.nougat import NougatTokenizerFast
    from nougat_latex.util import process_raw_latex_code
    from nougat_latex import NougatLaTexProcessor


    def parse_option():
        parser = argparse.ArgumentParser(prog="nougat inference config", description="model archiver")
        parser.add_argument("--pretrained_model_name_or_path", default="./models/nougat_latex/")
        parser.add_argument("--device", default="gpu")
        # Parsed as int so that the GUI's `args.mode == 1` style comparisons match.
        parser.add_argument("--mode", type=int, default=1, help='Use which model')
        parser.add_argument('-t', '--temperature', type=float, default=.333, help='Softmax sampling frequency')
        return parser.parse_args()


    class nougat_latex():
        def __init__(self, args=None):
            self.args = args
            if self.args.device == "gpu":
                self.device = torch.device("cuda:0")
            else:
                self.device = torch.device("cpu")
            # init model
            self.model = VisionEncoderDecoderModel.from_pretrained(args.pretrained_model_name_or_path).to(self.device)
            # init processor
            self.tokenizer = NougatTokenizerFast.from_pretrained(args.pretrained_model_name_or_path)
            self.latex_processor = NougatLaTexProcessor.from_pretrained(args.pretrained_model_name_or_path)

        def predict(self, image):
            if not image.mode == "RGB":
                image = image.convert('RGB')
            pixel_values = self.latex_processor(image, return_tensors="pt").pixel_values
            task_prompt = self.tokenizer.bos_token
            decoder_input_ids = self.tokenizer(task_prompt, add_special_tokens=False,
                                               return_tensors="pt").input_ids
            with torch.no_grad():
                outputs = self.model.generate(
                    pixel_values.to(self.device),
                    decoder_input_ids=decoder_input_ids.to(self.device),
                    max_length=self.model.decoder.config.max_length,
                    early_stopping=True,
                    pad_token_id=self.tokenizer.pad_token_id,
                    eos_token_id=self.tokenizer.eos_token_id,
                    use_cache=True,
                    num_beams=1,
                    bad_words_ids=[[self.tokenizer.unk_token_id]],
                    return_dict_in_generate=True,
                )
            sequence = self.tokenizer.batch_decode(outputs.sequences)[0]
            # Remove the special tokens before cleaning up the LaTeX code
            sequence = sequence.replace(self.tokenizer.eos_token, "").replace(
                self.tokenizer.pad_token, "").replace(self.tokenizer.bos_token, "")
            sequence = process_raw_latex_code(sequence)
            return sequence


    if __name__ == '__main__':
        # run_nougat_latex()
        args = parse_option()
        img = Image.open("test.png")  # Your image name here
        model = nougat_latex(args)
        results = model.predict(img)
        print(results)

The model path is supplied through parser.add_argument("--pretrained_model_name_or_path", default="./models/nougat_latex/").
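Pointing `from_pretrained` at a local folder already avoids a download. As an extra safeguard, the Hugging Face libraries can be told not to contact the Hub at all. This is a minimal sketch: `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` are the documented environment variables, while `enable_offline_mode` and `looks_like_model_dir` are hypothetical helper names, and the `config.json` check is only a heuristic.

```python
import os
from pathlib import Path


def enable_offline_mode() -> None:
    """Ask the Hugging Face libraries not to contact the Hub.

    Call this before `transformers` is imported so the setting is picked up.
    """
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def looks_like_model_dir(path: str) -> bool:
    """Heuristic check: a saved checkpoint folder normally has a config.json."""
    return (Path(path) / "config.json").is_file()


if __name__ == "__main__":
    enable_offline_mode()
    print("local checkpoint found:", looks_like_model_dir("./models/nougat_latex/"))
```

With offline mode enabled, a wrong or empty model folder fails immediately with a local error instead of attempting a network request.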

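Packaging as an exe

Packaging with pyinstaller

用 Pyinstaller 模块将 Python 程序打包成 exe 文件(全网最全面最详细,万字详述)_pyinstaller打包-CSDN博客 (CSDN blog post on packaging a Python program into an exe file with the Pyinstaller module)

I followed this blogger's method for packaging.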

pyi-makespec -D .\texify_pdf_ocr_app.py -p ocr_app.py -i icon4.ico

pyi-makespec -D pix2text_app.py -p gui_pix2text.py -i icon1.ico

pyi-makespec -D nougat_app.py -p gui_nougat.py -i icon2.ico

pyi-makespec -D texify_app.py -p gui_texify.py -i icon3.ico

pyi-makespec -D main.py -p texify_pdf_ocr_app.py -p gui.py -i icon.ico

This generates the main.spec file. Hidden imports and the models files are then brought in by editing main.spec.

    # -*- mode: python ; coding: utf-8 -*-
    from PyInstaller.utils.hooks import collect_data_files
    from PyInstaller.utils.hooks import copy_metadata

    datas = [("~/trial/Lib/site-packages/streamlit/runtime", "./streamlit/runtime")]
    datas += collect_data_files("streamlit")
    datas += copy_metadata("streamlit")

    a = Analysis(
        ['main.py'],
        pathex=['texify_pdf_ocr_app.py', 'gui.py', 'texify_app.py', 'nougat_app.py', 'pix2text_app.py',
                'ocr_app.py', 'gui_nougat.py', 'gui_texify.py', 'gui_pix2text.py'],
        binaries=[],
        datas=datas,
        hiddenimports=[],
        hookspath=['./hooks/'],
        hooksconfig={},
        runtime_hooks=[],
        excludes=[],
        noarchive=False,
    )
    pyz = PYZ(a.pure)

    exe = EXE(
        pyz,
        a.scripts,
        [],
        exclude_binaries=True,
        name='main',
        debug=False,
        bootloader_ignore_signals=False,
        strip=False,
        upx=True,
        console=True,
        disable_windowed_traceback=False,
        argv_emulation=False,
        target_arch=None,
        codesign_identity=None,
        entitlements_file=None,
        icon=['icon.ico'],
    )
    coll = COLLECT(
        exe,
        a.binaries,
        a.datas,
        strip=False,
        upx=True,
        upx_exclude=[],
        name='main',
    )

Note that pyinstaller==6.4.0 produces a different output layout: there is now an _internal folder that contains the library files, while the .exe sits outside it. This differs from pyinstaller==5.9.0 and earlier, so bundling the resource folder /models/ is awkward; I simply copied it over by hand.
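Relative paths such as `./models/...` depend on the current working directory, which is one reason hand-copied resources can be hard to find. A minimal sketch of resolving them relative to the application instead, assuming the models folder is copied next to the .exe: `sys.frozen` is the attribute PyInstaller sets in a packaged app, while `app_base_dir` and `model_path` are hypothetical helper names.

```python
import sys
from pathlib import Path


def app_base_dir(script_file: str) -> Path:
    """Return the folder that holds the .exe (frozen) or the script (source run)."""
    if getattr(sys, "frozen", False):
        # In a PyInstaller build, sys.executable is the packaged .exe.
        return Path(sys.executable).resolve().parent
    return Path(script_file).resolve().parent


def model_path(script_file: str, *parts: str) -> Path:
    """Build a path inside the `models` folder that sits beside the application."""
    return app_base_dir(script_file) / "models" / Path(*parts)


if __name__ == "__main__":
    # Assumption: models/ was copied next to main.exe, as described above.
    print(model_path(sys.argv[0], "texify"))
```

A call such as `model_path(__file__, "pix2text", "mfr")` could then replace the literal `'./models/pix2text/mfr/'`, so that the app also works when started from a different directory.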

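Problems Encountered When Packaging with pyinstaller

File "subprocess.py", line 1420, in _execute_child FileNotFoundError: [WinError 2] The system cannot find the file specified.

See these two blog posts:

FileNotFoundError WinError 2 系统找不到指定的文件_winerror2系统找不到指定文件python-CSDN博客 (CSDN blog post on FileNotFoundError WinError 2)

subprocess报错FileNotFoundError: WinError 2 系统找不到指定的文件_subprocess.popen filenotfounderror: [winerror 2] 系-CSDN博客 (CSDN blog post on subprocess raising FileNotFoundError WinError 2)

The error is raised when subprocess.Popen executes the command, probably because the command is not recognized without going through CMD, so I changed the call to: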

subprocess.run(["streamlit", "run", ocr_app_path],shell=True)
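To see whether the command can be resolved at all before it is run, the lookup can be done explicitly with `shutil.which`. This is a minimal sketch of an alternative to `shell=True`, not the fix used in this article: `build_command` is a hypothetical helper, and the `python -m` fallback only makes sense in a normal (non-packaged) Python environment.

```python
import shutil
import sys
from typing import List


def build_command(tool: str, args: List[str]) -> List[str]:
    """Return an argv list for `tool`, resolved through PATH when possible.

    Falls back to `<python> -m <tool>` when no executable is found on PATH.
    """
    resolved = shutil.which(tool)
    if resolved is not None:
        return [resolved, *args]
    return [sys.executable, "-m", tool, *args]


if __name__ == "__main__":
    # Print the command instead of running it, to inspect what would be executed.
    print(build_command("streamlit", ["run", "ocr_app.py"]))
```

If the printed list starts with a full path to streamlit.exe, the executable is on PATH; if it falls back to the interpreter, the original call would have failed with WinError 2 on that machine.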

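Packaging streamlit with pyinstaller

First, I used this blogger's method:

利用pyinstaller打包streamlit移植到其他电脑上使用_streamlit打包-CSDN博客 (CSDN blog post on packaging streamlit with pyinstaller for use on other computers)

Next, this error appears: 'streamlit' is not recognized as an internal or external command, operable program or batch file.

The call subprocess.run(["streamlit", "run", ocr_app_path],shell=True) in run_app() invokes streamlit.exe, so copying ~~...\envs\trial\Scripts\streamlit.exe into the generated directory as ~~\text\dist\main\streamlit.exe makes it work.

I went with this approach, but found that it only works on my own computer.

Most likely subprocess.run is to blame. I rewrote the launcher using Helloorld_11's calling method.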

    import subprocess
    import os
    import sys

    import streamlit.web.cli as stcli
    import texify

    # def run_app():
    #     cur_dir = os.path.dirname(os.path.abspath(__file__))
    #     ocr_app_path = os.path.join(cur_dir, "ocr_app.py")
    #     subprocess.run(["streamlit", "run", ocr_app_path],shell=True)

    # import ocr_app


    def resolve_path(path):
        # resolved_path = os.path.abspath(os.path.join(os.getcwd(), path))
        # Resolve relative to this file rather than the current working directory
        cur_dir = os.path.dirname(os.path.abspath(__file__))
        resolved_path = os.path.join(cur_dir, path)
        return resolved_path


    if __name__ == '__main__':
        # Call the streamlit CLI in-process instead of spawning streamlit.exe
        sys.argv = [
            "streamlit",
            "run",
            resolve_path("ocr_app.py"),
            "--global.developmentMode=false",
        ]
        sys.exit(stcli.main())

This finally solved the problem, so subprocess.run was most likely the culprit.

ValueError: When using CUDAExecutionProvider, the parameters combination use_cache=False, use_io_binding=True is not supported. Please either pass use_cache=True, use_io_binding=True (default), or use_cache=False, use_io_binding=False.

pip uninstall onnxruntime-gpu

pip install onnxruntime

See Issue #65 · breezedeus/Pix2Text (github.com) and CnOCR/P2T 常见问题(FAQ) - 飞书云文档 (feishu.cn). In use, I found Pix2Text's GPU support to be very poor; I do not know whether the fault lies with ONNX or with Pix2Text. Packaging produces errors such as:

- onnx RegisterTensorRTPluginsAsCustomOps Please install TensorRT libraries as mentioned in the GPU requirements page, make sure they're in the PATH or LD_LIBRARY_PATH, and that your GPU is supported.

- LoadLibrary failed with error 126 "" when trying to load "~\text\dist\main\_internal\onnxruntime\capi\onnxruntime_providers_shared.dll"

So I simply switched everything to CPU.

    self.model = Pix2Text(
        analyzer_config=dict(
            model_name='mfd',
            model_type='yolov7_tiny',
            model_fp='./models/pix2text/mfd/mfd-yolov7_tiny.pt',
        ),
        formula_config=dict(
            model_name='mfr',
            model_backend='onnx',
            model_dir='./models/pix2text/mfr/',
        ),
        device='cpu',
    )
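For code that creates ONNX Runtime sessions directly, the CPU-only choice can be made explicit through the provider list. This is a minimal sketch and not part of the Pix2Text API: `pick_providers` is a hypothetical helper, while `CUDAExecutionProvider` and `CPUExecutionProvider` are ONNX Runtime's own provider names.

```python
from typing import List, Sequence


def pick_providers(available: Sequence[str], allow_gpu: bool = False) -> List[str]:
    """Choose ONNX Runtime execution providers, defaulting to CPU only."""
    chosen: List[str] = []
    if allow_gpu and "CUDAExecutionProvider" in available:
        chosen.append("CUDAExecutionProvider")
    if "CPUExecutionProvider" in available:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    # The list here stands in for onnxruntime.get_available_providers().
    print(pick_providers(["CUDAExecutionProvider", "CPUExecutionProvider"]))
```

The result would be passed as the `providers` argument of `onnxruntime.InferenceSession`; with `allow_gpu=False` the CUDA and TensorRT DLLs are never requested, which avoids the LoadLibrary error in a packaged app.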

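[WinError 3] The system cannot find the path specified.: '~~\dist\main\_internal\cnstd\yolov7\torch_utils.pyc'

Resolved by following pyinstaller 编译成exe 提示缺少torch_utils.pyc_人工智能-CSDN问答 (CSDN Q&A thread on a missing torch_utils.pyc after compiling to exe with pyinstaller).

This problem arises when the cnstd module is imported. I copied the cnstd library files directly into ~~\\dist\\main\\_internal and renamed torch_utils.py to torch_utils.pyc, after which the error no longer appeared.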

ImportError: DLL load failed while importing aggregations:

The pandas DLLs, i.e. the pandas.libs folder, were not bundled; simply copy the folder over.

There is also:

Importing the numpy C-extensions failed. This error can happen for many reasons, often due to issues with your setup or how NumPy was installed.

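I referred to 【踩坑】修复报错 you should not try to import numpy from its source directory-CSDN博客 (CSDN blog post on fixing the error "you should not try to import numpy from its source directory").

The numpy DLLs, i.e. the numpy.libs folder, were not bundled; simply copy the folder over.

Either pyinstaller or numpy is to blame. This bug will presumably be fixed in a later release.

ImportError: DLL load failed while importing _path: The specified module could not be found.

The matplotlib DLLs, i.e. the matplotlib.libs folder, were not bundled; simply copy the folder over.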
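The three manual copies above can be scripted. This is a minimal sketch using only the standard library; `copy_vendor_libs` is a hypothetical helper, and the two directory arguments are placeholders for your own environment's site-packages folder and the generated `_internal` folder.

```python
import shutil
from pathlib import Path
from typing import Iterable, List


def copy_vendor_libs(
    site_packages: str,
    internal_dir: str,
    names: Iterable[str] = ("numpy.libs", "pandas.libs", "matplotlib.libs"),
) -> List[str]:
    """Copy each existing `<name>` folder from site-packages into `_internal`.

    Returns the names that were actually copied; missing folders are skipped.
    """
    copied = []
    for name in names:
        src = Path(site_packages) / name
        if src.is_dir():
            # dirs_exist_ok (Python 3.8+) lets the copy be repeated after a rebuild.
            shutil.copytree(src, Path(internal_dir) / name, dirs_exist_ok=True)
            copied.append(name)
    return copied
```

Running it after every `pyinstaller main.spec` keeps the build reproducible, and the returned list shows which folders were found in the environment.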

All files that need to be copied into _internal

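Download Links

This one is from 万能君的软件库 (Wannengjun's Software Library):

公式识别离线小工具V1.1更新下载地址 (qq.com) (download page for the offline formula recognition tool V1.1 update)

Quark Netdisk: link: https://pan.quark.cn/s/0f74765bb95f extraction code: U9cq. Note that the extraction directory must not contain Chinese characters.

Features:

- Recognizes screenshots taken with QQ, WeChat, or other screenshot tools;

- Recognizes formulas from screenshots;

- Supports copying the result in LaTeX and MathML formats.

My LaTeX OCR:

Quark Netdisk: link: https://pan.quark.cn/s/d693012c2ea9 extraction code: 2Da2. There is no restriction on the extraction directory.

Features:

- Integrates 3 models, of which Pix2Text supports handwritten text.

- Can crop formulas from PDF pages and recognize them (implemented with streamlit).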

Project Files

longchentian/Pix2Text-nougat-texify-GUI: GUI for offline LaTex OCR tool for Pix2Text nougat texify three models (github.com)

In features it falls short of the former tool. My abilities are limited, so I have published the build process here; those who are able can take it further.

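Afterword

- A GUI that performs LaTeX recognition from a hotkey-triggered screenshot.

- LaTeX to MathML conversion, with the LaTeX code directly copyable.

- Monitoring the clipboard for images.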