Fine-tuning Llama 3 with Unsloth + Colab

and running the fine-tuned, quantized model with Ollama

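The release of Llama 3 brought a strong new performer to the LLM market, one that can be deployed securely and customized freely. To fit our own scenarios, we can fine-tune it for our specific tasks and problems. For example, Llama 3's Chinese support is weak, so we can fine-tune it to handle Chinese better.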

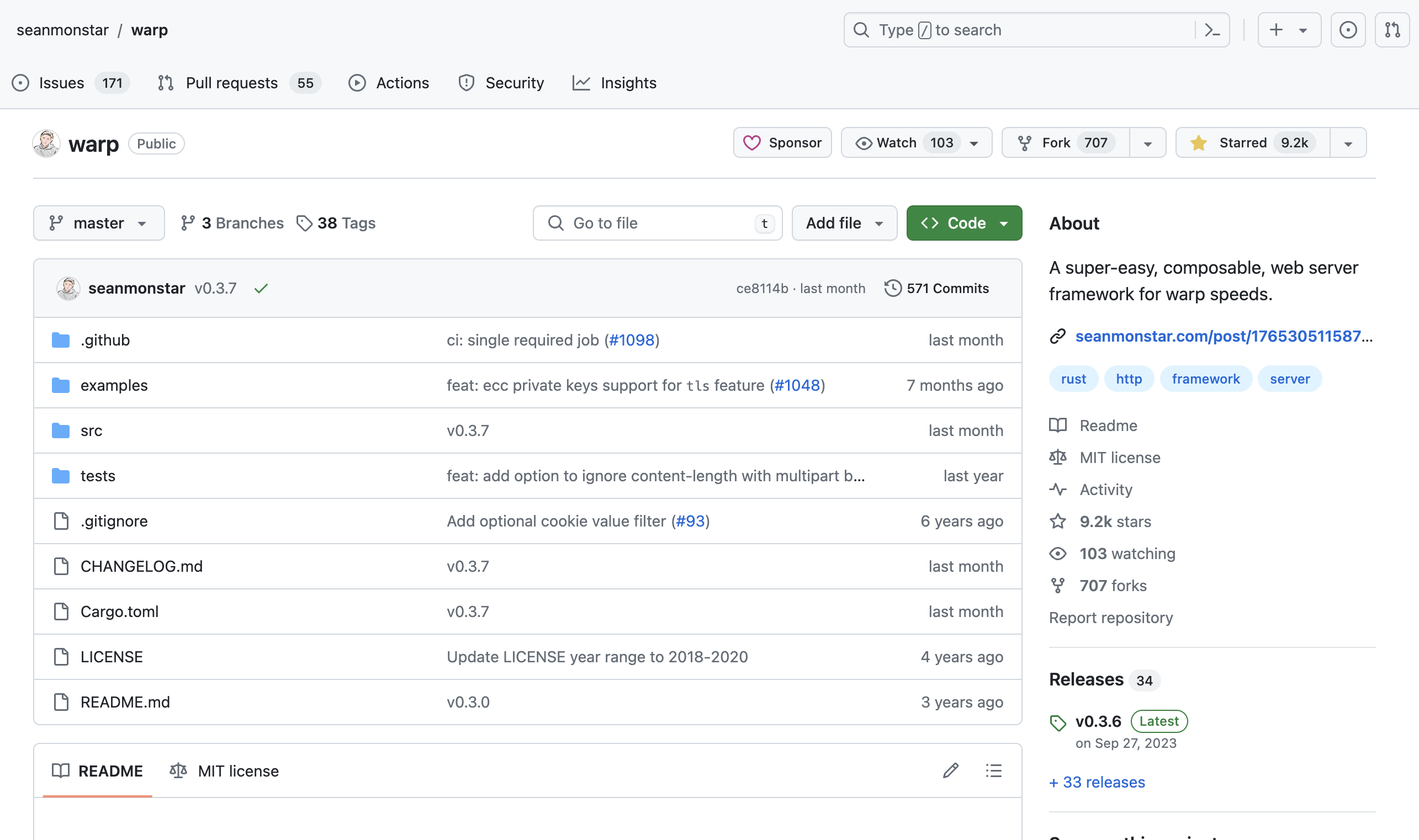

1. Visit Unsloth

URL: https://github.com/unslothai/unsloth

Open the unsloth repository, find Llama 3, and click the "Start on Colab" option.

[Note] It is best to save your own copy of the notebook and follow the steps below in that copy.

This takes us to the Google Colab interface.

[Note] Google Colab is a free cloud service with GPU support, which makes it convenient for learning and debugging.

All of the code below is run in Colab.

2. Authorize Google Drive

Connect Google Drive so that the trained model can be saved to the cloud.

from google.colab import drive

drive.mount('/content/drive')

Then connect to a T4 GPU runtime.
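A T4 is a Turing-generation GPU (CUDA compute capability 7.5) without native bfloat16 support, so training on it falls back to float16; the fp16/bf16 switch in the training arguments makes this choice at run time. A minimal sketch of that decision, assuming the rule of thumb that bfloat16 needs compute capability 8.0 (Ampere) or newer; the helper name pick_precision is hypothetical:

```python
# Sketch: choose a training precision from a GPU's CUDA compute capability.
# Assumption: bfloat16 needs compute capability 8.0 (Ampere) or newer.


def pick_precision(major: int, minor: int) -> str:
    """Return "bfloat16" on Ampere-or-newer GPUs and "float16" otherwise."""
    if (major, minor) >= (8, 0):
        return "bfloat16"
    return "float16"


if __name__ == "__main__":
    # On a live runtime, torch.cuda.get_device_capability() returns (major, minor);
    # the capabilities below are hard-coded examples.
    for name, capability in {"Tesla T4": (7, 5), "A100": (8, 0)}.items():
        print(name, "->", pick_precision(*capability))
```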

3. Install Unsloth

%%capture

import torch
!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

!pip install --no-deps "xformers<0.0.26" trl peft accelerate bitsandbytes

4. Download the pretrained model

Download the model you want to fine-tune; both 16-bit LoRA and 4-bit QLoRA are supported.

max_seq_length can be set to any value, because RoPE scaling is applied automatically using kaiokendev's method.
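As far as I understand it, kaiokendev's method is linear position interpolation: token positions are divided by a scale factor before the rotary angles are computed, so a longer context reuses the angle range the model saw during pre-training. The sketch below illustrates only that idea in plain Python; it is not Unsloth's internal code, and the function name and default base are assumptions (10000 is the original RoPE value; Llama 3's config uses 500000).

```python
import math


def rope_angles(position: float, head_dim: int, base: float = 10000.0,
                scale: float = 1.0) -> list[float]:
    """Rotary angles for one token position, with optional linear scaling.

    Linear scaling (position interpolation) divides the position by `scale`,
    squeezing a context `scale` times longer into the original position range.
    """
    scaled_position = position / scale
    # One frequency per pair of dimensions: base ** (-2i / head_dim)
    return [scaled_position * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


# Position 2048 without scaling ...
plain = rope_angles(2048, head_dim=128)
# ... gives the same angles as position 4096 with scale = 2.
scaled = rope_angles(4096, head_dim=128, scale=2.0)
print(all(math.isclose(a, b) for a, b in zip(plain, scaled)))
```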

Thanks to PR 26037, pre-quantized 4-bit models download 4x faster!

Unsloth hosts pre-quantized 4-bit versions of Llama, Mistral, Gemma and other models under the unsloth/ namespace on Hugging Face.
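A back-of-the-envelope check of why the 4-bit model suits a free Colab T4 (16 GB): weight memory is roughly parameter count x bits per weight / 8. A minimal sketch assuming a round 8 billion parameters; it counts the weights only, so real usage during training is higher. The helper name is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10**9 bytes).

    Ignores quantization constants, activations, the KV cache and optimizer
    state, so actual VRAM use during training is higher.
    """
    return num_params * bits_per_weight / 8 / 1e9


params = 8.0e9  # assumption: a round figure for an "8B" model
for label, bits in [("16-bit", 16), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

That is about 4 GB for 4-bit weights against about 16 GB for 16-bit weights, which alone would fill the T4.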

from unsloth import FastLanguageModel
import torch

max_seq_length = 2048  # any value works; RoPE scaling is handled internally
dtype = None           # None = auto-detect (float16 on T4/V100, bfloat16 on Ampere+)
load_in_4bit = True    # 4-bit quantization to cut memory use; can be False

# Pre-quantized 4-bit models that download faster
fourbit_models = [
    "unsloth/mistral-7b-bnb-4bit",
    "unsloth/mistral-7b-instruct-v0.2-bnb-4bit",
    "unsloth/llama-2-7b-bnb-4bit",
    "unsloth/gemma-7b-bnb-4bit",
    "unsloth/gemma-7b-it-bnb-4bit",
    "unsloth/gemma-2b-bnb-4bit",
    "unsloth/gemma-2b-it-bnb-4bit",
    "unsloth/llama-3-8b-bnb-4bit",
]

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/llama-3-8b-bnb-4bit",
    max_seq_length = max_seq_length,
    dtype = dtype,
    load_in_4bit = load_in_4bit,
    # token = "hf_...",  # needed only for gated models
)

5. Set the LoRA training parameters

We now add LoRA adapters, so only about 1% to 10% of all parameters need to be updated!
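To put the parameter fraction in numbers: a LoRA adapter on a linear layer with in_features inputs and out_features outputs adds two matrices, A (r x in) and B (out x r), i.e. r * (in + out) trainable parameters. The sketch counts them for the seven target modules used in get_peft_model, assuming Llama-3-8B's published shapes (hidden size 4096, MLP size 14336, 32 layers, 8 key/value heads of dimension 128) and a total of about 8.03 billion parameters; the names are illustrative.

```python
# Assumed Llama-3-8B shapes: hidden 4096, MLP 14336, 32 layers,
# 8 KV heads x 128 head_dim = 1024 output features for k_proj / v_proj.
HIDDEN, MLP, LAYERS, KV_DIM = 4096, 14336, 32, 8 * 128

# (in_features, out_features) of each target module in one decoder layer
MODULES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}


def lora_params(r: int) -> int:
    """A (r x in) plus B (out x r) per module, summed over modules and layers."""
    per_layer = sum(r * (fan_in + fan_out) for fan_in, fan_out in MODULES.values())
    return per_layer * LAYERS


n = lora_params(16)
print(f"r=16: {n:,} trainable parameters, ~{n / 8.03e9:.2%} of ~8.03B")
```

For r = 16 this gives 41,943,040 trainable parameters, roughly 0.5% of the model, so this particular rank actually sits below the 1% to 10% range; the count grows linearly with r.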

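model = FastLanguageModel.get_peft_model(
    model,
    r = 16,  # LoRA rank; any value > 0, e.g. 8, 16, 32, 64, 128
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 16,
    lora_dropout = 0,  # any value works; 0 is the optimized path
    bias = "none",     # any value works; "none" is the optimized path
    use_gradient_checkpointing = "unsloth",  # Unsloth's memory-saving checkpointing
    random_state = 3407,
    use_rslora = False,   # rank-stabilized LoRA
    loftq_config = None,  # LoftQ initialization
)

6. Data preparation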

Here we use the Alpaca dataset from yahma; you can replace this part with your own data preparation.
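If you bring your own data, one straightforward route is to store it in the same instruction / input / output columns that the Alpaca formatting code expects, as JSON Lines. A minimal sketch with made-up records and a hypothetical file name, my_file.jsonl; ensure_ascii=False keeps Chinese text human-readable in the file.

```python
import json
from pathlib import Path

# Made-up records in the instruction / input / output schema; "input" may be empty.
# The first instruction means "Translate the following sentence into English."
records = [
    {"instruction": "把下面的句子翻译成英文。", "input": "今天天气很好。", "output": "The weather is nice today."},
    {"instruction": "Name the capital of France.", "input": "", "output": "Paris."},
]


def write_jsonl(rows: list[dict], path: Path) -> None:
    """Write one JSON object per line (JSON Lines), keeping non-ASCII text readable."""
    with path.open("w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


out = Path("my_file.jsonl")  # hypothetical file name
write_jsonl(records, out)
```

The file can then be loaded with load_dataset("json", data_files="my_file.jsonl", split="train") and formatted exactly like the Alpaca data.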

alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS_TOKEN = tokenizer.eos_token  # must be appended, otherwise generation never stops

def formatting_prompts_func(examples):
    instructions = examples["instruction"]
    inputs = examples["input"]
    outputs = examples["output"]
    texts = []
    for instruction, input_text, output in zip(instructions, inputs, outputs):
        # Fill the template and terminate each example with EOS
        text = alpaca_prompt.format(instruction, input_text, output) + EOS_TOKEN
        texts.append(text)
    return { "text" : texts, }

from datasets import load_dataset

dataset = load_dataset("yahma/alpaca-cleaned", split = "train")
dataset = dataset.map(formatting_prompts_func, batched = True,)
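With batched = True, datasets.map passes formatting_prompts_func a dict that maps each column name to a list of values, not a single row. The sketch below runs a standalone copy of the same template and function on a hand-built two-row batch so you can inspect the exact training text. The EOS string is an assumption (what I believe the Llama 3 base tokenizer's eos_token is); in the notebook, keep using tokenizer.eos_token.

```python
# Standalone copy of the Alpaca template; "<|end_of_text|>" is an assumed
# stand-in for tokenizer.eos_token so this runs without a tokenizer.
alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""
EOS_TOKEN = "<|end_of_text|>"


def formatting_prompts_func(examples: dict) -> dict:
    """With batched=True, `examples` maps each column name to a list of values."""
    texts = [
        alpaca_prompt.format(instruction, input_text, output) + EOS_TOKEN
        for instruction, input_text, output in zip(
            examples["instruction"], examples["input"], examples["output"]
        )
    ]
    return {"text": texts}


# A hand-built batch of two rows, shaped the way datasets.map(batched=True) passes it
batch = {
    "instruction": ["Continue the Fibonacci sequence.", "Name the capital of France."],
    "input": ["1, 1, 2, 3, 5, 8", ""],
    "output": ["13, 21, 34", "Paris."],
}
for text in formatting_prompts_func(batch)["text"]:
    print(text, end="\n\n")
```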

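To load your own dataset instead, use one of the following (each assignment is an alternative):

from datasets import load_dataset

# JSON / JSON Lines: path="json"
dataset = load_dataset("json", data_files="my_file.json")
# CSV: path="csv"
dataset = load_dataset("csv", data_files="my_file.csv")
# Plain text: path="text"
dataset = load_dataset("text", data_files={"train": ["my_text_1.txt", "my_text_2.txt"], "test": "my_test_file.txt"})
# Pickled pandas DataFrame: path="pandas" (for an in-memory DataFrame, use Dataset.from_pandas(df))
# Images: path="imagefolder"
dataset = load_dataset(path="imagefolder", data_dir=r"D:\Desktop\workspace\code\loaddataset\data\images")

# With data_files, load_dataset returns a DatasetDict: select the "train" split
# (or pass split="train") and apply formatting_prompts_func before training.

7. Train the model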

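Now train with Hugging Face TRL's SFTTrainer. We set max_steps = 60 to speed things up; for a full run, set num_train_epochs = 1 and remove max_steps (its default of -1 means no step limit). TRL's DPOTrainer is supported as well. For more details, see the TRL SFT documentation.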

from trl import SFTTrainer
from transformers import TrainingArguments

# Keyword arguments as accepted by the TRL versions of that time; newer TRL
# releases move several of them (e.g. dataset_text_field) into SFTConfig.
trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = max_seq_length,
    dataset_num_proc = 2,
    packing = False,  # packing short sequences can speed up training
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,  # effective batch size 2 x 4 = 8
        warmup_steps = 5,
        max_steps = 60,  # or: num_train_epochs = 1 for a full run
        learning_rate = 2e-4,
        fp16 = not torch.cuda.is_bf16_supported(),
        bf16 = torch.cuda.is_bf16_supported(),
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        output_dir = "outputs",
    ),
)
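Two quantities these arguments imply: each optimizer step sees per_device_train_batch_size x gradient_accumulation_steps = 2 x 4 = 8 examples, so 60 steps cover only 480 examples; and lr_scheduler_type = "linear" with warmup_steps = 5 ramps the learning rate up over the first 5 steps, then decays it linearly to zero at the last step. A plain-Python sketch of both, written to follow the shape of the transformers linear-with-warmup schedule rather than its actual code; the helper names are hypothetical.

```python
def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int = 1) -> int:
    """Examples that contribute to one optimizer step."""
    return per_device * grad_accum * num_gpus


def linear_lr(step: int, base_lr: float, warmup: int, total: int) -> float:
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup:
        return base_lr * step / max(1, warmup)
    return base_lr * max(0.0, (total - step) / max(1, total - warmup))


bs = effective_batch_size(per_device=2, grad_accum=4)
print(f"{bs} examples per optimizer step, {bs * 60} examples in 60 steps")
for step in (0, 1, 5, 30, 60):
    print(step, linear_lr(step, base_lr=2e-4, warmup=5, total=60))
```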

Show the current memory stats:

gpu_stats = torch.cuda.get_device_properties(0)
start_gpu_memory = round(torch.cuda.max_memory_reserved() / 1024 / 1024 / 1024, 3)  # GB reserved so far
max_memory = round(gpu_stats.total_memory / 1024 / 1024 / 1024, 3)  # total GPU memory in GB
print(f"GPU = {gpu_stats.name}. Max memory = {max_memory} GB.")
print(f"{start_gpu_memory} GB of memory reserved.")

Start training:

trainer_stats = trainer.train()
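To see how much memory training itself added, compare the peak reserved memory after trainer.train() with the value recorded before it. A sketch with the byte counts passed in as plain numbers so it runs without a GPU; the values in the example are made up, and on Colab they would come from torch.cuda.max_memory_reserved() (before and after training) and torch.cuda.get_device_properties(0).total_memory. The helper name is hypothetical.

```python
GIB = 1024 ** 3  # the original code divides by 1024 three times, i.e. GiB


def memory_report(start_reserved: int, peak_reserved: int, total: int) -> dict:
    """Summarize peak reserved GPU memory; all inputs are in bytes."""
    return {
        "peak_gib": round(peak_reserved / GIB, 3),
        "training_gib": round((peak_reserved - start_reserved) / GIB, 3),
        "peak_pct": round(100 * peak_reserved / total, 3),
    }


# Made-up numbers: 6 GiB reserved before training, 7 GiB peak, 15 GiB card
report = memory_report(start_reserved=6 * GIB, peak_reserved=7 * GIB, total=15 * GIB)
print(report)
```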

8. Test the model

Let's run the model! Change the instruction and input, and leave the output empty.

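FastLanguageModel.for_inference(model)  # switch Unsloth into its faster inference mode
inputs = tokenizer(
    [
        alpaca_prompt.format(
            "Continue the Fibonacci sequence.",  # instruction
            "1, 1, 2, 3, 5, 8",                  # input
            "",                                  # output: leave blank for generation
        )
    ], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 64, use_cache = True)
tokenizer.batch_decode(outputs)

You can also use a TextStreamer for streaming inference, so you can watch the output appear token by token instead of waiting for the whole answer!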

# alpaca_prompt = copy from above
FastLanguageModel.for_inference(model)
inputs = tokenizer(
    [
        alpaca_prompt.format(
            "Continue the Fibonacci sequence.",
            "1, 1, 2, 3, 5, 8",
            "",
        )
    ], return_tensors = "pt").to("cuda")

from transformers import TextStreamer
text_streamer = TextStreamer(tokenizer)
_ = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 128)

9. Save and load the new model

To save the final model as LoRA adapters, use save_pretrained to save it locally.

[Note] This saves only the LoRA adapters, not the full model. To save in 16-bit or GGUF format, see the GGUF section below.
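The adapter folder holds only the small matrices A and B for each target module; the base weights W stay in the original checkpoint. Exporting a standalone 16-bit or GGUF model therefore means folding the update in, W' = W + (alpha / r) * B * A, which is the scaling PEFT applies when use_rslora = False. A toy plain-Python sketch of that merge, for illustration only (Unsloth performs the merge itself when exporting); the helper names are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, enough for the toy example."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(W, A, B, alpha: float, r: int):
    """Fold a LoRA update into the base weight: W + (alpha / r) * B @ A.

    W is (out x in), B is (out x r), A is (r x in).
    """
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]   # toy base weight: out = 2, in = 3
A = [[1.0, 2.0, 3.0]]   # rank r = 1
B = [[1.0],
     [0.0]]
print(merge_lora(W, A, B, alpha=16, r=16))
```

With lora_alpha = r = 16, as in this tutorial, the scale is 1, so the merged weight is simply W + B * A.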

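import os

current_path = os.getcwd()
model.save_pretrained("lora_model")      # saves only the LoRA adapter weights
tokenizer.save_pretrained("lora_model")  # keep the tokenizer next to the adapters
print(f"Saved to {current_path}/lora_model")

Test the LoRA model: to load the LoRA adapters we just saved and run inference with them, keep the condition below set to True (set it to False to skip this step):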

if True:
    from unsloth import FastLanguageModel
    model, tokenizer = FastLanguageModel.from_pretrained(
        model_name = "lora_model",
        max_seq_length = max_seq_length,
        dtype = dtype,
        load_in_4bit = load_in_4bit,
    )
    FastLanguageModel.for_inference(model)  # Enable native 2x faster inference

# alpaca_prompt = You MUST copy from above!
inputs = tokenizer(
    [
        alpaca_prompt.format(
            "What is a famous tall tower in Paris?",  # instruction
            "",  # input
            "",  # output: leave blank for generation
        )
    ], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 64, use_cache = True)
tokenizer.batch_decode(outputs)
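The decoded string contains the whole prompt followed by the answer, plus special tokens such as the EOS marker. If you only want the answer, a small post-processing step can cut it out. A minimal sketch with a made-up decoded string; the function name is hypothetical, and the EOS default assumes the Llama 3 base model's <|end_of_text|> token. Alternatively, tokenizer.batch_decode(outputs, skip_special_tokens=True) drops special tokens during decoding.

```python
def extract_response(decoded: str, eos_token: str = "<|end_of_text|>") -> str:
    """Return only the model's answer from a decoded Alpaca-style generation."""
    marker = "### Response:\n"
    _, found, answer = decoded.partition(marker)  # text after the template's marker
    if not found:
        return decoded.strip()
    answer = answer.split(eos_token, 1)[0]   # drop EOS and anything after it
    answer = answer.split("\n### ", 1)[0]    # stop if the model starts a new section
    return answer.strip()


# Made-up example of what batch_decode might return for the Paris prompt
sample = (
    "<|begin_of_text|>Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately completes the "
    "request.\n\n### Instruction:\nWhat is a famous tall tower in Paris?\n\n"
    "### Input:\n\n\n### Response:\nThe Eiffel Tower.<|end_of_text|>"
)
print(extract_response(sample))
```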

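GGUF / llama.cpp conversion: saving to GGUF for llama.cpp is now supported natively! Unsloth clones llama.cpp and saves as q8_0 by default; all methods, such as q4_k_m, are allowed. Use save_pretrained_gguf to save locally and push_to_hub_gguf to upload to Hugging Face.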

Supported quantization methods include q8_0, q4_k_m and f16 (for the full list, see the unslothai Wiki page):

if True: model.save_pretrained_gguf("model", tokenizer, quantization_method = "q4_k_m")
if False: model.save_pretrained_gguf("model", tokenizer, quantization_method = "f16")

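Optionally, quantize the F16 model to Q8_0 to reduce its size (not needed if you already exported Q4_K_M):

![ -d "llama.cpp" ] || git clone https://github.com/ggerganov/llama.cpp.git
!cd llama.cpp && make
# Uncomment to quantize; the binary is built inside llama.cpp/. Newer llama.cpp
# versions build with CMake and name this tool llama-quantize.
# !./llama.cpp/quantize /content/model-unsloth.F16.gguf /content/model-unsloth.Q8_0.gguf q8_0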
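Why F16 to Q8_0 roughly halves the file: Q8_0 splits each tensor into blocks of 32 weights, stores one 16-bit scale per block and each weight as a signed 8-bit integer, i.e. 8.5 bits per weight versus 16. A simplified sketch of that scheme, modeled on llama.cpp's reference Q8_0 quantizer as I understand it; it keeps the scale in full precision instead of rounding it to 16 bits, and the helper names are hypothetical.

```python
import math

QK8_0 = 32  # Q8_0 stores weights in blocks of 32


def _round_half_away(v: float) -> int:
    """Round like C's roundf (halves away from zero), unlike Python's round()."""
    return int(math.copysign(math.floor(abs(v) + 0.5), v))


def quantize_q8_0(block: list[float]) -> tuple[float, list[int]]:
    """Quantize one block to a scale d and 32 signed 8-bit integers."""
    assert len(block) == QK8_0
    amax = max(abs(x) for x in block)
    d = amax / 127.0                     # largest magnitude maps to +/-127
    inv_d = 1.0 / d if d else 0.0
    return d, [_round_half_away(x * inv_d) for x in block]


def dequantize_q8_0(d: float, qs: list[int]) -> list[float]:
    return [d * q for q in qs]


# Storage cost: 32 one-byte integers plus one 16-bit scale per block
bits_per_weight = (QK8_0 * 8 + 16) / QK8_0

block = [math.sin(i) for i in range(QK8_0)]  # arbitrary test values
d, qs = quantize_q8_0(block)
restored = dequantize_q8_0(d, qs)
max_err = max(abs(a - b) for a, b in zip(block, restored))
print(f"{bits_per_weight} bits/weight, max error {max_err:.5f} (scale d = {d:.5f})")
```

For roughly 8 billion weights that works out to about 8.5 GB instead of about 16 GB at F16, ignoring metadata and any tensors kept at higher precision.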

Move the generated model to Google Drive:

import shutil

source_path = '/content/model-unsloth.Q4_K_M.gguf'
destination_path = '/content/drive/MyDrive/'
shutil.move(source_path, destination_path)

The fine-tuned model is now saved in Google Drive; download it from MyDrive.
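Unsloth's output file names depend on the quantization method (for example model-unsloth.Q4_K_M.gguf versus model-unsloth.F16.gguf), so a hard-coded source path is easy to get wrong. A sketch of a hypothetical helper that moves every .gguf file from one directory into another using pathlib and shutil; the demo uses temporary directories instead of the real Colab paths.

```python
import shutil
import tempfile
from pathlib import Path


def move_gguf_files(src_dir: str, dest_dir: str) -> list[Path]:
    """Move every *.gguf file from src_dir into dest_dir and return the new paths."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    moved = []
    for f in sorted(Path(src_dir).glob("*.gguf")):
        moved.append(Path(shutil.move(str(f), str(dest / f.name))))
    return moved


def demo() -> tuple[list[Path], Path]:
    """Exercise the helper on throwaway directories instead of real Colab paths."""
    src = Path(tempfile.mkdtemp())
    dest = Path(tempfile.mkdtemp()) / "MyDrive"
    (src / "model-unsloth.Q4_K_M.gguf").write_bytes(b"GGUF")
    (src / "notes.txt").write_text("not a model")
    return move_gguf_files(str(src), str(dest)), src


if __name__ == "__main__":
    # On Colab, after drive.mount('/content/drive'):
    # move_gguf_files("/content", "/content/drive/MyDrive/")
    moved_files, _ = demo()
    print([p.name for p in moved_files])
```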

10. Run the fine-tuned GGUF model with Ollama

Import methods: see Ollama's documentation page on importing models.

Create a file named Modelfile in Ollama's models directory, next to the downloaded GGUF file, and write the following into it:

FROM ./model-unsloth.Q8_0.gguf

(Use the name of the GGUF file you actually downloaded, e.g. model-unsloth.Q4_K_M.gguf.)
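Because the model was fine-tuned on the Alpaca prompt format, its replies are usually better when Ollama wraps each prompt the same way. Ollama's Modelfile supports a TEMPLATE directive (a Go template in which {{ .Prompt }} is the user's message) and PARAMETER stop; the exact wrapping below is my assumption, chosen to match the training prompt with an empty Input field. A sketch of a hypothetical helper that writes such a Modelfile:

```python
from pathlib import Path


def alpaca_modelfile(gguf_path: str) -> str:
    """Build a Modelfile whose TEMPLATE wraps user input in the training prompt."""
    template = (
        "Below is an instruction that describes a task, paired with an input that "
        "provides further context. Write a response that appropriately completes "
        "the request.\n\n"
        "### Instruction:\n{{ .Prompt }}\n\n"
        "### Input:\n\n\n"  # the Input field left empty, as at inference time
        "### Response:\n"
    )
    return (
        f"FROM {gguf_path}\n"
        f'TEMPLATE """{template}"""\n'
        'PARAMETER stop "### Instruction:"\n'  # stop if the model starts a new turn
    )


if __name__ == "__main__":
    Path("Modelfile").write_text(alpaca_modelfile("./model-unsloth.Q4_K_M.gguf"), encoding="utf-8")
```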

Create the model with:

ollama create example -f Modelfile

List the models:

ollama list

Run the model:

ollama run example "What is your favourite condiment?"
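Once the model is created, Ollama also serves it over a local HTTP API (by default on port 11434), which is handy for calling the fine-tuned model from Python. A minimal standard-library sketch against the /api/generate endpoint with streaming turned off; the helper names are hypothetical, and it assumes Ollama is running locally.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the "response" field."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


# With Ollama running:
# print(generate("example", "What is your favourite condiment?"))
```

Building the request separately from sending it keeps it easy to inspect or test without a running server.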

11. Summary

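Unsloth is the most convenient tool we have found for fine-tuning models. In day-to-day work, and especially early on, it pays to use mature, fast, and convenient tools: Unsloth greatly reduces the GPU memory that fine-tuning requires.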
