Downloading the dataset

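This part is straightforward, so I won't go into detail. Paste the code into a file in your project and run it, and the dataset will be downloaded to `./data`.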

```python
from torchvision import datasets, transforms

# Define the data transform: convert images to tensors and normalize them
transform = transforms.Compose([
    transforms.ToTensor(),                 # convert to a tensor
    transforms.Normalize((0.5,), (0.5,))   # normalize
])

# Download and load the training set
train_data = datasets.MNIST(root='./data', train=True, download=True, transform=transform)

# Download and load the test set
test_data = datasets.MNIST(root='./data', train=False, download=True, transform=transform)
```

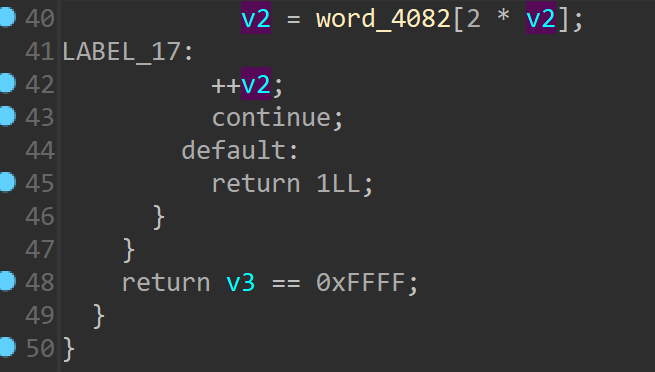

该代码运行效果如下图:

该代码运行效果如下图
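This is what the transform does to pixel values. `ToTensor()` scales 8-bit grayscale pixels from 0..255 to [0.0, 1.0], and `Normalize((0.5,), (0.5,))` then computes `(x - 0.5) / 0.5` on the single channel, so the network's inputs end up in [-1, 1]. The plain-Python sketch below reproduces that arithmetic for single values. `to_tensor_scale` and `normalize` are hypothetical helpers written for illustration; they are not torchvision functions.

```python
def to_tensor_scale(pixel: int) -> float:
    """Hypothetical helper: mimic ToTensor() scaling a uint8 pixel (0..255) to [0.0, 1.0]."""
    return pixel / 255.0


def normalize(x: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Hypothetical helper: mimic Normalize() on one channel, i.e. (x - mean) / std."""
    return (x - mean) / std


# Black (0), mid-gray (128) and white (255) pixels after both steps
for pixel in (0, 128, 255):
    print(pixel, '->', normalize(to_tensor_scale(pixel)))
```

Black maps to -1.0 and white maps to 1.0. The mean and std of 0.5 come from the original code. They are a generic choice rather than statistics computed from MNIST.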

Once the dataset has been downloaded, the training script below loads it from disk (`download=False`), trains the network and saves the weights.

```python
import torch

# =============== Dataset ===============
# Define the data transform
import torchvision.transforms as transforms

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,))
])

# Open the training and test sets that were already downloaded
from torchvision.datasets import MNIST

train_dataset = MNIST(root='./data', train=True, download=False, transform=transform)
test_dataset = MNIST(root='./data', train=False, download=False, transform=transform)

# Create the data loaders
batch_size = 256

from torch.utils.data import random_split
from torch.utils.data import DataLoader

# Split the training set into a 6000-sample subset and the remaining samples
train_size = 6000
train_subset, _ = random_split(train_dataset, [train_size, len(train_dataset) - train_size])

train_loader = DataLoader(dataset=train_subset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)
```
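The script keeps only 6000 of the 60,000 MNIST training images, so each epoch is short. The sketch below shows the bookkeeping under the default `drop_last=False`, which keeps the final partial batch. It computes the lengths passed to `random_split` and the batch counts reported by `len(train_loader)` and `len(test_loader)`. `split_lengths` and `num_batches` are hypothetical helpers that only illustrate the arithmetic.

```python
import math


def split_lengths(total: int, subset_size: int) -> list[int]:
    """Hypothetical helper: the [subset, remainder] lengths passed to random_split."""
    return [subset_size, total - subset_size]


def num_batches(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Hypothetical helper: number of batches a DataLoader yields over num_samples items."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


train_lengths = split_lengths(60000, 6000)
train_batches = num_batches(train_lengths[0], 256)
print('random_split lengths:', train_lengths)
print('train batches per epoch:', train_batches)
print('test batches:', num_batches(10000, 256))
print('size of the last training batch:', train_lengths[0] - (train_batches - 1) * 256)
```

`epoch_loss` is divided by `len(train_loader)`, so the printed loss is the mean of 24 per-batch losses. The last batch holds only 112 samples but counts the same as a full one. `random_split` also draws a different subset on every run. Passing `generator=torch.Generator().manual_seed(...)` to it makes the subset reproducible.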

```python
# =============== Network definition ===============
# Initialize the network (CNN comes from the project's own net module)
from net import CNN

net = CNN()

# Initialize the optimizer, learning-rate scheduler and loss function
import torch.nn as nn
from torch import optim

learning_rate = 0.001  # range to try: 0.05 ~ 1e-6
weight_decay = 1e-4    # range to try: 1e-2 ~ 1e-8
momentum = 0.8         # range to try: 0.3 ~ 0.9

optimizer = optim.RMSprop(net.parameters(), lr=learning_rate, weight_decay=weight_decay, momentum=momentum)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min', patience=2)
criterion = nn.CrossEntropyLoss()

# Use the GPU if one is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
net.to(device=device)
```
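`ReduceLROnPlateau(optimizer, 'min', patience=2)` lowers the learning rate when the monitored value stops decreasing. It uses the defaults `factor=0.1` and a relative `threshold=1e-4`. Once more than `patience` consecutive `step()` calls bring no improvement, it multiplies the learning rate by 0.1. It only acts when `scheduler.step(metric)` is called, usually once per epoch with the monitored value. `PlateauSketch` below is a simplified, hypothetical re-implementation of that rule for illustration only. It ignores `cooldown`, `min_lr` and `eps` and is not PyTorch's source.

```python
class PlateauSketch:
    """Hypothetical, simplified stand-in for ReduceLROnPlateau in 'min' mode (no cooldown/min_lr/eps)."""

    def __init__(self, lr: float, factor: float = 0.1, patience: int = 2, threshold: float = 1e-4):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.threshold = threshold
        self.best = float('inf')
        self.num_bad_epochs = 0

    def step(self, metric: float) -> float:
        # 'min' mode, relative threshold: improvement means beating best by more than 0.01%
        if metric < self.best * (1 - self.threshold):
            self.best = metric
            self.num_bad_epochs = 0
        else:
            self.num_bad_epochs += 1
        # Reduce only after MORE than `patience` epochs without improvement
        if self.num_bad_epochs > self.patience:
            self.lr *= self.factor
            self.num_bad_epochs = 0
        return self.lr


sched = PlateauSketch(lr=0.001, patience=2)
for epoch, loss in enumerate([0.9, 0.5, 0.4, 0.41, 0.40, 0.42, 0.39], start=1):
    print(epoch, loss, sched.step(loss))
```

In this made-up loss sequence, epochs 4, 5 and 6 fail to beat 0.4. The learning rate drops from 0.001 to about 0.0001 on the third of them, epoch 6.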

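```python
# =============== Model management ===============
# Set model_path to a saved .pth file to continue from it; None trains from scratch
model_path = None
if model_path is not None:
    net.load_state_dict(torch.load(model_path, map_location=device))
```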
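To resume from the most recent checkpoint without typing its path, one option is to pick the newest `final_model_*.pth` in `./output`. The saving step names files with the zero-padded pattern `'%Y%m%d_%H%M%S'`, so comparing the names as strings also compares them by time. `find_latest_checkpoint` is a hypothetical helper and not part of the original project.

```python
import glob
import os
from typing import Optional


def find_latest_checkpoint(output_dir: str = './output') -> Optional[str]:
    """Hypothetical helper: return the newest final_model_<timestamp>.pth in output_dir, or None."""
    candidates = glob.glob(os.path.join(output_dir, 'final_model_*.pth'))
    if not candidates:
        return None
    # Zero-padded YYYYMMDD_HHMMSS timestamps compare chronologically as plain strings
    return max(candidates, key=os.path.basename)


model_path = find_latest_checkpoint()
print('checkpoint to resume from:', model_path)
```

You can assign the result to `model_path` in place of `None`. The existing `if model_path is not None:` branch then loads those weights. In a fresh project with no saved models, the helper still returns `None`.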

```python
# =============== Training ===============
epochs = 50

def train(net, device, optimizer, scheduler, criterion):
    net.train()
    for epoch in range(epochs):
        epoch_loss = 0  # reset the accumulated loss for this epoch
        for images, labels in train_loader:
            # ---------- Fetch the batch and move it to the device ----------
            images = images.to(device=device, dtype=torch.float32)
            labels = labels.to(device=device, dtype=torch.long)

            # ---------- Forward pass ----------
            outputs = net(images)  # calls net.forward()
            loss = criterion(outputs, labels)
            epoch_loss += loss.item()

            # ---------- Backpropagation ----------
            optimizer.zero_grad()
            loss.backward()
            # Gradient clipping: clamp every gradient element to [-0.1, 0.1]
            nn.utils.clip_grad_value_(net.parameters(), clip_value=0.1)
            optimizer.step()

        epoch_loss /= len(train_loader)
        # ReduceLROnPlateau only acts when stepped with the monitored value (average training loss here)
        scheduler.step(epoch_loss)
        # Print the average loss of each epoch
        print(f'Epoch [{epoch+1}/{epochs}], Loss: {epoch_loss}')

train(net, device, optimizer, scheduler, criterion)
```
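`nn.utils.clip_grad_value_(..., clip_value=0.1)` clamps every gradient element into [-0.1, 0.1] independently, which can change the direction of the overall gradient. The alternative, `nn.utils.clip_grad_norm_`, rescales the whole gradient so that its norm stays under a limit, which keeps the direction. The pure-Python sketch below contrasts the two on a plain list of numbers. `clip_by_value` and `clip_by_norm` are illustrative stand-ins, not the PyTorch implementations.

```python
import math


def clip_by_value(grads: list[float], clip_value: float) -> list[float]:
    """Illustrative stand-in for clip_grad_value_: clamp each element to [-clip_value, clip_value]."""
    return [max(-clip_value, min(clip_value, g)) for g in grads]


def clip_by_norm(grads: list[float], max_norm: float) -> list[float]:
    """Illustrative stand-in for clip_grad_norm_: rescale so the L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    return [g * scale for g in grads]


grads = [0.3, -0.05, 0.02]
print('by value:', clip_by_value(grads, 0.1))  # first element clamped, ratios between elements change
print('by norm: ', clip_by_norm(grads, 0.1))   # all elements shrink by the same factor
```

The original script clips by value. Whether norm clipping would train better with this optimizer and learning rate would have to be checked empirically.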

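```python
# =============== Saving the model ===============
import os
from datetime import datetime

# Get the current time to build a unique file name
current_time = datetime.now().strftime('%Y%m%d_%H%M%S')

# Make sure the output directory exists, then save the model weights
os.makedirs('./output', exist_ok=True)
model_path = f'./output/final_model_{current_time}.pth'
torch.save(net.state_dict(), model_path)
```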