Flannel VXLAN DR (DirectRouting) Mode

1. Environment

| Host | IP |
|---|---|
| ubuntu | 172.16.94.141 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| clab | 0.54.2 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 20.04.6 LTS |
| kernel | 5.11.5 (see the kernel upgrade guide) |

2. Installing the Services

kind configuration (install.sh)

    $ cat install.sh
    #!/bin/bash
    date
    set -v

    # 1.prep noCNI env
    cat <<EOF | kind create cluster --name=clab-flannel-vxlan-directrouting --image=kindest/node:v1.23.4 --config=-
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    networking:
      disableDefaultCNI: true
      podSubnet: "10.244.0.0/16"
    nodes:
      - role: control-plane
        kubeadmConfigPatches:
          - |
            kind: InitConfiguration
            nodeRegistration:
              kubeletExtraArgs:
                node-ip: 10.1.5.10
                node-labels: "rack=rack0"
      - role: worker
        kubeadmConfigPatches:
          - |
            kind: JoinConfiguration
            nodeRegistration:
              kubeletExtraArgs:
                node-ip: 10.1.5.11
                node-labels: "rack=rack0"
      - role: worker
        kubeadmConfigPatches:
          - |
            kind: JoinConfiguration
            nodeRegistration:
              kubeletExtraArgs:
                node-ip: 10.1.8.10
                node-labels: "rack=rack1"
      - role: worker
        kubeadmConfigPatches:
          - |
            kind: JoinConfiguration
            nodeRegistration:
              kubeletExtraArgs:
                node-ip: 10.1.8.11
                node-labels: "rack=rack1"
    containerdConfigPatches:
      - |-
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.dayuan1997.com"]
          endpoint = ["https://harbor.dayuan1997.com"]
    EOF

    # 2.remove taints
    controller_node=`kubectl get nodes --no-headers -o custom-columns=NAME:.metadata.name| grep control-plane`
    kubectl taint nodes $controller_node node-role.kubernetes.io/master:NoSchedule-
    kubectl get nodes -o wide

    # 3.install necessary tools
    # cd /opt/
    # curl -o calicoctl -O -L "https://gh.api.99988866.xyz/https://github.com/containernetworking/plugins/releases/download/v0.9.0/cni-plugins-linux-amd64-v0.9.0.tgz"
    # tar -zxvf cni-plugins-linux-amd64-v0.9.0.tgz

    for i in $(docker ps -a --format "table {{.Names}}" | grep flannel)
    do
        echo $i
        docker cp /opt/bridge $i:/opt/cni/bin/
        docker cp /usr/bin/ping $i:/usr/bin/ping
        docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
        docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
    done

- Install the k8s cluster

    root@kind:~# ./install.sh
    Creating cluster "clab-flannel-vxlan-directrouting" ...
     ✓ Ensuring node image (kindest/node:v1.23.4) 🖼
     ✓ Preparing nodes 📦 📦 📦 📦
     ✓ Writing configuration 📜
     ✓ Starting control-plane 🕹️
     ✓ Installing StorageClass 💾
     ✓ Joining worker nodes 🚜
    Set kubectl context to "kind-clab-flannel-vxlan-directrouting"
    You can now use your cluster with:

    kubectl cluster-info --context kind-clab-flannel-vxlan-directrouting

    Have a nice day! 👋

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-flannel-vxlan-directrouting-control-plane NotReady control-plane,master 2m48s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker NotReady <none> 2m14s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker2 NotReady <none> 2m14s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker3 NotReady <none> 2m14s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

Create the clab container environment

Create the bridges

    root@kind:~# brctl addbr br-pool0
    root@kind:~# ifconfig br-pool0 up
    root@kind:~# brctl addbr br-pool1
    root@kind:~# ifconfig br-pool1 up

    root@kind:~# ip a l
    19: br-pool0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000
        link/ether aa:c1:ab:14:8f:99 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::e8df:fcff:fed4:3e17/64 scope link
           valid_lft forever preferred_lft forever
    20: br-pool1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000
        link/ether aa:c1:ab:08:cc:9d brd ff:ff:ff:ff:ff:ff
        inet6 fe80::88c:adff:fef2:f336/64 scope link
           valid_lft forever preferred_lft forever

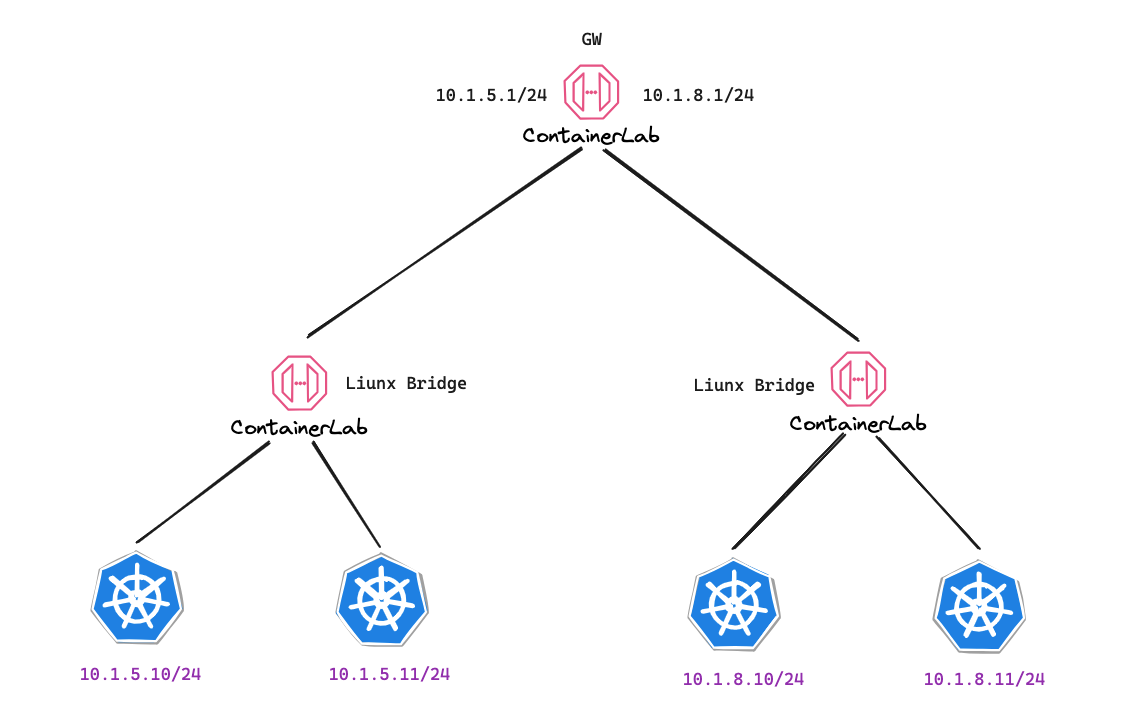

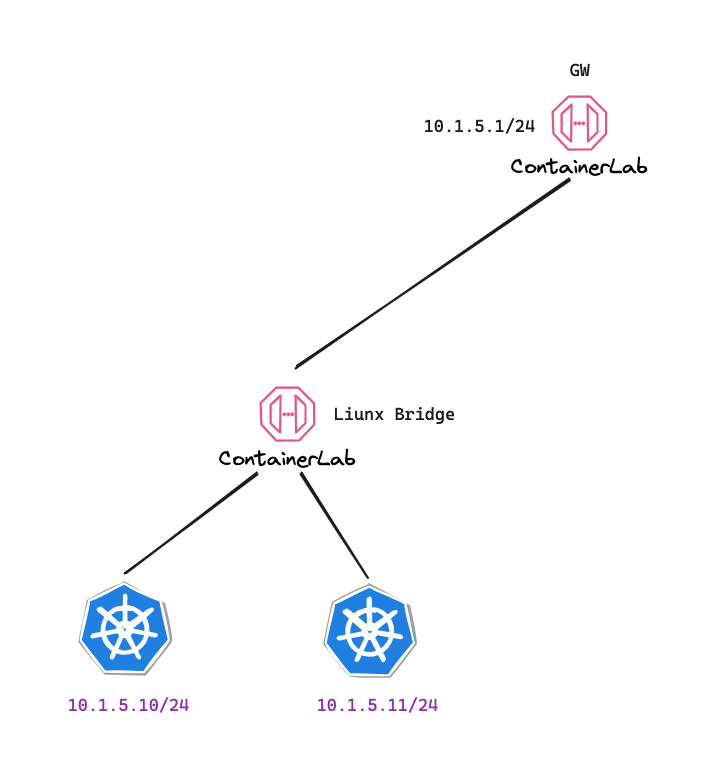

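The two bridges let the kind nodes connect to containerlab through a virtual switch. Why not connect the nodes to containerlab directly? A veth pair is point-to-point: if 10.1.5.10/24 were wired to containerlab with a veth pair, there would be no spare port left for 10.1.5.11/24 to attach to.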

clab network topology file

    # flannel.vxlan.directrouting.clab.yml
    name: flannel-vxlan-directrouting
    topology:
      nodes:
        gw0:
          kind: linux
          image: vyos/vyos:1.2.8
          cmd: /sbin/init
          binds:
            - /lib/modules:/lib/modules
            - ./startup-conf/gw0-boot.cfg:/opt/vyatta/etc/config/config.boot

        br-pool0:
          kind: bridge
        br-pool1:
          kind: bridge

        server1:
          kind: linux
          image: harbor.dayuan1997.com/devops/nettool:0.9
          # Reuse the node's network by sharing its network namespace
          network-mode: container:clab-flannel-vxlan-directrouting-control-plane
          # Configure the service NIC on the node and change the default gateway so that traffic leaves through the service NIC
          exec:
            - ip addr add 10.1.5.10/24 dev net0
            - ip route replace default via 10.1.5.1

        server2:
          kind: linux
          image: harbor.dayuan1997.com/devops/nettool:0.9
          # Reuse the node's network by sharing its network namespace
          network-mode: container:clab-flannel-vxlan-directrouting-worker
          # Configure the service NIC on the node and change the default gateway so that traffic leaves through the service NIC
          exec:
            - ip addr add 10.1.5.11/24 dev net0
            - ip route replace default via 10.1.5.1

        server3:
          kind: linux
          image: harbor.dayuan1997.com/devops/nettool:0.9
          # Reuse the node's network by sharing its network namespace
          network-mode: container:clab-flannel-vxlan-directrouting-worker2
          # Configure the service NIC on the node and change the default gateway so that traffic leaves through the service NIC
          exec:
            - ip addr add 10.1.8.10/24 dev net0
            - ip route replace default via 10.1.8.1

        server4:
          kind: linux
          image: harbor.dayuan1997.com/devops/nettool:0.9
          # Reuse the node's network by sharing its network namespace
          network-mode: container:clab-flannel-vxlan-directrouting-worker3
          # Configure the service NIC on the node and change the default gateway so that traffic leaves through the service NIC
          exec:
            - ip addr add 10.1.8.11/24 dev net0
            - ip route replace default via 10.1.8.1

      links:
        - endpoints: ["br-pool0:br-pool0-net0", "server1:net0"]
        - endpoints: ["br-pool0:br-pool0-net1", "server2:net0"]
        - endpoints: ["br-pool1:br-pool1-net0", "server3:net0"]
        - endpoints: ["br-pool1:br-pool1-net1", "server4:net0"]
        - endpoints: ["gw0:eth1", "br-pool0:br-pool0-net2"]
        - endpoints: ["gw0:eth2", "br-pool1:br-pool1-net2"]

VyOS configuration file gw0-boot.cfg

    # ./startup-conf/gw0-boot.cfg
    interfaces {
        ethernet eth1 {
            address 10.1.5.1/24
            duplex auto
            smp-affinity auto
            speed auto
        }
        ethernet eth2 {
            address 10.1.8.1/24
            duplex auto
            smp-affinity auto
            speed auto
        }
        loopback lo {
        }
    }
    # NAT configuration: lets the other servers behind gw0 reach external networks
    nat {
        source {
            rule 100 {
                outbound-interface eth0
                source {
                    address 10.1.0.0/16
                }
                translation {
                    address masquerade
                }
            }
        }
    }
    system {
        config-management {
            commit-revisions 100
        }
        console {
            device ttyS0 {
                speed 9600
            }
        }
        host-name vyos
        login {
            user vyos {
                authentication {
                    encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/
                    plaintext-password ""
                }
                level admin
            }
        }
        ntp {
            server 0.pool.ntp.org {
            }
            server 1.pool.ntp.org {
            }
            server 2.pool.ntp.org {
            }
        }
        syslog {
            global {
                facility all {
                    level info
                }
                facility protocols {
                    level debug
                }
            }
        }
        time-zone UTC
    }
    /* Warning: Do not remove the following line. */
    /* === vyatta-config-version: "wanloadbalance@3:l2tp@1:pptp@1:ntp@1:mdns@1:webgui@1:conntrack@1:ipsec@5:cluster@1:dhcp-server@5:nat@4:dhcp-relay@2:webproxy@1:system@10:pppoe-server@2:dns-forwarding@1:ssh@1:quagga@7:broadcast-relay@1:qos@1:snmp@1:firewall@5:zone-policy@1:config-management@1:webproxy@2:vrrp@2:conntrack-sync@1" === */
    /* Release version: 1.2.8 */

Deploy the services

    # tree -L 2 ./
    ./
    ├── flannel.vxlan.directrouting.clab.yml
    └── startup-conf
        └── gw0-boot.cfg

    # clab deploy -t flannel.vxlan.directrouting.clab.yml

INFO[0000] Containerlab v0.54.2 started

INFO[0000] Parsing & checking topology file: clab.yaml

INFO[0000] Creating docker network: Name="clab", IPv4Subnet="172.20.20.0/24", IPv6Subnet="2001:172:20:20::/64", MTU=1500

INFO[0000] Creating lab directory: /root/wcni-kind/flannel/4-flannel-vxlan-directrouting/clab-flannel-vxlan-directrouting

WARN[0000] node clab-flannel-vxlan-directrouting-control-plane referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-flannel-vxlan-directrouting-worker referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-flannel-vxlan-directrouting-worker2 referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-flannel-vxlan-directrouting-worker3 referenced in namespace sharing not found in topology definition, considering it an external dependency.

INFO[0000] Creating container: "gw0"

INFO[0001] Created link: gw0:eth1 <--> br-pool0:br-pool0-net2

INFO[0001] Created link: gw0:eth2 <--> br-pool1:br-pool1-net2

INFO[0003] Creating container: "server2"

INFO[0003] Creating container: "server1"

INFO[0003] Created link: br-pool0:br-pool0-net1 <--> server2:net0

INFO[0003] Created link: br-pool0:br-pool0-net0 <--> server1:net0

INFO[0004] Creating container: "server3"

INFO[0004] Creating container: "server4"

INFO[0004] Created link: br-pool1:br-pool1-net0 <--> server3:net0

INFO[0004] Created link: br-pool1:br-pool1-net1 <--> server4:net0

INFO[0005] Executed command "ip addr add 10.1.5.10/24 dev net0" on the node "server1". stdout:

INFO[0005] Executed command "ip route replace default via 10.1.5.1" on the node "server1". stdout:

INFO[0005] Executed command "ip addr add 10.1.8.11/24 dev net0" on the node "server4". stdout:

INFO[0005] Executed command "ip route replace default via 10.1.8.1" on the node "server4". stdout:

INFO[0005] Executed command "ip addr add 10.1.8.10/24 dev net0" on the node "server3". stdout:

INFO[0005] Executed command "ip route replace default via 10.1.8.1" on the node "server3". stdout:

INFO[0005] Executed command "ip addr add 10.1.5.11/24 dev net0" on the node "server2". stdout:

INFO[0005] Executed command "ip route replace default via 10.1.5.1" on the node "server2". stdout:

INFO[0005] Adding containerlab host entries to /etc/hosts file

INFO[0005] Adding ssh config for containerlab nodes

INFO[0005] 🎉 New containerlab version 0.56.0 is available! Release notes: https://containerlab.dev/rn/0.56/

Run 'containerlab version upgrade' to upgrade or go check other installation options at https://containerlab.dev/install/

+---+------------------------------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| # | Name | Container ID | Image | Kind | State | IPv4 Address | IPv6 Address |

+---+------------------------------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| 1 | clab-flannel-vxlan-directrouting-gw0 | 2ac429824caa | vyos/vyos:1.2.8 | linux | running | 172.20.20.2/24 | 2001:172:20:20::2/64 |

| 2 | clab-flannel-vxlan-directrouting-server1 | c3cc494ff542 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 3 | clab-flannel-vxlan-directrouting-server2 | bc24767595b4 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 4 | clab-flannel-vxlan-directrouting-server3 | 71c0daf892e3 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 5 | clab-flannel-vxlan-directrouting-server4 | b8361f60cfe6 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

+---+------------------------------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

Check the k8s cluster

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-flannel-vxlan-directrouting-control-plane NotReady control-plane,master 8m38s v1.23.4 10.1.5.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker NotReady <none> 8m4s v1.23.4 10.1.5.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker2 NotReady <none> 8m4s v1.23.4 10.1.8.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker3 NotReady <none> 8m4s v1.23.4 10.1.8.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

    # Show the node's IP addresses
    root@kind:~# docker exec -it clab-flannel-vxlan-directrouting-control-plane ip a l
    17: eth0@if18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:12:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 172.18.0.5/16 brd 172.18.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fc00:f853:ccd:e793::5/64 scope global nodad
           valid_lft forever preferred_lft forever
        inet6 fe80::42:acff:fe12:5/64 scope link
           valid_lft forever preferred_lft forever
    22: net0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default
        link/ether aa:c1:ab:ab:fa:3b brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.1.5.10/24 scope global net0
           valid_lft forever preferred_lft forever
        inet6 fe80::a8c1:abff:feab:fa3b/64 scope link
           valid_lft forever preferred_lft forever

    # Show the node's routes
    root@kind:~# docker exec -it clab-flannel-vxlan-directrouting-control-plane ip r s
    default via 10.1.5.1 dev net0
    10.1.5.0/24 dev net0 proto kernel scope link src 10.1.5.10
    172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.5

The k8s cluster now reports an IP address for every node. Logging in to the node container shows the new address 10.1.5.10/24 on net0, and the default route has changed to: default via 10.1.5.1 dev net0

Install the flannel service

flannel.yaml configuration file

    # flannel.yaml
    ---
    kind: Namespace
    apiVersion: v1
    metadata:
      name: kube-flannel
      labels:
        pod-security.kubernetes.io/enforce: privileged
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan",
            "DirectRouting": true
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni-plugin
            #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
            #image: 192.168.2.100:5000/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            command:
            - cp
            args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            volumeMounts:
            - name: cni-plugin
              mountPath: /opt/cni/bin
          - name: install-cni
            #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
            #image: 192.168.2.100:5000/rancher/mirrored-flannelcni-flannel:v0.19.2
            image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel:v0.19.2
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
            #image: 192.168.2.100:5000/rancher/mirrored-flannelcni-flannel:v0.19.2
            image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel:v0.19.2
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: EVENT_QUEUE_DEPTH
              value: "5000"
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
            - name: xtables-lock
              mountPath: /run/xtables.lock
            - name: tun
              mountPath: /dev/net/tun
          volumes:
          - name: tun
            hostPath:
              path: /dev/net/tun
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni-plugin
            hostPath:
              path: /opt/cni/bin
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg
          - name: xtables-lock
            hostPath:
              path: /run/xtables.lock
              type: FileOrCreate

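flannel.yaml parameters

- Backend.Type: selects the flannel backend (working mode). With the value vxlan, flannel runs in VXLAN mode.
- Backend.DirectRouting: controls whether VXLAN mode falls back to host-gw style routing inside one subnet. With the value true, traffic between nodes in the same subnet is forwarded with plain host-gw routes, and VXLAN encapsulation is used only between nodes in different subnets.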
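The effect of DirectRouting on this lab's nodes can be sketched in a few lines of Python. This is only an illustration, not flannel's code: flanneld decides from the kernel's route to the peer, while the hypothetical helper `choose_backend` below approximates that with a simple subnet membership test. The node IPs come from the kind configuration above, and the /24 prefix length is taken from the clab topology.

```python
import ipaddress


def choose_backend(local_ip: str, remote_ip: str, prefix_len: int) -> str:
    """Return "direct" when both node IPs share one subnet, else "vxlan".

    Assumption: a subnet test stands in for flannel's route-based check.
    """
    local_net = ipaddress.ip_interface(f"{local_ip}/{prefix_len}").network
    return "direct" if ipaddress.ip_address(remote_ip) in local_net else "vxlan"


# Viewpoint of worker2 (10.1.8.10); peers are the other three nodes.
local = "10.1.8.10"
peers = [
    ("control-plane", "10.1.5.10"),
    ("worker", "10.1.5.11"),
    ("worker3", "10.1.8.11"),
]
for name, ip in peers:
    print(f"{name:14s} {ip:10s} -> {choose_backend(local, ip, 24)}")
```

From worker2, only worker3 is classified as direct, which matches the single non-VXLAN Pod route (via 10.1.8.11 dev net0) that appears in worker2's routing table later in this article.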

root@kind:~# kubectl apply -f flannel.yaml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

- Check the k8s cluster and the flannel service

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-flannel-vxlan-directrouting-control-plane Ready control-plane,master 13m v1.23.4 10.1.5.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker Ready <none> 13m v1.23.4 10.1.5.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker2 Ready <none> 13m v1.23.4 10.1.8.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-flannel-vxlan-directrouting-worker3 Ready <none> 13m v1.23.4 10.1.8.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

- Check the installed services

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-64897985d-8dt79 1/1 Running 0 13m

kube-system coredns-64897985d-sprng 1/1 Running 0 13m

kube-system etcd-clab-flannel-vxlan-directrouting-control-plane 1/1 Running 0 14m

kube-system kube-apiserver-clab-flannel-vxlan-directrouting-control-plane 1/1 Running 0 14m

kube-system kube-controller-manager-clab-flannel-vxlan-directrouting-control-plane 1/1 Running 0 14m

kube-system kube-flannel-ds-6b4sk 1/1 Running 0 3m52s

kube-system kube-flannel-ds-6vqf2 1/1 Running 0 3m52s

kube-system kube-flannel-ds-76czr 1/1 Running 0 3m52s

kube-system kube-flannel-ds-xhw4f 1/1 Running 0 3m52s

kube-system kube-proxy-d9kjr 1/1 Running 0 13m

kube-system kube-proxy-fl2v7 1/1 Running 0 13m

kube-system kube-proxy-mgv2m 1/1 Running 0 13m

kube-system kube-proxy-xcl4n 1/1 Running 0 13m

kube-system kube-scheduler-clab-flannel-vxlan-directrouting-control-plane 1/1 Running 0 14m

local-path-storage local-path-provisioner-5ddd94ff66-lk6kc 1/1 Running 0 13m

Deploy Pods in the k8s cluster to test the network

    root@kind:~# cat cni.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    #kind: Deployment
    metadata:
      labels:
        app: cni
      name: cni
    spec:
      #replicas: 1
      selector:
        matchLabels:
          app: cni
      template:
        metadata:
          labels:
            app: cni
        spec:
          containers:
          - image: harbor.dayuan1997.com/devops/nettool:0.9
            name: nettoolbox
            securityContext:
              privileged: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: serversvc
    spec:
      type: NodePort
      selector:
        app: cni
      ports:
      - name: cni
        port: 80
        targetPort: 80
        nodePort: 32000

root@kind:~# kubectl apply -f cni.yaml

daemonset.apps/cni created

service/serversvc created

root@kind:~# kubectl run net --image=harbor.dayuan1997.com/devops/nettool:0.9

pod/net created

- Check the deployed Pods and Services

root@kind:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-5sxkl 1/1 Running 0 18s 10.244.1.2 clab-flannel-vxlan-directrouting-worker2 <none> <none>

cni-vwgqx 1/1 Running 0 18s 10.244.0.2 clab-flannel-vxlan-directrouting-control-plane <none> <none>

cni-w79ph 1/1 Running 0 18s 10.244.3.5 clab-flannel-vxlan-directrouting-worker3 <none> <none>

cni-x2rxr 1/1 Running 0 18s 10.244.2.2 clab-flannel-vxlan-directrouting-worker <none> <none>

net 1/1 Running 0 13s 10.244.1.3 clab-flannel-vxlan-directrouting-worker2 <none> <none>

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15m

serversvc NodePort 10.96.50.15 <none> 80:32000/TCP 27s

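3. Testing the Network

Pod communication on the same node

See the same-node communication section of the Flannel UDP mode document; the packet forwarding flow is identical.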

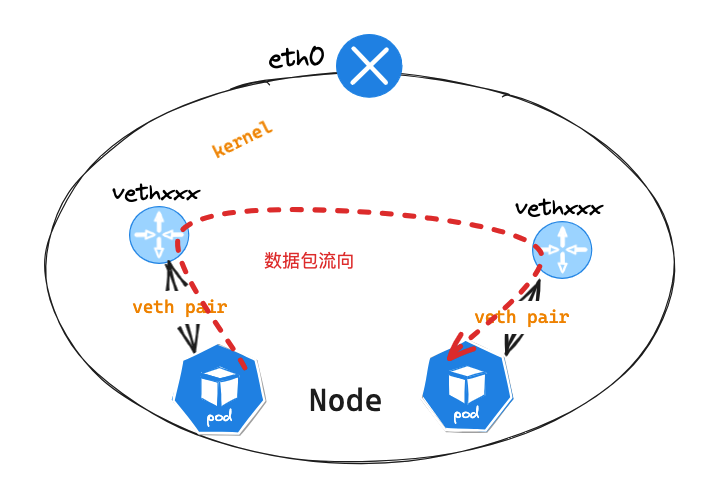

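On the same node, Flannel Pods communicate over the L2 network, with a layer-2 switch (the bridge) doing the forwarding.

Cross-node Pod communication, nodes in the same subnet

See the cross-node Pod communication section of the Flannel HOST-GW mode document; the packet forwarding flow is identical.

Cross-node Pod communication, nodes in different subnets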

Pod information

    ## IP information
    root@kind:~# kubectl exec -it net -- ip a l
    3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue state UP group default
        link/ether 26:a8:8d:74:59:3f brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.244.1.3/24 brd 10.244.1.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::24a8:8dff:fe74:593f/64 scope link
           valid_lft forever preferred_lft forever

    ## Routing information
    root@kind:~# kubectl exec -it net -- ip r s
    default via 10.244.1.1 dev eth0
    10.244.0.0/16 via 10.244.1.1 dev eth0
    10.244.1.0/24 dev eth0 proto kernel scope link src 10.244.1.3

Information for the Node that hosts the Pod

    root@kind:~# docker exec -it clab-flannel-vxlan-directrouting-worker2 bash

    ## IP information
    root@clab-flannel-vxlan-directrouting-worker2:/# ip a l
    2: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue state UNKNOWN group default
        link/ether ca:7d:71:63:e2:a2 brd ff:ff:ff:ff:ff:ff
        inet 10.244.1.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
        inet6 fe80::c87d:71ff:fe63:e2a2/64 scope link
           valid_lft forever preferred_lft forever
    3: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue state UP group default qlen 1000
        link/ether d2:3c:81:f3:9c:6f brd ff:ff:ff:ff:ff:ff
        inet 10.244.1.1/24 brd 10.244.1.255 scope global cni0
           valid_lft forever preferred_lft forever
        inet6 fe80::d03c:81ff:fef3:9c6f/64 scope link
           valid_lft forever preferred_lft forever
    4: vethd3fcbe42@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue master cni0 state UP group default
        link/ether 92:01:1c:7e:82:65 brd ff:ff:ff:ff:ff:ff link-netns cni-baf1f367-320c-1afa-a624-88ba3fc51a48
        inet6 fe80::9001:1cff:fe7e:8265/64 scope link
           valid_lft forever preferred_lft forever
    5: veth25e540eb@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue master cni0 state UP group default
        link/ether b6:10:4f:07:c1:0e brd ff:ff:ff:ff:ff:ff link-netns cni-07b75239-d571-5b75-5257-e3c3e6ed5c01
        inet6 fe80::b410:4fff:fe07:c10e/64 scope link
           valid_lft forever preferred_lft forever
    13: eth0@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fc00:f853:ccd:e793::3/64 scope global nodad
           valid_lft forever preferred_lft forever
        inet6 fe80::42:acff:fe12:3/64 scope link
           valid_lft forever preferred_lft forever
    28: net0@if29: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default
        link/ether aa:c1:ab:84:fd:ec brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.1.8.10/24 scope global net0
           valid_lft forever preferred_lft forever
        inet6 fe80::a8c1:abff:fe84:fdec/64 scope link
           valid_lft forever preferred_lft forever

    ## Routing information
    root@clab-flannel-vxlan-directrouting-worker2:/# ip r s
    default via 10.1.8.1 dev net0
    10.1.8.0/24 dev net0 proto kernel scope link src 10.1.8.10
    10.244.0.0/24 via 10.244.0.0 dev flannel.1 onlink
    10.244.1.0/24 dev cni0 proto kernel scope link src 10.244.1.1
    10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink
    10.244.3.0/24 via 10.1.8.11 dev net0
    172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3
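To see which of worker2's routes a given Pod destination selects, the routing table above can be replayed as a longest-prefix match. The sketch below is a simplified model of the kernel lookup (no metrics, no policy routing); the `ROUTES` list is copied from the `ip r s` output, and the helper name `lookup` is made up for this example.

```python
import ipaddress

# Routing table of worker2, copied from the `ip r s` output above.
ROUTES = [
    ("0.0.0.0/0", "via 10.1.8.1 dev net0"),
    ("10.1.8.0/24", "dev net0"),
    ("10.244.0.0/24", "via 10.244.0.0 dev flannel.1 onlink"),
    ("10.244.1.0/24", "dev cni0"),
    ("10.244.2.0/24", "via 10.244.2.0 dev flannel.1 onlink"),
    ("10.244.3.0/24", "via 10.1.8.11 dev net0"),
    ("172.18.0.0/16", "dev eth0"),
]


def lookup(dst: str) -> str:
    """Return the next hop of the most specific route that contains dst."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    # The default route always matches, so `matches` is never empty.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


for dst in ("10.244.2.2", "10.244.3.5", "10.244.1.2"):
    print(dst, "->", lookup(dst))
```

The three destinations cover the three cases on this node: a Pod behind a node in another subnet (flannel.1, VXLAN), a Pod behind a node in the same subnet (plain route through net0), and a local Pod (cni0).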

Ping test from the net Pod to the cni-x2rxr Pod

root@kind:~# kubectl exec -it net -- ping 10.244.2.2 -c 1

PING 10.244.2.2 (10.244.2.2): 56 data bytes

64 bytes from 10.244.2.2: seq=0 ttl=62 time=1.402 ms

--- 10.244.2.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.402/1.402/1.402 ms

Packet capture on the Pod's eth0 interface

net~$ tcpdump -pne -i eth0

08:23:24.189370 26:a8:8d:74:59:3f > d2:3c:81:f3:9c:6f, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.2: ICMP echo request, id 54, seq 14, length 64

08:23:24.189496 d2:3c:81:f3:9c:6f > 26:a8:8d:74:59:3f, ethertype IPv4 (0x0800), length 98: 10.244.2.2 > 10.244.1.3: ICMP echo reply, id 54, seq 14, length 64

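The source MAC 26:a8:8d:74:59:3f is the MAC of the Pod's eth0 interface. The destination MAC d2:3c:81:f3:9c:6f is the MAC of the cni0 interface on the node that hosts the net Pod. cni0 holds the gateway address of the Pod network, 10.244.1.1. Because the destination is outside the Pod's own /24, the Pod's routing table sends the packet to this gateway.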

net~$ arp -n

Address HWtype HWaddress Flags Mask Iface

10.244.1.1 ether d2:3c:81:f3:9c:6f C eth0

The veth pair index identifies the peer interface: the Pod's interface is shown as eth0@if5, so its peer is interface index 5 on the node, which is veth25e540eb@if3.
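The same lookup can be written down as a small Python sketch: take the number after `@if` in the Pod's interface name and find that interface index on the node. The `HOST_LINKS` table is copied by hand from the worker2 `ip a l` output in this article, and `peer_ifindex` is a hypothetical helper name.

```python
import re


def peer_ifindex(ifname: str) -> int:
    """Extract N from a name such as 'eth0@if5' (the peer's interface index)."""
    match = re.fullmatch(r"[^@]+@if(\d+)", ifname)
    if match is None:
        raise ValueError(f"not a veth-style name: {ifname}")
    return int(match.group(1))


# Interface index -> name on worker2, from its `ip a l` output.
HOST_LINKS = {
    2: "flannel.1",
    3: "cni0",
    4: "vethd3fcbe42@if3",
    5: "veth25e540eb@if3",
    13: "eth0@if14",
    28: "net0@if29",
}

pod_iface = "eth0@if5"  # from `kubectl exec -it net -- ip a l`
print(HOST_LINKS[peer_ifindex(pod_iface)])  # prints veth25e540eb@if3
```

The `@if3` suffix on the host side points back at index 3 inside the Pod's namespace, which is the Pod's eth0 (`3: eth0@if5`).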

Packet capture on the veth25e540eb interface of node clab-flannel-vxlan-directrouting-worker2

root@clab-flannel-vxlan-directrouting-worker2:/# tcpdump -pne -i veth25e540eb

08:26:28.784300 26:a8:8d:74:59:3f > 42:a5:47:f5:4b:9f, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.1.2: ICMP echo request, id 23, seq 1576, length 64

08:26:28.784371 42:a5:47:f5:4b:9f > 26:a8:8d:74:59:3f, ethertype IPv4 (0x0800), length 98: 10.244.1.2 > 10.244.1.3: ICMP echo reply, id 23, seq 1576, length 64

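Because eth0 in the Pod and veth25e540eb on the node are the two ends of one veth pair, both interfaces see the same frames. (The sample above was captured during a ping to 10.244.1.2, a Pod on the same node, which is why its addresses differ from the previous capture.)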

Packet capture on the cni0 interface of node clab-flannel-vxlan-directrouting-worker2

root@clab-flannel-vxlan-directrouting-worker2:/# tcpdump -pne -i cni0

08:26:45.256754 26:a8:8d:74:59:3f > d2:3c:81:f3:9c:6f, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.2: ICMP echo request, id 54, seq 215, length 64

08:26:45.256878 d2:3c:81:f3:9c:6f > 26:a8:8d:74:59:3f, ethertype IPv4 (0x0800), length 98: 10.244.2.2 > 10.244.1.3: ICMP echo reply, id 54, seq 215, length 64

The source MAC 26:a8:8d:74:59:3f is the MAC of the net Pod's eth0 interface, and the destination MAC d2:3c:81:f3:9c:6f is the MAC of cni0.

The routing table of host clab-flannel-vxlan-directrouting-worker2 shows that the packet is then forwarded by the route: 10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

Packet capture on the flannel.1 interface of node clab-flannel-vxlan-directrouting-worker2

root@clab-flannel-vxlan-directrouting-worker2:/# tcpdump -pne -i flannel.1 icmp

08:30:06.337766 ca:7d:71:63:e2:a2 > 3e:0a:47:72:56:37, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.2: ICMP echo request, id 54, seq 416, length 64

08:30:06.337895 3e:0a:47:72:56:37 > ca:7d:71:63:e2:a2, ethertype IPv4 (0x0800), length 98: 10.244.2.2 > 10.244.1.3: ICMP echo reply, id 54, seq 416, length 64

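The source MAC ca:7d:71:63:e2:a2 is the MAC of flannel.1 on node clab-flannel-vxlan-directrouting-worker2. Whose MAC is the destination 3e:0a:47:72:56:37? The ARP table of the host shows that it belongs to 10.244.2.0, the next hop for the 10.244.2.0/24 subnet. For how this address is learned, see the document on the FDB learning and binding process.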

root@clab-flannel-vxlan-directrouting-worker2:/# arp -n

Address HWtype HWaddress Flags Mask Iface

10.244.2.0 ether 3e:0a:47:72:56:37 CM flannel.1

Check the FDB entries

root@clab-flannel-vxlan-directrouting-worker2:/# bridge fdb show

3e:0a:47:72:56:37 dev flannel.1 dst 10.1.5.11 self permanent

The FDB entry 3e:0a:47:72:56:37 dev flannel.1 dst 10.1.5.11 self permanent shows that MAC 3e:0a:47:72:56:37 lives on host 10.1.5.11. The outer destination IP of the VXLAN packet is therefore 10.1.5.11, and the outer source IP is the address of the local net0 interface, 10.1.8.10.
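The two lookups described above (next hop to MAC through ARP, then MAC to remote host through the FDB) can be chained in a short Python sketch. It only parses the two command outputs quoted in this article; the parsing is deliberately naive and the function names are invented for illustration.

```python
# Outputs copied from `arp -n` and `bridge fdb show` on worker2.
ARP_OUTPUT = """\
Address HWtype HWaddress Flags Mask Iface
10.244.2.0 ether 3e:0a:47:72:56:37 CM flannel.1
"""
FDB_OUTPUT = """\
3e:0a:47:72:56:37 dev flannel.1 dst 10.1.5.11 self permanent
"""


def parse_arp(text: str) -> dict:
    """Map IP address -> MAC address, skipping the header line."""
    table = {}
    for line in text.splitlines()[1:]:
        fields = line.split()
        if len(fields) >= 3:
            table[fields[0]] = fields[2]
    return table


def parse_fdb(text: str) -> dict:
    """Map MAC address -> remote VTEP IP for entries that carry `dst`."""
    table = {}
    for line in text.splitlines():
        fields = line.split()
        if "dst" in fields:
            table[fields[0]] = fields[fields.index("dst") + 1]
    return table


def outer_destination(next_hop: str) -> tuple:
    """Resolve a flannel.1 next hop to (inner dst MAC, outer dst IP)."""
    mac = parse_arp(ARP_OUTPUT)[next_hop]
    return mac, parse_fdb(FDB_OUTPUT)[mac]


print(outer_destination("10.244.2.0"))
```

For the next hop 10.244.2.0 this yields the inner destination MAC 3e:0a:47:72:56:37 and the outer destination IP 10.1.5.11, the same pair that appears in the net0 capture.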

Packet capture on the net0 interface of node clab-flannel-vxlan-directrouting-worker2

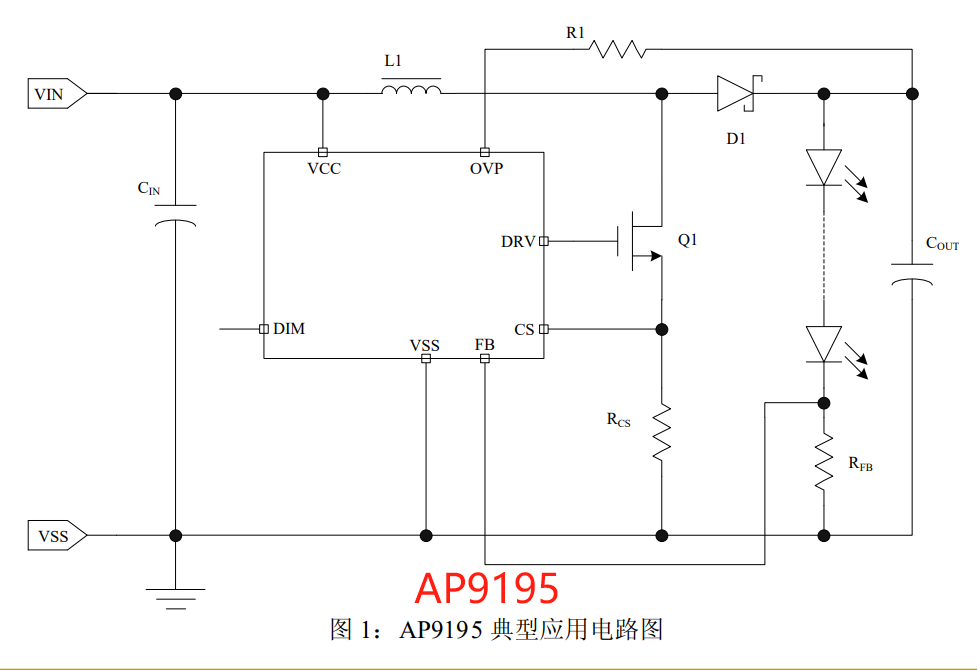

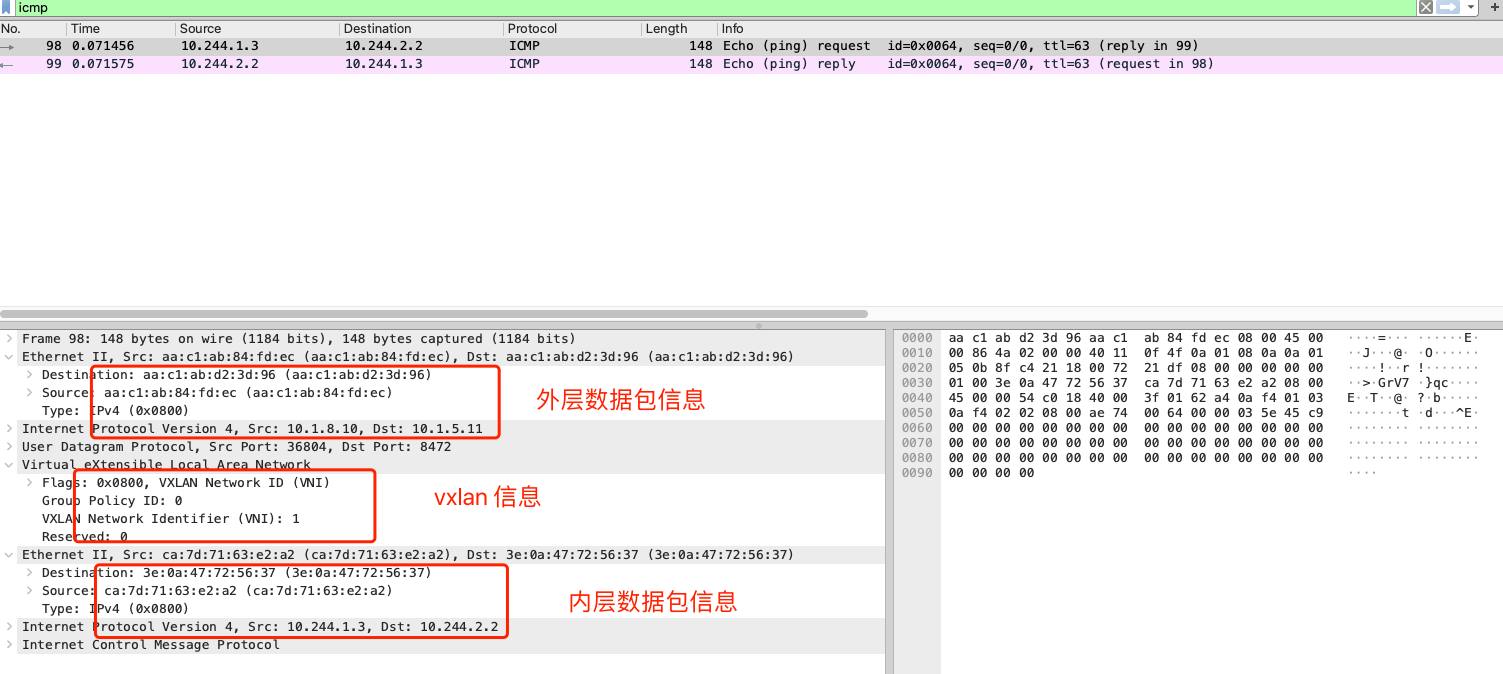

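Details of the request packet:

- Outer MAC header of the ICMP packet: the source MAC aa:c1:ab:84:fd:ec is the net0 MAC of clab-flannel-vxlan-directrouting-worker2, and the destination MAC aa:c1:ab:d2:3d:96 is the eth2 MAC of the gw0 host.
- The packet is carried over UDP port 8472, and the VXLAN header carries VNI 1.
- Inner MAC header of the ICMP packet: the source MAC ca:7d:71:63:e2:a2 is the flannel.1 MAC of clab-flannel-vxlan-directrouting-worker2, and the destination MAC 3e:0a:47:72:56:37 is the flannel.1 MAC of the peer host clab-flannel-vxlan-directrouting-worker.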
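As a companion to the capture, the 8-byte VXLAN header that sits between the outer UDP header and the inner Ethernet frame can be built and parsed with Python's `struct` module. The layout follows RFC 7348 (flags byte with the VNI-valid bit 0x08, 24-bit VNI, reserved fields zero); the function names are illustrative and nothing here is taken from flannel's source.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag defined in RFC 7348


def build_vxlan_header(vni: int) -> bytes:
    """Pack flags (8 bits), reserved (24), VNI (24), reserved (8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vxlan_header(header: bytes) -> int:
    """Return the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


header = build_vxlan_header(1)      # VNI 1, as seen in the capture
print(header.hex())                 # prints 0800000000000100
print(parse_vxlan_header(header))   # prints 1
```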


VXLAN device information on node clab-flannel-vxlan-directrouting-worker2

    root@clab-flannel-vxlan-directrouting-worker2:/# ip -d link show
    2: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9450 qdisc noqueue state UNKNOWN mode DEFAULT group default
        link/ether ca:7d:71:63:e2:a2 brd ff:ff:ff:ff:ff:ff promiscuity 0 minmtu 68 maxmtu 65535
        vxlan id 1 local 10.1.8.10 dev net0 srcport 0 0 dstport 8472 nolearning ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

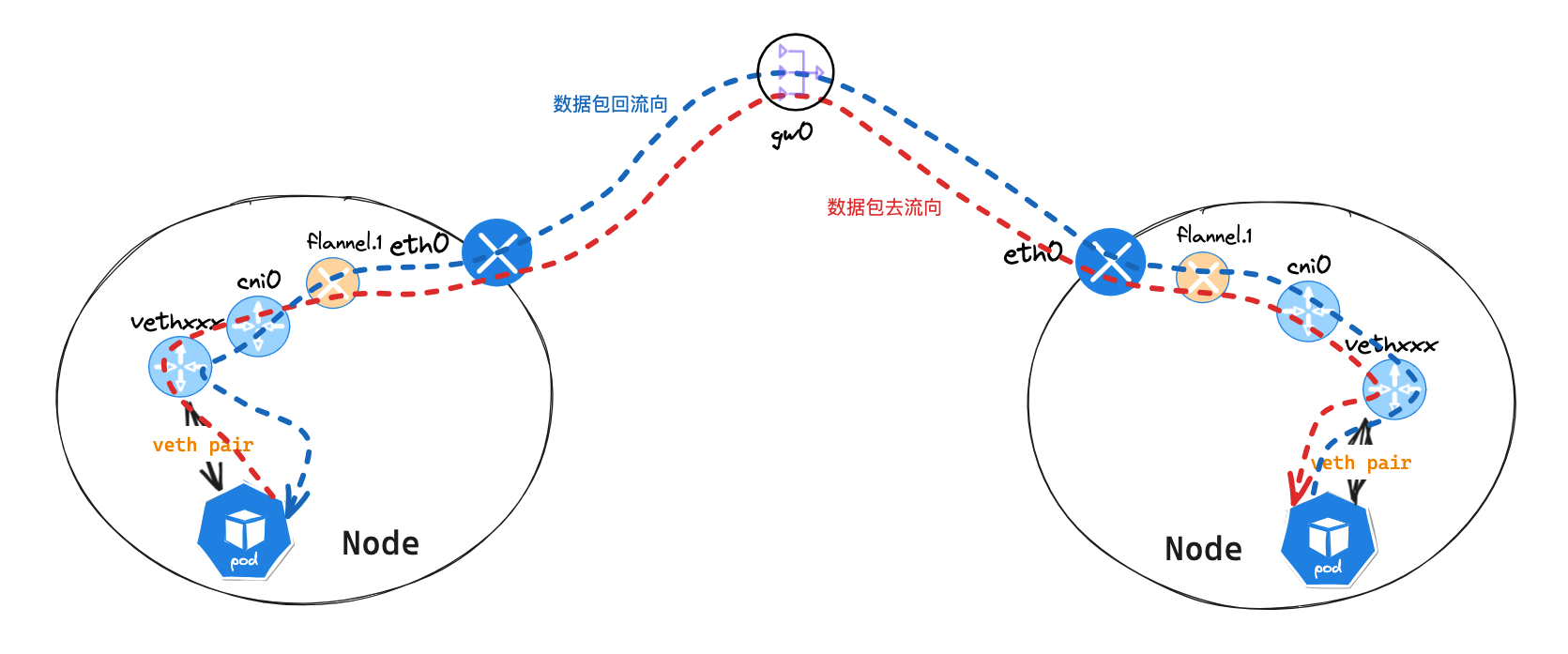

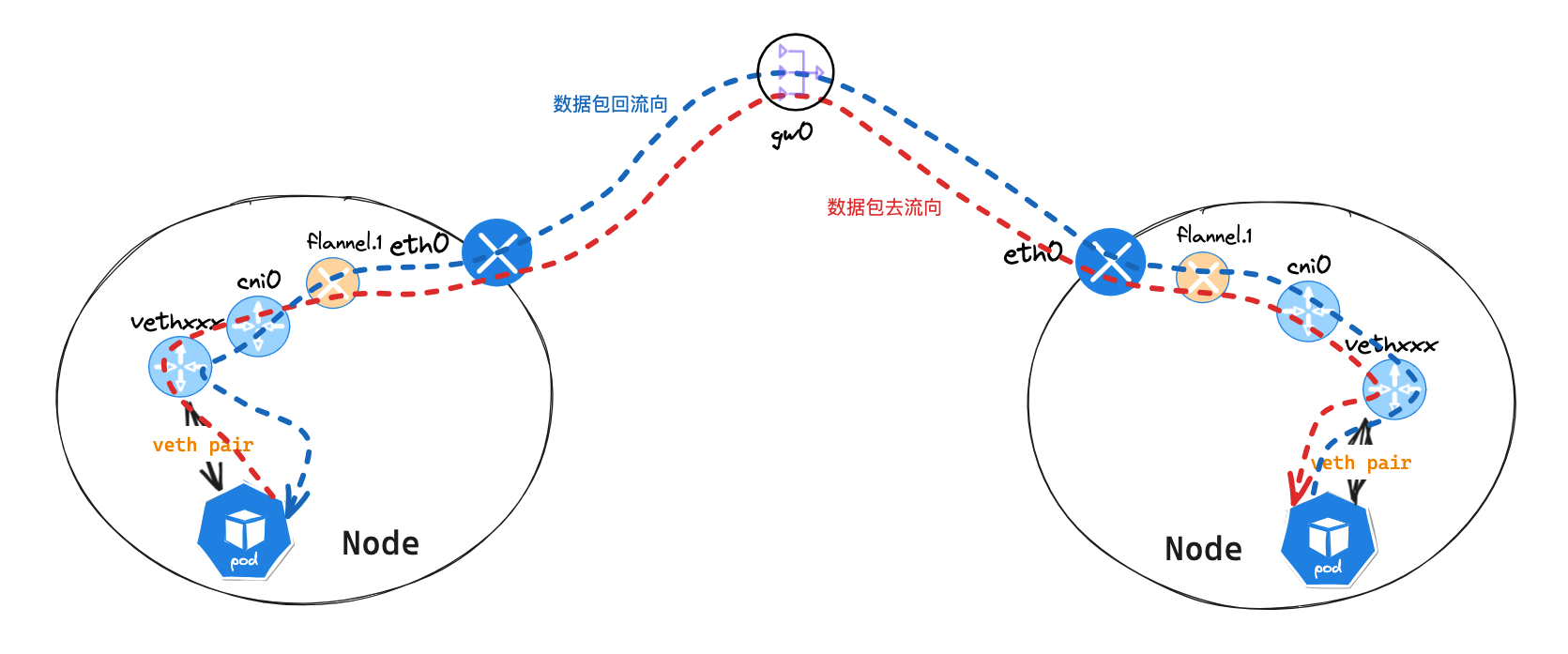
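The MTU values in the outputs above (9500 on net0, 9450 on flannel.1, cni0 and the Pod's eth0) differ by exactly the VXLAN-over-IPv4 encapsulation overhead. The arithmetic is sketched below. The per-header sizes are the standard ones for an IPv4 underlay without options; that flannel derives the overlay MTU this way is an assumption, although the numbers in this lab are consistent with it.

```python
# Header sizes in bytes for VXLAN over an IPv4 underlay (no IP options).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14


def vxlan_mtu(underlay_mtu: int) -> int:
    """MTU left for the inner IP packet after VXLAN encapsulation."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead


print(vxlan_mtu(9500))  # prints 9450, the MTU shown on flannel.1
```

With DirectRouting, traffic between same-subnet nodes is not encapsulated, but the Pod interfaces still use the smaller MTU because one MTU has to fit both paths.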

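Packet flow

- The packet leaves the Pod. According to the Pod's routing table it is sent to the gateway 10.244.1.1. Route: 10.244.0.0/16 via 10.244.1.1 dev eth0
- Through the veth pair peer veth25e540eb the packet reaches host clab-flannel-vxlan-directrouting-worker2 and is handed to cni0 (10.244.1.1).
- Host clab-flannel-vxlan-directrouting-worker2 consults its own routing table and, because the destination is 10.244.2.2, forwards the packet to the flannel.1 interface. Route: 10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink
- flannel.1 is a VXLAN device and encapsulates the packet. The encapsulation uses the ARP entry 10.244.2.0 ether 3e:0a:47:72:56:37 CM flannel.1 and the FDB entry 3e:0a:47:72:56:37 dev flannel.1 dst 10.1.5.11 self permanent
- After encapsulation the packet leaves through the net0 interface and is sent to the gw0 host.
- gw0 receives the packet, sees that the destination address is 10.1.5.11, consults its routing table and forwards the packet out of eth1. Route: 10.1.5.0/24 dev eth1 proto kernel scope link src 10.1.5.1
- gw0 rewrites the outer Ethernet header on eth1 and delivers the packet to host clab-flannel-vxlan-directrouting-worker.
- Host clab-flannel-vxlan-directrouting-worker sees that the packet is addressed to itself. While processing it, the host finds a VXLAN packet destined for UDP port 8472 and hands it to the application or kernel module listening on UDP 8472.
- After decapsulation the inner packet has the destination address 10.244.2.2. The host routing table sends it to cni0. Route: 10.244.2.0/24 dev cni0 proto kernel scope link src 10.244.2.1
- cni0 looks up the destination in its MAC table (brctl showmacs cni0) and delivers the packet to Pod cni-x2rxr.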

Service network communication

See the Service communication section of the Flannel UDP mode document; the packet forwarding flow is identical.
