Level 1

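In CompassArena, choose the two-model chat mode and chat with InternLM2.5 and any one other model. Collect 5 conversations in which InternLM2.5's output is worse than the other model's, plus 5 good cases for InternLM2.5. Write them up in a Feishu document and submit it at: https://aicarrier.feishu.cn/share/base/form/shrcnZ4bQ4YmhEtMtnKxZUcf1vd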

Assignment link: https://p157xvvpk2d.feishu.cn/docx/KDzJd4Q66o6jhLxPsqYc1G1PnIf

Level 2

Basic task

- Use Lagent to build a custom agent, then deploy and call it with the Lagent Web Demo. Record the reproduction steps and take screenshots.


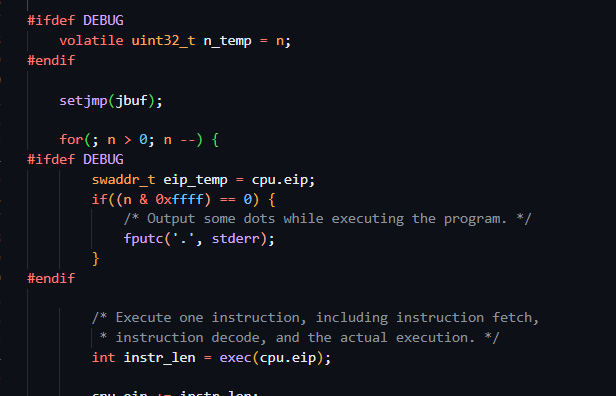

Environment setup

```bash
git clone https://github.com/InternLM/lagent.git
cd lagent && git checkout 81e7ace && pip install -e .
```

Deploy the InternLM2.5-7B-Chat model with LMDeploy:

```bash
model_dir="/home/scy/models/internlm/internlm2_5-7b-chat"  # local model path
lmdeploy serve api_server "$model_dir" --model-name internlm2_5-7b-chat
```
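Before starting the web demo, you can check that the server is up. Below is a minimal standard-library sketch. It assumes two things: the server listens on LMDeploy's default port 23333, since the command above does not set `--server-port`, and it exposes the OpenAI-compatible `/v1/models` route. The helper names `parse_model_ids` and `list_served_models` are hypothetical.

```python
import json
import urllib.request
from typing import List


def parse_model_ids(body: str) -> List[str]:
    """Return the model ids listed in an OpenAI-style /v1/models response body."""
    payload = json.loads(body)
    return [entry['id'] for entry in payload.get('data', [])]


def list_served_models(base_url: str = 'http://0.0.0.0:23333') -> List[str]:
    """Query /v1/models; this performs a real HTTP GET, so the server must be running."""
    with urllib.request.urlopen(f'{base_url}/v1/models', timeout=5) as response:
        return parse_model_ids(response.read().decode('utf-8'))
```

While the server is running, `list_served_models()` should return a list containing `internlm2_5-7b-chat`, the name passed via `--model-name`.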

Build a custom agent on Lagent by creating the file `lagent/actions/magicmaker.py`. The module must be named `magicmaker` to match the `from lagent.actions.magicmaker import MagicMaker` import added to the web demo below. The file contains the following code:

```python
import json

import requests

from lagent.actions.base_action import BaseAction, tool_api
from lagent.actions.parser import BaseParser, JsonParser
from lagent.schema import ActionReturn, ActionStatusCode


class MagicMaker(BaseAction):
    styles_option = [
        'dongman',  # anime
        'guofeng',  # traditional Chinese style
        'xieshi',   # photorealistic
        'youhua',   # oil painting
        'manghe',   # blind-box figurine
    ]
    aspect_ratio_options = [
        '16:9', '4:3', '3:2', '1:1',
        '2:3', '3:4', '9:16'
    ]

    def __init__(self,
                 style='guofeng',
                 aspect_ratio='4:3'):
        super().__init__()
        if style in self.styles_option:
            self.style = style
        else:
            raise ValueError(f'The style must be one of {self.styles_option}')

        if aspect_ratio in self.aspect_ratio_options:
            self.aspect_ratio = aspect_ratio
        else:
            # List the valid options, not the rejected value
            raise ValueError(f'The aspect ratio must be one of {self.aspect_ratio_options}')

    @tool_api
    def generate_image(self, keywords: str) -> dict:
        """Run magicmaker and get the generated image according to the keywords.

        Args:
            keywords (:class:`str`): the keywords to generate image

        Returns:
            :class:`dict`: the generated image
                * image (str): path to the generated image
        """
        try:
            response = requests.post(
                url='https://magicmaker.openxlab.org.cn/gw/edit-anything/api/v1/bff/sd/generate',
                data=json.dumps({
                    "official": True,
                    "prompt": keywords,
                    "style": self.style,
                    "poseT": False,
                    "aspectRatio": self.aspect_ratio
                }),
                headers={'content-type': 'application/json'}
            )
        except Exception as exc:
            return ActionReturn(
                errmsg=f'MagicMaker exception: {exc}',
                state=ActionStatusCode.HTTP_ERROR)
        image_url = response.json()['data']['imgUrl']
        return {'image': image_url}
```
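You can test the request body and the `data.imgUrl` lookup in `generate_image` without the network. The sketch below uses two hypothetical helpers, `build_request_body` and `extract_image_url`, that mirror the fields used above. The response shape `{"data": {"imgUrl": ...}}` is taken from the tool code, not from any published MagicMaker API reference. If the service returns an unexpected body, `extract_image_url` raises a descriptive `ValueError` instead of a bare `KeyError`.

```python
import json
from typing import Any, Dict

# Same option lists as MagicMaker.styles_option / aspect_ratio_options
STYLES = ['dongman', 'guofeng', 'xieshi', 'youhua', 'manghe']
ASPECT_RATIOS = ['16:9', '4:3', '3:2', '1:1', '2:3', '3:4', '9:16']


def build_request_body(keywords: str, style: str = 'guofeng',
                       aspect_ratio: str = '4:3') -> Dict[str, Any]:
    """Validate options and return the JSON body that generate_image sends."""
    if style not in STYLES:
        raise ValueError(f'The style must be one of {STYLES}')
    if aspect_ratio not in ASPECT_RATIOS:
        raise ValueError(f'The aspect ratio must be one of {ASPECT_RATIOS}')
    return {
        'official': True,
        'prompt': keywords,
        'style': style,
        'poseT': False,
        'aspectRatio': aspect_ratio,
    }


def extract_image_url(payload: Dict[str, Any]) -> str:
    """Return data.imgUrl from a decoded response, failing loudly on any other shape."""
    data = payload.get('data')
    if not isinstance(data, dict) or not isinstance(data.get('imgUrl'), str):
        raise ValueError(f'unexpected MagicMaker response: {json.dumps(payload)[:200]}')
    return data['imgUrl']
```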

Modify `lagent/examples/internlm2_agent_web_demo.py` to add the custom MagicMaker tool:

```diff
 from lagent.actions import ActionExecutor, ArxivSearch, IPythonInterpreter
+from lagent.actions.magicmaker import MagicMaker
 from lagent.agents.internlm2_agent import INTERPRETER_CN, META_CN, PLUGIN_CN, Internlm2Agent, Internlm2Protocol
 ...
 action_list = [
     ArxivSearch(),
+    MagicMaker(),
 ]
```

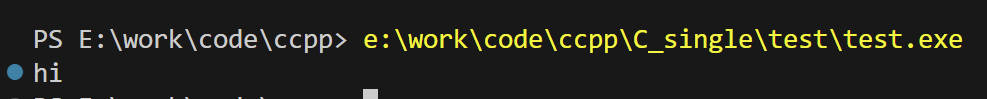

In another terminal window, start the Lagent Web Demo:

```bash
streamlit run examples/internlm2_agent_web_demo.py
```

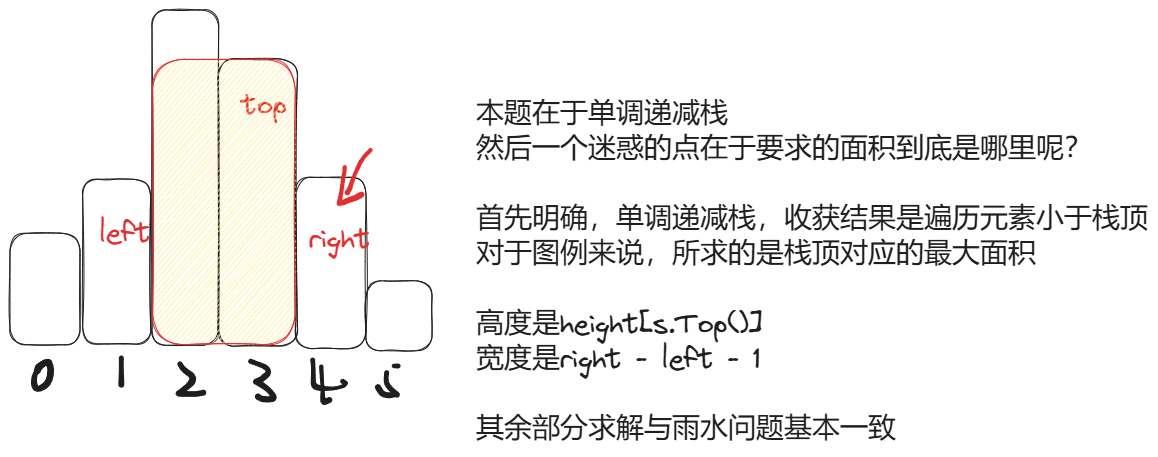

The result is shown below:

Level 3

Basic task

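- Take the internlm2_5-1_8b-chat model with combined W4A16 quantization and KV cache quantization, wrap it as a local API, and hold one conversation with it. The screenshots must show both GPU memory usage and the model's reply. Refer to section 4.1 API Development (required for outstanding students), and use the assignment-version commands in sections 2.2.3 and 4.1.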


Quantize the model with W4A16 by running the following script:

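```bash
# Positional argument: local path of the original model
# --work-dir: where the quantized model is saved
# (comments cannot follow a trailing "\" without breaking the line continuation)
lmdeploy lite auto_awq \
    /home/scy/models/internlm/internlm2_5-1_8b-chat \
    --calib-dataset 'ptb' \
    --calib-samples 128 \
    --calib-seqlen 2048 \
    --w-bits 4 \
    --w-group-size 128 \
    --batch-size 1 \
    --search-scale False \
    --work-dir /home/scy/models/internlm/internlm2_5-1_8b-chat-w4a16-4bit
```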
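`--w-bits 4 --w-group-size 128` means each weight is stored as a 4-bit integer, and every group of 128 weights shares its own scale and zero point. Activations stay in 16-bit (the "A16").

The pure-Python sketch below illustrates only that storage step, using plain min-max asymmetric quantization. It leaves out AWQ's activation-aware scaling, which is what the calibration set above is used for, and LMDeploy's actual packing layout differs. `quantize_groups` and `dequantize_groups` are illustrative names.

```python
from typing import List, Tuple


def quantize_groups(weights: List[float], group_size: int = 128,
                    w_bits: int = 4) -> Tuple[List[int], List[float], List[int]]:
    """Asymmetric min-max quantization with one (scale, zero) pair per group."""
    qmax = (1 << w_bits) - 1  # 15 for 4-bit codes
    codes, scales, zeros = [], [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        # Widen the range to include 0 so the zero point lands in [0, qmax]
        lo, hi = min(min(group), 0.0), max(max(group), 0.0)
        scale = (hi - lo) / qmax if hi > lo else 1.0
        zero = round(-lo / scale)
        scales.append(scale)
        zeros.append(zero)
        for w in group:
            # Clamp to the representable 4-bit range
            codes.append(min(max(round(w / scale) + zero, 0), qmax))
    return codes, scales, zeros


def dequantize_groups(codes: List[int], scales: List[float], zeros: List[int],
                      group_size: int = 128) -> List[float]:
    """Map integer codes back to floats: w ~= (q - zero) * scale."""
    return [(q - zeros[i // group_size]) * scales[i // group_size]
            for i, q in enumerate(codes)]
```

With this scheme, each reconstructed weight is within half a scale step of the original. That rounding error is what AWQ's calibration tries to keep away from the most important channels.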

Run the following command to check how much disk space the quantized model takes:

```bash
du -sh ~/models/internlm/*
```

The result is shown below:

Before quantization the model takes 3.6G of disk space; after quantization it takes 1.5G.
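3.6G / 1.5G is about a 2.4× reduction, not the 4× that going from 16-bit to 4-bit weights might suggest. The sketch below gives one plausible accounting, under two assumptions:

- Some tensors (for example, the token embedding and output head) stay in 16-bit.
- Each 128-weight group carries one fp16 scale and one 4-bit zero point.

The split between quantized and unquantized parameters is a hypothetical input to the function, not a figure read from the model's config.

```python
def estimate_sizes(n_quantized: int, n_kept_fp16: int, group_size: int = 128,
                   w_bits: int = 4, scale_bits: int = 16, zero_bits: int = 4):
    """Return (bytes_before, bytes_after) for fp16 -> W4 group quantization.

    Assumes every parameter starts in 16-bit, only n_quantized of them are
    quantized, and each group stores one scale and one zero point.
    """
    bytes_before = 2 * (n_quantized + n_kept_fp16)
    bits_per_weight = w_bits + (scale_bits + zero_bits) / group_size
    bytes_after = n_quantized * bits_per_weight / 8 + 2 * n_kept_fp16
    return bytes_before, bytes_after
```

If every parameter were quantized, the ratio would approach 16 / 4.156 ≈ 3.85. The larger the share left in fp16, the further the ratio drops toward the observed 2.4.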


Apply int4 KV cache quantization to the W4A16-quantized model by running the following script:

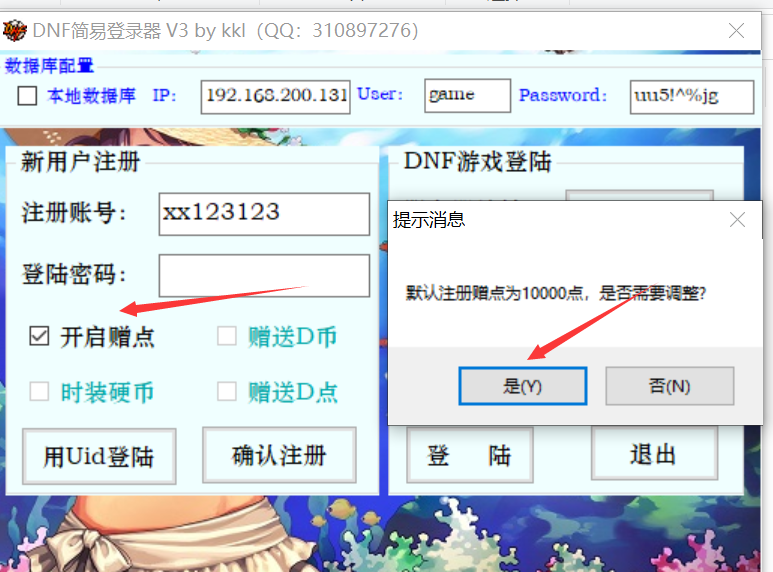

```bash
lmdeploy serve api_server \
    /home/scy/models/internlm/internlm2_5-1_8b-chat-w4a16-4bit \
    --model-format awq \
    --quant-policy 4 \
    --cache-max-entry-count 0.4 \
    --server-name 0.0.0.0 \
    --server-port 23333 \
    --tp 1
```

Check GPU memory usage, as shown below:
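In the command above, two flags control the KV cache:

- `--quant-policy 4` stores the KV cache in int4 (LMDeploy uses `8` for int8).
- `--cache-max-entry-count 0.4` caps the cache at 40% of the GPU memory left free after the weights load, as we understand recent LMDeploy versions.

The sketch below gives a rough estimate of how many tokens that budget holds. Per-head quantization scales are ignored, and any layer/head numbers you pass in should be checked against the model's `config.json`.

```python
def kv_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                       kv_bits: int) -> float:
    """Bytes one token occupies in the K plus V cache across all layers."""
    return 2 * num_layers * num_kv_heads * head_dim * kv_bits / 8


def kv_token_capacity(free_bytes: float, cache_ratio: float, num_layers: int,
                      num_kv_heads: int, head_dim: int, kv_bits: int) -> int:
    """Tokens that fit in cache_ratio * free_bytes (quantization scales ignored)."""
    budget = free_bytes * cache_ratio
    return int(budget // kv_bytes_per_token(num_layers, num_kv_heads,
                                            head_dim, kv_bits))
```

For example, take hypothetical dimensions of 24 layers, 8 KV heads and head_dim 128. Int4 storage then needs 24,576 bytes per token versus 98,304 for fp16, so the same 40% budget holds roughly four times as many tokens.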


Run the following command to call the deployed API:

```bash
lmdeploy serve api_client http://0.0.0.0:23333
```

The conversation result is shown below:
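Besides the interactive `api_client`, you can call the server's OpenAI-compatible `/v1/chat/completions` route from code. The standard-library sketch below uses hypothetical helpers: `build_chat_request`, `extract_reply` and `chat`. The model name should be whatever `/v1/models` reports. No API key is sent, because the server above was started without `--api-keys`.

```python
import json
import urllib.request
from typing import Any, Dict


def build_chat_request(model: str, prompt: str,
                       temperature: float = 0.8) -> Dict[str, Any]:
    """OpenAI-style chat completion body with a single user turn."""
    return {
        'model': model,
        'messages': [{'role': 'user', 'content': prompt}],
        'temperature': temperature,
        'stream': False,
    }


def extract_reply(body: str) -> str:
    """Return the assistant text from a non-streaming completion response."""
    return json.loads(body)['choices'][0]['message']['content']


def chat(base_url: str, model: str, prompt: str) -> str:
    """POST one prompt; requires the api_server above to be running."""
    request = urllib.request.Request(
        f'{base_url}/v1/chat/completions',
        data=json.dumps(build_chat_request(model, prompt)).encode('utf-8'),
        headers={'Content-Type': 'application/json'},
        method='POST')
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_reply(response.read().decode('utf-8'))
```

Usage, with the server running: `chat('http://0.0.0.0:23333', model_id, 'Hello')`, where `model_id` is taken from `/v1/models`.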

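- Use the Function call feature to have the model make a simple "add" and "multiply" function call. The screenshots must show the tool calls in the model's reply. Refer to section 4.2 Function call (optional).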


Deploy the internlm2.5-7b-chat model by running the following script:

```bash
model_dir="/home/scy/models/internlm/internlm2_5-7b-chat"  # local model path
lmdeploy serve api_server "$model_dir" --server-port 23333 --api-keys internlm
```

Create a new file `internlm2_5_func.py` with the following code:

```python
import json

from openai import OpenAI


def add(a: int, b: int):
    return a + b


def mul(a: int, b: int):
    return a * b


# Dispatch table instead of eval(), so model output is never executed as code
available_functions = {'add': add, 'mul': mul}

tools = [{
    'type': 'function',
    'function': {
        'name': 'add',
        'description': 'Compute the sum of two numbers',
        'parameters': {
            'type': 'object',
            'properties': {
                'a': {'type': 'integer', 'description': 'A number'},  # JSON Schema type name
                'b': {'type': 'integer', 'description': 'A number'},
            },
            'required': ['a', 'b'],
        },
    }
}, {
    'type': 'function',
    'function': {
        'name': 'mul',
        'description': 'Calculate the product of two numbers',
        'parameters': {
            'type': 'object',
            'properties': {
                'a': {'type': 'integer', 'description': 'A number'},
                'b': {'type': 'integer', 'description': 'A number'},
            },
            'required': ['a', 'b'],
        },
    }
}]
messages = [{'role': 'user', 'content': 'Compute (3+5)*2'}]

client = OpenAI(
    api_key='internlm',  # must match --api-keys used when starting the server
    base_url='http://0.0.0.0:23333/v1')
model_name = client.models.list().data[0].id

# Round 1: the model is expected to call add(3, 5)
response = client.chat.completions.create(
    model=model_name,
    messages=messages,
    temperature=0.8,
    top_p=0.8,
    stream=False,
    tools=tools)
print(response)
func1_name = response.choices[0].message.tool_calls[0].function.name
func1_args = response.choices[0].message.tool_calls[0].function.arguments  # JSON string
func1_out = available_functions[func1_name](**json.loads(func1_args))
print(func1_out)

# Feed the result back as an 'environment' message named 'plugin'
messages.append({
    'role': 'assistant',
    'content': response.choices[0].message.content
})
messages.append({
    'role': 'environment',
    'content': f'3+5={func1_out}',
    'name': 'plugin'
})

# Round 2: the model is expected to call mul(8, 2)
response = client.chat.completions.create(
    model=model_name,
    messages=messages,
    temperature=0.8,
    top_p=0.8,
    stream=False,
    tools=tools)
print(response)
func2_name = response.choices[0].message.tool_calls[0].function.name
func2_args = response.choices[0].message.tool_calls[0].function.arguments
func2_out = available_functions[func2_name](**json.loads(func2_args))
print(func2_out)
```
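The script above hard-codes exactly two rounds. The same flow can also be written as a loop that keeps executing tool calls until the model stops requesting them. The sketch below runs offline:

- `scripted_model` is a hypothetical stand-in that plans `add(3, 5)` and then `mul(8, 2)`, the way the model is expected to. In real use it would be replaced by a function wrapping `client.chat.completions.create(...)`.
- For brevity it appends only the `environment` message; the original script also appends the assistant turn first.

```python
import json
from typing import Callable, Dict, List, Optional, Tuple


def add(a: int, b: int) -> int:
    return a + b


def mul(a: int, b: int) -> int:
    return a * b


TOOLS: Dict[str, Callable[..., int]] = {'add': add, 'mul': mul}

# A model step sees the history and returns (tool_name, json_arguments),
# or None once it no longer wants to call a tool.
ModelStep = Callable[[List[dict]], Optional[Tuple[str, str]]]


def run_tool_loop(messages: List[dict], model_step: ModelStep,
                  max_rounds: int = 5) -> List[int]:
    """Execute tool calls until the model stops asking, returning each result."""
    results = []
    for _ in range(max_rounds):
        call = model_step(messages)
        if call is None:
            break
        name, arguments = call
        out = TOOLS[name](**json.loads(arguments))  # no eval() on model output
        results.append(out)
        # Feed the result back as an 'environment' message named 'plugin'
        messages.append({'role': 'environment', 'content': f'{name} -> {out}',
                         'name': 'plugin'})
    return results


def scripted_model(messages: List[dict]) -> Optional[Tuple[str, str]]:
    """Offline stand-in for the LLM, solving '(3+5)*2' in two tool calls."""
    tool_msgs = [m for m in messages if m['role'] == 'environment']
    if not tool_msgs:
        return 'add', json.dumps({'a': 3, 'b': 5})
    if len(tool_msgs) == 1:
        previous = int(tool_msgs[0]['content'].split('->')[1])
        return 'mul', json.dumps({'a': previous, 'b': 2})
    return None
```

With `history = [{'role': 'user', 'content': 'Compute (3+5)*2'}]`, calling `run_tool_loop(history, scripted_model)` returns `[8, 16]`. The `max_rounds` cap guards against a model that never stops requesting tools.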

The result is shown below: