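Questions to keep in mind

- How is BERT pre-trained, and which two tasks are used during pre-training?

- This tutorial fine-tunes BERT on the SQuAD dataset for question answering. Can you fine-tune BERT on the IMDB movie-review dataset instead and improve its accuracy?

Reference answers are given at the end of the article.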

I. Introduction to BERT

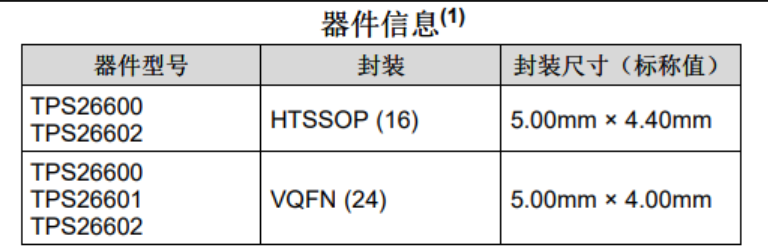

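BERT stands for Bidirectional Encoder Representations from Transformers. It was proposed by Google in 2018, and its core architecture is a multi-layer Transformer encoder. It is pre-trained with two tasks: Masked Language Model (MLM), in which some input tokens are hidden and the model predicts them from the context on both sides, and Next Sentence Prediction (NSP), in which the model judges whether the second of two sentences actually follows the first. For a downstream task, the model is fine-tuned by adding a few extra layers on top of the pre-trained model.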
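To make the MLM task more concrete, here is a minimal sketch of the masking rule described in the BERT paper: roughly 15% of the tokens are selected for prediction, and of those about 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The toy vocabulary, the `mask_tokens` helper, and the whitespace tokenization are assumptions made for illustration; this is not the actual pre-training code.

```python
import random

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}
# Toy vocabulary used for random replacement (an assumption for illustration).
VOCAB = ["the", "capital", "of", "china", "is", "beijing", "city", "river"]


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels) following BERT's 80/10/10 rule.

    labels[i] holds the original token at positions the model must predict,
    and None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue  # special token, or not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK                # about 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(VOCAB)   # about 10%: replace with a random token
        # remaining 10%: keep the original token
    return masked, labels


tokens = "[CLS] the capital of china is beijing [SEP]".split()
# mask_prob is raised to 0.5 here only so that a short sentence shows some masking.
masked, labels = mask_tokens(tokens, mask_prob=0.5, seed=1)
print(masked)
print(labels)
```

Positions where `labels` is `None` do not contribute to the MLM loss; the model is only asked to recover the tokens at the selected positions.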

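Fine-tuning BERT relies on the HuggingFace components, so it is worth reading this article first: "HuggingFace Core Components and Hands-On Applications" (HuggingFace 核心组件及应用实战).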

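II. BERT in Practice: Question Answering with Off-the-Shelf BERT

Let's first take the original BERT checkpoint released by Google, without any fine-tuning, and see how it does on a question-answering task. The steps are:

- Prepare the question and its context for the question-answering task

- Download the original BERT with a question-answering head

- Run inference with BERT directly to answer the question

The code (BERT_for_QA.py) is as follows: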

    from transformers import BertTokenizer, BertForQuestionAnswering
    import torch
    import numpy as np

    # Set the random seed for PyTorch and NumPy
    torch.manual_seed(0)
    np.random.seed(0)

    tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
    model = BertForQuestionAnswering.from_pretrained('bert-base-uncased')

    question, text = "What is the capital of China?", "The capital of China is Beijing."
    inputs = tokenizer.encode_plus(question, text, add_special_tokens=True, return_tensors="pt")

    with torch.no_grad():
        outputs = model(**inputs)

    answer_start_index = torch.argmax(outputs.start_logits)
    answer_end_index = torch.argmax(outputs.end_logits) + 1

    predict_answer_tokens = inputs['input_ids'][0][answer_start_index:answer_end_index]
    predicted_answer = tokenizer.decode(predict_answer_tokens)
    print("What is the capital of China?", predicted_answer)

The output is as follows:

C:\Users\Lenovo\anaconda3\envs\pytorch211\python.exe "BERT_for_QA.py"

Some weights of BertForQuestionAnswering were not initialized from the model checkpoint at bert-base-uncased and are newly initialized: ['qa_outputs.bias', 'qa_outputs.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

What is the capital of China? the

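Here, our question was: What is the capital of China?

The context we provided: The capital of China is Beijing.

BERT's answer: the

😓 As you can see, the off-the-shelf BERT gives a nonsensical answer. It has never been trained on question answering: as the warning in the output says, the qa_outputs head is newly initialized, so its predictions are essentially random. Next, let's fine-tune BERT and see what changes.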

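III. BERT in Practice: Fine-Tuning BERT for Question Answering

We now fine-tune BERT on the SQuAD dataset, have it answer the same question again, and compare the result. First, a quick introduction to SQuAD.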

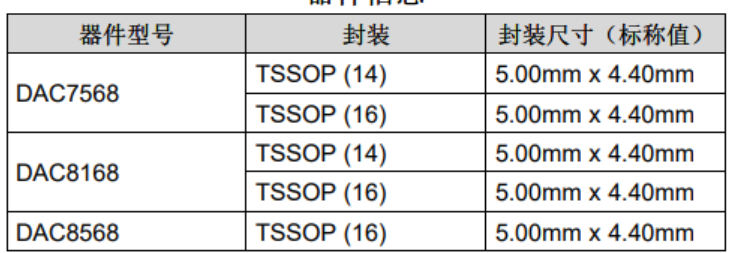

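1. The SQuAD Dataset

SQuAD (Stanford Question Answering Dataset) is a standard question-answering dataset released by Stanford University. Its questions and answers are built on passages taken from Wikipedia.

Each question in SQuAD comes with a context passage, and the answer must be contained in that context (SQuAD 2.0 additionally includes questions that cannot be answered from the context). It is essentially an extractive task: from a long piece of text, pick a span of adjacent words that answers the question.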
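To illustrate the extractive format, here is a hand-written record that mimics the SQuAD 2.0 JSON layout (`context`, `qas`, `answers`, `answer_start`, `is_impossible`). The record content and the `extract_answer` helper are made up for illustration and are not taken from the dataset.

```python
# A made-up paragraph entry laid out like SQuAD 2.0 (not real dataset content).
paragraph = {
    "context": "The capital of China is Beijing.",
    "qas": [
        {
            "id": "demo-1",
            "question": "What is the capital of China?",
            "is_impossible": False,
            "answers": [{"text": "Beijing", "answer_start": 24}],
        },
        {
            "id": "demo-2",
            "question": "What is the population of Beijing?",
            "is_impossible": True,  # SQuAD 2.0 also has unanswerable questions
            "answers": [],
        },
    ],
}


def extract_answer(context, qa):
    """Return the answer span cut out of the context, or None if unanswerable."""
    if qa["is_impossible"]:
        return None
    answer = qa["answers"][0]
    start = answer["answer_start"]       # character offset into the context
    end = start + len(answer["text"])
    return context[start:end]


for qa in paragraph["qas"]:
    print(qa["question"], "->", extract_answer(paragraph["context"], qa))
```

The answer is stored as a character offset into the context, which is why the feature-conversion step has to map it onto token positions before BERT can be trained on it.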

2、BERT 微调流程

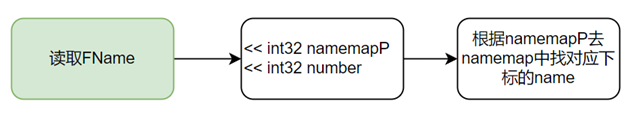

BERT 预训练+微调的核心流程图如下:

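There are generally two ways to fine-tune BERT:

1. Fine-tune only the task-specific output head (the classification head) and keep the large body of pre-trained BERT parameters unchanged. BERT already carries a great deal of natural-language knowledge, so we only need to tell it what to do.

2. Fine-tune all of BERT's parameters along with the head.

The first approach is usually enough; the second is worth adopting only when your task is relatively complex or unusual.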
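As a rough sketch of the first approach, the encoder weights can be frozen so that only the question-answering head is updated. To keep the example runnable without downloading any weights, it builds a tiny randomly initialized model from a `BertConfig` whose sizes are arbitrary assumptions; with the real checkpoint the same loop would be applied to the model returned by `from_pretrained`. Note that the fine-tuning script later in this article passes all of `model.parameters()` to the optimizer, which corresponds to the second approach.

```python
import torch
from transformers import BertConfig, BertForQuestionAnswering

# Tiny config with arbitrary sizes so nothing is downloaded (illustration only).
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = BertForQuestionAnswering(config)

# Approach 1: freeze the BERT encoder and leave only the QA head trainable.
for param in model.bert.parameters():
    param.requires_grad = False

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # only the qa_outputs parameters remain trainable

# Hand only the trainable parameters to the optimizer.
optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=5e-5
)
```

Freezing the encoder makes each step cheaper and reduces memory use, at the cost of giving the model less room to adapt to the task.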

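Let's lay out the fine-tuning steps to make the code easier to write:

1. Prepare the question and its context for the question-answering task

2. Download the original BERT with a question-answering head

3. Convert the SQuAD dataset into input features

4. Fine-tune BERT on SQuAD

5. Run inference with the fine-tuned BERT to answer the question

To speed up training, step 3 can be done ahead of time.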

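3. Converting SQuAD into BERT Input Features

Here we download the SQuAD 2.0 dataset and convert it into BERT input features ahead of time with a script. The code (squad_feature_creation.py) is as follows: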

    import pickle
    from transformers.data.processors.squad import SquadV2Processor, squad_convert_examples_to_features
    from transformers import BertTokenizer

    # Initialize the SQuAD processor, the dataset, and the tokenizer.
    # get_train_examples expects train-v2.0.json inside the 'SQuAD' directory.
    processor = SquadV2Processor()
    train_examples = processor.get_train_examples('SQuAD')
    tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

    if __name__ == '__main__':
        # Convert the SQuAD 2.0 examples into BERT input features
        train_features = squad_convert_examples_to_features(
            examples=train_examples,
            tokenizer=tokenizer,
            max_seq_length=384,
            doc_stride=128,
            max_query_length=64,
            is_training=True,
            return_dataset=False,
            threads=1
        )

        # Save the features to disk
        with open('SQuAD_train_features.pkl', 'wb') as f:
            pickle.dump(train_features, f)

Running the script locally produces a SQuAD_train_features.pkl file. The output is as follows:

C:\Users\Lenovo\anaconda3\envs\pytorch211\python.exe "squad_feature_creation.py"

100%|██████████| 442/442 [00:18<00:00, 24.29it/s]

100%|██████████| 442/442 [00:18<00:00, 23.87it/s]

convert squad examples to features: 100%|██████████| 130319/130319 [08:20<00:00, 260.32it/s]

add example index and unique id: 100%|██████████| 130319/130319 [00:00<00:00, 1472740.97it/s]
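The `max_seq_length=384` and `doc_stride=128` arguments control how a context that does not fit into a single input is split into overlapping windows. The sketch below illustrates the idea in plain Python, following the scheme of the original BERT SQuAD script in which each window starts `doc_stride` tokens after the previous one. The `split_into_windows` helper and the token counts are assumptions for illustration; this is not the library's implementation.

```python
def split_into_windows(num_doc_tokens, max_tokens_for_doc, doc_stride):
    """Return (start, length) pairs of overlapping windows covering the document."""
    windows, start = [], 0
    while start < num_doc_tokens:
        length = min(num_doc_tokens - start, max_tokens_for_doc)
        windows.append((start, length))
        if start + length == num_doc_tokens:
            break  # the last window reaches the end of the document
        start += min(length, doc_stride)
    return windows


max_seq_length, doc_stride = 384, 128
query_tokens = 10  # assumed length of the tokenized question
# Room left for the context after [CLS] question [SEP] context [SEP].
max_tokens_for_doc = max_seq_length - query_tokens - 3

for start, length in split_into_windows(600, max_tokens_for_doc, doc_stride):
    print(f"window covers context tokens {start}..{start + length - 1}")
```

This splitting is also why the number of features can exceed the number of examples: a single long context yields several windows, and each window becomes its own feature.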

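4. Fine-Tuning BERT and Answering the Question

Now comes the core step, fine-tuning BERT. The code (BERT_SQuAD_Finetuned.py) is as follows (the full code is in the attachment):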

    import pickle

    import torch
    from torch.utils.data import DataLoader, RandomSampler, TensorDataset
    # Note: transformers.AdamW is deprecated (see the FutureWarning in the output below);
    # torch.optim.AdamW is the suggested replacement.
    from transformers import BertForQuestionAnswering, BertTokenizer, AdamW

    # Use the GPU if one is available
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # Download the BERT that has not been fine-tuned
    tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
    model = BertForQuestionAnswering.from_pretrained('bert-base-uncased').to(device)

    # Ask the test question using whatever the global `model` currently is
    def china_capital():
        question, text = "What is the capital of China?", "The capital of China is Beijing."
        inputs = tokenizer.encode_plus(question, text, add_special_tokens=True, return_tensors="pt")
        model.eval()  # turn off dropout for inference
        with torch.no_grad():
            outputs = model(**inputs.to(device))
        answer_start_index = torch.argmax(outputs.start_logits)
        answer_end_index = torch.argmax(outputs.end_logits) + 1
        predict_answer_tokens = inputs['input_ids'][0][answer_start_index:answer_end_index]
        predicted_answer = tokenizer.decode(predict_answer_tokens)
        print("What is the capital of China?", predicted_answer)

    # Evaluate BERT before fine-tuning
    china_capital()

    # Load the pre-computed SQuAD 2.0 features
    with open('SQuAD_train_features.pkl', 'rb') as f:
        train_features = pickle.load(f)

    # Training hyperparameters
    train_batch_size = 8
    num_epochs = 3
    learning_rate = 5e-5

    # Convert the features to PyTorch tensors
    all_input_ids = torch.tensor([f.input_ids for f in train_features], dtype=torch.long)
    all_attention_mask = torch.tensor([f.attention_mask for f in train_features], dtype=torch.long)
    all_token_type_ids = torch.tensor([f.token_type_ids for f in train_features], dtype=torch.long)
    all_start_positions = torch.tensor([f.start_position for f in train_features], dtype=torch.long)
    all_end_positions = torch.tensor([f.end_position for f in train_features], dtype=torch.long)

    # For a quick demo, train on only the first 100 features
    num_samples = 100
    train_dataset = TensorDataset(
        all_input_ids[:num_samples],
        all_attention_mask[:num_samples],
        all_token_type_ids[:num_samples],
        all_start_positions[:num_samples],
        all_end_positions[:num_samples],
    )
    train_sampler = RandomSampler(train_dataset)
    train_dataloader = DataLoader(train_dataset, sampler=train_sampler, batch_size=train_batch_size)

    # Load a fresh BERT model and the optimizer
    model = BertForQuestionAnswering.from_pretrained('bert-base-uncased').to(device)
    optimizer = AdamW(model.parameters(), lr=learning_rate)

    # Fine-tune BERT
    for epoch in range(num_epochs):
        for step, batch in enumerate(train_dataloader):
            model.train()
            optimizer.zero_grad()
            input_ids, attention_mask, token_type_ids, start_positions, end_positions = tuple(t.to(device) for t in batch)
            outputs = model(input_ids=input_ids, attention_mask=attention_mask, token_type_ids=token_type_ids, start_positions=start_positions, end_positions=end_positions)
            loss = outputs.loss
            loss.backward()
            optimizer.step()

            # Print the training loss every 5 steps
            if step % 5 == 0:
                print(f"Epoch [{epoch+1}/{num_epochs}], Step [{step+1}/{len(train_dataloader)}], Loss: {loss.item():.4f}")

    # Evaluate BERT after fine-tuning
    china_capital()

    # Save the fine-tuned model
    model.save_pretrained("SQuAD_finetuned_bert")

The output is as follows:

C:\Users\Lenovo\anaconda3\envs\pytorch211\python.exe "BERT_SQuAD_Finetuned.py"

Some weights of BertForQuestionAnswering were not initialized from the model checkpoint at bert-base-uncased and are newly initialized: ['qa_outputs.bias', 'qa_outputs.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

What is the capital of China?

Some weights of BertForQuestionAnswering were not initialized from the model checkpoint at bert-base-uncased and are newly initialized: ['qa_outputs.bias', 'qa_outputs.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

C:\Users\Lenovo\anaconda3\envs\pytorch211\Lib\site-packages\transformers\optimization.py:429: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning

  warnings.warn(

Epoch [1/3], Step [1/13], Loss: 5.9771

Epoch [1/3], Step [6/13], Loss: 5.2767

Epoch [1/3], Step [11/13], Loss: 4.7677

Epoch [2/3], Step [1/13], Loss: 3.9339

Epoch [2/3], Step [6/13], Loss: 4.0097

Epoch [2/3], Step [11/13], Loss: 4.0402

Epoch [3/3], Step [1/13], Loss: 2.9276

Epoch [3/3], Step [6/13], Loss: 2.9915

Epoch [3/3], Step [11/13], Loss: 2.4421

What is the capital of China? beijing

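Here, our question was: What is the capital of China?

The context we provided: The capital of China is Beijing.

Answer before fine-tuning BERT: (empty)

Answer after fine-tuning BERT: beijing

👍 This time the answer is correct!

Before fine-tuning, BERT returned an empty answer to the question. After just 3 epochs of training with a batch size of 8 on the first 100 training features, it correctly answers beijing. Nice!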
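Two numbers in the training log above can be sanity-checked with a little arithmetic. With 100 features and a batch size of 8 there are ceil(100 / 8) = 13 steps per epoch, matching `Step [1/13]`. And since the question-answering loss is the average of two cross-entropy terms (start position and end position) over the 384 input positions, a freshly initialized head that guesses roughly uniformly should start near ln(384), which is close to the first logged loss of 5.9771. The snippet below is a small sketch of that calculation in plain Python; the gold positions 42 and 43 are arbitrary placeholders.

```python
import math

num_features, batch_size, max_seq_length = 100, 8, 384

# Number of optimizer steps per epoch.
steps_per_epoch = math.ceil(num_features / batch_size)
print(steps_per_epoch)


def cross_entropy(logits, target):
    """Cross-entropy of a single example: -log softmax(logits)[target]."""
    log_norm = math.log(sum(math.exp(x) for x in logits))
    return log_norm - logits[target]


# A head with no preference produces equal logits at every position.
uniform_logits = [0.0] * max_seq_length
start_loss = cross_entropy(uniform_logits, target=42)  # arbitrary gold start
end_loss = cross_entropy(uniform_logits, target=43)    # arbitrary gold end
qa_loss = (start_loss + end_loss) / 2                  # average of both terms
print(round(qa_loss, 4))
```

A first-step loss far above this value would suggest a problem with the inputs or labels rather than merely an untrained head.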

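IV. Summary

We first covered the basics of BERT: a multi-layer Transformer encoder whose two pre-training tasks, MLM and NSP, give it a broad understanding of language. We then looked at how pre-training and fine-tuning fit together, and worked through two hands-on exercises: the first used the original BERT for question answering, and the second used BERT fine-tuned on SQuAD for the same task. The results show that BERT gives a much better answer once it has been fine-tuned on the specific task.

If you have not worked with BERT before, it is worth running the code yourself to get a feel for the whole inference and fine-tuning process.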

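V. References and Attachments

Answers to the opening questions:

- How is BERT pre-trained, and which two tasks are used during pre-training?

  - See the BERT pre-training flow diagram; the two tasks are MLM and NSP.

- This tutorial fine-tunes BERT on the SQuAD dataset for question answering. Can you fine-tune BERT on the IMDB movie-review dataset instead and improve its accuracy?

  - This one is left as an exercise. You can start with "HuggingFace Core Components and Hands-On Applications" (HuggingFace 核心组件及应用实战) to learn how to build a movie-review sentiment classification task with HuggingFace and the IMDB dataset.

Content reference: Huang Jia's course "ChatGPT and Pre-trained Models" (《ChatGPT和预训练模型课》)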