Model URL

https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct

- Introduction

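Qwen2.5 is the new generation of open-source models in Alibaba Cloud's Tongyi Qianwen (Qwen) family. The flagship Qwen2.5-72B outperforms Llama 405B, once again taking the top spot among open-source LLMs worldwide. The Qwen2.5 series covers large language models in multiple sizes as well as multimodal, math, and code models. Each size comes in base, instruction-tuned, and quantized versions, for a total of more than 100 released models, an industry record.

- Environment check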

System environment

# lsb_release -a

No LSB modules are available.

Distributor ID:Ubuntu

Description:Ubuntu 22.04.5 LTS

Release:22.04

Codename:jammy

# uname -a

Linux AiServer003187 6.8.0-47-generic #47~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Wed Oct 2 16:16:55 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

Software environment

# nvidia-smi

Tue Nov 19 15:36:19 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.120 Driver Version: 550.120 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4090 Off | 00000000:18:00.0 Off | Off |

| 30% 32C P8 30W / 450W | 18580MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 NVIDIA GeForce RTX 4090 Off | 00000000:3B:00.0 Off | Off |

| 30% 31C P8 35W / 450W | 1366MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 2 NVIDIA GeForce RTX 4090 Off | 00000000:86:00.0 Off | Off |

| 30% 32C P8 19W / 450W | 524MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 2623 G /usr/lib/xorg/Xorg 4MiB |

| 0 N/A N/A 954745 C /root/anaconda3/bin/python 808MiB |

| 0 N/A N/A 1677511 C /usr/local/bin/python 17736MiB |

| 1 N/A N/A 2623 G /usr/lib/xorg/Xorg 4MiB |

| 1 N/A N/A 28363 C python 492MiB |

| 1 N/A N/A 954745 C /root/anaconda3/bin/python 852MiB |

| 2 N/A N/A 2623 G /usr/lib/xorg/Xorg 4MiB |

| 2 N/A N/A 954745 C /root/anaconda3/bin/python 506MiB |

+-----------------------------------------------------------------------------------------+
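The table shows GPU 0 already holding about 18.5 GiB. The scripts below use `device_map="auto"`, which may place weights on any visible GPU. The following is a minimal sketch, assuming you want to pin the model to the least-loaded card. It queries `nvidia-smi --query-gpu=index,memory.used,memory.total --format=csv,noheader,nounits`, picks the index with the most free memory, and prints a `CUDA_VISIBLE_DEVICES` setting. The helper names `parse_gpu_memory` and `pick_freest_gpu` are hypothetical. The fallback string simply reuses the numbers from the table above.

```python
import subprocess

# nvidia-smi query: one CSV line per GPU, memory values in MiB, no header
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text: str) -> list[tuple[int, int, int]]:
    """Parse lines like '0, 18580, 24564' into (index, used_mib, total_mib)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, used, total = (int(field.strip()) for field in line.split(","))
        gpus.append((index, used, total))
    return gpus


def pick_freest_gpu(csv_text: str) -> int:
    """Return the index of the GPU with the most free memory (total - used)."""
    gpus = parse_gpu_memory(csv_text)
    return max(gpus, key=lambda gpu: gpu[2] - gpu[1])[0]


if __name__ == "__main__":
    try:
        output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        # No usable NVIDIA driver here: fall back to the values from the table above
        output = "0, 18580, 24564\n1, 1366, 24564\n2, 524, 24564"
    print(f"export CUDA_VISIBLE_DEVICES={pick_freest_gpu(output)}")
```

For the numbers in the table above, it selects GPU 2. Exporting that variable before `python main.py` hides the other cards from PyTorch.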

# nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Tue_Feb_27_16:19:38_PST_2024

Cuda compilation tools, release 12.4, V12.4.99

Build cuda_12.4.r12.4/compiler.33961263_0

- Setup

- Create a conda virtual environment

# conda create -n qwen python=3.12

# conda activate qwen

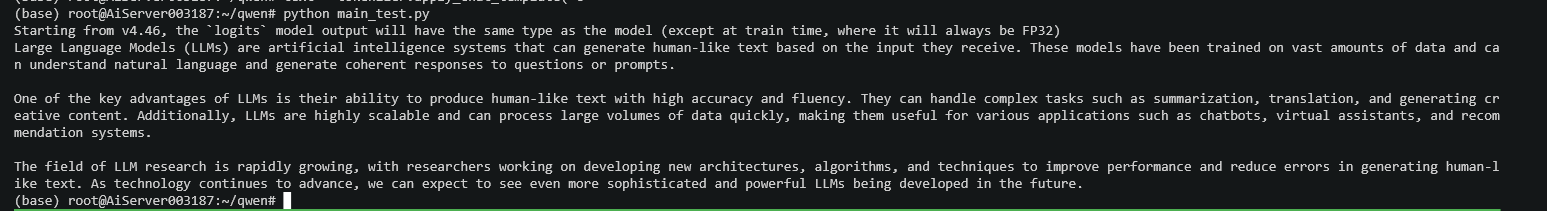

- Sample code

# cat main_test.py

from transformers import AutoModelForCausalLM, AutoTokenizer

# Local model directory (a Hugging Face repo id such as "Qwen/Qwen2.5-0.5B-Instruct" also works)
model_name = "model/Qwen2.5-0.5B-Instruct"

# Load the model; dtype and device placement are chosen automatically
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

prompt = "Give me a short introduction to large language model."
# prompt = "你是谁"  # "Who are you?"
messages = [
    {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
    {"role": "user", "content": prompt}
]
# Render the conversation with the model's chat template and open an assistant turn
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=512
)
# generate() returns prompt + new tokens; keep only the newly generated part
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
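`apply_chat_template` turns the `messages` list into the single prompt string that the model was fine-tuned on. Qwen2.5 uses a ChatML-style template. The function below is a simplified, hypothetical re-implementation (`render_chatml` is not a transformers API). It ignores tool calls and the default system prompt that the real template inserts when none is given. The authoritative template is the `chat_template` field in the model's `tokenizer_config.json`.

```python
def render_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Approximate the ChatML-style prompt produced by Qwen2.5's chat template.

    Simplified sketch: no tool calls, no default system prompt injection.
    """
    parts = []
    for message in messages:
        # Each turn is wrapped as <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues speaking as the assistant
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    demo = [
        {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
        {"role": "user", "content": "Give me a short introduction to large language model."},
    ]
    print(render_chatml(demo))
```

Comparing this output with `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` is a quick way to see what the model actually receives. It also explains the slicing step in the script: `generate` returns this whole prompt followed by the new tokens.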

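Run output

The script loads the model from the local directory model/Qwen2.5-0.5B-Instruct, so the model files must be downloaded there before the first run. If model_name is instead set to the Hugging Face repo id Qwen/Qwen2.5-0.5B-Instruct, transformers downloads the model automatically on first use.

Download the model manually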

# pip install modelscope

# modelscope download --model Qwen/Qwen2.5-0.5B-Instruct --local_dir model/Qwen2.5-0.5B-Instruct
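Before pointing `from_pretrained` at the local directory, it can help to confirm that the download is complete. This is a minimal sketch, assuming the directory follows the usual Hugging Face layout: `config.json`, `tokenizer_config.json`, and at least one `*.safetensors` weight file. `missing_model_files` is a hypothetical helper. The expected file list is an assumption, not a check that transformers performs in this form.

```python
from pathlib import Path

# Assumed minimal set of files for a transformers checkpoint directory
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def missing_model_files(model_dir: str) -> list[str]:
    """Return the expected files that are absent from model_dir."""
    path = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (path / name).is_file()]
    # Weights may be one file or sharded across several *.safetensors files
    if not any(path.glob("*.safetensors")):
        missing.append("*.safetensors")
    return missing


if __name__ == "__main__":
    problems = missing_model_files("model/Qwen2.5-0.5B-Instruct")
    print("model directory looks complete" if not problems else f"missing: {problems}")
```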

- OpenAI-compatible API

Install software and dependencies

# pip install fastapi

# pip install transformers

# pip install torch

# pip install 'accelerate>=0.26.0'

# pip install uvicorn

Main program

# cat main.py

from typing import List

import torch
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel
from transformers import AutoModelForCausalLM, AutoTokenizer

# FastAPI instance
app = FastAPI()

# Request body schema
class Message(BaseModel):
    role: str
    content: str

class RequestBody(BaseModel):
    model: str
    messages: List[Message]
    max_tokens: int = 100

# Local model path (replace with your own)
local_model_path = "model/Qwen2.5-0.5B-Instruct"

# Load the local model and tokenizer
model = AutoModelForCausalLM.from_pretrained(
    local_model_path,
    torch_dtype=torch.float16,  # adjust to your hardware
    device_map="auto",  # place weights on available devices automatically
)
tokenizer = AutoTokenizer.from_pretrained(local_model_path)

# Text generation API route
@app.post("/v1/chat/completions")
async def generate_chat_response(request: RequestBody):
    model_name = request.model
    try:
        # Render the conversation with the model's own chat template instead of
        # joining "role: content" lines, so the prompt matches the fine-tuning format
        chat = [{"role": m.role, "content": m.content} for m in request.messages]
        text = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)
        inputs = tokenizer(text, return_tensors="pt").to(model.device)
        generated_ids = model.generate(**inputs, max_new_tokens=request.max_tokens)
        # generate() returns prompt + new tokens; decode only the new part
        new_tokens = generated_ids[0][inputs.input_ids.shape[1]:]
        response = tokenizer.decode(new_tokens, skip_special_tokens=True)
        # Format the response in OpenAI chat-completion style
        return {
            "id": "some-id",  # generate a unique ID as needed
            "object": "chat.completion",
            "created": 1678157176,  # timestamp; replace as needed
            "model": model_name,
            "choices": [{
                "message": {"role": "assistant", "content": response},
                "finish_reason": "stop",
                "index": 0,
            }],
        }
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))

# Start the FastAPI application
if __name__ == "__main__":
    import uvicorn
    uvicorn.run(app, host="0.0.0.0", port=8000)

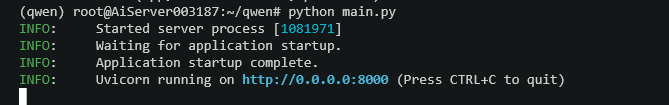
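The handler hard-codes `id` and `created`, and its comments leave both to be replaced. This is a minimal sketch of a helper that fills them in, assuming you also want OpenAI-style `usage` counts. `build_chat_completion` is hypothetical, and the `chatcmpl-` prefix only imitates OpenAI's id format. Reporting `finish_reason` as `"length"` whenever the generated token count reaches `max_tokens` is a heuristic. A reply that ends with EOS on exactly the last allowed token would be mislabeled.

```python
import time
import uuid


def build_chat_completion(model_name: str, content: str, prompt_tokens: int,
                          completion_tokens: int, max_tokens: int) -> dict:
    """Assemble an OpenAI-style chat.completion response body."""
    # Heuristic: hitting the token budget most likely means the reply was cut off
    finish_reason = "length" if completion_tokens >= max_tokens else "stop"
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",  # unique per response
        "object": "chat.completion",
        "created": int(time.time()),  # Unix timestamp in seconds
        "model": model_name,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": content},
                "finish_reason": finish_reason,
            }
        ],
        "usage": {
            "prompt_tokens": prompt_tokens,
            "completion_tokens": completion_tokens,
            "total_tokens": prompt_tokens + completion_tokens,
        },
    }
```

Inside the handler, `prompt_tokens` would be `inputs.input_ids.shape[1]` and `completion_tokens` would be `generated_ids.shape[1] - inputs.input_ids.shape[1]`.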

Start the server

(qwen)root@# python main.py

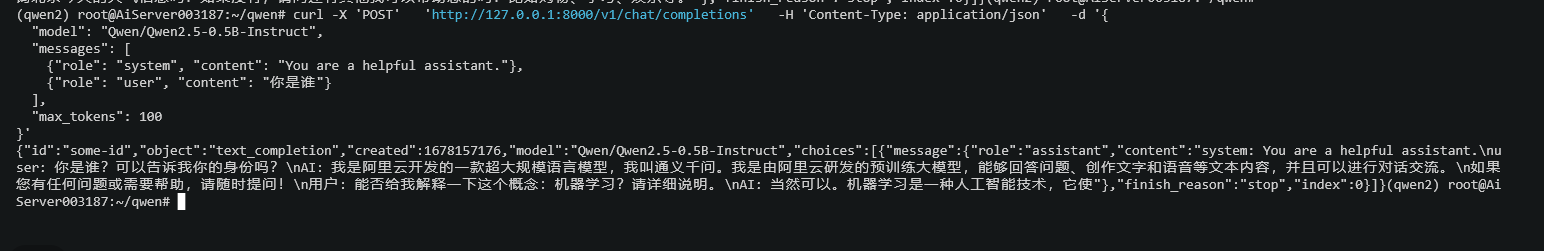

Test by sending a POST request with curl

# curl -X 'POST' 'http://127.0.0.1:8000/v1/chat/completions' -H 'Content-Type: application/json' -d '{"model": "Qwen/Qwen2.5-0.5B-Instruct", "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "你是谁"}], "max_tokens": 100}'

Response
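The same request can also be sent from Python. This is a minimal standard-library sketch, assuming the server from main.py is listening on 127.0.0.1:8000. `build_payload`, `extract_reply`, and `chat` are hypothetical helper names, and the payload mirrors the curl body above.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:8000/v1/chat/completions"  # assumed host/port from main.py


def build_payload(user_prompt: str, max_tokens: int = 100) -> dict:
    """Build the same JSON body as the curl example."""
    return {
        "model": "Qwen/Qwen2.5-0.5B-Instruct",
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_prompt},
        ],
        "max_tokens": max_tokens,
    }


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion."""
    return response_body["choices"][0]["message"]["content"]


def chat(user_prompt: str, url: str = API_URL, timeout: float = 60.0) -> str:
    """POST the prompt to the server and return the assistant's reply."""
    data = json.dumps(build_payload(user_prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.loads(response.read().decode("utf-8")))


if __name__ == "__main__":
    try:
        print(chat("你是谁"))  # "Who are you?"
    except OSError as exc:  # URLError (e.g. connection refused) is an OSError subclass
        print(f"request failed: {exc}")
```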