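Background

Earlier articles in this series covered loading DeepSeek distilled models with Ollama on Linux and deploying a local DeepSeek model with Ollama on Windows. Ollama is not the only way to work with model files: tools such as llama.cpp and KTransformers can load them as well, and client tools such as ChatBox, AnythingLLM and PageAssist are also worth recommending.

This article looks at one particular scenario. A model has already been fetched locally, either with ollama pull or by downloading it from a platform such as ModelScope or Hugging Face, and has been loaded into Ollama. How can that model be exported to another machine?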

Ollama model path

Unless the Ollama model path has been configured manually, the default model path on Linux is:

/usr/share/ollama/.ollama/models/

This directory is owned by the ollama user, so modifying it requires root privileges. On Windows the path is usually:

C:\Users\user_name\.ollama\models
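The lookup described above can be sketched in a few lines of Python. This is a minimal sketch, not Ollama's own logic: it assumes that a manually configured path is supplied through the OLLAMA_MODELS environment variable, and that a per-user install keeps its models under .ollama/models in the home directory. The function name resolve_models_dir is hypothetical.

```python
import os
import platform
from pathlib import Path


def resolve_models_dir(env=None, system=None, home=None):
    """Return the directory where Ollama is expected to keep its models."""
    env = os.environ if env is None else env
    system = platform.system() if system is None else system
    home = Path.home() if home is None else Path(home)

    # A manually configured path takes precedence over any default
    # (assumption: it is provided via the OLLAMA_MODELS variable).
    custom = env.get("OLLAMA_MODELS")
    if custom:
        return Path(custom)

    # Linux default quoted in the article; only used if it actually exists.
    service_dir = Path("/usr/share/ollama/.ollama/models")
    if system == "Linux" and service_dir.is_dir():
        return service_dir

    # Per-user location, e.g. C:\Users\user_name\.ollama\models on Windows.
    return home / ".ollama" / "models"


print(resolve_models_dir())
```

Passing env, system and home explicitly makes the function easy to try out for a machine other than the current one.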

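What the directories contain

The models directory has two subdirectories:

drwxr-xr-x 2 ollama ollama 4096 Feb 20 14:47 blobs/

drwxr-xr-x 3 ollama ollama 4096 Feb 20 14:53 manifests/

The blobs directory holds the model files. Each file name starts with sha256, followed by a long hash value: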

$ ll blobs/

total 106751780

drwxr-xr-x 2 ollama ollama 4096 Feb 20 14:47 ./

drwxr-xr-x 4 ollama ollama 4096 Feb 5 12:24 ../

-rw-r--r-- 1 ollama ollama 412 Feb 14 15:43 sha256-066df13e4debff21aeb0bb1ce8db63b9f19498d06b477ba3a4fa066adafd2949

-rwxr-xr-x 1 root root 7712 Feb 20 14:46 sha256-0b4284c1f87029e67654c7953afa16279961632cf73dcfe33374c4c2f298fa35

-rwxr-xr-x 1 root root 5963057248 Feb 20 14:46 sha256-11f274007f093fefeec994a5dbbb33d0733a4feb87f7ab66dcd7c1069fef0068
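Blob names carry no extension, so one quick way to see what a given blob contains is to look at its first bytes: a GGUF file begins with the four ASCII bytes GGUF. The sketch below classifies the files in a blobs directory on that basis. The helper names is_gguf and classify_blobs are hypothetical, and the demo uses small stand-in files rather than real model blobs.

```python
import tempfile
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # magic bytes at the start of a GGUF file


def is_gguf(path):
    """True if the file starts with the GGUF magic bytes."""
    with open(path, "rb") as f:
        return f.read(4) == GGUF_MAGIC


def classify_blobs(blobs_dir):
    """Map each file name in blobs_dir to True (GGUF) or False (anything else)."""
    return {
        p.name: is_gguf(p)
        for p in sorted(Path(blobs_dir).iterdir())
        if p.is_file()
    }


# Demo with stand-in files: one fake GGUF header and one small JSON blob.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "sha256-aaaa").write_bytes(b"GGUF" + b"\x00" * 8)
    (Path(tmp) / "sha256-bbbb").write_bytes(b'{"temperature":0.6}')
    print(classify_blobs(tmp))
```

On a real installation the same call would be classify_blobs("/usr/share/ollama/.ollama/models/blobs"), which only needs read access.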

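The large files here are the model's GGUF files, and a copy of one with a .gguf extension can be used directly. The small blobs hold other parts of the model, such as its template, license and parameters. Because a single model can correspond to several blob files, an index is needed to tie them together. The manifests directory stores that index for each model: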

$ tree manifests/

manifests/
└── registry.ollama.ai
    └── library
        ├── deepseek-r1
        │   ├── 14b
        │   ├── 32b
        │   ├── 32b-q2k
        │   ├── 32b-q40
        │   └── 70b-q2k
        ├── llama3-vision
        │   └── 11b
        └── nomic-embed-text-v1.5
            └── latest

5 directories, 7 files

This is the basic layout of Ollama's model storage.
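Since each index file sits at library/model/tag, the model names that Ollama knows about can be read straight off the directory tree. The following is a minimal sketch under the assumption that all models live under registry.ollama.ai/library, as in the listing above. The function name list_models is hypothetical, and the demo rebuilds a slice of the tree with empty files instead of touching a real installation.

```python
import tempfile
from pathlib import Path


def list_models(manifests_dir):
    """Return sorted 'model:tag' names found under registry.ollama.ai/library."""
    library = Path(manifests_dir) / "registry.ollama.ai" / "library"
    return sorted(
        f"{tag_file.parent.name}:{tag_file.name}"
        for tag_file in library.glob("*/*")
        if tag_file.is_file()
    )


# Demo: recreate part of the tree shown above, then list it.
with tempfile.TemporaryDirectory() as tmp:
    for model, tag in [
        ("deepseek-r1", "14b"),
        ("llama3-vision", "11b"),
        ("nomic-embed-text-v1.5", "latest"),
    ]:
        tag_file = Path(tmp) / "registry.ollama.ai" / "library" / model / tag
        tag_file.parent.mkdir(parents=True, exist_ok=True)
        tag_file.touch()
    print(list_models(tmp))
```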

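Finding a model's files

The index files described in the previous section list the corresponding model files in plain text. For example, here is the index file of a llama3-vision model: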

{"schemaVersion":2,"mediaType":"application/vnd.docker.distribution.manifest.v2+json","config":{"mediaType":"application/vnd.docker.container.image.v1+json","digest":"sha256:fbd313562bb706ac00f1a18c0aad8398b3c22d5cd78c47ff6f7246c4c3438576","size":572},"layers":[{"mediaType":"application/vnd.ollama.image.model","digest":"sha256:11f274007f093fefeec994a5dbbb33d0733a4feb87f7ab66dcd7c1069fef0068","size":5963057248},{"mediaType":"application/vnd.ollama.image.projector","digest":"sha256:ece5e659647a20a5c28ab9eea1c12a1ad430bc0f2a27021d00ad103b3bf5206f","size":1938763584,"from":"/Users/ollama/.ollama/models/blobs/sha256-ece5e659647a20a5c28ab9eea1c12a1ad430bc0f2a27021d00ad103b3bf5206f"},{"mediaType":"application/vnd.ollama.image.template","digest":"sha256:715415638c9c4c0cb2b78783da041b97bd1205f8b9f9494bd7e5a850cb443602","size":269},{"mediaType":"application/vnd.ollama.image.license","digest":"sha256:0b4284c1f87029e67654c7953afa16279961632cf73dcfe33374c4c2f298fa35","size":7712},{"mediaType":"application/vnd.ollama.image.params","digest":"sha256:fefc914e46e6024467471837a48a24251db2c6f3f58395943da7bf9dc6f70fb6","size":32}]}
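The index file is plain JSON, so the blob file names can be extracted programmatically. The sketch below assumes what the listing suggests: a digest of the form sha256:hex corresponds to a file named sha256-hex in the blobs directory (the "from" field in the listing shows this mapping). The function name manifest_blobs is hypothetical, and the demo uses an abridged copy of the index file with only two of its entries.

```python
import json


def manifest_blobs(manifest_text):
    """Return (media_type, blob_file_name, size) for the config and every layer."""
    manifest = json.loads(manifest_text)
    entries = [manifest["config"], *manifest.get("layers", [])]
    return [
        # "sha256:<hex>" in the manifest becomes "sha256-<hex>" on disk.
        (e["mediaType"], e["digest"].replace(":", "-", 1), e["size"])
        for e in entries
    ]


# Abridged version of the index file above: the config and the model layer.
manifest_text = json.dumps({
    "schemaVersion": 2,
    "config": {
        "mediaType": "application/vnd.docker.container.image.v1+json",
        "digest": "sha256:fbd313562bb706ac00f1a18c0aad8398b3c22d5cd78c47ff6f7246c4c3438576",
        "size": 572,
    },
    "layers": [{
        "mediaType": "application/vnd.ollama.image.model",
        "digest": "sha256:11f274007f093fefeec994a5dbbb33d0733a4feb87f7ab66dcd7c1069fef0068",
        "size": 5963057248,
    }],
})

for media_type, blob, size in manifest_blobs(manifest_text):
    print(f"{size:>12}  {media_type}  {blob}")
```

The media type tells the files apart: in the listing above, only the model and projector layers are weight files, while the others are small text or JSON blobs.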

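Every sha256 digest in the index file names a blob that the model needs: the GGUF weights (the model and projector layers) as well as the smaller template, license, params and config blobs. Instead of opening the index file directly, Ollama also offers a more elegant way to see where a model is stored: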

$ ollama show --modelfile llama3-vision:11b

# Modelfile generated by "ollama show"

# To build a new Modelfile based on this, replace FROM with:

# FROM llama3-vision:11b

FROM /usr/share/ollama/.ollama/models/blobs/sha256-11f274007f093fefeec994a5dbbb33d0733a4feb87f7ab66dcd7c1069fef0068

FROM /usr/share/ollama/.ollama/models/blobs/sha256-ece5e659647a20a5c28ab9eea1c12a1ad430bc0f2a27021d00ad103b3bf5206f

TEMPLATE """{{- range $index, $_ := .Messages }}<|start_header_id|>{{ .Role }}<|end_header_id|>{{ .Content }}

{{- if gt (len (slice $.Messages $index)) 1 }}<|eot_id|>

{{- else if ne .Role "assistant" }}<|eot_id|><|start_header_id|>assistant<|end_header_id|>{{ end }}

{{- end }}"""

PARAMETER temperature 0.6

PARAMETER top_p 0.9

LICENSE "LLAMA 3.2 COMMUNITY LICENSE AGREEMENT

Llama 3.2 Version Release Date: September 25, 2024

“Agreement” means the terms and conditions for use, reproduction, distribution
and modification of the Llama Materials set forth herein.

“Documentation” means the specifications, manuals and documentation accompanying Llama 3.2
distributed by Meta at https://llama.meta.com/doc/overview.

“Licensee” or “you” means you, or your employer or any other person or entity (if you are
entering into this Agreement on such person or entity’s behalf), of the age required under
applicable laws, rules or regulations to provide legal consent and that has legal authority
to bind your employer or such other person or entity if you are entering in this Agreement
on their behalf.

“Llama 3.2” means the foundational large language models and software and algorithms, including
machine-learning model code, trained model weights, inference-enabling code, training-enabling code,
fine-tuning enabling code and other elements of the foregoing distributed by Meta at
https://www.llama.com/llama-downloads.

“Llama Materials” means, collectively, Meta’s proprietary Llama 3.2 and Documentation (and
any portion thereof) made available under this Agreement.

“Meta” or “we” means Meta Platforms Ireland Limited (if you are located in or,
if you are an entity, your principal place of business is in the EEA or Switzerland)
and Meta Platforms, Inc. (if you are located outside of the EEA or Switzerland).

By clicking “I Accept” below or by using or distributing any portion or element of the Llama Materials,
you agree to be bound by this Agreement.

1. License Rights and Redistribution.

a. Grant of Rights. You are granted a non-exclusive, worldwide,
non-transferable and royalty-free limited license under Meta’s intellectual property or other rights
owned by Meta embodied in the Llama Materials to use, reproduce, distribute, copy, create derivative works
of, and make modifications to the Llama Materials.

b. Redistribution and Use.

i. If you distribute or make available the Llama Materials (or any derivative works thereof),
or a product or service (including another AI model) that contains any of them, you shall (A) provide
a copy of this Agreement with any such Llama Materials; and (B) prominently display “Built with Llama”
on a related website, user interface, blogpost, about page, or product documentation. If you use the
Llama Materials or any outputs or results of the Llama Materials to create, train, fine tune, or
otherwise improve an AI model, which is distributed or made available, you shall also include “Llama”
at the beginning of any such AI model name.

ii. If you receive Llama Materials, or any derivative works thereof, from a Licensee as part
of an integrated end user product, then Section 2 of this Agreement will not apply to you.

iii. You must retain in all copies of the Llama Materials that you distribute the
following attribution notice within a “Notice” text file distributed as a part of such copies:
“Llama 3.2 is licensed under the Llama 3.2 Community License, Copyright © Meta Platforms,
Inc. All Rights Reserved.”

iv. Your use of the Llama Materials must comply with applicable laws and regulations
(including trade compliance laws and regulations) and adhere to the Acceptable Use Policy for
the Llama Materials (available at https://www.llama.com/llama3_2/use-policy), which is hereby
incorporated by reference into this Agreement.

2. Additional Commercial Terms. If, on the Llama 3.2 version release date, the monthly active users
of the products or services made available by or for Licensee, or Licensee’s affiliates,
is greater than 700 million monthly active users in the preceding calendar month, you must request
a license from Meta, which Meta may grant to you in its sole discretion, and you are not authorized to
exercise any of the rights under this Agreement unless or until Meta otherwise expressly grants you such rights.

3. Disclaimer of Warranty. UNLESS REQUIRED BY APPLICABLE LAW, THE LLAMA MATERIALS AND ANY OUTPUT AND
RESULTS THEREFROM ARE PROVIDED ON AN “AS IS” BASIS, WITHOUT WARRANTIES OF ANY KIND, AND META DISCLAIMS
ALL WARRANTIES OF ANY KIND, BOTH EXPRESS AND IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES
OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE
FOR DETERMINING THE APPROPRIATENESS OF USING OR REDISTRIBUTING THE LLAMA MATERIALS AND ASSUME ANY RISKS ASSOCIATED
WITH YOUR USE OF THE LLAMA MATERIALS AND ANY OUTPUT AND RESULTS.

4. Limitation of Liability. IN NO EVENT WILL META OR ITS AFFILIATES BE LIABLE UNDER ANY THEORY OF LIABILITY,
WHETHER IN CONTRACT, TORT, NEGLIGENCE, PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT,
FOR ANY LOST PROFITS OR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR PUNITIVE DAMAGES, EVEN
IF META OR ITS AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF ANY OF THE FOREGOING.

5. Intellectual Property.

a. No trademark licenses are granted under this Agreement, and in connection with the Llama Materials,
neither Meta nor Licensee may use any name or mark owned by or associated with the other or any of its affiliates,
except as required for reasonable and customary use in describing and redistributing the Llama Materials or as
set forth in this Section 5(a). Meta hereby grants you a license to use “Llama” (the “Mark”) solely as required
to comply with the last sentence of Section 1.b.i. You will comply with Meta’s brand guidelines (currently accessible
at https://about.meta.com/brand/resources/meta/company-brand/). All goodwill arising out of your use of the Mark
will inure to the benefit of Meta.

b. Subject to Meta’s ownership of Llama Materials and derivatives made by or for Meta, with respect to any
derivative works and modifications of the Llama Materials that are made by you, as between you and Meta,
you are and will be the owner of such derivative works and modifications.

c. If you institute litigation or other proceedings against Meta or any entity (including a cross-claim or
counterclaim in a lawsuit) alleging that the Llama Materials or Llama 3.2 outputs or results, or any portion
of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable
by you, then any licenses granted to you under this Agreement shall terminate as of the date such litigation or
claim is filed or instituted. You will indemnify and hold harmless Meta from and against any claim by any third
party arising out of or related to your use or distribution of the Llama Materials.

6. Term and Termination. The term of this Agreement will commence upon your acceptance of this Agreement or access
to the Llama Materials and will continue in full force and effect until terminated in accordance with the terms
and conditions herein. Meta may terminate this Agreement if you are in breach of any term or condition of this
Agreement. Upon termination of this Agreement, you shall delete and cease use of the Llama Materials. Sections 3,
4 and 7 shall survive the termination of this Agreement.

7. Governing Law and Jurisdiction. This Agreement will be governed and construed under the laws of the State of
California without regard to choice of law principles, and the UN Convention on Contracts for the International
Sale of Goods does not apply to this Agreement. The courts of California shall have exclusive jurisdiction of
any dispute arising out of this Agreement. "

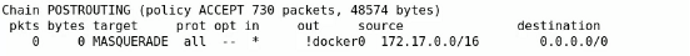

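The show command also reveals where the model is stored and, in addition to the paths, prints the entire LICENSE. There is an important catch, though: ollama show does not list every blob file the model uses. Its output contains FROM paths for only two blobs, while the index file lists several more (template, license, params and config). The contents of some of these are printed inline rather than as file paths, so the blob names cannot be recovered from the output. For migration it is therefore better to open the index file under manifests directly and move the files it lists.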
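The gap between the two listings can be made concrete by comparing the FROM paths in the ollama show output with the digests in the index file. This is a minimal sketch: the function names are hypothetical, the regular expression only recognises FROM lines that end in a sha256 blob name, and the demo uses placeholder digests (64 repeated hex characters) instead of the real ones to stay short.

```python
import json
import re

# Uncommented FROM lines whose argument ends in a blob file name.
FROM_BLOB = re.compile(
    r"^FROM[ \t]+\S*[\\/](sha256-[0-9a-f]{64})[ \t]*$", re.MULTILINE
)


def blobs_in_modelfile(modelfile_text):
    """Blob file names that appear as FROM paths in the Modelfile text."""
    return set(FROM_BLOB.findall(modelfile_text))


def blobs_in_manifest(manifest_text):
    """Map blob file name -> media type for the config and every layer."""
    manifest = json.loads(manifest_text)
    entries = [manifest["config"], *manifest.get("layers", [])]
    return {e["digest"].replace(":", "-", 1): e["mediaType"] for e in entries}


def not_listed_by_show(manifest_text, modelfile_text):
    """Media types of manifest blobs that have no FROM line in the Modelfile."""
    listed = blobs_in_modelfile(modelfile_text)
    blobs = blobs_in_manifest(manifest_text)
    return sorted(mt for blob, mt in blobs.items() if blob not in listed)


# Placeholder digests (hypothetical) standing in for the real 64-char hashes.
d = {k: c * 64 for k, c in [("config", "a"), ("model", "b"), ("projector", "c"), ("params", "d")]}
manifest_text = json.dumps({
    "config": {
        "mediaType": "application/vnd.docker.container.image.v1+json",
        "digest": "sha256:" + d["config"],
    },
    "layers": [
        {"mediaType": f"application/vnd.ollama.image.{k}", "digest": "sha256:" + d[k]}
        for k in ("model", "projector", "params")
    ],
})
modelfile_text = (
    "# FROM llama3-vision:11b\n"
    f"FROM /usr/share/ollama/.ollama/models/blobs/sha256-{d['model']}\n"
    f"FROM /usr/share/ollama/.ollama/models/blobs/sha256-{d['projector']}\n"
)
print(not_listed_by_show(manifest_text, modelfile_text))
```

In the demo the config and params blobs are reported, mirroring the situation above where only the model and projector blobs show up as FROM paths.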

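Migrating and loading a model

With the storage layout understood, the migration plan is straightforward: copy the model files and the index file to the corresponding paths on the target device. That is, copy the sha256 blob files into the target's blobs directory, then copy the index file into the target's manifests directory, preserving the directory structure. On Linux, the following part of the structure is fixed: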

manifests/
└── registry.ollama.ai
    └── library

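Next, create a directory under library named after the model, for example model-name/, and copy the index file into it. The name of the index file serves as the model's tag, so if the file is called config, the command to load the model is: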

$ ollama run model-name:config
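The copy procedure described above can be sketched as a small Python function. It is an illustration under stated assumptions rather than an official tool: the name migrate_model is hypothetical, the model is assumed to live under registry.ollama.ai/library, and digests are assumed to map to blob file names by replacing the colon with a hyphen.

```python
import json
import shutil
from pathlib import Path


def migrate_model(src_models, dst_models, name, tag):
    """Copy one model's index file and the blobs it lists; return copied blob names."""
    rel = Path("manifests") / "registry.ollama.ai" / "library" / name / tag
    src_manifest = Path(src_models) / rel
    manifest = json.loads(src_manifest.read_text(encoding="utf-8"))

    dst_blobs = Path(dst_models) / "blobs"
    dst_blobs.mkdir(parents=True, exist_ok=True)

    copied = []
    for entry in [manifest["config"], *manifest.get("layers", [])]:
        blob = entry["digest"].replace(":", "-", 1)
        target = dst_blobs / blob
        # Skip blobs that are already present on the target.
        if not target.exists():
            shutil.copy2(Path(src_models) / "blobs" / blob, target)
            copied.append(blob)

    # Copy the index file last, so it never points at blobs that are missing.
    dst_manifest = Path(dst_models) / rel
    dst_manifest.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src_manifest, dst_manifest)
    return copied
```

A call such as migrate_model("/usr/share/ollama/.ollama/models", "/mnt/usb/models", "llama3-vision", "11b") would stage the model on a removable drive, where /mnt/usb/models is a made-up path. Writing into the default Linux directory needs root privileges, and it is probably necessary to make the copied files readable by the ollama user.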

Note that right after a migration, tools such as PageAssist may not find the newly migrated model. Run it once with ollama run first, after which it appears in the model list:

$ ollama list

NAME ID SIZE MODIFIED

llama3-vision:11b 085a1fdae525 7.9 GB About an hour ago
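Multi-gigabyte copies occasionally get truncated, so it can be worth checking the blobs on the target device. The sketch below assumes, as the naming suggests, that the hex string in each blob's file name is the SHA-256 digest of the file's contents. The function names blob_matches_name and corrupt_blobs are hypothetical.

```python
import hashlib
from pathlib import Path


def blob_matches_name(path, chunk_size=1 << 20):
    """True if the file's SHA-256 equals the hex digest in its 'sha256-<hex>' name."""
    _, _, expected = Path(path).name.partition("-")
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so that multi-gigabyte blobs do not have to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected


def corrupt_blobs(blobs_dir):
    """Names of blobs in blobs_dir whose contents do not match their file name."""
    return [
        p.name
        for p in sorted(Path(blobs_dir).glob("sha256-*"))
        if not blob_matches_name(p)
    ]
```

An empty list from corrupt_blobs on the target's blobs directory indicates that every copied file hashes to its own name.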

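That completes the Ollama model migration.

Summary

To make local deployment and migration of large models easier, this article described a way to migrate Ollama models between machines. A model downloaded from the Ollama Hub, or a large GGUF model, is often split into several files, and Ollama stores each of them under a name derived from a SHA-256 hash. The file names therefore have to be looked up in the index file before the model can be moved.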

Copyright notice

This article was first published at: https://www.cnblogs.com/dechinphy/p/ollama.html

Author ID: DechinPhy

More original articles: https://www.cnblogs.com/dechinphy/

Buy the author a coffee: https://www.cnblogs.com/dechinphy/gallery/image/379634.html
