【Hung-yi Lee】2024 Large Language Model Course

Course Notes

Course link: https://speech.ee.ntu.edu.tw/~hylee/genai/2024-spring.php

Bilibili video link: https://www.bilibili.com/video/BV1XS411w7qr

GPT: Autoregressive model
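"Autoregressive" means the model factorizes the probability of a token sequence $x_1, \dots, x_T$ into next-token predictions, each conditioned on every earlier token. This is the standard chain-rule factorization; the notation is mine, not the course's:

$$
P(x_1, x_2, \dots, x_T) = \prod_{t=1}^{T} P(x_t \mid x_1, \dots, x_{t-1})
$$

Generation runs this one token at a time. The model picks or samples $x_t$, appends it to the context, and then predicts $x_{t+1}$.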

In-context Learning

- Chain of Thought (CoT)

- Tree of Thoughts (ToT)

- Algorithm of Thoughts (AoT)

- ...
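Here is a minimal sketch of how an in-context-learning prompt for Chain of Thought can be assembled. Each demonstration shows its intermediate reasoning before the answer. Zero-shot CoT instead appends a trigger phrase such as "Let's think step by step." The function `build_cot_prompt`, the "Q:/A:" layout, and the demo questions are hypothetical illustrations, and no model is called.

```python
# Minimal sketch: assembling a few-shot / zero-shot Chain-of-Thought prompt.
# build_cot_prompt, the "Q:/A:" layout and the demo questions are hypothetical;
# the resulting string would be sent to an LLM (not done here).

def build_cot_prompt(examples, question, zero_shot_trigger=None):
    """examples: list of (question, reasoning, answer) demonstrations.
    question: the new question for the model.
    zero_shot_trigger: optional phrase appended after the new question."""
    parts = []
    for q, reasoning, answer in examples:
        # Each demonstration spells out the reasoning before the final answer.
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    final = f"Q: {question}\nA:"
    if zero_shot_trigger:
        final += f" {zero_shot_trigger}"
    parts.append(final)
    return "\n\n".join(parts)


demos = [
    ("Roger has 5 balls and buys 2 cans of 3 balls each. How many balls does he have?",
     "He starts with 5. 2 cans of 3 balls is 6. 5 + 6 = 11.",
     "11"),
]
prompt = build_cot_prompt(demos, "A shop has 3 boxes of 4 apples. How many apples?")
print(prompt)
```

With an empty demonstration list and a trigger phrase, the same function produces a zero-shot CoT prompt.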

Using tools:

- Search engine: Retrieval Augmented Generation (RAG)

- Writing programs: Program of Thought (PoT)

- Text-to-image: DALL-E
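The RAG idea is to retrieve relevant text first and then put it into the prompt so that the answer is grounded in it. The sketch below assumes a toy in-memory document list and a word-overlap scorer in place of a real search engine or vector index. The helper names `retrieve` and `build_rag_prompt` are hypothetical, and the LLM call itself is left out.

```python
# Minimal RAG sketch. Assumptions: a tiny in-memory corpus and a word-overlap
# score stand in for a real search engine; the prompt would then go to an LLM.
import re

def tokenize(text):
    # Lowercase and keep alphanumeric word tokens as a set.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Rank documents by the number of words shared with the query; keep the top k."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(query, documents, k=2):
    # Retrieved passages go before the question so the model can ground its answer.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

docs = [
    "The Transformer architecture relies on self-attention.",
    "Beam search keeps the k most probable partial sequences.",
    "DALL-E generates images from text descriptions.",
]
print(build_rag_prompt("What does beam search keep?", docs, k=1))
```

Swapping `retrieve` for a real search API or embedding index leaves the prompt-building step unchanged.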

Explainable ML:

- Local Explanation

  - Saliency Map

  - SmoothGrad (improved Saliency Map)

  - Integrated Gradients (IG)

- Global Explanation
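The three local-explanation methods differ in how they use the gradient of the output with respect to the input. A saliency map takes the gradient's magnitude at the input. SmoothGrad averages that map over noisy copies of the input. Integrated Gradients accumulates gradients along a path from a baseline to the input. The sketch assumes a hypothetical toy "model" f(x) = Σ w_i x_i² with a hand-written gradient, so no autodiff library is needed. A real network would obtain the gradient through backpropagation.

```python
# Toy sketch of Saliency Map, SmoothGrad and Integrated Gradients.
# Assumption: the model is f(x) = sum_i w_i * x_i**2 with an analytic gradient.
import random

W = [3.0, 1.0, -4.0]  # hypothetical model weights

def f(x):
    return sum(w * xi ** 2 for w, xi in zip(W, x))

def grad_f(x):
    # df/dx_i = 2 * w_i * x_i
    return [2 * w * xi for w, xi in zip(W, x)]

def saliency_map(x):
    # Vanilla saliency: |gradient of the output w.r.t. each input feature|.
    return [abs(g) for g in grad_f(x)]

def smoothgrad(x, n_samples=50, sigma=0.1, seed=0):
    # SmoothGrad: average the saliency map over Gaussian-noised copies of x.
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        for i, s in enumerate(saliency_map(noisy)):
            total[i] += s
    return [t / n_samples for t in total]

def integrated_gradients(x, baseline=None, steps=50):
    # IG_i = (x_i - x'_i) * integral_0^1 df/dx_i(x' + a(x - x')) da,
    # approximated with a midpoint Riemann sum over `steps` points.
    if baseline is None:
        baseline = [0.0] * len(x)
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        a = (k + 0.5) / steps
        point = [b + a * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_f(point)):
            avg_grad[i] += g / steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]

x = [1.0, -2.0, 0.5]
print(saliency_map(x))          # [6.0, 4.0, 4.0]
print(integrated_gradients(x))  # close to [3.0, 4.0, -1.0]
```

Integrated Gradients has a useful "completeness" property: the attributions sum to f(x) − f(baseline). Here that sum is 6 − 0 = 6.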

Three steps of LLM training:

- Pre-train -> Foundation model

- Instruction Fine-tuning (Supervised Learning)

- Reinforcement Learning from Human Feedback (RLHF)

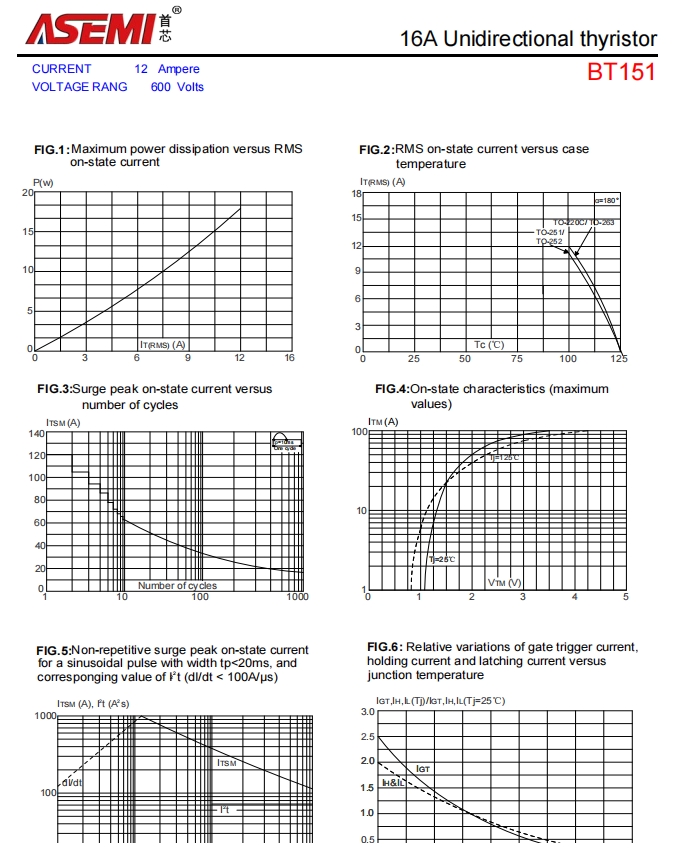

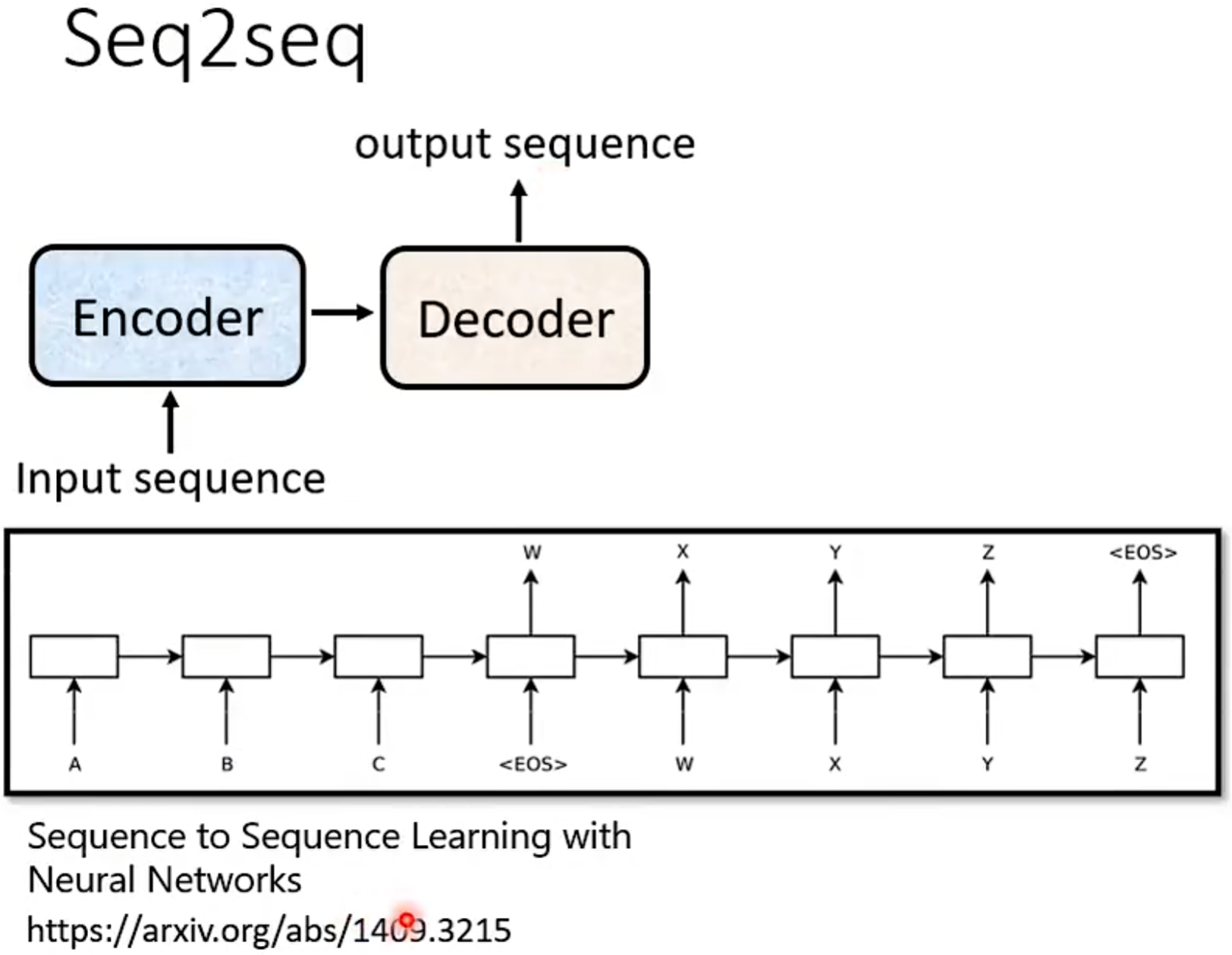

Seq2seq applications:

- Syntactic Parsing

- Multi-label Classification (as opposed to Multi-class Classification): an object can belong to multiple classes

- Object Detection
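The multi-class vs. multi-label distinction is easiest to see in the common non-seq2seq formulation. Softmax makes the classes compete for a single pick. Independent sigmoids let several classes be "on" at once. The seq2seq framing instead has the decoder emit the label set as a sequence, so the model itself decides how many labels to output. The class names, logits, and 0.5 threshold below are illustrative assumptions.

```python
# Sketch: the same logits read as multi-class (softmax) vs multi-label (sigmoids).
# Assumptions: class names, logits and the 0.5 threshold are made up.
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

classes = ["cat", "dog", "person"]
logits = [2.0, 1.5, -3.0]

# Multi-class: probabilities sum to 1 and exactly one class is predicted.
p_multiclass = softmax(logits)
predicted_class = classes[p_multiclass.index(max(p_multiclass))]

# Multi-label: each class gets an independent probability, so several can pass.
p_multilabel = [sigmoid(z) for z in logits]
predicted_labels = [c for c, p in zip(classes, p_multilabel) if p > 0.5]

print(predicted_class)   # cat
print(predicted_labels)  # ['cat', 'dog']
```

For training, the sigmoid formulation is usually paired with per-class binary cross-entropy rather than softmax cross-entropy.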

Transformer:

- Self-attention

- Cross-attention
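Both kinds of attention compute softmax(QKᵀ/√d)V. The only difference is where the queries, keys, and values come from. Self-attention takes all three from the same sequence. Cross-attention takes the queries from the decoder and the keys and values from the encoder output. The sketch below omits the learned projections W_q, W_k, and W_v (an assumption made for brevity) and uses made-up vectors.

```python
# Minimal scaled dot-product attention in plain Python.
# Assumption: no learned Q/K/V projections (identity), made-up vectors.
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V, with Q, K, V given as lists of vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source positions
decoder_states = [[0.5, -0.5]]                      # 1 target position

# Self-attention: Q, K and V all come from the same sequence.
self_out = attention(encoder_out, encoder_out, encoder_out)

# Cross-attention: Q from the decoder, K and V from the encoder output.
cross_out = attention(decoder_states, encoder_out, encoder_out)

print(len(self_out), len(cross_out))  # 3 1
```

Each output vector has one entry per query, which is why cross-attention returns as many vectors as there are decoder positions.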

Copy Mechanism => Summarization

- Pointer Network
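One concrete way to combine copying with generation is the pointer-generator mixture (See et al., 2017). This is a related technique swapped in for illustration, not the Pointer Network itself. A gate p_gen blends the decoder's vocabulary distribution with the attention weights over source tokens, so out-of-vocabulary source words such as names can still be output. That ability is what makes copying useful for summarization. In the sketch, p_gen, the vocabulary distribution, and the attention weights are given numbers; in a real model the network produces them.

```python
# Sketch of a pointer-generator-style copy mechanism.
# Assumptions: p_gen, vocab_dist and attention are fixed numbers, not model outputs.

def final_distribution(p_gen, vocab_dist, attention, source_tokens):
    """P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (attention on source positions holding w)."""
    dist = {w: p_gen * p for w, p in vocab_dist.items()}
    for a, tok in zip(attention, source_tokens):
        # Copy path: source tokens get probability mass even if out of vocabulary.
        dist[tok] = dist.get(tok, 0.0) + (1.0 - p_gen) * a
    return dist

source = ["Alice", "teaches", "ML"]
vocab = {"teaches": 0.5, "the": 0.3, "course": 0.2}  # "Alice" is not in the vocabulary
attn = [0.7, 0.2, 0.1]

dist = final_distribution(p_gen=0.4, vocab_dist=vocab, attention=attn, source_tokens=source)
print(max(dist, key=dist.get))  # Alice
```

Here the copy path gives "Alice" 0.6 × 0.7 = 0.42 of the probability mass, even though the vocabulary distribution cannot produce it at all.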

Attention Decoder

- Greedy Decoding (always picks the token with the highest output probability at each step)

- Beam Search

- Sampling (more creative; the decoder needs some randomness when generating)
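The three decoding strategies can be compared on a toy model. The sketch assumes a hypothetical bigram table in which the next-token distribution depends only on the previous token; a real LM conditions on the whole prefix. The table is built so that greedy decoding commits to the locally best first token "A" (total probability 0.33). Beam search with two beams instead finds the more probable sequence through "B" (0.36).

```python
# Greedy decoding, beam search and sampling on a hypothetical bigram "LM".
import math
import random

NEXT = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"C": 0.55, "D": 0.45},
    "B": {"E": 0.9, "F": 0.1},
    "C": {"</s>": 1.0}, "D": {"</s>": 1.0},
    "E": {"</s>": 1.0}, "F": {"</s>": 1.0},
}
EOS = "</s>"

def greedy_decode(max_len=10):
    seq = ["<s>"]
    while seq[-1] != EOS and len(seq) < max_len:
        dist = NEXT[seq[-1]]
        seq.append(max(dist, key=dist.get))  # always take the most probable token
    return seq

def beam_search(beam_size=2, max_len=10):
    beams = [(0.0, ["<s>"])]  # (log-probability, sequence)
    for _ in range(max_len):
        candidates = []
        for logp, seq in beams:
            if seq[-1] == EOS:
                candidates.append((logp, seq))  # finished beams carry over unchanged
                continue
            for tok, p in NEXT[seq[-1]].items():
                candidates.append((logp + math.log(p), seq + [tok]))
        # Keep only the beam_size highest-scoring partial sequences.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_size]
        if all(seq[-1] == EOS for _, seq in beams):
            break
    return beams[0][1]

def sample_decode(rng, max_len=10):
    seq = ["<s>"]
    while seq[-1] != EOS and len(seq) < max_len:
        dist = NEXT[seq[-1]]
        # Draw the next token in proportion to its probability.
        seq.append(rng.choices(list(dist), weights=list(dist.values()))[0])
    return seq

print(greedy_decode())  # ['<s>', 'A', 'C', '</s>']  (0.6 * 0.55 = 0.33)
print(beam_search())    # ['<s>', 'B', 'E', '</s>']  (0.4 * 0.9 = 0.36)
print(sample_decode(random.Random(0)))  # depends on the seed
```

With `beam_size=1`, beam search reduces to greedy decoding. Sampling gives up some likelihood in exchange for variety, which is what the "more creative" note above refers to.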

Homework Summary

【Hung-yi Lee】2023 Machine Learning Course Series

Course link: https://speech.ee.ntu.edu.tw/~hylee/ml/2023-spring.php

Course Notes

AI that can use tools:

- WebGPT

- Toolformer
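Tool use at inference time comes down to recognizing a tool call in the generated text, running the tool, and splicing the result back in. The sketch is loosely modeled on the inline `[Calculator(400 / 1400) → 0.29]` format from the Toolformer paper. The regex, the helper names, and the arithmetic-only evaluator are assumptions. Toolformer's actual contribution, teaching the model *when* to emit such calls, is not shown.

```python
# Sketch: executing inline "[Calculator(...)]" calls found in generated text.
# Assumptions: call syntax, helper names and the +,-,*,/ evaluator are illustrative;
# nested parentheses inside a call are not supported by this regex.
import ast
import operator
import re

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def safe_eval(expr):
    """Evaluate simple arithmetic on numbers without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr, mode="eval"))

CALL = re.compile(r"\[Calculator\(([^)\]]*)\)\]")

def run_tools(text):
    # Replace each call with the call plus its result, rounded to 2 decimals.
    def replace(m):
        result = safe_eval(m.group(1))
        return f"[Calculator({m.group(1)}) → {round(result, 2)}]"
    return CALL.sub(replace, text)

print(run_tools("Out of 1400 participants, 400 [Calculator(400 / 1400)] passed."))
# Out of 1400 participants, 400 [Calculator(400 / 1400) → 0.29] passed.
```

Walking the AST rather than calling `eval()` keeps the "tool" limited to arithmetic, even if the model emits arbitrary text inside the brackets.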

Homework Summary

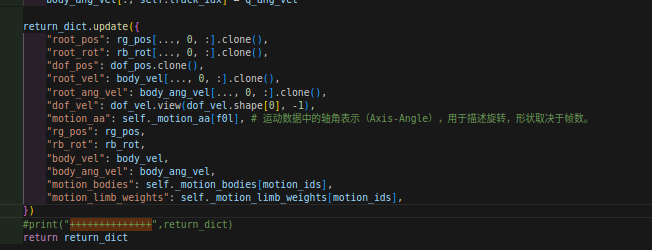

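trainer

```python
import torch
import torch.nn as nn

# trainer
def trainer(train_loader, valid_loader, model, config, device):
    n_epochs = config['n_epochs']
    criterion = nn.MSELoss(reduction='mean')  # define loss function
    optimizer = torch.optim.SGD(model.parameters(), lr=config['learning_rate'], momentum=0.7)  # define optimizer

    for epoch in range(n_epochs):
        # train
        model.train()  # training mode (enables e.g. dropout)
        loss_record = []
        for X, y in train_loader:
            optimizer.zero_grad()              # clear gradients from the previous step
            X, y = X.to(device), y.to(device)  # move the batch to the model's device
            pred = model(X)
            loss = criterion(pred, y)
            loss.backward()                    # compute gradients
            optimizer.step()                   # update parameters
            loss_record.append(loss.detach().item())  # loss value of a batch: loss.detach().item()
        mean_train_loss = sum(loss_record) / len(loss_record)

        # evaluate
        model.eval()  # evaluation mode
        loss_record = []
        with torch.no_grad():  # no gradient tracking during validation
            for X, y in valid_loader:
                X, y = X.to(device), y.to(device)
                pred = model(X)
                loss = criterion(pred, y)
                loss_record.append(loss.detach().item())  # loss value of a batch: loss.detach().item()
        mean_eval_loss = sum(loss_record) / len(loss_record)

        print(f'Epoch [{epoch + 1}/{n_epochs}]: train loss {mean_train_loss:.4f}, valid loss {mean_eval_loss:.4f}')
```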
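The training loop above hands the parameter update to `optimizer.step()`. The dependency-free sketch below spells out the same zero_grad / forward / backward / step cycle for a one-dimensional linear model trained with MSE. It uses the momentum rule PyTorch documents for SGD with dampening=0 and nesterov=False: buf = momentum · buf + grad, then param −= lr · buf. The synthetic data y = 2x + 1, the full-batch updates, and lr=0.05 are assumptions chosen for illustration.

```python
# Plain-Python sketch of an MSE + SGD-with-momentum training loop.
# Assumptions: synthetic data y = 2x + 1, full-batch updates, lr = 0.05.
xs = [i / 10 for i in range(-10, 11)]
ys = [2.0 * x + 1.0 for x in xs]

def train(n_epochs=200, lr=0.05, momentum=0.7):
    w, b = 0.0, 0.0
    buf_w, buf_b = 0.0, 0.0  # momentum buffers, one per parameter
    loss = float("inf")
    n = len(xs)
    for _ in range(n_epochs):
        # forward: predictions and mean squared error
        preds = [w * x + b for x in xs]
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # backward: analytic gradients of the MSE (fresh each step, like zero_grad)
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # step: update momentum buffers, then parameters
        buf_w = momentum * buf_w + grad_w
        buf_b = momentum * buf_b + grad_b
        w -= lr * buf_w
        b -= lr * buf_b
    return w, b, loss

w, b, final_loss = train()
print(round(w, 3), round(b, 3))  # approaches 2.0 1.0
```

Setting `momentum=0.0` recovers plain gradient descent. With momentum, each step also keeps moving along a decaying sum of earlier gradients.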

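tensorboard

```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter()  # TensorBoard writer (logs to ./runs/ by default)

# Called inside the training loop; `step` is the x-axis value,
# e.g. a counter incremented after each training batch.
writer.add_scalar('Loss/train', mean_train_loss, step)

"""
def add_scalar(
    tag: Any,                        # name of the chart
    scalar_value: Any,               # value on the y-axis
    global_step: Any | None = None,  # value on the x-axis
    walltime: Any | None = None,
    new_style: bool = False,
    double_precision: bool = False,
)
"""
```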

【ETH】2020 Digital Design and Computer Architecture

Course link: https://safari.ethz.ch/digitaltechnik/spring2020/doku.php?id=start

Course video link: https://www.youtube.com/playlist?list=PL5Q2soXY2Zi_FRrloMa2fUYWPGiZUBQo2

Course Notes

Homework Summary

【UCB】2020 Structure and Interpretation of Computer Programs

Course link: https://web.archive.org/web/20210104105406/https://cs61a.org/

Course video link: https://www.bilibili.com/video/BV1s3411G7yM/
