Problem

Problem link

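Gradient descent is an optimization algorithm widely used in machine learning. It proceeds as follows:

- Initialize the parameters: \[w_0 = 0 \]

- Compute the gradient: \[g_t = \nabla f(w_t) \]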

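This problem uses the mean squared error (MSE) as the loss function:

\[f(w) = \frac{1}{n} \sum_{i=1}^{n} (w^T x_i - y_i)^2 \]

Its gradient is:

\[g_t = \frac{2}{n} \sum_{i=1}^{n} (w_t^T x_i - y_i)x_i \]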

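- Update the parameters, where \[\eta \] is the learning rate: \[w_{t+1} = w_t - \eta \cdot g_t \]

- Repeat the gradient and update steps until convergence.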
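The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the reference solution: it assumes a linear model with the MSE loss, plain Python lists instead of arrays, and a fixed number of iterations in place of a convergence test. The names `mse_gradient` and `gradient_descent_sketch`, the toy data, and the values `learning_rate=0.1` and `n_iterations=500` are all assumptions made for this example.

```python
def mse_gradient(X, y, w):
    """Gradient of (1/n) * sum((w . x_i - y_i)^2) with respect to w."""
    n = len(y)
    grad = [0.0] * len(w)
    for x_i, y_i in zip(X, y):
        # Residual is prediction minus target
        residual = sum(w_j * x_ij for w_j, x_ij in zip(w, x_i)) - y_i
        for j, x_ij in enumerate(x_i):
            grad[j] += 2.0 * residual * x_ij / n
    return grad


def gradient_descent_sketch(X, y, learning_rate=0.1, n_iterations=500):
    """Hypothetical helper: full-batch gradient descent starting from w_0 = 0."""
    w = [0.0] * len(X[0])                      # initialize parameters
    for _ in range(n_iterations):              # fixed budget, no convergence test
        g = mse_gradient(X, y, w)              # compute gradient g_t
        w = [w_j - learning_rate * g_j for w_j, g_j in zip(w, g)]  # update
    return w


# Toy data (assumed): y = 1 + 2 * x, with a leading 1 in each row for the bias
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.0, 3.0, 5.0, 7.0]
w = gradient_descent_sketch(X, y)
print(w)
```

On this toy data the weights should approach the generating coefficients, roughly `[1, 2]`. A learning rate that is too large for the data would make the same loop diverge, so the value used here should be read as specific to this example.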

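This problem uses three gradient descent variants: batch gradient descent (batch), stochastic gradient descent (stochastic), and mini-batch gradient descent (mini_batch). They differ as follows:

- Batch gradient descent: each update computes the gradient from all data points.

- Stochastic gradient descent: each update computes the gradient from a single data point.

- Mini-batch gradient descent: each update computes the gradient from a subset of the data points.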

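Note that in this problem, one iteration of stochastic or mini-batch gradient descent is a full pass over the dataset: the weights are updated once per data point (or once per mini-batch) until every data point has been visited. This differs from the usual definition, in which one iteration is a single update on one data point or one mini-batch.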
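To make the difference concrete, the sketch below lists which rows feed each weight update within a single iteration. The helper name `batches_in_one_iteration` is hypothetical, and the sequential, unshuffled order is an assumption taken from the reference code on this page; it computes index ranges only and performs no training.

```python
def batches_in_one_iteration(m, method="batch", batch_size=1):
    """Return the (start, stop) row ranges, one per weight update, for one iteration."""
    if method == "batch":
        # A single update that uses all m rows
        return [(0, m)]
    if method == "stochastic":
        # m updates, one row each, visited in order
        return [(i, i + 1) for i in range(m)]
    if method == "mini_batch":
        # Consecutive chunks; the last chunk may be shorter than batch_size
        return [(i, min(i + batch_size, m)) for i in range(0, m, batch_size)]
    raise ValueError(f"unknown method: {method}")


for method in ("batch", "stochastic", "mini_batch"):
    ranges = batches_in_one_iteration(5, method, batch_size=2)
    print(method, len(ranges), ranges)
```

With 5 rows and a batch size of 2, one iteration therefore performs 1 update for batch, 5 for stochastic, and 3 for mini_batch.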

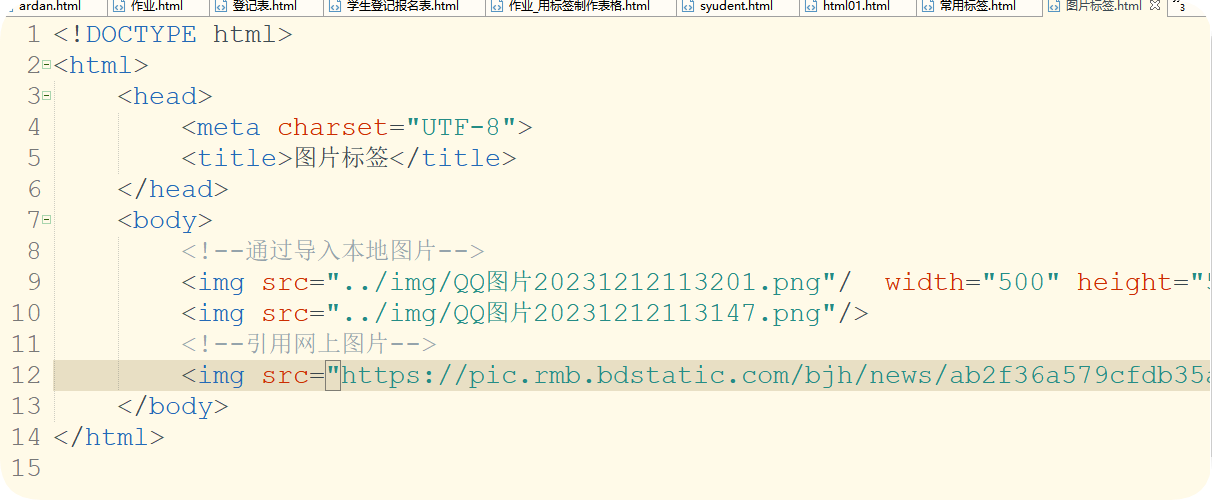

The reference code is as follows:

    def gradient_descent(X, y, weights, learning_rate, n_iterations, batch_size=1, method='batch'):
        # X, y and weights are expected to be NumPy arrays (the code relies on .dot and .T)
        m = len(y)
        for _ in range(n_iterations):
            if method == 'batch':
                # Calculate the gradient using all data points
                predictions = X.dot(weights)
                errors = predictions - y
                gradient = 2 * X.T.dot(errors) / m
                weights = weights - learning_rate * gradient
            elif method == 'stochastic':
                # Update weights for each data point individually
                for i in range(m):
                    prediction = X[i].dot(weights)
                    error = prediction - y[i]
                    gradient = 2 * X[i].T.dot(error)
                    weights = weights - learning_rate * gradient
            elif method == 'mini_batch':
                # Update weights using sequential batches of data points without shuffling
                for i in range(0, m, batch_size):
                    X_batch = X[i:i+batch_size]
                    y_batch = y[i:i+batch_size]
                    predictions = X_batch.dot(weights)
                    errors = predictions - y_batch
                    # Divides by the nominal batch_size, even if the final batch is shorter
                    gradient = 2 * X_batch.T.dot(errors) / batch_size
                    weights = weights - learning_rate * gradient
        return weights