Preface

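This post summarizes several ways of understanding diffusion models. It draws on Su Jianlin's (苏神) diffusion model series, namely 生成扩散模型漫谈(一):DDPM = 拆楼 + 建楼 (demolishing and rebuilding), 生成扩散模型漫谈(二):DDPM = 自回归式VAE (autoregressive VAE), and 生成扩散模型漫谈(三):DDPM = 贝叶斯 + 去噪 (Bayes and denoising), combined with some of my own thinking, and is meant mainly as a set of organizing notes.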

Generative models

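A generative model uses a neural network \(q(x|\theta)\) to fit the data distribution \(p(x)\); if \(q(x|\theta)\) can be sampled from, it can generate new samples. In my view, a generative model therefore comes down to three things: building the model structure, designing the training objective, and implementing sampling.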

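- On building the model structure: \(q(x|\theta)\) on its own can only represent a small family of explicit distributions, which is not enough to capture the diversity of real data, so a latent variable \(z\) is usually introduced. Then \(q(x|\theta)=\int{q(x|\theta, z)q(z)dz}\), and because the prior \(q(z)\) is known, the model only has to represent \(q(x|\theta, z)\). With this construction, even if \(q(x|\theta, z)\) is simply a Gaussian \(\mathcal{N}(x; \mu(z, \theta), \sigma^{2}(z, \theta))\), \(q(x|\theta)\) can in theory represent an arbitrary distribution. Many generative architectures exist, such as GAN, VAE, flow-based models, and diffusion models; this post covers diffusion models only.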

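- On sampling: the prior \(q(z)\) is usually chosen to be a distribution that can be sampled directly, such as the standard Gaussian, and a sample \(x_{i}\) is then generated indirectly by first sampling a latent variable \(z_{i}\).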

- On the training objective: to make \(q(x|\theta)\) approximate \(p(x)\), the KL divergence between the two can be used as the objective whenever it can be computed:

\[\begin{align}

loss&=KL(p(x)\|q(x|\theta))=\int{p(x)\log\frac{p(x)}{q(x|\theta)}}dx=\int p(x)\log{p(x)}dx-\int p(x)\log{q(x|\theta)}dx \notag \\

&=-\mathbb{E}_{x\sim p(x)}[\log{q(x|\theta)}]+C \notag

\end{align}

\]

Here the integral \(\int p(x)\log{p(x)}dx\) depends only on the data distribution, so it is a constant \(C\) that can be ignored when computing \(loss\).
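In practice the expectation is replaced by an average over training data. As a sketch, assuming \(N\) independent samples \(x_{1}, \dots, x_{N}\) drawn from \(p(x)\) (the symbols \(N\) and \(i\) are notation introduced here, not part of the original derivation), the objective is estimated by

\[loss \approx -\frac{1}{N}\sum_{i=1}^{N}\log{q(x_{i}|\theta)}+C
\]

so minimizing this KL divergence amounts to maximum likelihood estimation of \(\theta\).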

Diffusion models

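The core idea of a diffusion model is to turn the data distribution into a simple one (such as a Gaussian) by adding noise step by step, and then to learn how to reverse that process in order to generate new data.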

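Forward diffusion: the forward process starts from the original data \(x_{0}\) (for example, a clean image) and adds noise step by step until it reaches pure noise \(x_{T}\):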

\[x_{0} \rightarrow x_{1} \rightarrow x_{2} \rightarrow \dots \rightarrow x_{T}

\]

where step \(t\) of the noising process is

\[x_{t}=\alpha_{t}x_{t-1}+\beta_{t}\epsilon_{t}, ~~~~~~~~~~\alpha_{t}^{2}+\beta_{t}^{2} = 1, ~~~ \epsilon_{t} \sim \mathcal{N}(0,I) \tag{1}\label{1}

\]

Iterating \eqref{1} gives the relation between \(x_{t}\) and \(x_{0}\):

\[\begin{align}

x_{t}&=\alpha_{t}x_{t-1}+\beta_{t}\epsilon_{t} \notag\\

&=\alpha_{t}\alpha_{t-1}x_{t-2}+\alpha_{t}\beta_{t-1}\epsilon_{t-1}+\beta_{t}\epsilon_{t} \notag\\

&=(\prod_{i=t-1}^{t}\alpha_{i})x_{t-2}+\sqrt{1-\prod_{i=t-1}^{t}\alpha_{i}^{2}}\tilde{\epsilon_{t}} \notag\\

&=\dots \notag\\

&=(\prod_{i=1}^{t}\alpha_{i})x_{0}+\sqrt{1-\prod_{i=1}^{t}\alpha_{i}^{2}}\bar{\epsilon_{t}} \notag \\

&=\bar{\alpha}_{t}x_{0}+\bar{\beta}_{t}\bar{\epsilon}_{t} ~~~~~~ \bar{\alpha}_{t}= \prod_{i=1}^{t}\alpha_{i}, ~~~~ \bar{\alpha}^{2}_{t}+\bar{\beta}^{2}_{t}=1, ~~~\bar{\epsilon}_{t} \sim \mathcal{N}(0,I) \tag{2}\label{2}

\end{align}

\]

So \(x_{t}\) can be obtained either by adding noise to \(x_{t-1}\) or by adding noise directly to \(x_{0}\). Written in probabilistic terms, \eqref{1} and \eqref{2} become

\[p(x_{t}|x_{t-1}) \sim \mathcal{N}(x_{t}; \alpha_{t}x_{t-1}, \beta_{t}^{2}I) \tag{3}\label{3}

\]

\[p(x_{t}|x_{0}) \sim \mathcal{N}(x_{t}; \bar{\alpha}_{t}x_{0}, \bar{\beta}_{t}^{2}I) \tag{4}\label{4}

\]
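The equivalence between the step-by-step process \eqref{1} and the one-shot form \eqref{2} can be checked numerically. The sketch below is a scalar toy example using only the Python standard library; the linear schedule in `make_schedule`, the value of `x0`, and all function names are assumptions made for illustration and do not come from the derivation above.

```python
import math
import random


def make_schedule(T, beta_min=0.02, beta_max=0.2):
    """Hypothetical linear schedule for beta_t; alpha_t follows from alpha_t^2 + beta_t^2 = 1."""
    betas = [beta_min + (beta_max - beta_min) * i / (T - 1) for i in range(T)]
    alphas = [math.sqrt(1.0 - b * b) for b in betas]
    return alphas, betas


def cumulative(alphas):
    """Return the lists of alpha_bar_t and beta_bar_t for t = 1..T (stored at index t - 1)."""
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    beta_bars = [math.sqrt(1.0 - ab * ab) for ab in alpha_bars]
    return alpha_bars, beta_bars


def forward_iterative(x0, alphas, betas, rng):
    """Apply equation (1) once per step and return x_T."""
    x = x0
    for a, b in zip(alphas, betas):
        x = a * x + b * rng.gauss(0.0, 1.0)
    return x


def forward_one_shot(x0, alpha_bar, beta_bar, rng):
    """Jump from x_0 to x_t with a single noise draw, as in equation (2)."""
    return alpha_bar * x0 + beta_bar * rng.gauss(0.0, 1.0)


def mean_and_var(samples):
    """Empirical mean and (biased) variance of a list of floats."""
    m = sum(samples) / len(samples)
    v = sum((s - m) ** 2 for s in samples) / len(samples)
    return m, v


rng = random.Random(0)
alphas, betas = make_schedule(T=20)
alpha_bars, beta_bars = cumulative(alphas)
x0, n = 1.5, 20000
iter_samples = [forward_iterative(x0, alphas, betas, rng) for _ in range(n)]
shot_samples = [forward_one_shot(x0, alpha_bars[-1], beta_bars[-1], rng) for _ in range(n)]
print("iterated (1):", mean_and_var(iter_samples))
print("one-shot (2):", mean_and_var(shot_samples))
print("predicted   :", (alpha_bars[-1] * x0, beta_bars[-1] ** 2))
```

Both empirical mean and variance should agree with the values predicted by \eqref{4}, up to Monte Carlo error.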

Reverse diffusion: the reverse process starts from sampled pure noise \(x_{T}\) and removes noise step by step to obtain a generated sample \(x_{0}\):

\[x_{T} \rightarrow x_{T-1} \rightarrow x_{T-2} \rightarrow \dots \rightarrow x_{0}

\]

By \eqref{1}, once \(x_{t}\), \(\alpha_{t}\), and \(\beta_{t}\) are known, a neural network only needs to fit the noise \(\epsilon_{t}\) to give step \(t\) of the denoising process:

\[\hat{x}_{t-1} = \frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon(x_{t}, t, \theta)}) \tag{5}\label{5}

\]

Training objective: combining \eqref{1} and \eqref{5}, the loss function can be set to

\[\begin{align}

loss &= \mathbb{E}[\|x_{t-1}-\hat{x}_{t-1}\|^2]=\mathbb{E}_{{\epsilon}_{t} \sim \mathcal{N}(0, I), x_{t} \sim p(x_{t})}[\|\frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon_{t}}) - \frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon(x_{t}, t, \theta)}) \|^{2}] \notag \\

&= \frac{\beta_{t}^{2}}{\alpha_{t}^{2}}\mathbb{E}_{{\epsilon}_{t} \sim \mathcal{N}(0, I), x_{t} \sim p(x_{t})}[\|\epsilon_{t}-\epsilon(x_{t}, t, \theta)\|^{2}] \notag \\

&= \frac{\beta_{t}^{2}}{\alpha_{t}^{2}}\mathbb{E}_{\bar{\epsilon}_{t-1}, {\epsilon}_{t} \sim \mathcal{N}(0, I), x_{0} \sim p(x)}[\|\epsilon_{t}-\epsilon(\bar{\alpha}_{t}x_{0}+\alpha_{t}\bar{\beta}_{t-1}\bar{\epsilon}_{t-1}+\beta_{t}\epsilon_{t}, t, \theta)\|^{2}] \tag{6}\label{6}

\end{align}

\]

Training with \eqref{6} proceeds as follows:

- Draw a sample \(x_{0}\) from the dataset

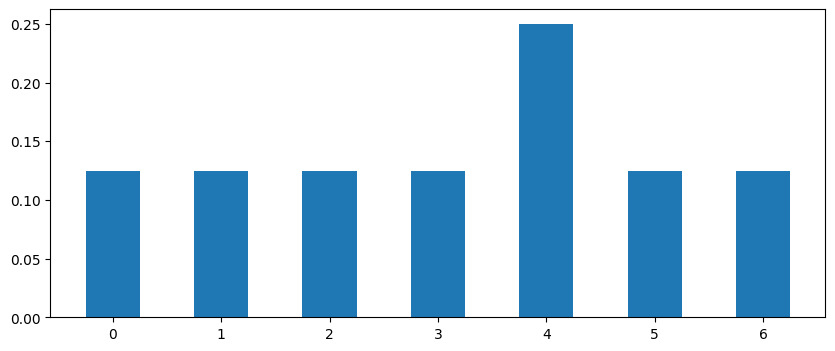

- Sample a time step \(t\)

- Sample noise \(\bar{\epsilon}_{t-1}\) and compute \(x_{t-1}\) from \eqref{2}

- Sample noise \({\epsilon}_{t}\) and compute \(x_{t}\) from \eqref{1}

- Substitute into \eqref{6} to compute the loss
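The steps above can be sketched for a scalar \(x_{0}\) as follows. This is a minimal illustration, not a training loop: `eps_model` is a hypothetical placeholder for the noise-prediction network \(\epsilon(x_{t}, t, \theta)\), and the three-step schedule and the function name `two_noise_loss` are assumptions chosen only to keep the example small.

```python
import math
import random


def two_noise_loss(x0, t, alphas, betas, eps_model, rng):
    """One Monte Carlo sample of the loss in equation (6) for a scalar x_0.

    alphas[i] and betas[i] hold alpha_{i+1} and beta_{i+1}; t runs from 1 to T.
    eps_model(x_t, t) is a placeholder for the noise-prediction network.
    """
    # alpha_bar_{t-1} and beta_bar_{t-1}; the empty product gives alpha_bar_0 = 1.
    alpha_bar_prev = math.prod(alphas[: t - 1])
    beta_bar_prev = math.sqrt(max(0.0, 1.0 - alpha_bar_prev ** 2))
    a_t, b_t = alphas[t - 1], betas[t - 1]

    eps_bar_prev = rng.gauss(0.0, 1.0)                           # first noise draw
    x_prev = alpha_bar_prev * x0 + beta_bar_prev * eps_bar_prev  # equation (2)
    eps_t = rng.gauss(0.0, 1.0)                                  # second noise draw
    x_t = a_t * x_prev + b_t * eps_t                             # equation (1)

    weight = (b_t / a_t) ** 2
    loss = weight * (eps_t - eps_model(x_t, t)) ** 2
    return loss, x_t, eps_t


rng = random.Random(0)
betas = [0.1, 0.2, 0.3]                              # assumed toy schedule
alphas = [math.sqrt(1.0 - b * b) for b in betas]


def zero_model(x_t, t):
    """Untrained stand-in that always predicts zero noise."""
    return 0.0


t = rng.randint(1, len(alphas))                      # sample a time step
loss, x_t, eps_t = two_noise_loss(0.7, t, alphas, betas, zero_model, rng)
print(t, loss)
```

Note that two independent Gaussian draws are needed per training sample, which is the cost the next part of the derivation removes.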

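This procedure samples noise twice and runs two forward diffusion steps, which makes training less stable. Can the number of noise draws be reduced? Since \(\bar{\epsilon}_{t-1}\) and \(\epsilon_{t}\) enter \(x_{t}\) only through one coupled combination, the two can be decoupled. Let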

\[\alpha_{t}\bar{\beta}_{t-1}\bar{\epsilon}_{t-1}+\beta_{t}\epsilon_{t}=\bar{\beta}_{t}\epsilon

\]

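We also want to decompose \(\epsilon_{t}\) into a component along \(\epsilon\) and a component orthogonal to \(\epsilon\), so that only the part along \(\epsilon\) has to be computed. Because \(\bar{\epsilon}_{t-1}\) and \(\epsilon_{t}\) are independent and identically distributed, the equation above can be viewed as a basis decomposition of \(\epsilon\).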

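Suppose two random variables \(x\) and \(y\) are independent and identically distributed, and \(z=ax+by\). Then \(p=bx-ay\) is orthogonal to (uncorrelated with) \(z\); for Gaussian \(x\) and \(y\) this also means \(p\) and \(z\) are independent. In that case,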

\[y = \frac{bz-ap}{a^{2}+b^{2}}

\]

Setting \(a=\alpha_{t}\bar{\beta}_{t-1}\), \(b=\beta_{t}\), \(x=\bar{\epsilon}_{t-1}\), \(y=\epsilon_{t}\), and \(z=\bar{\beta}_{t}\epsilon\) gives

\[\epsilon_{t}=\frac{\beta_{t}\bar{\beta}_{t}\epsilon-\alpha_{t}\bar{\beta}_{t-1}p}{\alpha^{2}_{t}\bar{\beta}^{2}_{t-1}+\beta_{t}^{2}}=\frac{\beta_{t}\bar{\beta}_{t}\epsilon-\alpha_{t}\bar{\beta}_{t-1}p}{\bar{\beta}_{t}^{2}}

\]
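The decomposition can be sanity-checked by simulation. The sketch below uses arbitrary illustrative values for \(\alpha_{t}\) and \(\bar{\beta}_{t-1}\) (these numbers are assumptions, not taken from the text) and verifies three things: \(\epsilon_{t}\) is rebuilt exactly from \(\epsilon\) and \(p\), the two components are empirically uncorrelated, and \(p\) has variance \(\bar{\beta}_{t}^{2}\) rather than 1.

```python
import math
import random

alpha_t = 0.9                             # assumed value for illustration
beta_t = math.sqrt(1.0 - alpha_t ** 2)
beta_bar_prev = 0.6                       # beta_bar_{t-1}, chosen arbitrarily
a, b = alpha_t * beta_bar_prev, beta_t
# beta_bar_t^2 = alpha_t^2 * beta_bar_{t-1}^2 + beta_t^2
beta_bar_t = math.sqrt(a * a + b * b)

rng = random.Random(0)
n = 50000
eps_vals, p_vals, max_err = [], [], 0.0
for _ in range(n):
    eps_bar_prev = rng.gauss(0.0, 1.0)
    eps_t = rng.gauss(0.0, 1.0)
    eps = (a * eps_bar_prev + b * eps_t) / beta_bar_t   # merged noise
    p = b * eps_bar_prev - a * eps_t                    # orthogonal component
    rebuilt = (b * beta_bar_t * eps - a * p) / beta_bar_t ** 2
    max_err = max(max_err, abs(rebuilt - eps_t))
    eps_vals.append(eps)
    p_vals.append(p)

cov = sum(e * q for e, q in zip(eps_vals, p_vals)) / n
var_eps = sum(e * e for e in eps_vals) / n
var_p = sum(q * q for q in p_vals) / n
print("max reconstruction error:", max_err)
print("cov(eps, p):", cov)
print("var(eps):", var_eps, "var(p):", var_p, "beta_bar_t^2:", beta_bar_t ** 2)
```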

Substituting into \eqref{6} gives

\[\begin{align}

loss &= \frac{\beta_{t}^{2}}{\alpha_{t}^{2}}\mathbb{E}_{\epsilon \sim \mathcal{N}(0, I), p \sim \mathcal{N}(0, \bar{\beta}_{t}^{2}I), x_{0} \sim p(x)}[\|\frac{\beta_{t}\bar{\beta}_{t}\epsilon-\alpha_{t}\bar{\beta}_{t-1}p}{\bar{\beta}_{t}^{2}}-\epsilon(\bar{\alpha}_{t}x_{0}+\bar{\beta}_{t}\epsilon, t, \theta)\|^{2}] \notag\\

&= \frac{\beta_{t}^{2}}{\alpha_{t}^{2}}\mathbb{E}_{\epsilon \sim \mathcal{N}(0, I), x_{0} \sim p(x)}[\|\frac{\beta_{t}\bar{\beta}_{t}\epsilon}{\bar{\beta}_{t}^{2}}-\epsilon(\bar{\alpha}_{t}x_{0}+\bar{\beta}_{t}\epsilon, t, \theta)\|^{2}] + C \notag\\

&\Leftrightarrow \frac{\beta_{t}^{2}}{\alpha_{t}^{2}}\mathbb{E}_{x_{t} \sim p(x_{t})}[\|\frac{\beta_{t}\epsilon}{\bar{\beta}_{t}}-\epsilon(x_{t}, t, \theta)\|^{2}] \notag\\

&\Leftrightarrow \frac{\beta_{t}^{4}}{\alpha_{t}^{2}\bar{\beta}_{t}^{2}}\mathbb{E}_{x_{t} \sim p(x_{t})}[\|\epsilon-\frac{\bar{\beta}_{t}}{\beta_{t}}\epsilon(x_{t}, t, \theta)\|^{2}] \tag{7}\label{7}

\end{align}

\]

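The second equality holds because \(p\) is independent of \(\epsilon\) and \(x_{0}\): the term linear in \(p\) has zero expectation, and the term quadratic in \(p\) has an expectation that is constant with respect to \(\theta\).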

Training with \eqref{7} proceeds as follows:

- Draw a sample \(x_{0}\) from the dataset

- Sample a time step \(t\)

- Sample noise \(\epsilon\) to serve as \(\bar{\epsilon}_{t}\), and compute \(x_{t}\) from \eqref{2}

- Substitute into \eqref{7} to compute the loss
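A scalar sketch of this single-noise procedure is given below. As before, `eps_model` is a hypothetical stand-in for the network, and the schedule and names are assumptions. The `oracle` predictor cheats by knowing \(x_{0}\); it is included only to show that the minimizer of \eqref{7} is \(\frac{\beta_{t}}{\bar{\beta}_{t}}\epsilon\), that is, the network output is rescaled by \(\frac{\bar{\beta}_{t}}{\beta_{t}}\) before being compared with \(\epsilon\).

```python
import math
import random


def single_noise_loss(x0, t, alphas, betas, eps_model, rng):
    """One Monte Carlo sample of the loss in equation (7) for a scalar x_0."""
    alpha_bar_t = math.prod(alphas[:t])
    beta_bar_t = math.sqrt(1.0 - alpha_bar_t ** 2)
    a_t, b_t = alphas[t - 1], betas[t - 1]

    eps = rng.gauss(0.0, 1.0)                     # the only noise draw
    x_t = alpha_bar_t * x0 + beta_bar_t * eps     # equation (2)

    weight = b_t ** 4 / (a_t ** 2 * beta_bar_t ** 2)
    residual = eps - (beta_bar_t / b_t) * eps_model(x_t, t)
    return weight * residual ** 2


betas = [0.1, 0.2, 0.3]                           # assumed toy schedule
alphas = [math.sqrt(1.0 - b * b) for b in betas]
x0 = 0.7


def oracle(x_t, t):
    """Cheating predictor that knows x_0; it returns (beta_t / beta_bar_t) * eps."""
    alpha_bar_t = math.prod(alphas[:t])
    beta_bar_t = math.sqrt(1.0 - alpha_bar_t ** 2)
    eps = (x_t - alpha_bar_t * x0) / beta_bar_t
    return betas[t - 1] / beta_bar_t * eps


rng = random.Random(0)
t = rng.randint(1, len(alphas))                   # sample a time step
print(single_noise_loss(x0, t, alphas, betas, oracle, rng))
```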

Sampling: once the network is trained, samples can be generated with \eqref{5}. Extra noise can also be added to make the sampling stochastic:

\[\hat{x}_{t-1} = \frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon(x_{t}, t, \theta)})+\sigma_{t}z, ~~~~ z \sim \mathcal{N}(0, I) \tag{8}\label{8}

\]
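A minimal scalar sketch of the sampling loop follows. The list `sigmas` is a hypothetical input holding one \(\sigma_{t}\) per step, since this section does not fix its value; `eps_model` again stands in for the trained network, and the toy schedule is an assumption.

```python
import math
import random


def sample(eps_model, alphas, betas, sigmas, rng):
    """Run equation (8) from t = T down to t = 1 and return the final x_0.

    Setting every sigma_t to zero recovers the deterministic update (5).
    """
    x = rng.gauss(0.0, 1.0)                       # x_T ~ N(0, 1)
    for t in range(len(alphas), 0, -1):
        a_t, b_t, s_t = alphas[t - 1], betas[t - 1], sigmas[t - 1]
        x = (x - b_t * eps_model(x, t)) / a_t + s_t * rng.gauss(0.0, 1.0)
    return x


betas = [0.1, 0.2, 0.3]                           # assumed toy schedule
alphas = [math.sqrt(1.0 - b * b) for b in betas]


def zero_model(x_t, t):
    """Untrained stand-in that always predicts zero noise."""
    return 0.0


deterministic = sample(zero_model, alphas, betas, [0.0, 0.0, 0.0], random.Random(3))
stochastic = sample(zero_model, alphas, betas, [0.1, 0.1, 0.1], random.Random(3))
print(deterministic, stochastic)
```

With the zero predictor and all \(\sigma_{t}=0\), each step only divides by \(\alpha_{t}\), so the output is \(x_{T}\) divided by the product of the \(\alpha_{t}\); this makes the loop easy to test before a real network is plugged in.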

Autoregressive VAE

Forward diffusion: \(x_{0} \rightarrow x_{1} \rightarrow x_{2} \rightarrow \dots \rightarrow x_{T}\)

Reverse diffusion: \(x_{T} \rightarrow x_{T-1} \rightarrow x_{T-2} \rightarrow \dots \rightarrow x_{0}\)

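Training objective: when the forward trajectory distribution \(p(x_{0}, x_{1}, \dots, x_{T})\) and the reverse trajectory distribution \(q(x_{0}, x_{1}, \dots, x_{T})\) coincide, \(p(x_{0})=\int{p(x_{0:T})dx_{1:T}}\) can be approximated by \(q(x_{0})=\int{q(x_{0:T})dx_{1:T}}\). The training objective can therefore be to minimize the KL divergence between \(p(x_{0:T})\) and \(q(x_{0:T})\).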

\[\begin{align}

loss&=KL(p(x_{0:T})\|q(x_{0:T}))=\int{p(x_{0:T})\log{\frac{p(x_{0:T})}{q(x_{0:T})}}dx_{0:T}} \notag \\

&=\int{p(x_{0:T})\left[\log{p(x_{0:T})}-\log{q(x_{T})}-\sum_{t=1}^{T}\log{q(x_{t-1}|x_{t})}\right]dx_{0:T}} \notag

\end{align}

\]

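Here \(q(x_{T})\) is the standard normal distribution, \(p(x_{0})\) is the data distribution, and \(p(x_{t}|x_{t-1})\) satisfies \eqref{3}. Hence \(p(x_{0:T})=p(x_{0})\prod_{t=0}^{T-1}p(x_{t+1}|x_{t})\) is an unknown distribution, but it does not depend on the model parameters. The first two terms above are therefore constants: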

\[\int{p(x_{0:T})}\log{p(x_{0:T})}dx_{0:T}=C_{1}

\]

\[\int{p(x_{0:T})}\log{q(x_{T})}dx_{0:T}=C_{2}

\]

The remaining term expands as

\[\begin{align}

\int{p(x_{0:T})\sum_{t=1}^{T}\log{q(x_{t-1}|x_{t})}dx_{0:T}}

&=\sum_{t=1}^{T}\int{p(x_{0:T})\log{q(x_{t-1}|x_{t})}dx_{0:T}} \notag\\

&=\sum_{t=1}^{T}\int{p(x_{t-1}, x_{t})\log{q(x_{t-1}|x_{t})}dx_{t-1}dx_{t}} \notag\\

&=\sum_{t=1}^{T}\int{p(x_{t}|x_{t-1})p(x_{t-1}|x_{0})p(x_{0})\log{q(x_{t-1}|x_{t})}dx_{0}dx_{t-1}dx_{t}} \notag\\

&=\sum_{t=1}^{T}\mathbb{E}_{x_{0} \sim p(x), x_{t-1} \sim p(x_{t-1}|x_{0}), x_{t} \sim p(x_{t}|x_{t-1})}[\log{q(x_{t-1}|x_{t})}] \notag\\

&\Leftrightarrow \sum_{t=1}^{T}\mathbb{E}_{x_{0} \sim p(x), \bar{\epsilon}_{t-1}, {\epsilon}_{t} \sim \mathcal{N}(0, I)}[\log{q(x_{t-1}|x_{t})}] \notag

\end{align}

\]

Assume \(q(x_{t-1}|x_{t}) \sim \mathcal{N}(x_{t-1}; \mu(x_{t}, t, \theta), \sigma_{t}^{2}I)\); then \(\log{q(x_{t-1}|x_{t})}=-\frac{1}{2\sigma^{2}_{t}}\|x_{t-1}-\mu(x_{t}, t, \theta)\|^{2}+C\). To match the forward diffusion process, further assume \(\mu(x_{t}, t, \theta)=\frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon(x_{t}, t, \theta)})\). Substituting into the expression above gives

\[\begin{align}

loss&=KL(p(x_{0:T})\|q(x_{0:T}))=-\sum_{t=1}^{T}\mathbb{E}_{x_{0} \sim p(x), \bar{\epsilon}_{t-1}, {\epsilon}_{t} \sim \mathcal{N}(0, I)}[\log{q(x_{t-1}|x_{t})}]+C \notag\\

&=\sum_{t=1}^{T}\frac{\beta_{t}^{2}}{2\alpha_{t}^{2}\sigma_{t}^{2}}\mathbb{E}_{x_{0} \sim p(x), \bar{\epsilon}_{t-1}, {\epsilon}_{t} \sim \mathcal{N}(0, I)}[\|\epsilon_{t}-\epsilon(x_{t}, t, \theta)\|^{2}]+C \notag \\

&\Leftrightarrow \sum_{t=1}^{T}\frac{\beta_{t}^{4}}{2\alpha_{t}^{2}\bar{\beta}_{t}^{2}\sigma_{t}^{2}}\mathbb{E}_{x_{t} \sim p(x_{t})}[\|\epsilon-\frac{\bar{\beta}_{t}}{\beta_{t}}\epsilon(x_{t}, t, \theta)\|^{2}] \tag{9}\label{9}

\end{align}

\]
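The step from \(\log{q(x_{t-1}|x_{t})}\) to the noise-prediction form is worth writing out. Using \(x_{t-1}=\frac{1}{\alpha_{t}}(x_{t}-\beta_{t}\epsilon_{t})\) from \eqref{1} together with the assumed form of \(\mu(x_{t}, t, \theta)\), the \(x_{t}\) terms cancel:

\[x_{t-1}-\mu(x_{t}, t, \theta)=\frac{1}{\alpha_{t}}(x_{t}-\beta_{t}\epsilon_{t})-\frac{1}{\alpha_{t}}(x_{t}-\beta_{t}\epsilon(x_{t}, t, \theta))=-\frac{\beta_{t}}{\alpha_{t}}(\epsilon_{t}-\epsilon(x_{t}, t, \theta))
\]

so that

\[-\log{q(x_{t-1}|x_{t})}=\frac{\beta_{t}^{2}}{2\alpha_{t}^{2}\sigma_{t}^{2}}\|\epsilon_{t}-\epsilon(x_{t}, t, \theta)\|^{2}+C
\]

The passage from \(\epsilon_{t}\) to \(\epsilon\) then reuses the orthogonal decomposition that led to \eqref{7}.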

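Compared with \eqref{7}, \eqref{9} only multiplies each term by an extra factor \(\frac{1}{2\sigma^{2}_{t}}\) and folds the sampling of the time step into the loss (through the sum over the diffusion steps \(t\)). \eqref{9} is thus another way of looking at the training objective of diffusion models.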

Sampling: because \(q(x_{t-1}|x_{t})\) is modeled as \(\mathcal{N}(x_{t-1}; \mu(x_{t}, t, \theta), \sigma_{t}^{2}I)\), the sampling step is

\[x_{t-1}=\mu(x_{t}, t, \theta)+\sigma_{t}z=\frac{1}{\alpha_{t}}({x_{t} - \beta_{t}\epsilon(x_{t}, t, \theta)})+\sigma_{t}z

\]

This matches \eqref{8} and also explains the extra random term \(z\) sampled there.

Bayesian posterior

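In the first derivation of the diffusion model, we directly minimized the Euclidean distance between \(x_{t-1}\) and \(\hat{x}_{t-1}\), without looking at the model from a probabilistic angle. Treating the diffusion model as an autoregressive VAE does take the probabilistic view, but the derivation is rather involved. This section simplifies the derivation from the viewpoint of the Bayesian posterior.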

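To sample \(x_{t-1}\) from \(x_{t}\), we can model \(q(x_{t-1}|x_{t})\) as \(\mathcal{N}(x_{t-1}; \mu(x_{t}, t, \theta), \sigma_{t}^{2}I)\). If the true conditional distribution \(p(x_{t-1}|x_{t})\) were available, we could minimize the KL divergence between the two. Unfortunately it is not: \(x_{t}\) is only defined through \(x_{0}\), so by Bayes' rule \(p(x_{t-1}|x_{t})\) involves the marginals \(p(x_{t-1})\) and \(p(x_{t})\), which depend on the unknown data distribution. As a fallback, \(p(x_{t-1}|x_{t}, x_{0})\) can be computed, and \(q(x_{t-1}|x_{t}, x_{0})\) is used to approximate it: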

\[p(x_{t-1}|x_{t}, x_{0})=\frac{p(x_{t}|x_{t-1}, x_{0})p(x_{t-1}|x_{0})}{p(x_{t}|x_{0})}=\frac{p(x_{t}|x_{t-1})p(x_{t-1}|x_{0})}{p(x_{t}|x_{0})} \tag{10}\label{10}

\]

Substituting \eqref{3} and \eqref{4} into \eqref{10} gives

\[p(x_{t-1}|x_{t}, x_{0})=C\exp{(-\frac{\|x_{t}-\alpha_{t}x_{t-1}\|^{2}}{2\beta_{t}^{2}}-\frac{\|x_{t-1}-\bar{\alpha}_{t-1}x_{0}\|^{2}}{2\bar{\beta}_{t-1}^{2}}+\frac{\|x_{t}-\bar{\alpha}_{t}x_{0}\|^{2}}{2\bar{\beta}_{t}^{2}})} \sim \mathcal{N}(x_{t-1}; \mu, \sigma^{2}I)

\]

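For a normal distribution \(\mathcal{N}(x; \mu, \sigma^{2}I)\), \(p(x)=C\exp(-\frac{1}{2\sigma^{2}}\|x-\mu\|^{2})\): in the exponent, the coefficient of the quadratic term in \(x\) is \(-\frac{1}{2\sigma^{2}}\) and the coefficient of the linear term in \(x\) is \(\frac{\mu}{\sigma^{2}}\).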

In the exponent above, the coefficient of the quadratic term in \(x_{t-1}\) is \(-(\frac{\alpha_{t}^{2}}{2\beta_{t}^{2}}+\frac{1}{2\bar{\beta}_{t-1}^{2}})=-\frac{\bar{\beta}_{t}^{2}}{2\beta_{t}^{2}\bar{\beta}_{t-1}^{2}}\), and the coefficient of the linear term in \(x_{t-1}\) is \(\frac{\alpha_{t}x_{t}}{\beta_{t}^{2}}+\frac{\bar{\alpha}_{t-1}x_{0}}{\bar{\beta}_{t-1}^{2}}=\frac{\alpha_{t}\bar{\beta}_{t-1}^{2}x_{t}+\bar{\alpha}_{t-1}\beta_{t}^{2}x_{0}}{\beta_{t}^{2}\bar{\beta}_{t-1}^{2}}\). Matching these against the normal form and using \eqref{2} gives

\[\sigma=\frac{\beta_{t}\bar{\beta}_{t-1}}{\bar{\beta}_{t}} ~~~~~~~~~~~ \mu=\frac{\alpha_{t}\bar{\beta}_{t-1}^{2}x_{t}+\bar{\alpha}_{t-1}\beta_{t}^{2}x_{0}}{\bar{\beta}_{t}^{2}}=\frac{1}{\alpha_{t}}(x_{t}-\frac{\beta_{t}^{2}}{\bar{\beta}_{t}}\bar{\epsilon}_{t})

\]
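These closed forms can be verified numerically by reading the Gaussian parameters directly off the exponent. The sketch below does this for one arbitrary scalar configuration; the schedule and the values of `x0` and `eps_bar_t` are assumptions picked only for the check.

```python
import math

betas = [0.1, 0.2, 0.3]                           # assumed toy schedule
alphas = [math.sqrt(1.0 - b * b) for b in betas]
t = 3
alpha_t, beta_t = alphas[t - 1], betas[t - 1]
alpha_bar_prev = math.prod(alphas[: t - 1])
alpha_bar_t = alpha_bar_prev * alpha_t
beta_bar_prev = math.sqrt(1.0 - alpha_bar_prev ** 2)
beta_bar_t = math.sqrt(1.0 - alpha_bar_t ** 2)

x0, eps_bar_t = 0.7, -0.4                         # arbitrary test values
x_t = alpha_bar_t * x0 + beta_bar_t * eps_bar_t   # equation (2)

# Coefficients of the quadratic and linear terms in x_{t-1} inside the exponent.
quad = -(alpha_t ** 2 / (2 * beta_t ** 2) + 1 / (2 * beta_bar_prev ** 2))
lin = alpha_t * x_t / beta_t ** 2 + alpha_bar_prev * x0 / beta_bar_prev ** 2
sigma_sq = -1.0 / (2.0 * quad)                    # from quad = -1 / (2 sigma^2)
mu = sigma_sq * lin                               # from lin = mu / sigma^2

# Closed forms stated in the text.
sigma_closed = beta_t * beta_bar_prev / beta_bar_t
mu_from_x0 = (alpha_t * beta_bar_prev ** 2 * x_t
              + alpha_bar_prev * beta_t ** 2 * x0) / beta_bar_t ** 2
mu_from_eps = (x_t - beta_t ** 2 / beta_bar_t * eps_bar_t) / alpha_t

print(math.sqrt(sigma_sq), sigma_closed)
print(mu, mu_from_x0, mu_from_eps)
```

The two printed values of \(\sigma\) and the three printed values of \(\mu\) should agree to floating-point precision.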

We therefore set \(\mu(x_{t}, t, \theta)=\frac{\alpha_{t}\bar{\beta}_{t-1}^{2}x_{t}+\bar{\alpha}_{t-1}\beta_{t}^{2}x_{0}(x_{t}, t, \theta)}{\bar{\beta}_{t}^{2}}=\frac{1}{\alpha_{t}}(x_{t}-\frac{\beta_{t}^{2}}{\bar{\beta}_{t}}\epsilon(x_{t}, t, \theta))\) and \(\sigma_{t}=\frac{\beta_{t}\bar{\beta}_{t-1}}{\bar{\beta}_{t}}\), where \(x_{0}(x_{t}, t, \theta)\) denotes the network's estimate of \(x_{0}\).

Training objective: the objective can now be the cross-entropy between \(p(x_{t-1}|x_{t}, x_{0})\) and \(q(x_{t-1}|x_{t}, x_{0})\).

\[\begin{align}

loss &= \mathbb{E}_{x_{0} \sim p(x_{0}), x_{t} \sim p(x_{t}|x_{0}), x_{t-1}\sim p(x_{t-1}|x_{t}, x_{0})}[-\log{q(x_{t-1}|x_{t}, x_{0})}] \notag \\

&= \mathbb{E}_{x_{0} \sim p(x_{0}), x_{t} \sim p(x_{t}|x_{0}), x_{t-1}\sim p(x_{t-1}|x_{t}, x_{0})}[\frac{1}{2\sigma_{t}^{2}}\|x_{t-1}-\mu(x_{t}, t, \theta)\|^{2}]+C \notag \\

&= \mathbb{E}_{x_{0} \sim p(x_{0}), \bar{\epsilon}_{t}, p \sim \mathcal{N}(0, I)}[\frac{1}{2\sigma_{t}^{2}}\|\frac{1}{\alpha_{t}}(x_{t}-\frac{\beta_{t}^{2}}{\bar{\beta}_{t}}\bar{\epsilon}_{t})+\sigma_{t}p-\frac{1}{\alpha_{t}}(x_{t}-\frac{\beta_{t}^{2}}{\bar{\beta}_{t}}\epsilon(x_{t}, t, \theta))\|^{2}]+C \notag \\

&= \mathbb{E}_{x_{0} \sim p(x_{0}), \bar{\epsilon}_{t}, p \sim \mathcal{N}(0, I)}[\frac{\beta_{t}^{4}}{2\alpha_{t}^{2}\bar{\beta}_{t}^{2}\sigma_{t}^{2}}\|\bar{\epsilon}_{t}-\epsilon(x_{t}, t, \theta)-\frac{\alpha_{t}\bar{\beta}_{t}\sigma_{t}}{\beta_{t}^{2}}p\|^{2}]+C \notag \\

&\Leftrightarrow \frac{\beta_{t}^{4}}{2\alpha_{t}^{2}\bar{\beta}_{t}^{2}\sigma_{t}^{2}}\mathbb{E}_{x_{0} \sim p(x_{0}), \epsilon \sim \mathcal{N}(0, I)}[\|\epsilon-\epsilon(x_{t}, t, \theta)\|^{2}] \tag{11}\label{11}

\end{align}

\]

Sampling: because \(q(x_{t-1}|x_{t}, x_{0})\) is modeled as \(\mathcal{N}(x_{t-1}; \mu(x_{t}, t, \theta), \sigma_{t}^{2}I)\), the sampling step is

\[x_{t-1} = \frac{1}{\alpha_{t}}(x_{t}-\frac{\beta_{t}^{2}}{\bar{\beta}_{t}}\epsilon(x_{t}, t, \theta))+\frac{\beta_{t}\bar{\beta}_{t-1}}{\bar{\beta}_{t}}z \tag{12}\label{12}

\]
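A scalar sketch of ancestral sampling with \eqref{12} is shown below. The function name, the toy schedule, and the `oracle` predictor are assumptions for illustration; the oracle corresponds to the degenerate case where every training sample equals the same value `x0`, in which \(\bar{\epsilon}_{t}\) can be recovered exactly from \(x_{t}\).

```python
import math
import random


def posterior_sample(eps_model, alphas, betas, rng):
    """Ancestral sampling with the update in equation (12), scalar case."""
    x = rng.gauss(0.0, 1.0)                        # x_T ~ N(0, 1)
    for t in range(len(alphas), 0, -1):
        a_t, b_t = alphas[t - 1], betas[t - 1]
        alpha_bar_prev = math.prod(alphas[: t - 1])   # equals 1 when t = 1
        alpha_bar_t = alpha_bar_prev * a_t
        beta_bar_prev = math.sqrt(max(0.0, 1.0 - alpha_bar_prev ** 2))
        beta_bar_t = math.sqrt(1.0 - alpha_bar_t ** 2)
        sigma_t = b_t * beta_bar_prev / beta_bar_t    # vanishes at t = 1
        mean = (x - b_t ** 2 / beta_bar_t * eps_model(x, t)) / a_t
        x = mean + sigma_t * rng.gauss(0.0, 1.0)
    return x


betas = [0.1, 0.2, 0.3]                            # assumed toy schedule
alphas = [math.sqrt(1.0 - b * b) for b in betas]
x0 = 0.7


def oracle(x_t, t):
    """Exact eps_bar_t when every training sample equals x0 (a toy assumption)."""
    alpha_bar_t = math.prod(alphas[:t])
    beta_bar_t = math.sqrt(1.0 - alpha_bar_t ** 2)
    return (x_t - alpha_bar_t * x0) / beta_bar_t


result = posterior_sample(oracle, alphas, betas, random.Random(0))
print(result)
```

Because \(\bar{\beta}_{0}=0\), the last step adds no noise, and with this oracle the sampler should return `x0` up to floating-point rounding regardless of the seed.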

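It is worth noting that, compared with \eqref{9}, the network in \eqref{11} absorbs an extra multiplicative factor \(\frac{\bar{\beta}_{t}}{\beta_{t}}\); correspondingly, the network output in \eqref{12} is divided by this same factor. In addition, deriving the model through the Bayesian posterior yields a concrete expression for \(\sigma_{t}\).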

Afterword

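The three derivations can also be viewed from another angle. In \eqref{6} and \eqref{9} the optimization target is \(\|x_{t-1}-\hat{x}_{t-1}\|^{2}\) and the network output fits \(\epsilon_{t}\), whereas in \eqref{11} the target becomes \(\|x_{0}-\hat{x}_{0}\|^{2}\) and the network output fits \(\bar{\epsilon}_{t}\). This is why the training objectives and sampling formulas differ slightly.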