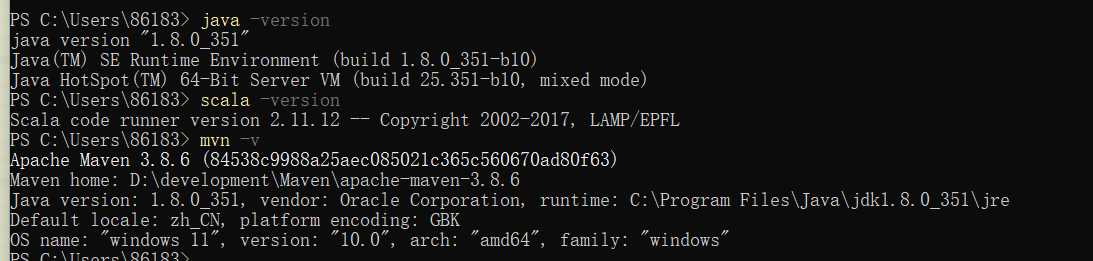

1、环境准备

java -version

scala -version

mvn -version

spark -version

2、创建spark项目

创建spark项目,有两种方式;一种是本地搭建hadoop和spark环境,另一种是下载maven依赖;最后在idea中进行配置,下面分别记录两种方法

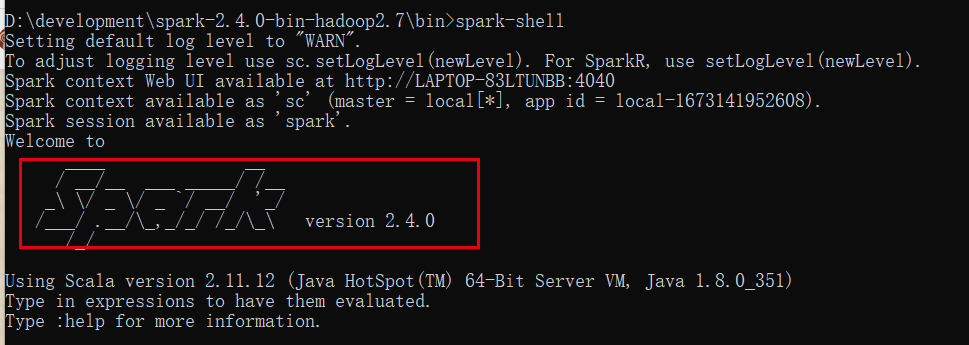

2.1 本地搭建hadoop和spark环境

参考window搭建spark + IDEA开发环境

2.2 下载maven依赖

参考 Windows平台搭建Spark开发环境(Intellij idea 2020.1社区版+Maven 3.6.3+Scala 2.11.8)

参考 Intellij IDEA编写Spark应用程序超详细步骤(IDEA+Maven+Scala)

2.2.1 maven项目pom配置

<properties><project.build.sourceEncoding>UTF-8</project.build.sourceEncoding><spark.version>2.4.0</spark.version><scala.version>2.11</scala.version><scope.flag>provide</scope.flag> </properties><dependencies><!--spark 依赖--><dependency><groupId>org.apache.spark</groupId><artifactId>spark-core_${scala.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming_${scala.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-sql_${scala.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-hive_${scala.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-mllib_${scala.version}</artifactId><version>${spark.version}</version></dependency><!--maven自带依赖--><dependency><groupId>junit</groupId><artifactId>junit</artifactId><version>3.8.1</version><scope>test</scope></dependency> </dependencies>

2.2.2 maven中settings文件配置

<?xml version="1.0" encoding="UTF-8"?>

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd"><!--设置本地maven仓库-->

<localRepository>D:\development\LocalMaven</localRepository><!--设置镜像-->

<mirrors><mirror><id>nexus-aliyun</id><mirrorOf>central</mirrorOf><name>Nexus aliyun</name><url>http://maven.aliyun.com/nexus/content/groups/public</url></mirror>

</mirrors></settings>

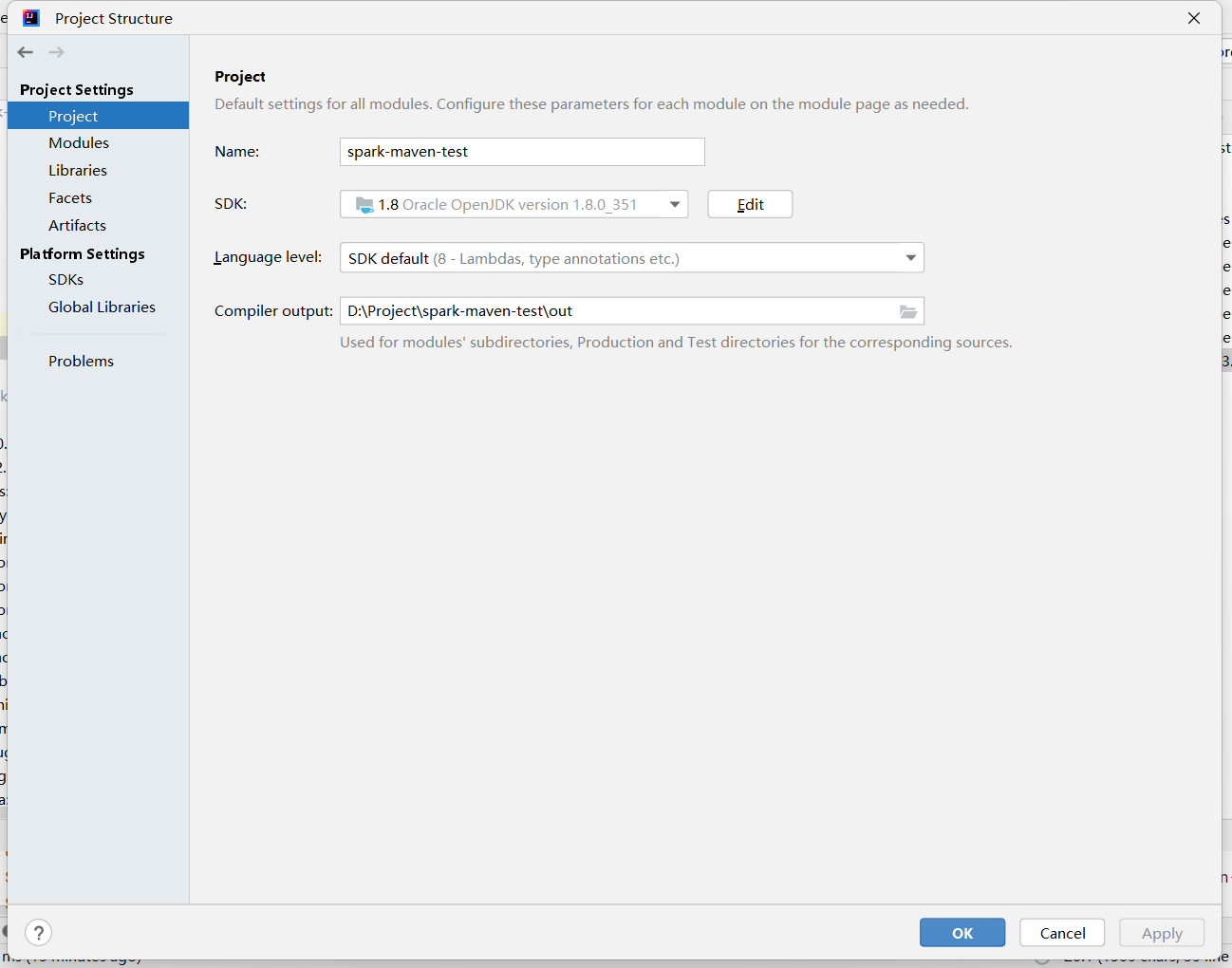

2.3 Project Settings 和 Project Structure配置

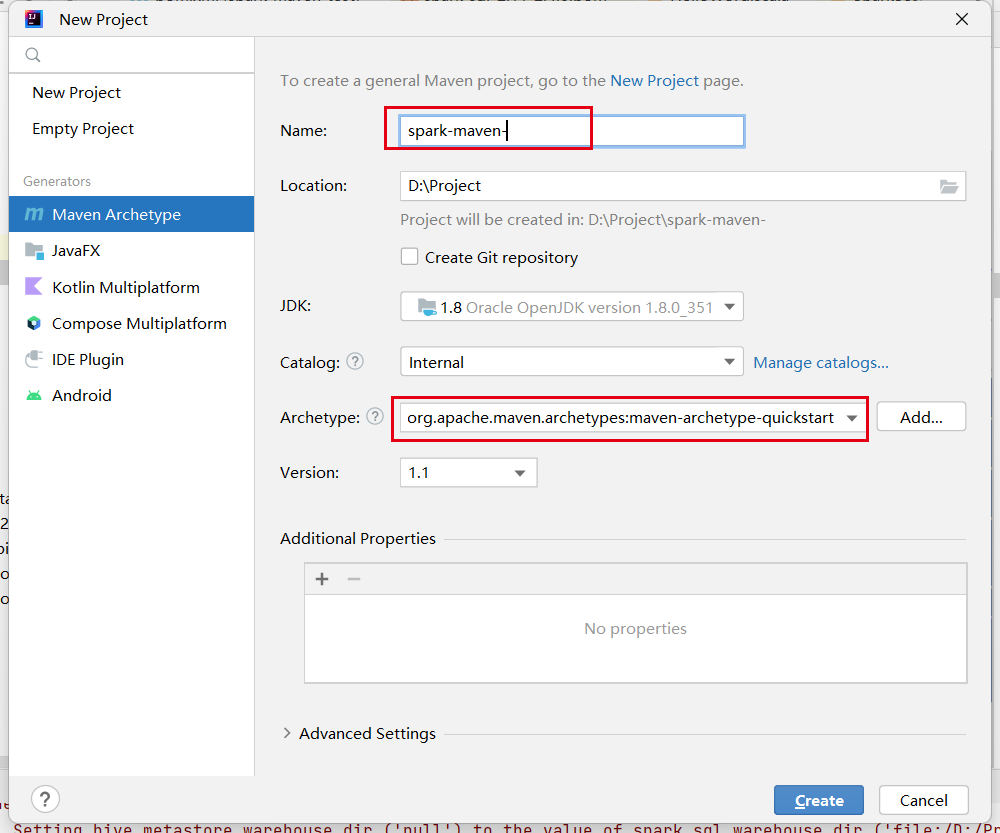

2.4 创建spark maven项目

2.4.1 Archetype选择quickstart,选择JDK

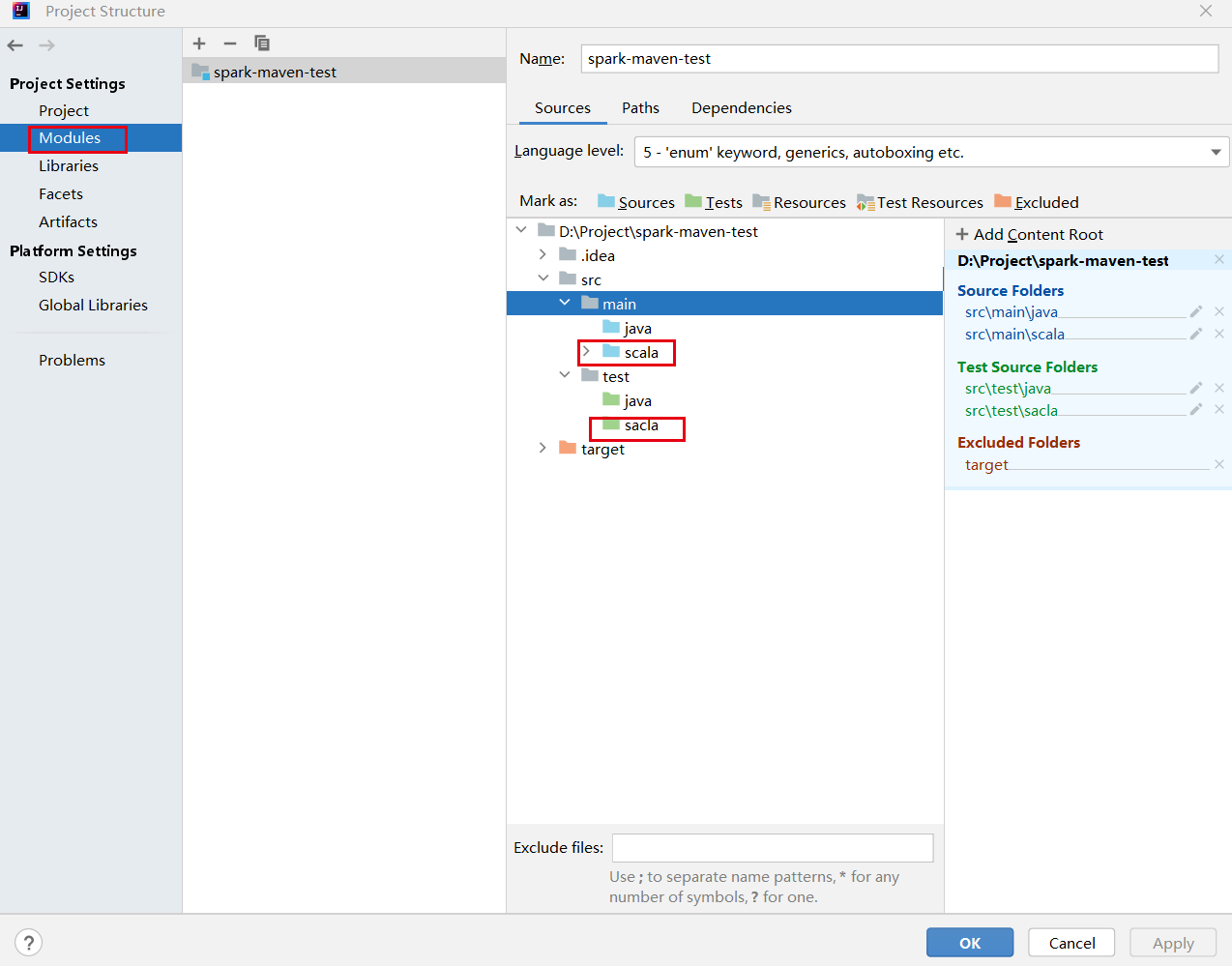

2.4.2 modules新建scala Sources文件

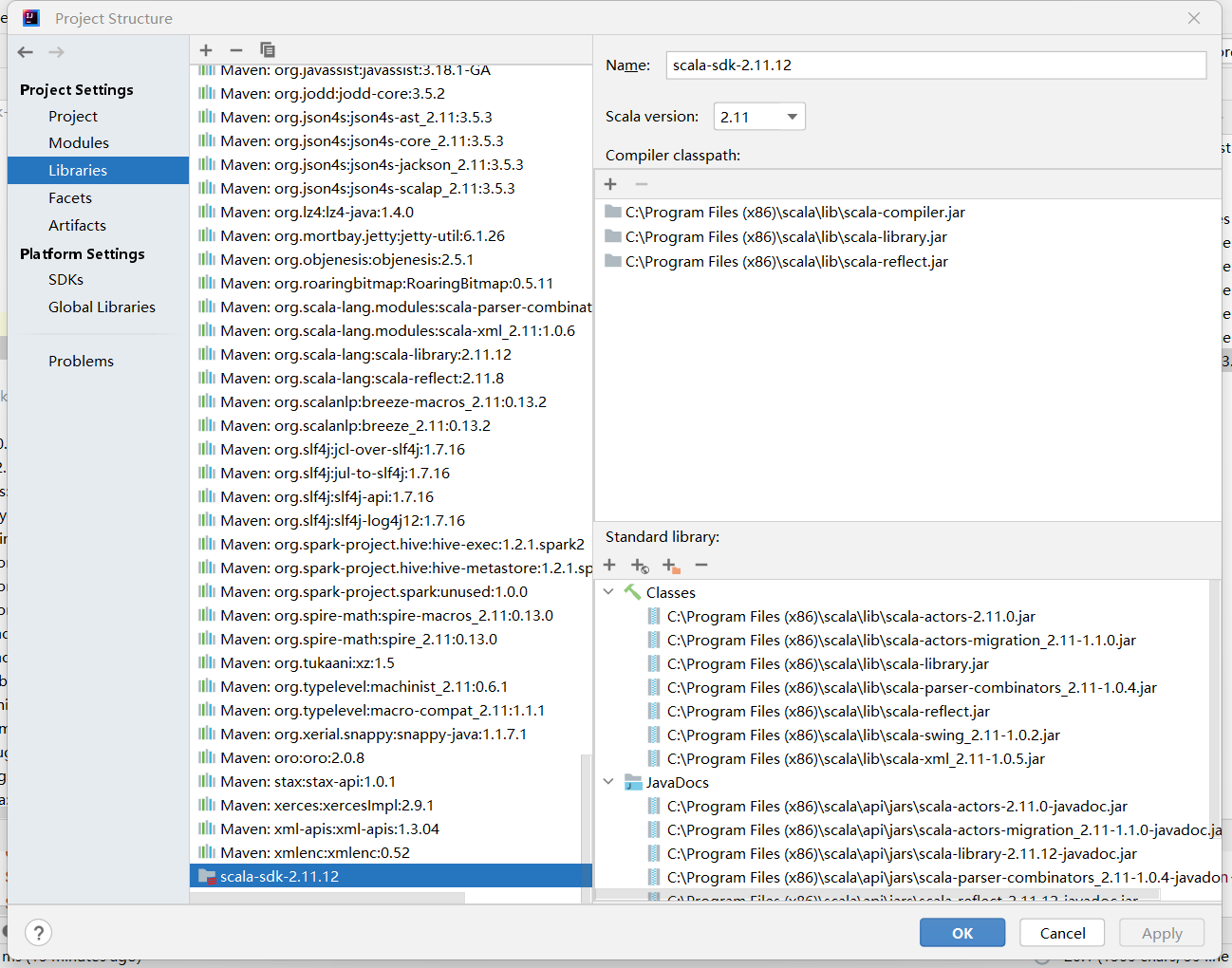

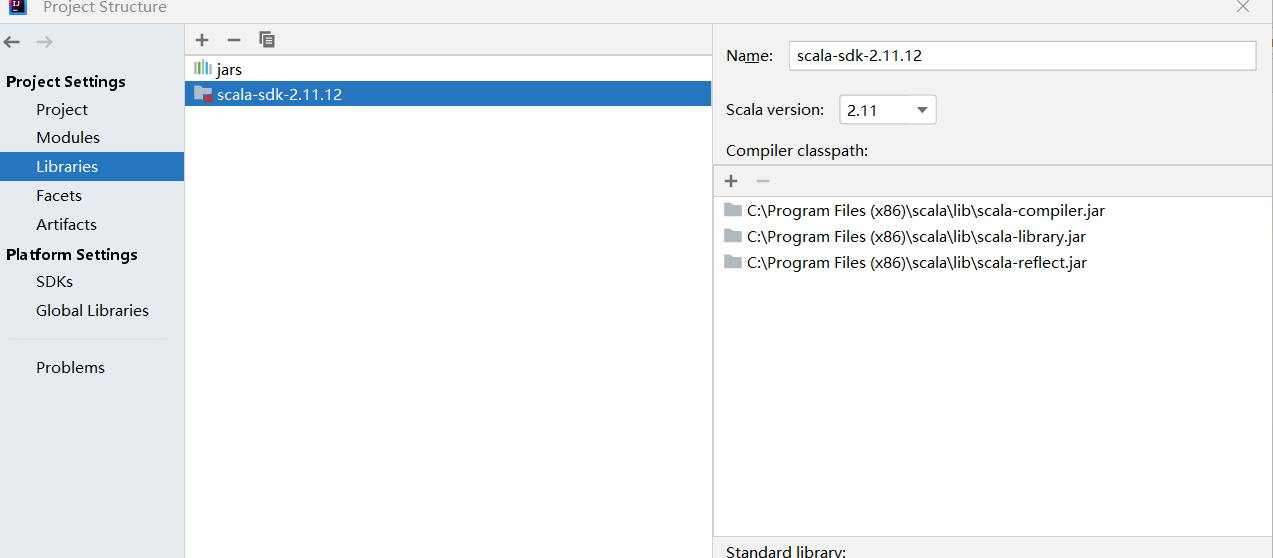

2.4.3 libraries新增sacla sdk,可以创建scala项目

3. spark程序

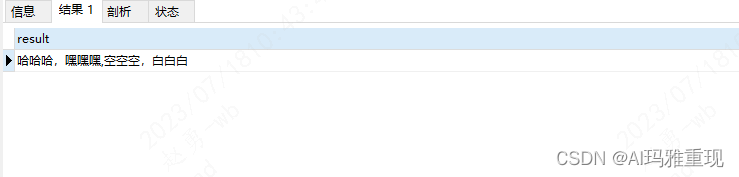

word count 和spark show函数

import org.apache.spark.sql.SparkSessionobject HelloWord {def main(args: Array[String]): Unit = {val spark = SparkSession.builder.master("local").appName("Spark CSV Reader").getOrCreateval sc = spark.sparkContext// 输入文件val input = "D:\\Project\\RecommendSystem\\src\\main\\scala\\weekwlkl"// 计算频次val count = sc.textFile(input).flatMap(x => x.split(" ")).map(x => (x, 1)).reduceByKey((x, y) => x + y);// 打印结果count.foreach(x => println(x._1 + ":" + x._2));import spark.implicits._Seq("1", "2").toDF().show()// 结束sc.stop()}

}

4. 总结

创建spark项目,并且本地调试通过,有很多注意点,包括idea的配置,再次记录一下,以便后面学习

tips

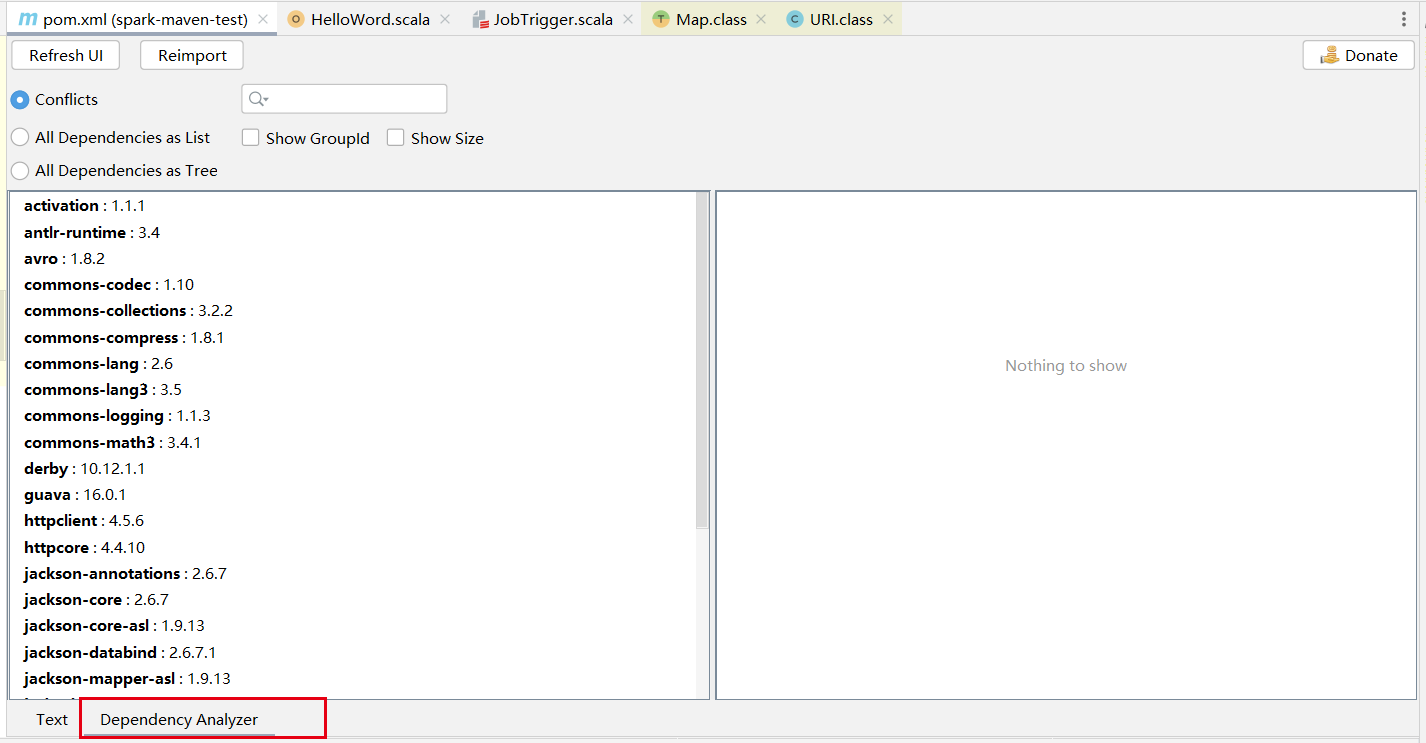

- maven helper用来查看是否存在jar包冲突

\weekwlkl)

4. 总结

创建spark项目,并且本地调试通过,有很多注意点,包括idea的配置,再次记录一下,以便后面学习

tips

- maven helper用来查看是否存在jar包冲突