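- 🍨 This post is a study log from the 🔗365-Day Deep Learning Training Camp (365天深度学习训练营)

- 🍖 Original author: K同学啊 | tutoring and custom projects available

- 🚀 Source: K同学's study circle (K同学的学习圈子)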

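Contents

- Preface

- 1 My environment

- 2 Implementing DenseNet in PyTorch

- 2.1 Preparation

- 2.1.1 Importing libraries

- 2.1.2 Setting up the GPU (use the GPU if the device supports one, otherwise the CPU)

- 2.1.3 Importing the data

- 2.1.4 Visualizing the data

- 2.1.4 Image transforms

- 2.1.4 Splitting the dataset

- 2.1.4 Loading the data

- 2.1.4 Inspecting the data

- 2.2 Building the densenet121 model

- 2.3 Training the model

- 2.3.1 Setting hyperparameters

- 2.3.2 Writing the training function

- 2.3.3 Writing the test function

- 2.3.4 Training

- 2.4 Visualizing the results

- 2.4 Predicting a specified image

- 2.6 Model evaluation

- 3 Implementing DenseNet in TensorFlow

- 3.1. Importing libraries

- 3.2. Setting up the GPU (skip this step if you are using the CPU)

- 3.3. Importing the data

- 3.4. Inspecting the data

- 3.5. Loading the data

- 3.6. Checking the data again

- 3.7. Configuring the dataset

- 3.8. Visualizing the data

- 3.9. Building the DenseNet network

- 3.10. Compiling the model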

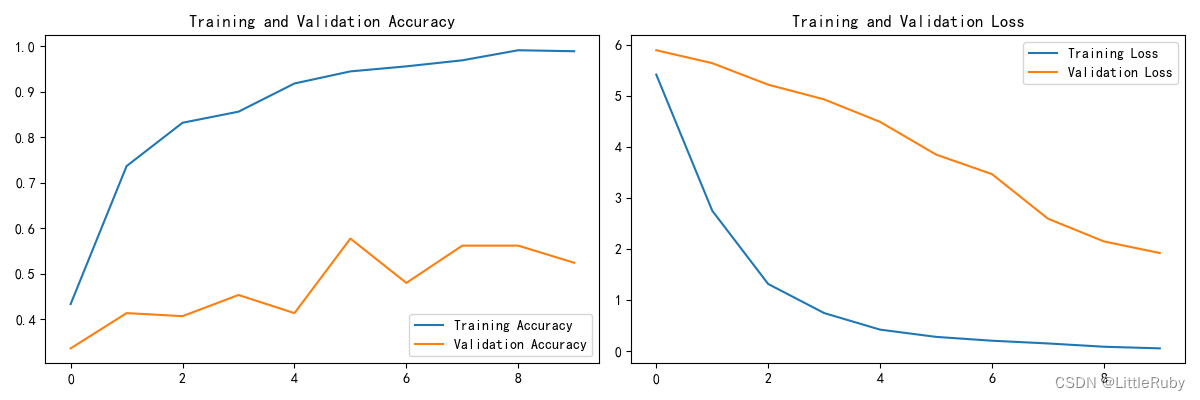

- 3.11. Training the model

- 3.12. Model evaluation

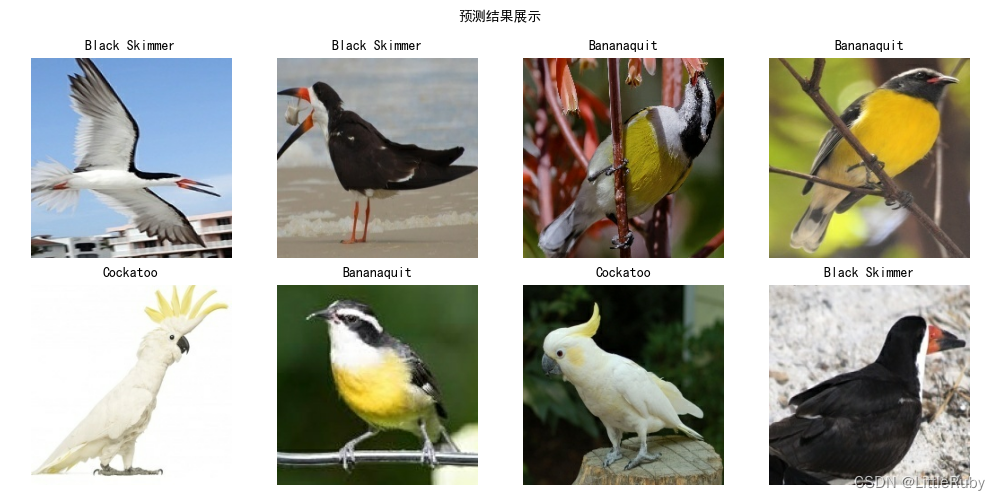

- 3.13. Image prediction

- 4 Key concepts in detail

- 4.1 The DenseNet algorithm in detail

- 4.1.1 Preface

- 4.1.2 Design philosophy

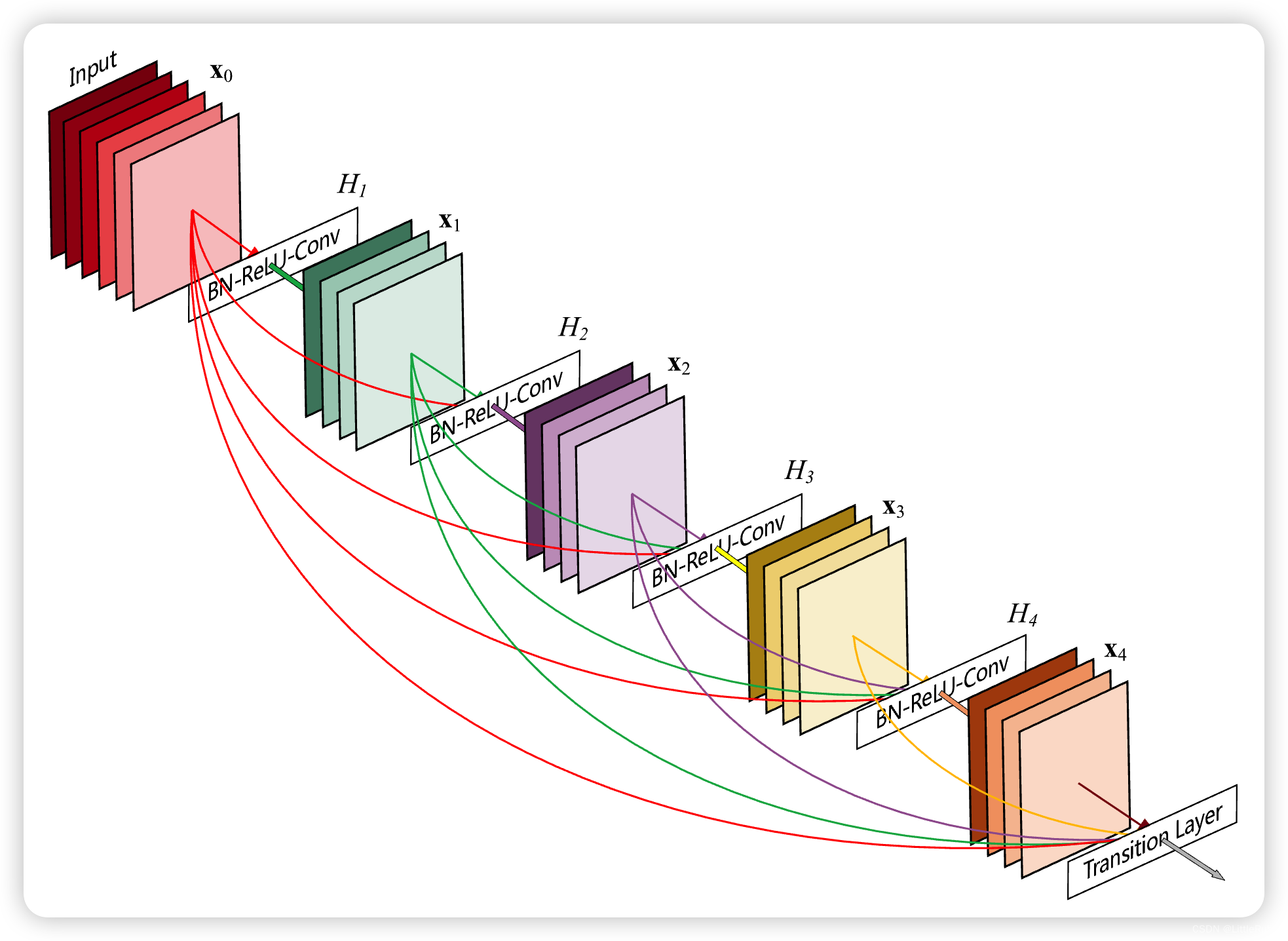

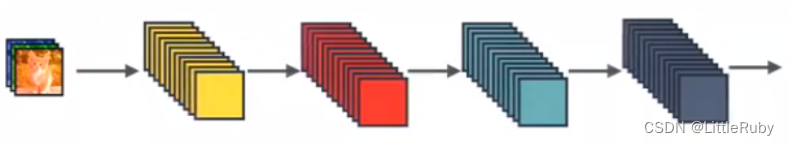

- 4.1.2.1 标准神经网络

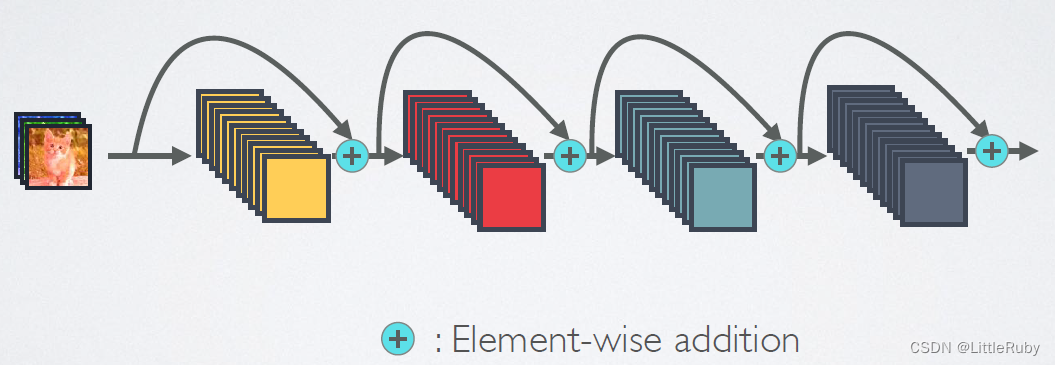

- 4.1.2.2 ResNet

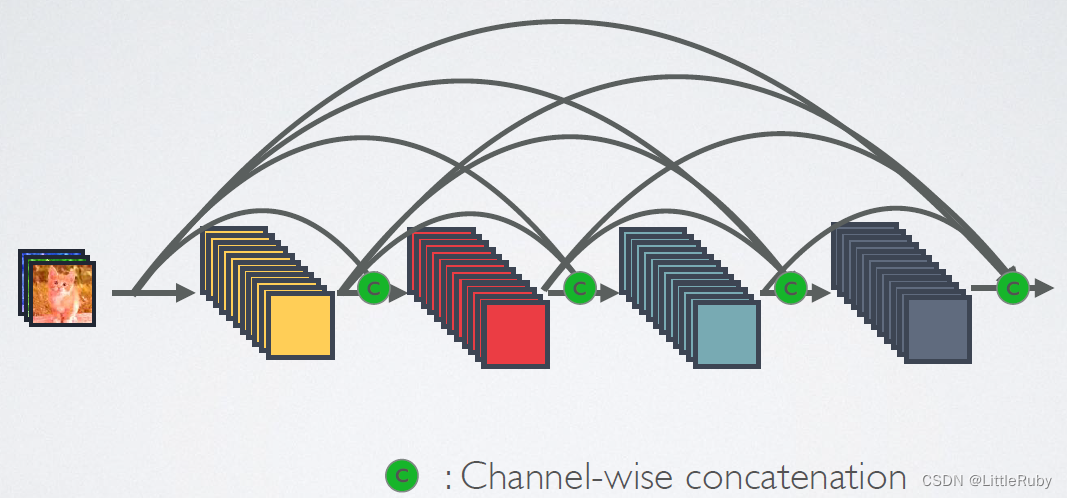

- 4.1.2.3 DenseNet

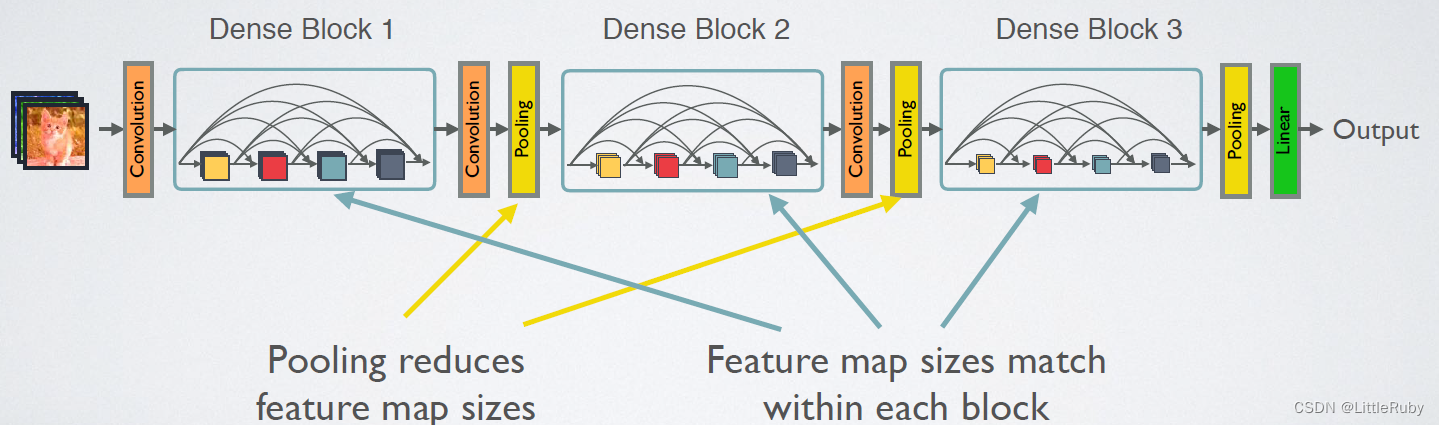

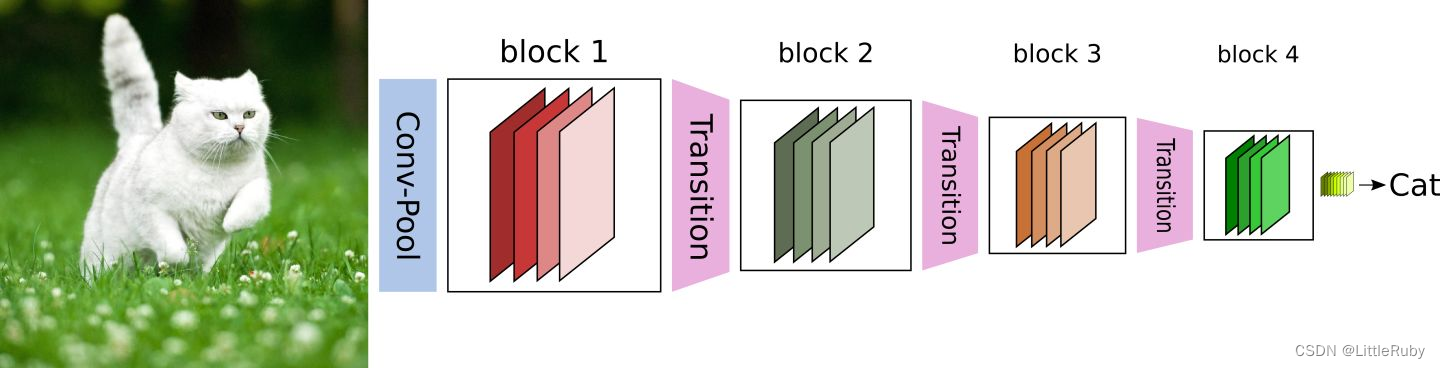

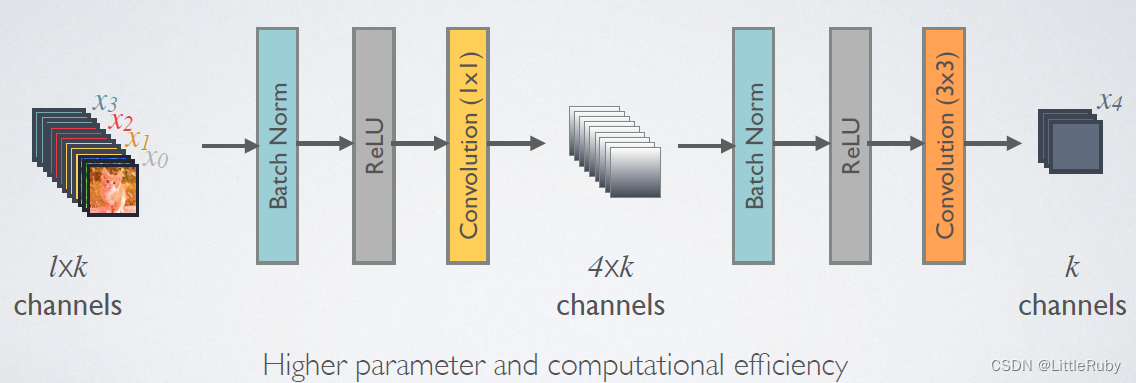

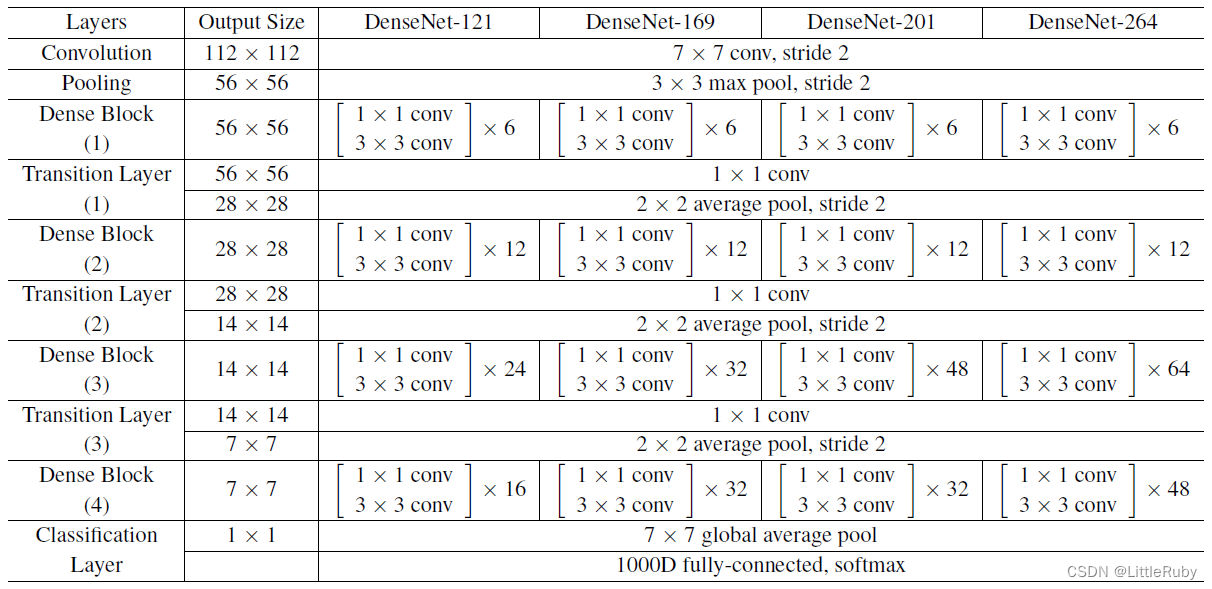

- 4.1.3 Network structure

- 4.1.4 Comparison of results

- 4.1.5 Implementing DenseNet121 with PyTorch

- Summary

Preface

Keywords: implementing DenseNet in PyTorch, implementing DenseNet in TensorFlow, DenseNet explained

1 My environment

- OS: Windows 11

- Language: Python 3.8.6

- IDE: PyCharm 2020.2.3

- Deep learning environment:

torch == 1.9.1+cu111

torchvision == 0.10.1+cu111

TensorFlow 2.10.1

- GPU: NVIDIA GeForce RTX 4070

2 Implementing DenseNet in PyTorch

2.1 Preparation

2.1.1 Importing libraries

import torch

import torch.nn as nn

import time

import copy

from torchvision import transforms, datasets

from pathlib import Path

from PIL import Image

import torchsummary as summary

import torch.nn.functional as F

from collections import OrderedDict

import re

import torch.utils.model_zoo as model_zoo

import matplotlib.pyplot as plt

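plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese labels

plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly

plt.rcParams['figure.dpi'] = 100  # figure resolution

import warnings

warnings.filterwarnings('ignore')  # suppress warnings that do not need to be printed

2.1.2 Setting up the GPU (use the GPU if the device supports one, otherwise the CPU)

"""Preparation: set up the GPU"""

# Use the GPU if the device supports one, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("Using {} device".format(device))

Output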

Using cuda device

2.1.3 Importing the data

'''Preparation: import the data'''

data_dir = r"D:\DeepLearning\data\bird\bird_photos"
data_dir = Path(data_dir)
data_paths = list(data_dir.glob('*'))
# Each sub-folder name is a class name; Path.name works regardless of the path separator
classeNames = [path.name for path in data_paths]
print(classeNames)

Output

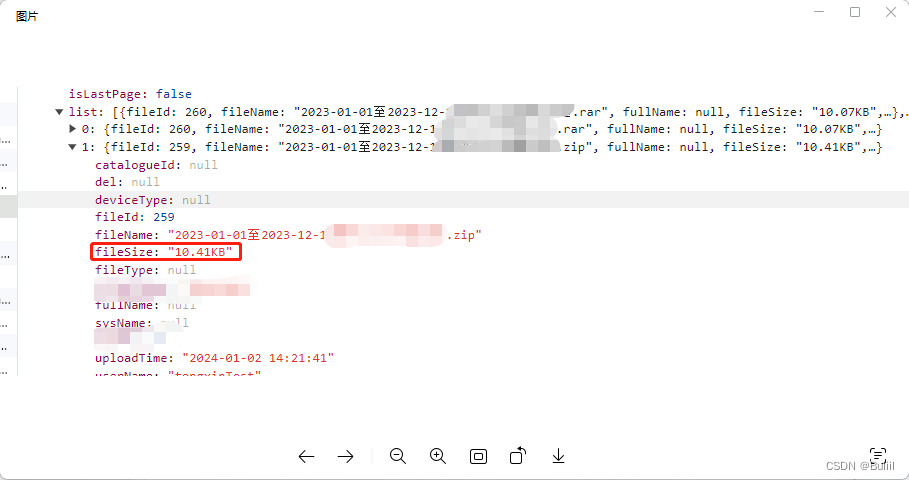

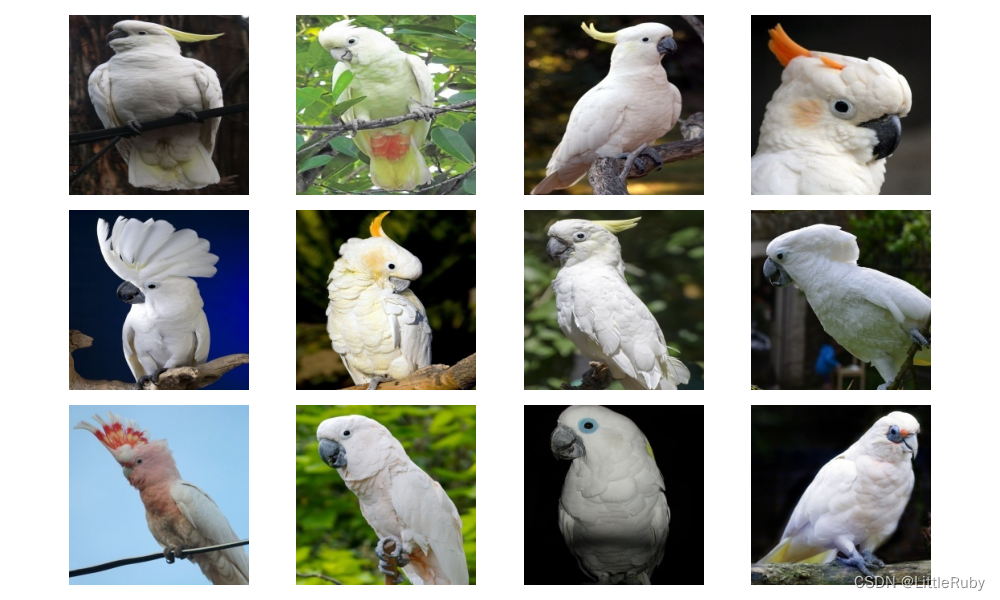

['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

2.1.4 Visualizing the data

'''Preparation: visualize the data'''

subfolder = Path(data_dir) / "Cockatoo"
image_files = list(p.resolve() for p in subfolder.glob('*') if p.suffix in [".jpg", ".png", ".jpeg"])
plt.figure(figsize=(10, 6))
for i in range(len(image_files[:12])):
    image_file = image_files[i]
    ax = plt.subplot(3, 4, i + 1)
    img = Image.open(str(image_file))
    plt.imshow(img)
    plt.axis("off")

# Show the images
plt.tight_layout()
plt.show()

2.1.4 Image transforms

'''Preparation: image transforms'''

total_datadir = data_dir
# More on transforms.Compose: https://blog.csdn.net/qq_38251616/article/details/124878863
train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),  # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(  # standardize each channel as (x - mean) / std, which tends to help the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # these mean/std values are the commonly used ImageNet channel statistics
])
total_data = datasets.ImageFolder(total_datadir, transform=train_transforms)
print(total_data)
print(total_data.class_to_idx)

Output

Dataset ImageFolder
    Number of datapoints: 565
    Root location: D:\DeepLearning\data\bird\bird_photos
    StandardTransform
Transform: Compose(
               Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )

{'Bananaquit': 0, 'Black Skimmer': 1, 'Black Throated Bushtiti': 2, 'Cockatoo': 3}

2.1.4 Splitting the dataset

'''Preparation: split the dataset'''

train_size = int(0.8 * len(total_data))  # training-set size: 80% of the data, truncated to an integer
test_size = len(total_data) - train_size  # test-set size: whatever remains after the training set
# torch.utils.data.random_split() randomly partitions total_data into subsets of the given sizes
# ([train_size, test_size]); the two subsets are assigned to train_dataset and test_dataset.
train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
print("train_dataset={}\ntest_dataset={}".format(train_dataset, test_dataset))
print("train_size={}\ntest_size={}".format(train_size, test_size))

Output

train_dataset=<torch.utils.data.dataset.Subset object at 0x000001309DFA26D0>

test_dataset=<torch.utils.data.dataset.Subset object at 0x000001309DFA2760>

train_size=452

test_size=113
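The sizes printed above follow directly from the 80/20 rule in the code. As a minimal sketch in plain Python (no PyTorch needed), the arithmetic can be reproduced as follows. The helper names `split_sizes` and `num_batches` are hypothetical and introduced only for this illustration; the batch size of 32 is the value this post uses when it builds the DataLoaders, and the batch count assumes the last, smaller batch is kept.

```python
import math


def split_sizes(total, train_ratio=0.8):
    """Return (train_size, test_size) the same way the post computes them."""
    train_size = int(train_ratio * total)  # int() truncates toward zero
    test_size = total - train_size         # the remainder goes to the test set
    return train_size, test_size


def num_batches(n_samples, batch_size):
    """Batches per epoch when the final, smaller batch is kept."""
    return math.ceil(n_samples / batch_size)


train_size, test_size = split_sizes(565)
print(train_size, test_size)                                     # 452 113
print(num_batches(train_size, 32), num_batches(test_size, 32))   # 15 4
```

Because the split is random, the images that land in each subset differ from run to run unless a seed is fixed; only the sizes are deterministic.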

2.1.4 Loading the data

'''Preparation: load the data'''

batch_size = 32
train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=1)
test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=True,
                                      num_workers=1)
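By the time a batch leaves these loaders, every image has gone through the `Resize`, `ToTensor` and `Normalize` steps defined earlier. The following is a minimal plain-Python sketch of the `Normalize` arithmetic for a single RGB pixel, assuming the pixel is already scaled to [0, 1] as `ToTensor` would do. The function names `normalize_pixel` and `denormalize_pixel` are hypothetical and are not torchvision APIs.

```python
MEAN = [0.485, 0.456, 0.406]  # per-channel means used in the post
STD = [0.229, 0.224, 0.225]   # per-channel standard deviations used in the post


def normalize_pixel(rgb, mean=MEAN, std=STD):
    """Apply (value - mean) / std to each channel of one RGB pixel."""
    return [(v - m) / s for v, m, s in zip(rgb, mean, std)]


def denormalize_pixel(rgb, mean=MEAN, std=STD):
    """Invert the normalization, e.g. before displaying an image."""
    return [v * s + m for v, m, s in zip(rgb, mean, std)]


mid_grey = [0.5, 0.5, 0.5]  # a mid-grey pixel after scaling to [0, 1]
normalized = normalize_pixel(mid_grey)
print([round(v, 3) for v in normalized])   # [0.066, 0.196, 0.418]
restored = denormalize_pixel(normalized)
print([round(v, 3) for v in restored])     # [0.5, 0.5, 0.5]
```

The inverse step is worth keeping in mind when plotting tensors taken from a loader: without it the colors look washed out or shifted.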

2.1.4 Inspecting the data

'''Preparation: inspect the data'''

for X, y in test_dl:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Output

Shape of X [N, C, H, W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64
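These batches feed the DenseNet-121 built in the next section. Before reading that code, it can help to know which channel counts to expect from it. The sketch below is plain Python and only mirrors the bookkeeping of a DenseNet-BC style feature extractor; `densenet_channel_plan` is a hypothetical helper name, and its defaults are the values the post uses for `densenet121` (64 initial features, growth rate 32, block configuration (6, 12, 24, 16)) together with the class default compression of 0.5.

```python
def densenet_channel_plan(block_config=(6, 12, 24, 16), growth_rate=32,
                          num_init_features=64, compression=0.5):
    """Trace channel counts through the dense blocks and transitions.

    Returns a list of (stage_name, in_channels, out_channels) tuples.
    """
    plan = []
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        start = num_features
        # every dense layer concatenates growth_rate new feature maps
        num_features += num_layers * growth_rate
        plan.append((f"denseblock{i + 1}", start, num_features))
        if i != len(block_config) - 1:
            # the transition's 1x1 conv shrinks channels by the compression factor
            reduced = int(num_features * compression)
            plan.append((f"transition{i + 1}", num_features, reduced))
            num_features = reduced
    return plan


for name, c_in, c_out in densenet_channel_plan():
    print(f"{name}: {c_in} -> {c_out}")
```

The last stage ends at 1024 channels, which is the number of input features the final classification layer has to accept.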

2.2 Building the densenet121 model

"""Build the DenseNet network"""

# DenseNet is implemented here with the PyTorch framework.
# First comes the internal structure of a DenseBlock: BN + ReLU + 1×1 Conv + BN + ReLU + 3×3 Conv,
# with a dropout layer added at the end that is active only during training.
class _DenseLayer(nn.Sequential):
    """Basic unit of DenseBlock (using bottleneck layer) """

    def __init__(self, num_input_features, growth_rate, bn_size, drop_rate):
        super(_DenseLayer, self).__init__()
        self.add_module('norm1', nn.BatchNorm2d(num_input_features))
        self.add_module('relu1', nn.ReLU(inplace=True))
        self.add_module('conv1', nn.Conv2d(num_input_features, bn_size * growth_rate,
                                           kernel_size=1, stride=1, bias=False))
        self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
        self.add_module('relu2', nn.ReLU(inplace=True))
        self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                           kernel_size=3, stride=1, padding=1, bias=False))
        self.drop_rate = drop_rate

    def forward(self, x):
        new_features = super(_DenseLayer, self).forward(x)
        if self.drop_rate > 0:
            new_features = F.dropout(new_features, p=self.drop_rate, training=self.training)
        # dense connectivity: concatenate the input with the newly computed features
        return torch.cat([x, new_features], 1)


# The DenseBlock module: layers are densely connected, so the number of input features grows linearly.
class _DenseBlock(nn.Sequential):
    """DenseBlock """

    def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate):
        super(_DenseBlock, self).__init__()
        for i in range(num_layers):
            layer = _DenseLayer(num_input_features + i * growth_rate, growth_rate, bn_size, drop_rate)
            self.add_module('denselayer%d' % (i + 1), layer)


# The Transition layer: essentially one convolution layer followed by one pooling layer.
class _Transition(nn.Sequential):
    def __init__(self, num_input_features, num_output_features):
        super(_Transition, self).__init__()
        self.add_module('norm', nn.BatchNorm2d(num_input_features))
        self.add_module('relu', nn.ReLU(inplace=True))
        self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                          kernel_size=1, stride=1, bias=False))
        self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))


# Finally, the DenseNet network itself.
class DenseNet(nn.Module):
    r"""Densenet-BC model class, based on
    `"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`

    Args:
        growth_rate (int) - how many filters to add each layer (`k` in paper)
        block_config (list of 3 or 4 ints) - how many layers in each pooling block
        num_init_features (int) - the number of filters to learn in the first convolution layer
        bn_size (int) - multiplicative factor for number of bottle neck layers
            (i.e. bn_size * k features in the bottleneck layer)
        compression (float) - factor by which each transition layer reduces the channel count
        drop_rate (float) - dropout rate after each dense layer
        num_classes (int) - number of classification classes
    """

    def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16),
                 num_init_features=24, bn_size=4, compression=0.5, drop_rate=0,
                 num_classes=1000):
        super(DenseNet, self).__init__()
        # First Conv2d
        self.features = nn.Sequential(OrderedDict([
            ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2, padding=3, bias=False)),
            ('norm0', nn.BatchNorm2d(num_init_features)),
            ('relu0', nn.ReLU(inplace=True)),
            ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
        ]))

        # Each denseblock
        num_features = num_init_features
        for i, num_layers in enumerate(block_config):
            block = _DenseBlock(num_layers, num_features, bn_size, growth_rate, drop_rate)
            self.features.add_module('denseblock%d' % (i + 1), block)
            num_features += num_layers * growth_rate
            if i != len(block_config) - 1:
                transition = _Transition(num_input_features=num_features,
                                         num_output_features=int(num_features * compression))
                self.features.add_module('transition%d' % (i + 1), transition)
                num_features = int(num_features * compression)

        # Final bn+relu
        self.features.add_module('norm5', nn.BatchNorm2d(num_features))
        self.features.add_module('relu5', nn.ReLU(inplace=True))

        # classification layer
        self.classifier = nn.Linear(num_features, num_classes)

        # params initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.bias, 0)
                nn.init.constant_(m.weight, 1)
            elif isinstance(m, nn.Linear):
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        features = self.features(x)
        out = F.avg_pool2d(features, 7, stride=1).view(features.size(0), -1)
        out = self.classifier(out)
        return out


model_urls = {
    'densenet121': 'https://download.pytorch.org/models/densenet121-a639ec97.pth',
    'densenet169': 'https://download.pytorch.org/models/densenet169-b2777c0a.pth',
    'densenet201': 'https://download.pytorch.org/models/densenet201-c1103571.pth',
    'densenet161': 'https://download.pytorch.org/models/densenet161-8d451a50.pth'
}


def densenet121(pretrained=False, **kwargs):
    """DenseNet121"""
    model = DenseNet(num_init_features=64, growth_rate=32, block_config=(6, 12, 24, 16), **kwargs)
    if pretrained:
        # '.'s are no longer allowed in module names, but previous _DenseLayer
        # has keys 'norm.1', 'relu.1', 'conv.1', 'norm.2', 'relu.2', 'conv.2'.
        # They are also in the checkpoints in model_urls. This pattern is used
        # to find such keys.
        pattern = re.compile(
            r'^(.*denselayer\d+\.(?:norm|relu|conv))\.((?:[12])\.(?:weight|bias|running_mean|running_var))$')
        state_dict = model_zoo.load_url(model_urls['densenet121'])
        for key in list(state_dict.keys()):
            res = pattern.match(key)
            if res:
                new_key = res.group(1) + res.group(2)
                state_dict[new_key] = state_dict[key]
                del state_dict[key]
        model.load_state_dict(state_dict)
    return model


"""Build the densenet121 model"""
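The key-renaming step inside `densenet121` can be tried on its own, without downloading any weights. The sketch below reuses the same regular expression. `rename_key` is a hypothetical helper, and the sample keys are illustrative assumptions written in the older naming style that the code comment describes; they are not read from a real checkpoint.

```python
import re

# Same pattern as in densenet121(): it matches old-style keys such as
# "...denselayer1.norm.1.weight" and captures the parts on either side
# of the extra dot.
pattern = re.compile(
    r'^(.*denselayer\d+\.(?:norm|relu|conv))\.((?:[12])\.(?:weight|bias|running_mean|running_var))$')


def rename_key(key):
    """Return the new-style key, or the key unchanged if it does not match."""
    res = pattern.match(key)
    return res.group(1) + res.group(2) if res else key


sample_keys = [
    "features.denseblock1.denselayer1.norm.1.weight",
    "features.denseblock1.denselayer1.conv.2.weight",
    "features.conv0.weight",  # not a dense-layer key, so it is left as is
]
for key in sample_keys:
    print(key, "->", rename_key(key))
```

Only keys inside a dense layer are rewritten (`norm.1` becomes `norm1`, `conv.2` becomes `conv2`), which is what lets the old checkpoint names line up with the module names registered by `add_module`.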

# model = densenet121().to(device)

model = densenet121(True).to(device)  # use the pretrained model

print(model)

print(summary.summary(model, (3, 224, 224)))  # show the parameter count and related statistics

Output

DenseNet((features): Sequential((conv0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)(norm0): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu0): ReLU(inplace=True)(pool0): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(denseblock1): _DenseBlock((denselayer1): _DenseLayer((norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(64, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): _DenseLayer((norm1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(96, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): _DenseLayer((norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): _DenseLayer((norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 
1), bias=False))(denselayer5): _DenseLayer((norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): _DenseLayer((norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(transition1): _Transition((norm): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock2): _DenseBlock((denselayer1): _DenseLayer((norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): _DenseLayer((norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), 
bias=False))(denselayer3): _DenseLayer((norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): _DenseLayer((norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): _DenseLayer((norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): _DenseLayer((norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): _DenseLayer((norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): _DenseLayer((norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(352, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): _DenseLayer((norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): _DenseLayer((norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): _DenseLayer((norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): _DenseLayer((norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(480, 128, 
kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(transition2): _Transition((norm): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock3): _DenseBlock((denselayer1): _DenseLayer((norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): _DenseLayer((norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): _DenseLayer((norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): _DenseLayer((norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(352, 128, kernel_size=(1, 1), 
stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): _DenseLayer((norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): _DenseLayer((norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): _DenseLayer((norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): _DenseLayer((norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(480, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): _DenseLayer((norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): _DenseLayer((norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): _DenseLayer((norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(576, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): _DenseLayer((norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer13): _DenseLayer((norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), 
bias=False))(denselayer14): _DenseLayer((norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer15): _DenseLayer((norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer16): _DenseLayer((norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer17): _DenseLayer((norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer18): _DenseLayer((norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer19): _DenseLayer((norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer20): _DenseLayer((norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer21): _DenseLayer((norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer22): _DenseLayer((norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer23): _DenseLayer((norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(960, 128, 
kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer24): _DenseLayer((norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(transition3): _Transition((norm): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock4): _DenseBlock((denselayer1): _DenseLayer((norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): _DenseLayer((norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): _DenseLayer((norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(576, 128, kernel_size=(1, 1), 
stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): _DenseLayer((norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): _DenseLayer((norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): _DenseLayer((norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): _DenseLayer((norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): _DenseLayer((norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, 
track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): _DenseLayer((norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): _DenseLayer((norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): _DenseLayer((norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): _DenseLayer((norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), 
bias=False))(denselayer13): _DenseLayer((norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer14): _DenseLayer((norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer15): _DenseLayer((norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(960, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer16): _DenseLayer((norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(norm5): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu5): ReLU(inplace=True))(classifier): Linear(in_features=1024, out_features=1000, bias=True)

)

----------------------------------------------------------------Layer (type) Output Shape Param #

================================================================Conv2d-1 [-1, 64, 112, 112] 9,408BatchNorm2d-2 [-1, 64, 112, 112] 128ReLU-3 [-1, 64, 112, 112] 0MaxPool2d-4 [-1, 64, 56, 56] 0BatchNorm2d-5 [-1, 64, 56, 56] 128ReLU-6 [-1, 64, 56, 56] 0Conv2d-7 [-1, 128, 56, 56] 8,192BatchNorm2d-8 [-1, 128, 56, 56] 256ReLU-9 [-1, 128, 56, 56] 0Conv2d-10 [-1, 32, 56, 56] 36,864BatchNorm2d-11 [-1, 96, 56, 56] 192ReLU-12 [-1, 96, 56, 56] 0Conv2d-13 [-1, 128, 56, 56] 12,288BatchNorm2d-14 [-1, 128, 56, 56] 256ReLU-15 [-1, 128, 56, 56] 0Conv2d-16 [-1, 32, 56, 56] 36,864BatchNorm2d-17 [-1, 128, 56, 56] 256ReLU-18 [-1, 128, 56, 56] 0Conv2d-19 [-1, 128, 56, 56] 16,384BatchNorm2d-20 [-1, 128, 56, 56] 256ReLU-21 [-1, 128, 56, 56] 0Conv2d-22 [-1, 32, 56, 56] 36,864BatchNorm2d-23 [-1, 160, 56, 56] 320ReLU-24 [-1, 160, 56, 56] 0Conv2d-25 [-1, 128, 56, 56] 20,480BatchNorm2d-26 [-1, 128, 56, 56] 256ReLU-27 [-1, 128, 56, 56] 0Conv2d-28 [-1, 32, 56, 56] 36,864BatchNorm2d-29 [-1, 192, 56, 56] 384ReLU-30 [-1, 192, 56, 56] 0Conv2d-31 [-1, 128, 56, 56] 24,576BatchNorm2d-32 [-1, 128, 56, 56] 256ReLU-33 [-1, 128, 56, 56] 0Conv2d-34 [-1, 32, 56, 56] 36,864BatchNorm2d-35 [-1, 224, 56, 56] 448ReLU-36 [-1, 224, 56, 56] 0Conv2d-37 [-1, 128, 56, 56] 28,672BatchNorm2d-38 [-1, 128, 56, 56] 256ReLU-39 [-1, 128, 56, 56] 0Conv2d-40 [-1, 32, 56, 56] 36,864BatchNorm2d-41 [-1, 256, 56, 56] 512ReLU-42 [-1, 256, 56, 56] 0Conv2d-43 [-1, 128, 56, 56] 32,768AvgPool2d-44 [-1, 128, 28, 28] 0BatchNorm2d-45 [-1, 128, 28, 28] 256ReLU-46 [-1, 128, 28, 28] 0Conv2d-47 [-1, 128, 28, 28] 16,384BatchNorm2d-48 [-1, 128, 28, 28] 256ReLU-49 [-1, 128, 28, 28] 0Conv2d-50 [-1, 32, 28, 28] 36,864BatchNorm2d-51 [-1, 160, 28, 28] 320ReLU-52 [-1, 160, 28, 28] 0Conv2d-53 [-1, 128, 28, 28] 20,480BatchNorm2d-54 [-1, 128, 28, 28] 256ReLU-55 [-1, 128, 28, 28] 0Conv2d-56 [-1, 32, 28, 28] 36,864BatchNorm2d-57 [-1, 192, 28, 28] 384ReLU-58 [-1, 192, 28, 28] 0Conv2d-59 [-1, 128, 28, 28] 24,576BatchNorm2d-60 [-1, 128, 28, 28] 256ReLU-61 [-1, 
128, 28, 28] 0Conv2d-62 [-1, 32, 28, 28] 36,864BatchNorm2d-63 [-1, 224, 28, 28] 448ReLU-64 [-1, 224, 28, 28] 0Conv2d-65 [-1, 128, 28, 28] 28,672BatchNorm2d-66 [-1, 128, 28, 28] 256ReLU-67 [-1, 128, 28, 28] 0Conv2d-68 [-1, 32, 28, 28] 36,864BatchNorm2d-69 [-1, 256, 28, 28] 512ReLU-70 [-1, 256, 28, 28] 0Conv2d-71 [-1, 128, 28, 28] 32,768BatchNorm2d-72 [-1, 128, 28, 28] 256ReLU-73 [-1, 128, 28, 28] 0Conv2d-74 [-1, 32, 28, 28] 36,864BatchNorm2d-75 [-1, 288, 28, 28] 576ReLU-76 [-1, 288, 28, 28] 0Conv2d-77 [-1, 128, 28, 28] 36,864BatchNorm2d-78 [-1, 128, 28, 28] 256ReLU-79 [-1, 128, 28, 28] 0Conv2d-80 [-1, 32, 28, 28] 36,864BatchNorm2d-81 [-1, 320, 28, 28] 640ReLU-82 [-1, 320, 28, 28] 0Conv2d-83 [-1, 128, 28, 28] 40,960BatchNorm2d-84 [-1, 128, 28, 28] 256ReLU-85 [-1, 128, 28, 28] 0Conv2d-86 [-1, 32, 28, 28] 36,864BatchNorm2d-87 [-1, 352, 28, 28] 704ReLU-88 [-1, 352, 28, 28] 0Conv2d-89 [-1, 128, 28, 28] 45,056BatchNorm2d-90 [-1, 128, 28, 28] 256ReLU-91 [-1, 128, 28, 28] 0Conv2d-92 [-1, 32, 28, 28] 36,864BatchNorm2d-93 [-1, 384, 28, 28] 768ReLU-94 [-1, 384, 28, 28] 0Conv2d-95 [-1, 128, 28, 28] 49,152BatchNorm2d-96 [-1, 128, 28, 28] 256ReLU-97 [-1, 128, 28, 28] 0Conv2d-98 [-1, 32, 28, 28] 36,864BatchNorm2d-99 [-1, 416, 28, 28] 832ReLU-100 [-1, 416, 28, 28] 0Conv2d-101 [-1, 128, 28, 28] 53,248BatchNorm2d-102 [-1, 128, 28, 28] 256ReLU-103 [-1, 128, 28, 28] 0Conv2d-104 [-1, 32, 28, 28] 36,864BatchNorm2d-105 [-1, 448, 28, 28] 896ReLU-106 [-1, 448, 28, 28] 0Conv2d-107 [-1, 128, 28, 28] 57,344BatchNorm2d-108 [-1, 128, 28, 28] 256ReLU-109 [-1, 128, 28, 28] 0Conv2d-110 [-1, 32, 28, 28] 36,864BatchNorm2d-111 [-1, 480, 28, 28] 960ReLU-112 [-1, 480, 28, 28] 0Conv2d-113 [-1, 128, 28, 28] 61,440BatchNorm2d-114 [-1, 128, 28, 28] 256ReLU-115 [-1, 128, 28, 28] 0Conv2d-116 [-1, 32, 28, 28] 36,864BatchNorm2d-117 [-1, 512, 28, 28] 1,024ReLU-118 [-1, 512, 28, 28] 0Conv2d-119 [-1, 256, 28, 28] 131,072AvgPool2d-120 [-1, 256, 14, 14] 0BatchNorm2d-121 [-1, 256, 14, 14] 512ReLU-122 [-1, 256, 14, 
14] 0Conv2d-123 [-1, 128, 14, 14] 32,768BatchNorm2d-124 [-1, 128, 14, 14] 256ReLU-125 [-1, 128, 14, 14] 0Conv2d-126 [-1, 32, 14, 14] 36,864BatchNorm2d-127 [-1, 288, 14, 14] 576ReLU-128 [-1, 288, 14, 14] 0Conv2d-129 [-1, 128, 14, 14] 36,864BatchNorm2d-130 [-1, 128, 14, 14] 256ReLU-131 [-1, 128, 14, 14] 0Conv2d-132 [-1, 32, 14, 14] 36,864BatchNorm2d-133 [-1, 320, 14, 14] 640ReLU-134 [-1, 320, 14, 14] 0Conv2d-135 [-1, 128, 14, 14] 40,960BatchNorm2d-136 [-1, 128, 14, 14] 256ReLU-137 [-1, 128, 14, 14] 0Conv2d-138 [-1, 32, 14, 14] 36,864BatchNorm2d-139 [-1, 352, 14, 14] 704ReLU-140 [-1, 352, 14, 14] 0Conv2d-141 [-1, 128, 14, 14] 45,056BatchNorm2d-142 [-1, 128, 14, 14] 256ReLU-143 [-1, 128, 14, 14] 0Conv2d-144 [-1, 32, 14, 14] 36,864BatchNorm2d-145 [-1, 384, 14, 14] 768ReLU-146 [-1, 384, 14, 14] 0Conv2d-147 [-1, 128, 14, 14] 49,152BatchNorm2d-148 [-1, 128, 14, 14] 256ReLU-149 [-1, 128, 14, 14] 0Conv2d-150 [-1, 32, 14, 14] 36,864BatchNorm2d-151 [-1, 416, 14, 14] 832ReLU-152 [-1, 416, 14, 14] 0Conv2d-153 [-1, 128, 14, 14] 53,248BatchNorm2d-154 [-1, 128, 14, 14] 256ReLU-155 [-1, 128, 14, 14] 0Conv2d-156 [-1, 32, 14, 14] 36,864BatchNorm2d-157 [-1, 448, 14, 14] 896ReLU-158 [-1, 448, 14, 14] 0Conv2d-159 [-1, 128, 14, 14] 57,344BatchNorm2d-160 [-1, 128, 14, 14] 256ReLU-161 [-1, 128, 14, 14] 0Conv2d-162 [-1, 32, 14, 14] 36,864BatchNorm2d-163 [-1, 480, 14, 14] 960ReLU-164 [-1, 480, 14, 14] 0Conv2d-165 [-1, 128, 14, 14] 61,440BatchNorm2d-166 [-1, 128, 14, 14] 256ReLU-167 [-1, 128, 14, 14] 0Conv2d-168 [-1, 32, 14, 14] 36,864BatchNorm2d-169 [-1, 512, 14, 14] 1,024ReLU-170 [-1, 512, 14, 14] 0Conv2d-171 [-1, 128, 14, 14] 65,536BatchNorm2d-172 [-1, 128, 14, 14] 256ReLU-173 [-1, 128, 14, 14] 0Conv2d-174 [-1, 32, 14, 14] 36,864BatchNorm2d-175 [-1, 544, 14, 14] 1,088ReLU-176 [-1, 544, 14, 14] 0Conv2d-177 [-1, 128, 14, 14] 69,632BatchNorm2d-178 [-1, 128, 14, 14] 256ReLU-179 [-1, 128, 14, 14] 0Conv2d-180 [-1, 32, 14, 14] 36,864BatchNorm2d-181 [-1, 576, 14, 14] 1,152ReLU-182 [-1, 576, 14, 14] 
0Conv2d-183 [-1, 128, 14, 14] 73,728BatchNorm2d-184 [-1, 128, 14, 14] 256ReLU-185 [-1, 128, 14, 14] 0Conv2d-186 [-1, 32, 14, 14] 36,864BatchNorm2d-187 [-1, 608, 14, 14] 1,216ReLU-188 [-1, 608, 14, 14] 0Conv2d-189 [-1, 128, 14, 14] 77,824BatchNorm2d-190 [-1, 128, 14, 14] 256ReLU-191 [-1, 128, 14, 14] 0Conv2d-192 [-1, 32, 14, 14] 36,864BatchNorm2d-193 [-1, 640, 14, 14] 1,280ReLU-194 [-1, 640, 14, 14] 0Conv2d-195 [-1, 128, 14, 14] 81,920BatchNorm2d-196 [-1, 128, 14, 14] 256ReLU-197 [-1, 128, 14, 14] 0Conv2d-198 [-1, 32, 14, 14] 36,864BatchNorm2d-199 [-1, 672, 14, 14] 1,344ReLU-200 [-1, 672, 14, 14] 0Conv2d-201 [-1, 128, 14, 14] 86,016BatchNorm2d-202 [-1, 128, 14, 14] 256ReLU-203 [-1, 128, 14, 14] 0Conv2d-204 [-1, 32, 14, 14] 36,864BatchNorm2d-205 [-1, 704, 14, 14] 1,408ReLU-206 [-1, 704, 14, 14] 0Conv2d-207 [-1, 128, 14, 14] 90,112BatchNorm2d-208 [-1, 128, 14, 14] 256ReLU-209 [-1, 128, 14, 14] 0Conv2d-210 [-1, 32, 14, 14] 36,864BatchNorm2d-211 [-1, 736, 14, 14] 1,472ReLU-212 [-1, 736, 14, 14] 0Conv2d-213 [-1, 128, 14, 14] 94,208BatchNorm2d-214 [-1, 128, 14, 14] 256ReLU-215 [-1, 128, 14, 14] 0Conv2d-216 [-1, 32, 14, 14] 36,864BatchNorm2d-217 [-1, 768, 14, 14] 1,536ReLU-218 [-1, 768, 14, 14] 0Conv2d-219 [-1, 128, 14, 14] 98,304BatchNorm2d-220 [-1, 128, 14, 14] 256ReLU-221 [-1, 128, 14, 14] 0Conv2d-222 [-1, 32, 14, 14] 36,864BatchNorm2d-223 [-1, 800, 14, 14] 1,600ReLU-224 [-1, 800, 14, 14] 0Conv2d-225 [-1, 128, 14, 14] 102,400BatchNorm2d-226 [-1, 128, 14, 14] 256ReLU-227 [-1, 128, 14, 14] 0Conv2d-228 [-1, 32, 14, 14] 36,864BatchNorm2d-229 [-1, 832, 14, 14] 1,664ReLU-230 [-1, 832, 14, 14] 0Conv2d-231 [-1, 128, 14, 14] 106,496BatchNorm2d-232 [-1, 128, 14, 14] 256ReLU-233 [-1, 128, 14, 14] 0Conv2d-234 [-1, 32, 14, 14] 36,864BatchNorm2d-235 [-1, 864, 14, 14] 1,728ReLU-236 [-1, 864, 14, 14] 0Conv2d-237 [-1, 128, 14, 14] 110,592BatchNorm2d-238 [-1, 128, 14, 14] 256ReLU-239 [-1, 128, 14, 14] 0Conv2d-240 [-1, 32, 14, 14] 36,864BatchNorm2d-241 [-1, 896, 14, 14] 1,792ReLU-242 [-1, 
896, 14, 14] 0Conv2d-243 [-1, 128, 14, 14] 114,688BatchNorm2d-244 [-1, 128, 14, 14] 256ReLU-245 [-1, 128, 14, 14] 0Conv2d-246 [-1, 32, 14, 14] 36,864BatchNorm2d-247 [-1, 928, 14, 14] 1,856ReLU-248 [-1, 928, 14, 14] 0Conv2d-249 [-1, 128, 14, 14] 118,784BatchNorm2d-250 [-1, 128, 14, 14] 256ReLU-251 [-1, 128, 14, 14] 0Conv2d-252 [-1, 32, 14, 14] 36,864BatchNorm2d-253 [-1, 960, 14, 14] 1,920ReLU-254 [-1, 960, 14, 14] 0Conv2d-255 [-1, 128, 14, 14] 122,880BatchNorm2d-256 [-1, 128, 14, 14] 256ReLU-257 [-1, 128, 14, 14] 0Conv2d-258 [-1, 32, 14, 14] 36,864BatchNorm2d-259 [-1, 992, 14, 14] 1,984ReLU-260 [-1, 992, 14, 14] 0Conv2d-261 [-1, 128, 14, 14] 126,976BatchNorm2d-262 [-1, 128, 14, 14] 256ReLU-263 [-1, 128, 14, 14] 0Conv2d-264 [-1, 32, 14, 14] 36,864BatchNorm2d-265 [-1, 1024, 14, 14] 2,048ReLU-266 [-1, 1024, 14, 14] 0Conv2d-267 [-1, 512, 14, 14] 524,288AvgPool2d-268 [-1, 512, 7, 7] 0BatchNorm2d-269 [-1, 512, 7, 7] 1,024ReLU-270 [-1, 512, 7, 7] 0Conv2d-271 [-1, 128, 7, 7] 65,536BatchNorm2d-272 [-1, 128, 7, 7] 256ReLU-273 [-1, 128, 7, 7] 0Conv2d-274 [-1, 32, 7, 7] 36,864BatchNorm2d-275 [-1, 544, 7, 7] 1,088ReLU-276 [-1, 544, 7, 7] 0Conv2d-277 [-1, 128, 7, 7] 69,632BatchNorm2d-278 [-1, 128, 7, 7] 256ReLU-279 [-1, 128, 7, 7] 0Conv2d-280 [-1, 32, 7, 7] 36,864BatchNorm2d-281 [-1, 576, 7, 7] 1,152ReLU-282 [-1, 576, 7, 7] 0Conv2d-283 [-1, 128, 7, 7] 73,728BatchNorm2d-284 [-1, 128, 7, 7] 256ReLU-285 [-1, 128, 7, 7] 0Conv2d-286 [-1, 32, 7, 7] 36,864BatchNorm2d-287 [-1, 608, 7, 7] 1,216ReLU-288 [-1, 608, 7, 7] 0Conv2d-289 [-1, 128, 7, 7] 77,824BatchNorm2d-290 [-1, 128, 7, 7] 256ReLU-291 [-1, 128, 7, 7] 0Conv2d-292 [-1, 32, 7, 7] 36,864BatchNorm2d-293 [-1, 640, 7, 7] 1,280ReLU-294 [-1, 640, 7, 7] 0Conv2d-295 [-1, 128, 7, 7] 81,920BatchNorm2d-296 [-1, 128, 7, 7] 256ReLU-297 [-1, 128, 7, 7] 0Conv2d-298 [-1, 32, 7, 7] 36,864BatchNorm2d-299 [-1, 672, 7, 7] 1,344ReLU-300 [-1, 672, 7, 7] 0Conv2d-301 [-1, 128, 7, 7] 86,016BatchNorm2d-302 [-1, 128, 7, 7] 256ReLU-303 [-1, 128, 7, 7] 
0Conv2d-304 [-1, 32, 7, 7] 36,864BatchNorm2d-305 [-1, 704, 7, 7] 1,408ReLU-306 [-1, 704, 7, 7] 0Conv2d-307 [-1, 128, 7, 7] 90,112BatchNorm2d-308 [-1, 128, 7, 7] 256ReLU-309 [-1, 128, 7, 7] 0Conv2d-310 [-1, 32, 7, 7] 36,864BatchNorm2d-311 [-1, 736, 7, 7] 1,472ReLU-312 [-1, 736, 7, 7] 0Conv2d-313 [-1, 128, 7, 7] 94,208BatchNorm2d-314 [-1, 128, 7, 7] 256ReLU-315 [-1, 128, 7, 7] 0Conv2d-316 [-1, 32, 7, 7] 36,864BatchNorm2d-317 [-1, 768, 7, 7] 1,536ReLU-318 [-1, 768, 7, 7] 0Conv2d-319 [-1, 128, 7, 7] 98,304BatchNorm2d-320 [-1, 128, 7, 7] 256ReLU-321 [-1, 128, 7, 7] 0Conv2d-322 [-1, 32, 7, 7] 36,864BatchNorm2d-323 [-1, 800, 7, 7] 1,600ReLU-324 [-1, 800, 7, 7] 0Conv2d-325 [-1, 128, 7, 7] 102,400BatchNorm2d-326 [-1, 128, 7, 7] 256ReLU-327 [-1, 128, 7, 7] 0Conv2d-328 [-1, 32, 7, 7] 36,864BatchNorm2d-329 [-1, 832, 7, 7] 1,664ReLU-330 [-1, 832, 7, 7] 0Conv2d-331 [-1, 128, 7, 7] 106,496BatchNorm2d-332 [-1, 128, 7, 7] 256ReLU-333 [-1, 128, 7, 7] 0Conv2d-334 [-1, 32, 7, 7] 36,864BatchNorm2d-335 [-1, 864, 7, 7] 1,728ReLU-336 [-1, 864, 7, 7] 0Conv2d-337 [-1, 128, 7, 7] 110,592BatchNorm2d-338 [-1, 128, 7, 7] 256ReLU-339 [-1, 128, 7, 7] 0Conv2d-340 [-1, 32, 7, 7] 36,864BatchNorm2d-341 [-1, 896, 7, 7] 1,792ReLU-342 [-1, 896, 7, 7] 0Conv2d-343 [-1, 128, 7, 7] 114,688BatchNorm2d-344 [-1, 128, 7, 7] 256ReLU-345 [-1, 128, 7, 7] 0Conv2d-346 [-1, 32, 7, 7] 36,864BatchNorm2d-347 [-1, 928, 7, 7] 1,856ReLU-348 [-1, 928, 7, 7] 0Conv2d-349 [-1, 128, 7, 7] 118,784BatchNorm2d-350 [-1, 128, 7, 7] 256ReLU-351 [-1, 128, 7, 7] 0Conv2d-352 [-1, 32, 7, 7] 36,864BatchNorm2d-353 [-1, 960, 7, 7] 1,920ReLU-354 [-1, 960, 7, 7] 0Conv2d-355 [-1, 128, 7, 7] 122,880BatchNorm2d-356 [-1, 128, 7, 7] 256ReLU-357 [-1, 128, 7, 7] 0Conv2d-358 [-1, 32, 7, 7] 36,864BatchNorm2d-359 [-1, 992, 7, 7] 1,984ReLU-360 [-1, 992, 7, 7] 0Conv2d-361 [-1, 128, 7, 7] 126,976BatchNorm2d-362 [-1, 128, 7, 7] 256ReLU-363 [-1, 128, 7, 7] 0Conv2d-364 [-1, 32, 7, 7] 36,864BatchNorm2d-365 [-1, 1024, 7, 7] 2,048ReLU-366 [-1, 1024, 7, 7] 
0Linear-367 [-1, 1000] 1,025,000

================================================================

Total params: 7,978,856

Trainable params: 7,978,856

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 294.58

Params size (MB): 30.44

Estimated Total Size (MB): 325.59

----------------------------------------------------------------

2.3 Training the model

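2.3.1 Setting the hyperparameters

"""Training the model -- setting the hyperparameters"""

loss_fn = nn.CrossEntropyLoss() # cross-entropy loss, the usual choice for image classification; it measures how far the network output is from the true labels

learn_rate = 1e-4 # initial learning rate

optimizer1 = torch.optim.SGD(model.parameters(), lr=learn_rate) # stochastic gradient descent; defined as an alternative, not used below

optimizer2 = torch.optim.Adam(model.parameters(), lr=learn_rate) # Adam; the optimizer actually used

lr_opt = optimizer2 # optimizer whose learning rate the scheduler adjusts

model_opt = optimizer2 # optimizer that updates the model parameters

# Used when calling the official dynamic learning-rate interface: the multiplier drops by a factor of 0.92 every 4 epochs

lambda1 = lambda epoch: 0.92 ** (epoch // 4)

# optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

scheduler = torch.optim.lr_scheduler.LambdaLR(lr_opt, lr_lambda=lambda1) # select the adjustment method

2.3.2 Writing the training function

"""Training the model -- writing the training function"""

# Training loop

def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # number of samples in the training set
    num_batches = len(dataloader)   # number of batches per epoch
    train_loss, train_acc = 0, 0    # running loss and running count of correct predictions

    for X, y in dataloader:  # X: a batch of images, y: the true labels
        X, y = X.to(device), y.to(device)  # move the batch to the selected device

        # Compute the prediction error
        pred = model(X)          # network output
        loss = loss_fn(pred, y)  # loss between the network output and the true labels

        # Backpropagation
        optimizer.zero_grad()  # clear the gradients of the previous step
        loss.backward()        # backpropagate to compute the current gradients
        optimizer.step()       # update the network parameters from the gradients

        # Accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size          # fraction of correctly classified samples
    train_loss /= num_batches  # mean loss per batch
    return train_acc, train_loss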
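The two divisions at the end of `train` are different on purpose: the number of correct predictions is divided by the number of samples, while the summed loss is divided by the number of batches, because `nn.CrossEntropyLoss` with its default settings already averages over each batch. The following is a minimal pure-Python sketch of that bookkeeping. The helper name `summarize_epoch` and the per-batch numbers are illustrative assumptions, not values from the training run.

```python
def summarize_epoch(batches):
    """batches: list of (num_correct, batch_size, mean_batch_loss) tuples."""
    size = sum(batch_size for _, batch_size, _ in batches)  # plays the role of len(dataloader.dataset)
    num_batches = len(batches)                              # plays the role of len(dataloader)
    correct = sum(num_correct for num_correct, _, _ in batches)
    loss_sum = sum(loss for _, _, loss in batches)
    return correct / size, loss_sum / num_batches


# Hypothetical epoch: two full batches of 32 images and a final partial batch of 4.
acc, loss = summarize_epoch([(30, 32, 0.5), (28, 32, 0.7), (4, 4, 0.3)])
print(f"accuracy={acc:.4f}, mean batch loss={loss:.4f}")
```

Note that averaging the per-batch means gives a small final batch the same weight as a full one, so the reported loss is a close approximation of the per-sample mean rather than the exact value.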

2.3.3 Writing the test function

"""Training the model -- writing the test function"""

# The test function is largely the same as the training function, but because no gradient descent is performed to update the network weights, no optimizer needs to be passed in

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # number of samples in the test set
    num_batches = len(dataloader)   # number of batches
    test_loss, test_acc = 0, 0

    # Nothing is trained here, so disable gradient tracking to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()  # count the correct predictions

    test_acc /= size
    test_loss /= num_batches
    return test_acc, test_loss

2.3.4 Running the training

"""Training the model -- running the training"""

epochs = 10

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_test_acc = 0

for epoch in range(epochs):
    milliseconds_t1 = int(time.time() * 1000)

    # Update the learning rate (when using a custom learning-rate schedule)
    # adjust_learning_rate(lr_opt, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, model_opt)
    scheduler.step()  # update the learning rate (when using the official dynamic learning-rate interface)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Read the current learning rate
    lr = lr_opt.state_dict()['param_groups'][0]['lr']

    milliseconds_t2 = int(time.time() * 1000)
    template = ('Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}, Lr:{:.2E}')
    if best_test_acc < epoch_test_acc:
        best_test_acc = epoch_test_acc
        # Keep a copy of the best model so far
        best_model = copy.deepcopy(model)
        template = ('Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}, Lr:{:.2E},Update the best model')
    print(template.format(epoch + 1, milliseconds_t2 - milliseconds_t1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss, lr))

# Save the parameters of the best model to a file

PATH = './best_model.pth' # name of the saved parameter file

torch.save(best_model.state_dict(), PATH) # save the best copy, not the model from the last epoch

print('Done')

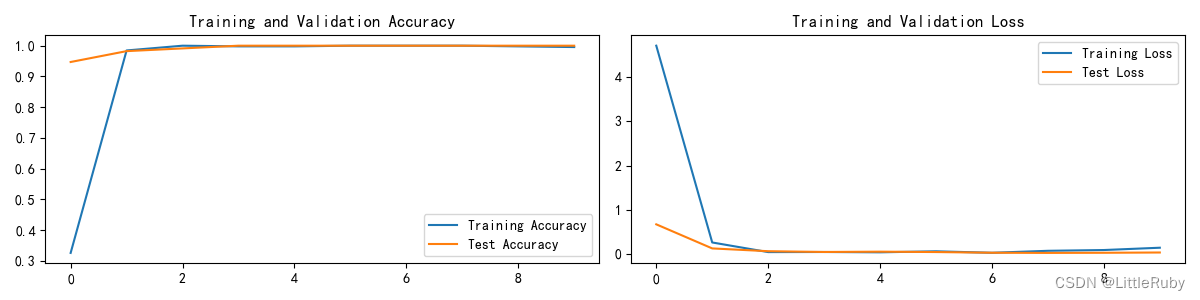

The highest accuracy in the output is Test_acc: 100%

Epoch: 1, duration:5339ms, Train_acc:32.5%, Train_loss:4.717, Test_acc:94.7%,Test_loss:0.666, Lr:1.00E-04,Update the best model

Epoch: 2, duration:4585ms, Train_acc:98.5%, Train_loss:0.255, Test_acc:98.2%,Test_loss:0.120, Lr:1.00E-04,Update the best model

Epoch: 3, duration:4651ms, Train_acc:100.0%, Train_loss:0.037, Test_acc:99.1%,Test_loss:0.057, Lr:1.00E-04,Update the best model

Epoch: 4, duration:4610ms, Train_acc:99.8%, Train_loss:0.039, Test_acc:100.0%,Test_loss:0.040, Lr:1.00E-04,Update the best model

Epoch: 5, duration:4520ms, Train_acc:99.8%, Train_loss:0.032, Test_acc:100.0%,Test_loss:0.047, Lr:1.00E-04

Epoch: 6, duration:4528ms, Train_acc:100.0%, Train_loss:0.055, Test_acc:100.0%,Test_loss:0.038, Lr:1.00E-04

Epoch: 7, duration:4541ms, Train_acc:100.0%, Train_loss:0.021, Test_acc:100.0%,Test_loss:0.022, Lr:1.00E-04

Epoch: 8, duration:4568ms, Train_acc:100.0%, Train_loss:0.066, Test_acc:100.0%,Test_loss:0.018, Lr:1.00E-04

Epoch: 9, duration:4515ms, Train_acc:99.8%, Train_loss:0.084, Test_acc:100.0%,Test_loss:0.022, Lr:1.00E-04

Epoch:10, duration:4602ms, Train_acc:99.6%, Train_loss:0.136, Test_acc:100.0%,Test_loss:0.028, Lr:1.00E-04

A pretrained model is used here, which is why the accuracy is already very high after the first few epochs.
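For reference, the multiplier that `lambda1` from section 2.3.1 hands to `LambdaLR` can be tabulated in plain Python. `LambdaLR` sets the learning rate to the initial value times `lambda1(epoch)`, where `epoch` counts the calls to `scheduler.step()`. This is only a sketch of that arithmetic, assuming one `scheduler.step()` per epoch as in the loop above; it does not touch the optimizer.

```python
learn_rate = 1e-4                             # same initial value as in section 2.3.1
lambda1 = lambda epoch: 0.92 ** (epoch // 4)  # same rule as in section 2.3.1

# Learning rate that the rule yields for scheduler epochs 0..9.
schedule = [learn_rate * lambda1(epoch) for epoch in range(10)]

for epoch, lr in enumerate(schedule):
    print(f"scheduler epoch {epoch}: lr = {lr:.2E}")
```

The integer division means the rate is held constant for four consecutive scheduler epochs and then multiplied by 0.92.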

2.4 Visualizing the results

"""Training the model -- visualizing the results"""

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

2.5 Predicting a specified image

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted
    plt.show()

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add a batch dimension

    model.eval()
    with torch.no_grad():  # inference only, no gradients needed
        output = model(img)

    _, pred = torch.max(output, 1)
    pred_class = classes[pred.item()]
    print(f'Predicted class: {pred_class}')

# Load the saved parameters into the model

model.load_state_dict(torch.load(PATH, map_location=device))

"""Predicting a specified image"""

classes = list(total_data.class_to_idx)

# Predict one image from the training set

predict_one_image(image_path=str(Path(data_dir) / "Cockatoo/001.jpg"), model=model, transform=train_transforms, classes=classes)

Output

Predicted class: Cockatoo
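The step from network output to class name is just an argmax followed by a list lookup. Here is a minimal pure-Python sketch of it. The class list is copied from the directory listing printed in section 3.5 and is assumed to match the folders used here; the helper name `predict_class` and the scores are made up for illustration.

```python
# Class names in sorted folder order (assumed to match total_data.class_to_idx).
classes = ['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']


def predict_class(logits, classes):
    """Return the class whose score is largest, the list analogue of torch.max(output, 1)."""
    best_index = max(range(len(logits)), key=lambda i: logits[i])
    return classes[best_index]


# Hypothetical scores for one image; only their ordering matters.
example_logits = [-1.2, 0.3, 0.1, 4.7]
print(predict_class(example_logits, classes))
```

Because only the ordering of the scores matters, no softmax is needed to obtain the predicted label.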

2.6 Model evaluation

"""Model evaluation"""

best_model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, best_model, loss_fn)

# Check that this matches the best accuracy recorded during training

print(epoch_test_acc, epoch_test_loss)

Output

1.0 0.05105462612118572

3 Implementing DenseNet with TensorFlow

3.1. Importing libraries

from PIL import Image

import numpy as np

from pathlib import Path

import matplotlib.pyplot as plt

# Chinese font support

plt.rcParams['font.sans-serif'] = ['SimHei'] # render Chinese labels correctly

plt.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

import tensorflow as tf

from tensorflow import keras

from keras import layers, models, regularizers

from keras.layers import (Input, Activation, BatchNormalization, Flatten, Dense, Conv2D, MaxPooling2D, ZeroPadding2D, GlobalMaxPooling2D, AveragePooling2D, Dropout, GlobalAveragePooling2D)

from keras.models import Model

from keras.callbacks import ModelCheckpoint

import warnings

warnings.filterwarnings('ignore') # suppress warnings that do not need to be printed

3.2. Setting up the GPU (skip this step if you are using the CPU)

'''Preparation -- setting up the GPU (skip this step if you are using the CPU)'''

# Check whether a GPU is available

print(tf.test.is_built_with_cuda())

gpus = tf.config.list_physical_devices("GPU")

print(gpus)

if gpus:
    gpu0 = gpus[0]  # if there are several GPUs, use only GPU 0
    tf.config.experimental.set_memory_growth(gpu0, True)  # allocate GPU memory on demand
    tf.config.set_visible_devices([gpu0], "GPU")

Output

True

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

3.3. Importing the data

'''Preparation -- importing the data'''

data_dir = r"D:\DeepLearning\data\bird\bird_photos"

data_dir = Path(data_dir)

3.4. Inspecting the data

'''Preparation -- inspecting the data'''

image_count = len(list(data_dir.glob('*/*.jpg')))

print("Total number of images:", image_count)

image_list = list(data_dir.glob('Bananaquit/*.jpg'))

image = Image.open(str(image_list[1]))

# Inspect the attributes of the image instance

print(image.format, image.size, image.mode)

plt.imshow(image)

plt.axis("off")

plt.show()

Output:

Total number of images: 565

JPEG (224, 224) RGB

3.5. Loading the data

'''Data preprocessing -- loading the data'''

batch_size = 32

img_height = 224

img_width = 224

"""

For a detailed introduction to image_dataset_from_directory(), see: https://mtyjkh.blog.csdn.net/article/details/117018789

"""

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

class_names = train_ds.class_names

print(class_names)

Output:

Found 565 files belonging to 4 classes.

Using 452 files for training.

Found 565 files belonging to 4 classes.

Using 113 files for validation.

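['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

3.6. Checking the data again

'''Data preprocessing -- checking the data again'''

# image_batch is a tensor of shape (32, 224, 224, 3): a batch of 32 images of size 224x224x3 (the last dimension is the RGB color channels).

# labels_batch is a tensor of shape (32,); these labels correspond to the 32 images.

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

Output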

(32, 224, 224, 3)

(32,)
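The batch shape above and the file counts reported in section 3.5 follow from simple arithmetic on the 565 images. The sketch below reproduces it in plain Python. The truncating `int(...)` used for the validation count is an assumption about how the split is rounded; it is consistent with the "452 for training / 113 for validation" lines in the output.

```python
import math

total_files = 565        # "Found 565 files" in the output of section 3.5
validation_split = 0.2
batch_size = 32

num_val = int(validation_split * total_files)  # assumed rounding rule: truncate
num_train = total_files - num_val

train_batches = math.ceil(num_train / batch_size)
last_train_batch = num_train - (train_batches - 1) * batch_size

print("train files:", num_train, "validation files:", num_val)
print("train batches:", train_batches, "size of last batch:", last_train_batch)
```

So only the first batches have the full shape (32, 224, 224, 3); under these assumptions the last training batch holds the remaining 4 images.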

3.7. Configuring the dataset

'''Data preprocessing -- configuring the dataset'''

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

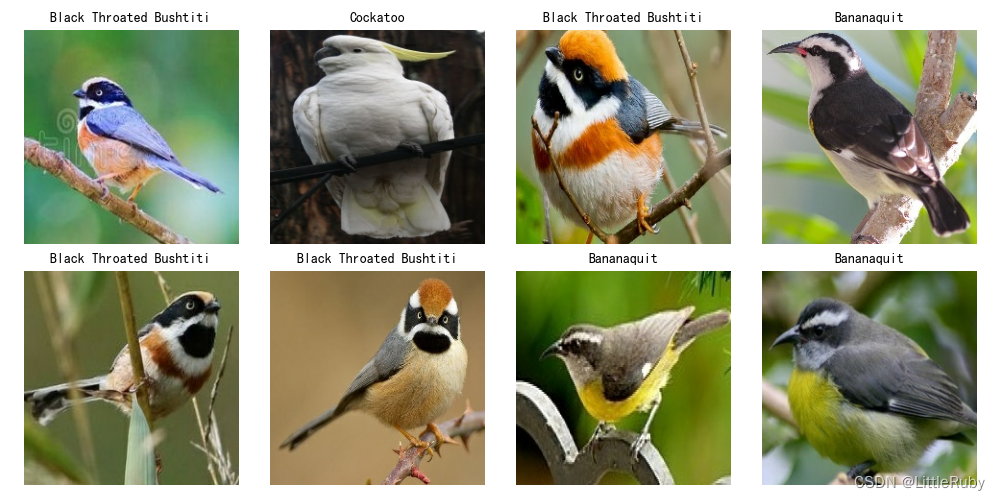

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

3.8. Visualizing the data

'''Data preprocessing -- visualizing the data'''

plt.figure(figsize=(10, 5))

for images, labels in train_ds.take(1):
    for i in range(8):
        ax = plt.subplot(2, 4, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]], fontsize=10)
        plt.axis("off")

# Show the figure

plt.show()

3.9. Building the DenseNet network

"""Building the DenseNet network"""

k = keras.backend

def conv_fn(x, growth_rate):
    # Bottleneck layer: BN -> ReLU -> 1x1 conv -> BN -> ReLU -> 3x3 conv
    x1 = keras.layers.BatchNormalization()(x)
    x1 = keras.layers.Activation("relu")(x1)  # apply ReLU to the normalized tensor, not to the raw input
    x1 = keras.layers.Conv2D(4 * growth_rate, 1, 1, padding="same", use_bias=False)(x1)
    x1 = keras.layers.BatchNormalization()(x1)
    x1 = keras.layers.Activation("relu")(x1)
    x1 = keras.layers.Conv2D(growth_rate, 3, 1, padding="same", use_bias=False)(x1)
    # Concatenate the new feature maps with the input along the channel axis
    return keras.layers.Concatenate(axis=3)([x, x1])

def dense_block(x, block, growth_rate=32):
    for i in range(block):
        x = conv_fn(x, growth_rate)
    return x

def trans_block(x, theta):
    # Transition layer: BN -> ReLU -> 1x1 conv that keeps a fraction theta of the channels -> 2x2 average pooling
    x1 = keras.layers.BatchNormalization()(x)
    x1 = keras.layers.Activation("relu")(x1)
    x1 = keras.layers.Conv2D(int(k.int_shape(x)[3] * theta), 1, 1, use_bias=False)(x1)
    x1 = keras.layers.AveragePooling2D(pool_size=(2, 2), strides=2, padding="valid")(x1)
    return x1

def densenet(input_shape, block, n_classes=1000):
    x_input = keras.layers.Input(shape=input_shape)
    x = keras.layers.Conv2D(64, kernel_size=(7, 7), strides=2, padding="same", use_bias=False)(x_input)
    x = keras.layers.BatchNormalization()(x)
    x = keras.layers.MaxPooling2D(pool_size=3, strides=2, padding="same")(x)  # 56*56*64

    x = dense_block(x, block[0])
    x = trans_block(x, 0.5)  # 28*28
    x = dense_block(x, block[1])
    x = trans_block(x, 0.5)  # 14*14
    x = dense_block(x, block[2])
    x = trans_block(x, 0.5)  # 7*7
    x = dense_block(x, block[3])

    x = keras.layers.BatchNormalization()(x)
    x = keras.layers.Activation("relu")(x)
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(n_classes, activation="softmax")(x)
    model = keras.models.Model(inputs=[x_input], outputs=[outputs])
    return model

model_121 = densenet([224, 224, 3], [6, 12, 24, 16]) # DenseNet-121

model_169 = densenet([224, 224, 3], [6, 12, 32, 32]) # DenseNet-169

model_201 = densenet([224, 224, 3], [6, 12, 48, 32]) # DenseNet-201

model_269 = densenet([224, 224, 3], [6, 12, 64, 48]) # this block configuration is the one called DenseNet-264 in the paper

model = model_121

model.summary()
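The channel counts that `densenet` produces can be checked by hand: each `conv_fn` call adds `growth_rate` channels, and each `trans_block` keeps a fraction `theta` of them. The sketch below traces this in plain Python and also shows where the "121" in the name comes from. The helper names `densenet_channels` and `densenet_depth` are hypothetical and exist only for this illustration.

```python
def densenet_channels(block, growth_rate=32, theta=0.5, stem_channels=64):
    """Trace the channel count after each dense block and transition layer."""
    channels = stem_channels
    trace = []
    for index, num_layers in enumerate(block):
        channels += num_layers * growth_rate  # each conv_fn call concatenates growth_rate new channels
        trace.append((f"dense_block{index + 1}", channels))
        if index < len(block) - 1:            # no transition after the last dense block
            channels = int(channels * theta)  # trans_block compresses the channels by theta
            trace.append((f"trans_block{index + 1}", channels))
    return trace


def densenet_depth(block):
    """Weighted layers: 2 convs per dense layer + stem conv + transition convs + classifier."""
    return 2 * sum(block) + 1 + (len(block) - 1) + 1


for name, channels in densenet_channels([6, 12, 24, 16]):
    print(name, channels)
print("depth:", densenet_depth([6, 12, 24, 16]))
```

For the DenseNet-121 configuration the trace ends at 1024 channels, which agrees with the `Linear(in_features=1024, ...)` classifier in the PyTorch printout of section 2.2.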

The resulting network structure is as follows:

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to