Original article: An Introduction to Prompt Engineering for OpenAI GPT LLMs

GitHub: Prompt-Engineering-Intro

March 2, 2023

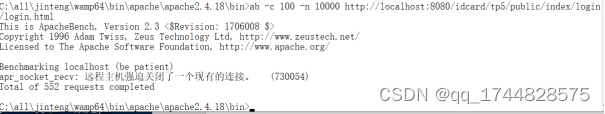

Naive prompt: a sentiment classifier driven by a prompt

```python
prompt = '''
Decide whether a Tweet's sentiment is positive, neutral, or negative.

Tweet: "I loved the new Batman movie!"

Sentiment:
'''
```

Advanced prompts

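To build a question-answering bot, we can supply documents or scraped websites as context. We can then ask the model questions that lead it through the intermediate steps needed to construct an answer. We can also ask the model to generate code for specific tasks, such as integrating external tools (a Python code executor, API calls, database queries, and so on). The output of that code can be kept as history, used to ask deeper questions, and fed back as a new prompt to steer the model further.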
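A minimal sketch of the first step described above: stuffing documents into a question-answering prompt. The helper name `build_qa_prompt` and the instruction wording are assumptions for illustration, not part of the original article; the returned string is what would be sent to the model.

```python
from typing import List


def build_qa_prompt(documents: List[str], question: str) -> str:
    """Hypothetical helper: place context documents ahead of the question."""
    # Number each document so the model can refer back to its sources
    context = "\n\n".join(
        f"[Document {i}]\n{doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say \"I don't know\".\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    docs = [
        "Tea is made by steeping processed tea leaves in hot water.",
        "Green tea is usually brewed with water below boiling point.",
    ]
    print(build_qa_prompt(docs, "How is tea made?"))
```

Asking the model to answer "only from the context" is one common design choice for keeping answers grounded in the supplied documents.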

- Few Shot Prompting

- Chain of Thought Prompting

- Self Consistency Prompting

- ReAct — Reason and Act prompting

- PAL — Program aided Language Models

- MRKL Systems — Modular Reasoning, Knowledge and Language

- Self-Ask with Search

Few Shot

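Few-shot prompting is a prompting strategy that gives the language model a small number of examples as part of its input to guide it toward the desired output. Such a prompt usually has three parts:

- 1. Prefix (instruction): tells the model what task to perform, for example "Give the antonym of every input".

- 2. Examples: a few worked instances of the task that show the expected output, for example a list of words and their antonyms.

- 3. Suffix (user input): the user's actual query, i.e. the input the model must produce output for. In this example, it is the word whose antonym the user wants.

Few-shot prompting gets its name because it "prompts" the model with only a few examples instead of fully retraining it. The model stays static: its parameters are not updated for the new task. Instead, we steer it toward the desired result by building a prompt that contains examples. This relies on in-context learning: large language models can pick up a pattern from a handful of examples in the prompt and generalize it to new inputs.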
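The prefix / examples / suffix structure can be sketched without any library. This is a dependency-free illustration assuming a simple `Word:` / `Antonym:` layout; `build_few_shot_prompt` is a hypothetical helper, not LangChain's `FewShotPromptTemplate`, although it assembles a similar string.

```python
from typing import Dict, List


def build_few_shot_prompt(prefix: str, examples: List[Dict[str, str]], suffix: str) -> str:
    """Hypothetical helper: instruction, then formatted examples, then the user input."""
    # Every example uses the same layout so the model can copy the pattern
    shots = "\n\n".join(f"Word: {ex['word']}\nAntonym: {ex['antonym']}" for ex in examples)
    return f"{prefix}\n\n{shots}\n\n{suffix}"


if __name__ == "__main__":
    examples = [
        {"word": "happy", "antonym": "sad"},
        {"word": "tall", "antonym": "short"},
    ]
    prompt = build_few_shot_prompt(
        prefix="Give the antonym of every input.",
        examples=examples,
        suffix="Word: fast\nAntonym:",  # user input; the model completes the last line
    )
    print(prompt)
```

Ending the suffix with an open `Antonym:` slot invites the model to continue the pattern rather than write free-form text.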

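```python
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import FewShotPromptTemplate, PromptTemplate

examples = [
    {"word": "happy", "antonym": "sad"},
    {"word": "tall", "antonym": "short"},
    {"word": "sunny", "antonym": "cloudy"},
]

# How each example is rendered inside the prompt
example_formatter_template = """
Word: {word}
Antonym: {antonym}\n
"""

example_prompt = PromptTemplate(
    input_variables=["word", "antonym"],
    template=example_formatter_template,
)

# The examples followed by the suffix instruction; no runtime input variables
few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Add three other examples.",
    input_variables=[],
)

llm = OpenAI()
chain = LLMChain(llm=llm, prompt=few_shot_prompt)

print("### Model Output ###")
print(chain.predict())
```

```
### Model Output ###
Word: fast
Antonym: slow

Word: generous
Antonym: stingy

Word: strong
Antonym: weak
```

A few-shot prompt can also be written as plain text. In the prompt below, two worked examples break a question into follow-up questions before answering, and the model is left to complete the final "Answer:".

Question: When was the last FIFA World Cup held?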

Answer:

Is follow-up needed? Yes, follow-up questions are needed.

Follow-up: Which country hosted the last FIFA World Cup? Intermediate Answer: The last FIFA World Cup was hosted by Qatar.

Follow-up: Who won the last FIFA World Cup? Intermediate Answer: The last FIFA World Cup was won by Argentina.

Follow-up: When did the last FIFA World Cup take place? Intermediate Answer: The last FIFA World Cup took place in 2022.

So the final answer is: The last FIFA World Cup was held in Qatar in 2022, and Argentina emerged as the winner.

Question: Who is considered the greatest basketball player of all time?

Answer:

Are follow-up questions required? Yes, follow-up questions are needed.

Follow-up: How many NBA championships has the greatest basketball player won? Intermediate Answer: The greatest basketball player, Michael Jordan, won six NBA championships.

Follow-up: Which team did Michael Jordan spend the majority of his career with? Intermediate Answer: Michael Jordan spent the majority of his career with the Chicago Bulls.

So the final answer is: Michael Jordan is considered the greatest basketball player of all time, winning six NBA championships with the Chicago Bulls.

Question: Who won the 2023 cricket world cup?

Answer:

Zero Shot

Add phrases such as "think step by step" or "break this task into simpler subtasks and solve" as a prefix or suffix to the original prompt. Such a prompt might look like this:

“Think step by step and explain the steps on how to make tea?”
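Attaching the trigger phrase is plain string manipulation. A minimal sketch, assuming the phrase is appended as a suffix by default; the function name and default phrase are illustrative choices, not from the original article.

```python
def add_reasoning_trigger(prompt: str, trigger: str = "Think step by step.", as_prefix: bool = False) -> str:
    """Hypothetical helper: attach a zero-shot reasoning trigger before or after the prompt."""
    prompt = prompt.strip()
    return f"{trigger} {prompt}" if as_prefix else f"{prompt}\n{trigger}"


if __name__ == "__main__":
    print(add_reasoning_trigger("Explain how to make tea."))
    print(add_reasoning_trigger(
        "Explain how to make tea.",
        trigger="Break this task into simpler subtasks and solve them.",
        as_prefix=True,
    ))
```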

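Chain of Thought (CoT)

The idea is to add intermediate reasoning steps to each of the few-shot examples, showing how the final solution is reached. The model is then asked to solve a new, user-defined problem. The LLM uses the examples to recognize the problem-solving pattern and applies it to produce intermediate reasoning steps and a final answer for the unsolved problem.

Concretely, the examples show the model the logic and the steps used to solve each problem. When the model then faces the new problem, it imitates that reasoning and produces a chain of intermediate steps that ideally leads to the correct solution. The key is that the model can pick up a general reasoning pattern from a few examples and apply it to new, similar problems.

This way the LLM not only gives an answer but also shows its reasoning, which helps users see how it arrived at the answer and makes the output more transparent and easier to check.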
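Hand-written CoT exemplars only help if their reasoning is correct. A sketch, using hypothetical helpers specific to the odd-number task in the example that follows, that generates each exemplar's reasoning step and answer from the data itself and then appends the unsolved question; it only builds the prompt string and does not call a model.

```python
from typing import List

QUESTION = "The odd numbers in this group add up to an even number: {}."


def cot_exemplar(numbers: List[int]) -> str:
    """One worked example: the question, the intermediate reasoning step, and the answer."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    answer = "True" if total % 2 == 0 else "False"
    reasoning = f"Adding all the odd numbers ({', '.join(map(str, odds))}) gives {total}."
    return QUESTION.format(", ".join(map(str, numbers))) + f"\nA: {reasoning} The answer is {answer}."


def build_cot_prompt(solved: List[List[int]], new_numbers: List[int]) -> str:
    """Worked exemplars first, then the new question with an empty answer slot."""
    exemplars = "\n\n".join(cot_exemplar(nums) for nums in solved)
    return exemplars + "\n\n" + QUESTION.format(", ".join(map(str, new_numbers))) + "\nA:"


if __name__ == "__main__":
    print(build_cot_prompt([[4, 8, 9, 15, 12, 2, 1]], [15, 32, 5, 13, 82, 7, 1]))
```

The same helper doubles as a checker: `cot_exemplar` applied to the new group yields the reference reasoning against which the model's chain can be compared.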

```python
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

# One worked example with its reasoning step, then the new question
template = """
The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.
A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.
A:"""

prompt = PromptTemplate(input_variables=[], template=template)
llm = OpenAI()
chain = LLMChain(llm=llm, prompt=prompt)

print("### Model Output ###")
print(chain.predict())
```

```
### Model Output ###
Adding all the odd numbers (15, 5, 13, 7, 1) gives 41. The answer is False.
```

Tree of Thoughts (ToT)

Start with several approaches to the problem and prune them one by one until a final solution remains. This can serve as a prompt when solving math problems with LLMs, or for brainstorming an idea.

Assume 5 experts will take turns sharing one step of their thinking to answer the question asked. They will continue this process, moving on to the next step, until one of them realizes they made a mistake and leaves the discussion.

The question is How to make tea?

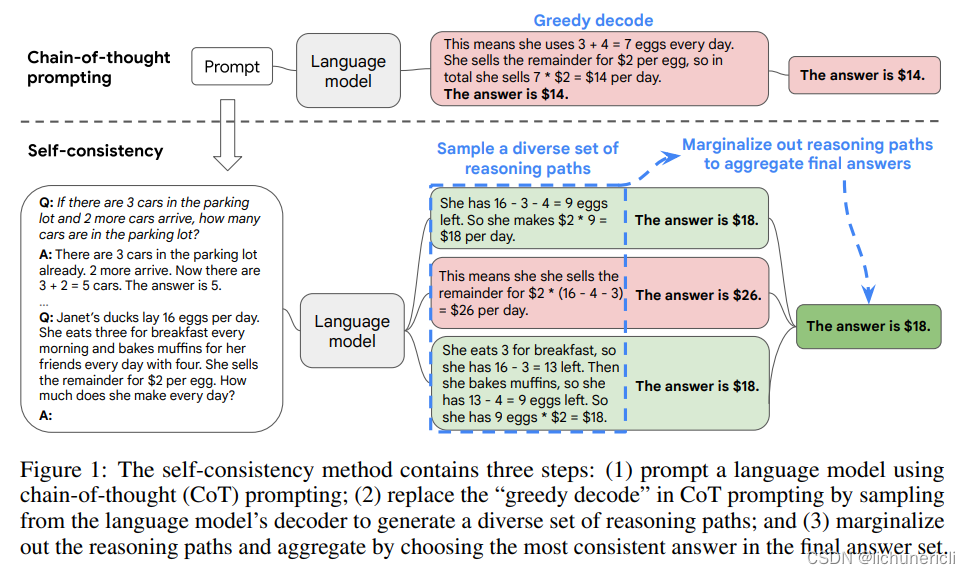
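Tree of Thoughts can also be run as an explicit search rather than a single prompt: propose several candidate next steps ("thoughts"), score them, keep the most promising few, and repeat. In a real ToT setup both proposing and scoring are LLM calls; the sketch below replaces them with deterministic stand-ins on a toy puzzle (reach a target number from a start value using `+3`, `*2` and `-1`), so the puzzle, the operations, and the scoring heuristic are all illustrative assumptions.

```python
from typing import Callable, List, Optional, Tuple

# Toy "thoughts": each step applies one arithmetic operation (illustrative puzzle)
OPERATIONS: List[Tuple[str, Callable[[int], int]]] = [
    ("+3", lambda x: x + 3),
    ("*2", lambda x: x * 2),
    ("-1", lambda x: x - 1),
]


def propose(value: int) -> List[Tuple[str, int]]:
    """Stand-in for the LLM call that proposes candidate next steps."""
    return [(name, op(value)) for name, op in OPERATIONS]


def score(value: int, target: int) -> int:
    """Stand-in for the LLM call that rates a partial solution (closer is better)."""
    return -abs(target - value)


def tree_of_thoughts(start: int, target: int, beam_width: int = 3, max_depth: int = 6) -> Optional[List[str]]:
    """Breadth-first search that keeps only the `beam_width` best partial paths per level."""
    if start == target:
        return []
    frontier: List[Tuple[int, List[str]]] = [(start, [])]
    for _ in range(max_depth):
        candidates: List[Tuple[int, List[str]]] = []
        for value, path in frontier:
            for name, new_value in propose(value):
                if new_value == target:
                    return path + [name]  # this chain of thoughts solved the task
                candidates.append((new_value, path + [name]))
        # Prune: abandon all but the most promising branches
        candidates.sort(key=lambda c: score(c[0], target), reverse=True)
        frontier = candidates[:beam_width]
    return None  # no solution within max_depth steps


if __name__ == "__main__":
    print(tree_of_thoughts(start=2, target=11))
```

The `beam_width` cut is what distinguishes this from exhaustive search: branches that score poorly are dropped, mirroring experts who "leave the discussion".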

Self-consistency

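Self-consistency prompting extends Chain of Thought (CoT) by running the same CoT prompt several times. Each run samples a reasoning path and an answer; the answers are then compared, and the most frequent one is taken as the final output. The idea is that a complex reasoning problem can usually be approached along several different reasoning paths that all lead to the same correct answer. Selecting the answer by a simple majority vote performs about as well as more elaborate probability-weighted aggregation. Repeatedly generating and comparing answers therefore makes it more likely that the correct answer is found.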

Self Consistency is Proposed, Outperforms CoT Prompting

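- The paper https://arxiv.org/pdf/2203.11171.pdf uses self-consistency to replace the naive greedy decoding used in chain-of-thought prompting.

- Instead of taking only the greedy reasoning path, it first samples a diverse set of reasoning paths and then selects the most consistent answer by marginalizing out the sampled reasoning paths.

- Self-consistency leverages the intuition that a complex reasoning problem typically admits multiple different ways of thinking that lead to its unique correct answer.

Assuming CoT is already known, the method has three steps:

- 1) First, prompt the language model with a set of hand-written chain-of-thought examples.

- 2) Next, sample a set of candidate outputs from the language model's decoder, producing a diverse set of candidate reasoning paths.

- 3) Finally, aggregate the answers by marginalizing out the sampled reasoning paths and choosing the most consistent answer among the generated answers.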
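Step 3 can be sketched as a majority vote over the final answers extracted from each sampled reasoning path. This assumes numeric answers and that every sample ends with "The answer is X." as in the exemplars used here; the regular expression and function names are illustrative, and the sampling itself (calling the model several times at a non-zero temperature) is left out. The sample strings are shortened versions of the three outputs shown later in this section.

```python
import re
from collections import Counter
from typing import List, Optional

# Assumes each sampled output ends with "The answer is X." as in the exemplars
ANSWER_PATTERN = re.compile(r"The answer is\s+(-?[\d.,]+)")


def extract_answer(output: str) -> Optional[str]:
    """Return the final numeric answer of one reasoning path, or None if none is found."""
    matches = ANSWER_PATTERN.findall(output)
    if not matches:
        return None
    return matches[-1].rstrip(".").replace(",", "")


def self_consistent_answer(outputs: List[str]) -> Optional[str]:
    """Majority vote over the answers extracted from several sampled reasoning paths."""
    answers = [a for a in map(extract_answer, outputs) if a is not None]
    if not answers:
        return None
    # most_common(1) -> [(answer, count)]; ties go to the answer seen first
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    samples = [
        "... Now I'm 70, so my sister is 70 - 3 = 67 years old. The answer is 67.",
        "... That means my sister is now 70 - 3 = 67 years old. The answer is 67.",
        "... Now I am 70, so she is 70/2 = 35. The answer is 35.",
    ]
    print(self_consistent_answer(samples))  # prints 67
```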

```python
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

# Table 17 from the self-consistency paper: https://arxiv.org/pdf/2203.11171.pdf
template = """
Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?
A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees. The answer is 6.

...

Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?
A: She bought 5 bagels for $3 each. This means she spent 5 * $3 = $15 on the bagels. She had $23 in beginning, so now she has $23 - $15 = $8. The answer is 8.

Q: When I was 6 my sister was half my age. Now I’m 70 how old is my sister?
A:
"""

prompt = PromptTemplate(input_variables=[], template=template)
llm = OpenAI()  # default (non-zero) temperature, so repeated calls can sample different reasoning paths
chain = LLMChain(llm=llm, prompt=prompt)

print("### Model Output ###")
for i in range(3):
    print(f"Output {i+1}\n {chain.predict()}")
```

```
### Model Output ###
Output 1
 When I was 6, my sister was half my age. This means that my sister was 6/2 = 3 years old. Now I'm 70, so my sister is 70 - 3 = 67 years old. The answer is 67.
Output 2
 When I was 6 my sister was half my age. That means when I was 6 my sister was 6/2 = 3. That means my sister is now 70 - 3 = 67 years old. The answer is 67.
Output 3
 At the time when I was 6 my sister was half my age. That means she was 6/2 = 3. Now I am 70, so she is 70/2 = 35. The answer is 35.
```

Two of the three samples agree on 67, so a majority vote returns 67, the correct answer, and the flawed path in Output 3 (70/2 = 35) is outvoted.

ReAct (Reasoning and Acting)

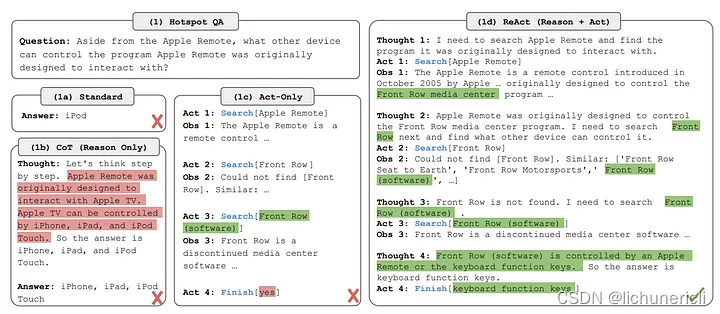

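ReAct (Reasoning and Acting) combines reasoning and acting in a language model. The model can perform task-specific actions, such as running calculations, searching the web, or querying a database or data store. After each action the model receives an observation, which guides its next thought. This forms a Thought -> Action -> Observation loop that the model repeats until it produces an answer.

The paper describes this as an iterative process in which, at every step, the model decides on its next action based on its earlier observations and reasoning. In this way the model gathers information step by step, refines its reasoning, and finally arrives at a comprehensive answer. The advantage is that external tools and resources augment the model's reasoning, letting it handle more complex and varied tasks.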

Solve the given task by following Thought, Action and Observation for every step.

Thought can reason about the current situation and next steps to follow,

Observation is what results are achieved after taking Action.

Action can be of 3 types

Search [item]: Search about item in knowledge base or internet

Follow-up [keyword]: Come up with new follow-up questions based on keyword

Finish [answer]: Give final answer

How to prepare tea?
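The control loop behind this prompt can be sketched without an LLM. Below, `make_scripted_model` builds a hypothetical stand-in that replays pre-written Thought/Action lines (a real agent would send the growing transcript to the model at every step), and `search` is a toy in-memory knowledge base; only the `Action [argument]` parsing and the Thought -> Action -> Observation bookkeeping illustrate the technique. The Follow-up action from the prompt is omitted for brevity.

```python
import re
from typing import Callable, Dict, List, Tuple

# Toy knowledge base standing in for a real search tool (illustrative data only)
KNOWLEDGE_BASE: Dict[str, str] = {
    "tea": "Tea is prepared by steeping tea leaves in hot water for a few minutes.",
}

# Matches actions such as "Search [tea]" or "Finish[answer]"
ACTION_PATTERN = re.compile(r"^(Search|Finish)\s*\[(.*)\]$")


def search(item: str) -> str:
    """Toy Search tool: look the item up in the in-memory knowledge base."""
    return KNOWLEDGE_BASE.get(item.strip().lower(), f"No results for {item!r}.")


TOOLS: Dict[str, Callable[[str], str]] = {"Search": search}


def parse_action(reply: str) -> Tuple[str, str]:
    """Find the 'Action: Name[argument]' line in a model reply."""
    for line in reply.splitlines():
        if line.startswith("Action:"):
            match = ACTION_PATTERN.match(line[len("Action:"):].strip())
            if match:
                return match.group(1), match.group(2)
    raise ValueError(f"no valid action in reply: {reply!r}")


def react_loop(model: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Repeat Thought -> Action -> Observation until the model emits Finish[...]."""
    transcript = f"Question: {question}\n"
    for step in range(1, max_steps + 1):
        reply = model(transcript)  # a real LLM would see the full transcript so far
        transcript += reply + "\n"
        name, argument = parse_action(reply)
        if name == "Finish":
            return argument
        observation = TOOLS[name](argument)
        transcript += f"Observation {step}: {observation}\n"
    raise RuntimeError(f"no Finish action within {max_steps} steps")


def make_scripted_model(replies: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: returns pre-written replies in order."""
    queue = iter(replies)
    return lambda transcript: next(queue)


if __name__ == "__main__":
    model = make_scripted_model([
        "Thought: I should look up how tea is prepared.\nAction: Search[tea]",
        "Thought: The observation answers the question.\n"
        "Action: Finish[Steep tea leaves in hot water for a few minutes.]",
    ])
    print(react_loop(model, "How to prepare tea?"))
```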

```python
from langchain import OpenAI, Wikipedia
from langchain.agents import initialize_agent, Tool
from langchain.agents.react.base import DocstoreExplorer

docstore = DocstoreExplorer(Wikipedia())

tools = [
    Tool(name="Search", func=docstore.search, description="Try to search for wiki page."),
    Tool(name="Lookup", func=docstore.lookup, description="Lookup a term in the page, imitating cmd-F functionality"),
]

llm = OpenAI(temperature=0, model_name="text-davinci-003")
react = initialize_agent(tools, llm, agent="react-docstore", verbose=True)

question = "What profession does Nicholas Ray and Elia Kazan have in common?"
react.run(question)
```

```
### Model Output ###
> Entering new AgentExecutor chain...

Thought 1: I need to search Nicholas Ray and Elia Kazan, find their professions, then
find the profession they have in common.
Action 1: Search[Nicholas Ray]
Observation 1: Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 – June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause. He is appreciated for many narrative features produced between 1947 and 1963 including They Live By Night, In A Lonely Place, Johnny Guitar, and Bigger Than Life, as well as an experimental work produced throughout the 1970s titled We Can't Go Home Again, which was unfinished at the time of Ray's death.

Ray's compositions within the CinemaScope frame and use of color are particularly well-regarded and he was an important influence on the French New Wave, with Jean-Luc Godard famously writing in a review of Bitter Victory, "... there is cinema. And the cinema is Nicholas Ray."
Thought 2: Professions of Nicholas Ray are director, screenwriter, and actor. I need to search Elia Kazan next and find his professions.
Action 2: Search[Elia Kazan]
Observation 2: Elia Kazan (; born Elias Kazantzoglou (Greek: Ηλίας Καζαντζόγλου); September 7, 1909 – September 28, 2003) was an American film and theatre director, producer, screenwriter and actor, described by The New York Times as "one of the most honored and influential directors in Broadway and Hollywood history".

Born in Constantinople (now Istanbul), to Cappadocian Greek parents, his family came to the United States in 1913. After attending Williams College and then the Yale School of Drama, he acted professionally for eight years, later joining the Group Theatre in 1932, and co-founded the Actors Studio in 1947. With Robert Lewis and Cheryl Crawford, his actors' studio introduced "Method Acting" under the direction of Lee Strasberg. Kazan acted in a few films, including City for Conquest (1940).

His films were concerned with personal or social issues of special concern to him. Kazan writes, "I don't move unless I have some empathy with the basic theme." His first such "issue" film was Gentleman's Agreement (1947), with Gregory Peck, which dealt with antisemitism in America. It received eight Oscar nominations and three wins, including Kazan's first for Best Director. It was followed by Pinky (1949), one of the first films in mainstream Hollywood to address racial prejudice against African Americans. A Streetcar Named Desire (1951), an adaptation of the stage play which he had also directed, received twelve Oscar nominations, winning four, and was Marlon Brando's breakthrough role. Three years later, he directed Brando again in On the Waterfront, a film about union corruption on the New York harbor waterfront. It also received 12 Oscar nominations, winning eight. In 1955, he directed John Steinbeck's East of Eden, which introduced James Dean to movie audiences.

A turning point in Kazan's career came with his testimony as a witness before the House Committee on Un-American Activities in 1952 at the time of the Hollywood blacklist, which brought him strong negative reactions from many friends and colleagues. His testimony helped end the careers of former acting colleagues Morris Carnovsky and Art Smith, along with the work of playwright Clifford Odets. Kazan and Odets had made a pact to name each other in front of the committee. Kazan later justified his act by saying he took "only the more tolerable of two alternatives that were either way painful and wrong." Nearly a half-century later, his anti-Communist testimony continued to cause controversy. When Kazan was awarded an honorary Oscar in 1999, dozens of actors chose not to applaud as 250 demonstrators picketed the event.

Kazan influenced the films of the 1950s and 1960s with his provocative, issue-driven subjects. Director Stanley Kubrick called him, "without question, the best director we have in America, [and] capable of performing miracles with the actors he uses.": 36 Film author Ian Freer concludes that even "if his achievements are tainted by political controversy, the debt Hollywood—and actors everywhere—owes him is enormous." In 2010, Martin Scorsese co-directed the documentary film A Letter to Elia as a personal tribute to Kazan.
Thought 3: Professions of Elia Kazan are director, producer, screenwriter, and actor. So profession Nicholas Ray and Elia Kazan have in common is director, screenwriter, and actor.
Action 3: Finish[director, screenwriter, actor]

> Finished chain.

'director, screenwriter, actor'
```

PAL (Program-aided Language Model)

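PAL (Program-aided Language Model) addresses the mistakes LLMs tend to make on math and logic problems by turning the intermediate reasoning steps into program code. In PAL, the model generates a program that encodes the intermediate steps and offloads the solution step to a runtime, typically a Python interpreter, which executes the program to produce the final answer.

For example, to solve a math word problem we can combine an LLM with a Python interpreter. The LLM reads the problem and writes Python code that solves it; the interpreter then runs that code to compute the answer. This reduces arithmetic and logic errors, because the actual computation is delegated to a programming-language runtime that carries it out exactly.

PAL thus combines the LLM's language understanding with the exact computation of a programming language, improving accuracy and reliability on math and logic problems.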
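The division of labor can be sketched in a few lines: the model writes a `solution()` function and the Python interpreter runs it. Here `generated_code` is a hand-written stand-in for what an LLM might return for the age puzzle from the self-consistency section (in PAL this string would come from the model), and executing it with `exec` in a fresh namespace is an assumption of this sketch; untrusted model output should be sandboxed before it is run.

```python
# Hypothetical model output for: "When I was 6 my sister was half my age.
# Now I'm 70, how old is my sister?" (in PAL this string is generated by the LLM)
generated_code = '''
def solution():
    my_age_then = 6
    sister_age_then = my_age_then // 2
    age_gap = my_age_then - sister_age_then
    my_age_now = 70
    return my_age_now - age_gap
'''


def run_program(code: str):
    """Offload the computation: execute the generated program and call solution()."""
    namespace: dict = {}
    exec(code, namespace)  # only run trusted or sandboxed code
    return namespace["solution"]()


if __name__ == "__main__":
    print(run_program(generated_code))  # prints 67
```

Because the age gap is computed explicitly, the program cannot make the "70/2 = 35" slip seen in one of the sampled CoT outputs.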

```python
from langchain import OpenAI
from langchain.chains import PALChain

llm = OpenAI(model_name='code-davinci-002', temperature=0, max_tokens=512)
pal_chain = PALChain.from_math_prompt(llm, verbose=True)

question = "Jan has three times the number of pets as Marcia. Marcia has two more pets than Cindy. If Cindy has four pets, how many total pets do the three have?"
pal_chain.run(question)
```

```
### Model Output ###
> Entering new PALChain chain...
def solution():
    """Jan has three times the number of pets as Marcia. Marcia has two more pets than Cindy. If Cindy has four pets, how many total pets do the three have?"""
    cindy_pets = 4
    marcia_pets = cindy_pets + 2
    jan_pets = marcia_pets * 3
    total_pets = cindy_pets + marcia_pets + jan_pets
    result = total_pets
    return result
> Finished chain.

'28'
```

MRKL (Modular Reasoning, Knowledge, and Language)

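An MRKL system combines a large language model with external tools to handle complex problems: the model selects and uses different tools as needed. This is very similar to ReAct, which also couples a language model with external actions to strengthen its reasoning. In a ReAct-style approach, the model might use one of the following three tools to reason about a problem and act:

- a) Google search via SerpApi: the model can search the internet, for example to find a famous actor's girlfriend.

- b) Database query: the model can query a database for specific information, such as a person's age.

- c) Generate and run Python code with the LLM: the LLM turns a natural-language description into Python code, which is then run in a Python REPL to perform the calculation.

Now consider a concrete example: we ask the system to find the girlfriend of the actor Leonardo DiCaprio and compute her current age raised to the 0.43 power.

The MRKL system might first run a Google search through SerpApi to find out who she is. Once it has her name, it might query a database for her date of birth and compute her current age. Finally, it uses the LLM to generate Python code that raises this age to the 0.43 power and runs the code in a Python REPL to obtain the final answer.

Throughout this process, the MRKL system combines the language model with external tools and handles the problem in a modular way, which makes its reasoning more powerful and flexible.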
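The modular idea can be sketched as a router that hands each query to one expert module. In MRKL the router is the LLM, which picks a tool based on the tool descriptions; here a hypothetical character-based rule stands in for that decision, the `Calculator` module is a small safe arithmetic evaluator (in place of LLM-generated code run in a REPL), and `Lookup` is a toy in-memory table, so all module names and data are illustrative.

```python
import ast
import operator
from typing import Callable, Dict

# Arithmetic operators the toy calculator module supports
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator(expression: str) -> str:
    """Calculator module: evaluate +, -, *, /, ** (or ^) on numbers without eval()."""
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    tree = ast.parse(expression.replace("^", "**"), mode="eval")
    return str(evaluate(tree.body))


# Toy knowledge module (illustrative data)
FACTS: Dict[str, str] = {"capital of france": "Paris"}


def lookup(query: str) -> str:
    """Lookup module: answer from a small in-memory table."""
    return FACTS.get(query.strip().lower().rstrip("?"), "unknown")


MODULES: Dict[str, Callable[[str], str]] = {"Calculator": calculator, "Lookup": lookup}


def route(query: str) -> str:
    """Hypothetical stand-in for the LLM router: pick a module, then delegate to it."""
    is_math = all(ch.isdigit() or ch in " .+-*/^()" for ch in query)
    module = "Calculator" if is_math else "Lookup"
    return MODULES[module](query)


if __name__ == "__main__":
    print(route("22^0.43"))
    print(route("Capital of France?"))
```

Keeping each module behind a plain `str -> str` interface mirrors how the LangChain `Tool` objects below wrap search, math, and database chains.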

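MRKL (Modular Reasoning, Knowledge, and Language) and ReAct (Reasoning and Acting) both combine a language model with external tools or actions to improve its ability to handle complex problems. Although the two are similar, they differ in a few key ways in design and implementation:

1. **Approach and goals**:

- **MRKL**: MRKL emphasizes a modular reasoning process that combines the language model, knowledge, and external tools. It usually involves a more elaborate modular design in which each module handles one specific aspect of the problem, such as knowledge retrieval, logical reasoning, or language generation.

- **ReAct**: ReAct focuses on having the language model generate a sequence of intermediate reasoning steps that are then turned into concrete actions (such as API calls or code execution) to solve a specific problem. The emphasis is on the model producing executable actions.

2. **Use of tools and actions**:

- **MRKL**: external tools and databases are part of the modular design, and the model selects and uses them as needed. This can involve broader tool integration and more complex decision-making.

- **ReAct**: the actions the model generates are usually task-specific, such as search, lookup, or calculation. They are carried out through code or instructions the model produces and do not necessarily involve multiple independent tool modules.

3. **Implementation and complexity**:

- **MRKL**: because of its modular design, an MRKL system may need a more complex implementation and more integration work. Each module may have to be developed and maintained separately, and the interactions between modules must be designed carefully.

- **ReAct**: ReAct is comparatively direct: it centers on the model generating executable actions that run directly in a runtime environment. It may be easier to implement because it relies mainly on the model's output and an external execution environment.

Overall, MRKL and ReAct both combine language models with external resources to improve reasoning. MRKL puts more weight on modular system design, while ReAct puts more weight on the concrete actions the model generates. Each has its strengths and suits different kinds of tasks and environments.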

```python
from langchain import LLMMathChain, OpenAI, SerpAPIWrapper, SQLDatabase, SQLDatabaseChain
from langchain.agents import initialize_agent, Tool

llm = OpenAI(temperature=0)
search = SerpAPIWrapper()
llm_math_chain = LLMMathChain(llm=llm, verbose=True)

db = SQLDatabase.from_uri("sqlite:///Chinook.db")
db_chain = SQLDatabaseChain(llm=llm, database=db, verbose=True)

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events. You should ask targeted questions",
    ),
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="useful for when you need to answer questions about math",
    ),
    Tool(
        name="FooBar DB",
        func=db_chain.run,
        description="useful for when you need to answer questions about FooBar. Input should be in the form of a question containing full context",
    ),
]

mrkl = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)
mrkl.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")
```

````
### Model Output ###
> Entering new AgentExecutor chain...
I need to find out who Leo DiCaprio's girlfriend is and then calculate her age raised to the 0.43 power.
Action: Search
Action Input: "Who is Leo DiCaprio's girlfriend?"
Observation: Camila Morrone
Thought: I need to calculate her age raised to the 0.43 power
Action: Calculator
Action Input: 22^0.43

> Entering new LLMMathChain chain...
22^0.43
```python
import math
print(math.pow(22, 0.43))
```
Answer: 3.777824273683966
> Finished chain.

Observation: Answer: 3.777824273683966
Thought: I now know the final answer
Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.777824273683966.

> Finished chain.

"Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.777824273683966."
````

```python
mrkl.run("""
What is the full name of the artist who recently released an album called
'The Storm Before the Calm' and are they in the FooBar database?
If so, what albums of theirs are in the FooBar database and what is the
total duration of all such albums?""")
```

```
### Model Output ###
> Entering new AgentExecutor chain...
I need to find out the artist's full name, if they are in the FooBar database, and the total duration of their albums in the database.
Action: Search
Action Input: "The Storm Before the Calm" artist
Observation: The Storm Before the Calm (stylized in all lowercase) is the tenth (and eighth international) studio album by Canadian-American singer-songwriter Alanis ...
Thought: I now need to check if Alanis Morissette is in the FooBar database.
Action: FooBar DB
Action Input: Is Alanis Morissette in the FooBar database?

> Entering new SQLDatabaseChain chain...
Is Alanis Morissette in the FooBar database?
SQLQuery: SELECT Name FROM Artist WHERE Name LIKE '%Alanis Morissette%';
SQLResult: [('Alanis Morissette',)]
Answer: Yes, Alanis Morissette is in the FooBar database.
> Finished chain.

Observation: Yes, Alanis Morissette is in the FooBar database.
Thought: I now need to find out what albums of hers are in the FooBar database and what is the total duration of all such albums.
Action: FooBar DB
Action Input: What albums of Alanis Morissette are in the FooBar database and what is the total duration of all such albums?

> Entering new SQLDatabaseChain chain...
What albums of Alanis Morissette are in the FooBar database and what is the total duration of all such albums?
SQLQuery: SELECT Album.Title, SUM(Track.Milliseconds) AS TotalDuration FROM Album INNER JOIN Track ON Album.AlbumId = Track.AlbumId WHERE Album.ArtistId = (SELECT ArtistId FROM Artist WHERE Name = 'Alanis Morissette') GROUP BY Album.Title;
SQLResult: [('Jagged Little Pill', 3450925)]
Answer: The albums of Alanis Morissette in the FooBar database are 'Jagged Little Pill' and the total duration of all such albums is 3,450,925 milliseconds.
> Finished chain.

Observation: The albums of Alanis Morissette in the FooBar database are 'Jagged Little Pill' and the total duration of all such albums is 3,450,925 milliseconds.
Thought: I now know the final answer.
Final Answer: Alanis Morissette is the artist who recently released an album called 'The Storm Before the Calm' and her albums in the FooBar database are 'Jagged Little Pill' with a total duration of 3,450,925 milliseconds.

> Finished chain.

"Alanis Morissette is the artist who recently released an album called 'The Storm Before the Calm' and her albums in the FooBar database are 'Jagged Little Pill' with a total duration of 3,450,925 milliseconds."
```

By integrating external search, database access, and other tools, you can build very powerful applications that use the LLM as a natural-language interface to the system.

Self-Ask-With-Search

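Self-ask (with search) is a method for compositional reasoning tasks with language models, in which the answer to the overall question is obtained by correctly composing the answers to several sub-questions. This differs from Chain of Thought (CoT), which typically reaches the final answer through a sequence of intermediate reasoning steps without necessarily composing the answers to explicit sub-questions.

In self-ask, the model first decomposes the original question into a set of sub-questions and then answers each of them. Each sub-answer is an intermediate answer; in the "with search" variant these intermediate answers come from a search engine rather than from the model itself. Finally, the model composes the intermediate answers into the answer to the original question.

For example, to answer "Who was president of the U.S. when superconductivity was discovered?", the model might first break the question into these sub-questions:

- 1. In what year was superconductivity discovered?

- 2. Who was president of the U.S. in that year?

The model answers the two sub-questions in turn, obtaining the year superconductivity was discovered and the president in that year, and then combines them into the final answer.

The key is compositional ability: the model must identify the sub-questions needed to solve the whole problem and correctly combine their answers. Breaking a problem down this way simplifies it and improves the model's ability to answer complex questions.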
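The decompose, answer, compose pattern can be sketched with the search step stubbed out. `fake_search` is a hypothetical dictionary lookup standing in for a search API such as SerpAPI, and the follow-up questions are written by hand where the LLM would normally generate them. The second sub-question is filled in with the first intermediate answer, which is what makes the reasoning compositional.

```python
from typing import Callable, Dict, List

# Hypothetical search backend: canned answers instead of a real search API
FAKE_INDEX: Dict[str, str] = {
    "When was superconductivity discovered?": "1911",
    "Who was president of the U.S. in 1911?": "William Howard Taft",
}


def fake_search(query: str) -> str:
    return FAKE_INDEX.get(query, "no result")


def self_ask(follow_ups: List[str], search: Callable[[str], str]) -> str:
    """Answer follow-up questions in order; later ones may reuse earlier answers via {0}, {1}, ..."""
    answers: List[str] = []
    for template in follow_ups:
        question = template.format(*answers)  # compose: plug in earlier intermediate answers
        answer = search(question)
        print(f"Follow up: {question}\nIntermediate answer: {answer}")
        answers.append(answer)
    # In this sketch the last intermediate answer is the final answer
    return answers[-1]


if __name__ == "__main__":
    final = self_ask(
        ["When was superconductivity discovered?", "Who was president of the U.S. in {0}?"],
        fake_search,
    )
    print(f"So the final answer is: {final}")
```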

```python
from langchain import OpenAI, SerpAPIWrapper
from langchain.agents import initialize_agent, Tool

llm = OpenAI(temperature=0)
search = SerpAPIWrapper()

tools = [
    Tool(name="Intermediate Answer", func=search.run, description="useful for searching"),
]

self_ask_with_search = initialize_agent(tools, llm, agent="self-ask-with-search", verbose=True)
self_ask_with_search.run("Who was president of the U.S. when superconductivity was discovered?")
```

```
### Model Output ###
> Entering new AgentExecutor chain...
Yes.
Follow up: When was superconductivity discovered?
Intermediate answer: 1911
Follow up: Who was president of the U.S. in 1911?
Intermediate answer: William Howard Taft was elected the 27th President of the United States (1909-1913) and later became the tenth Chief Justice of the United States (1921-1930), the only person to have served in both of these offices.
So the final answer is: William Howard Taft
> Finished chain.

'William Howard Taft'
```