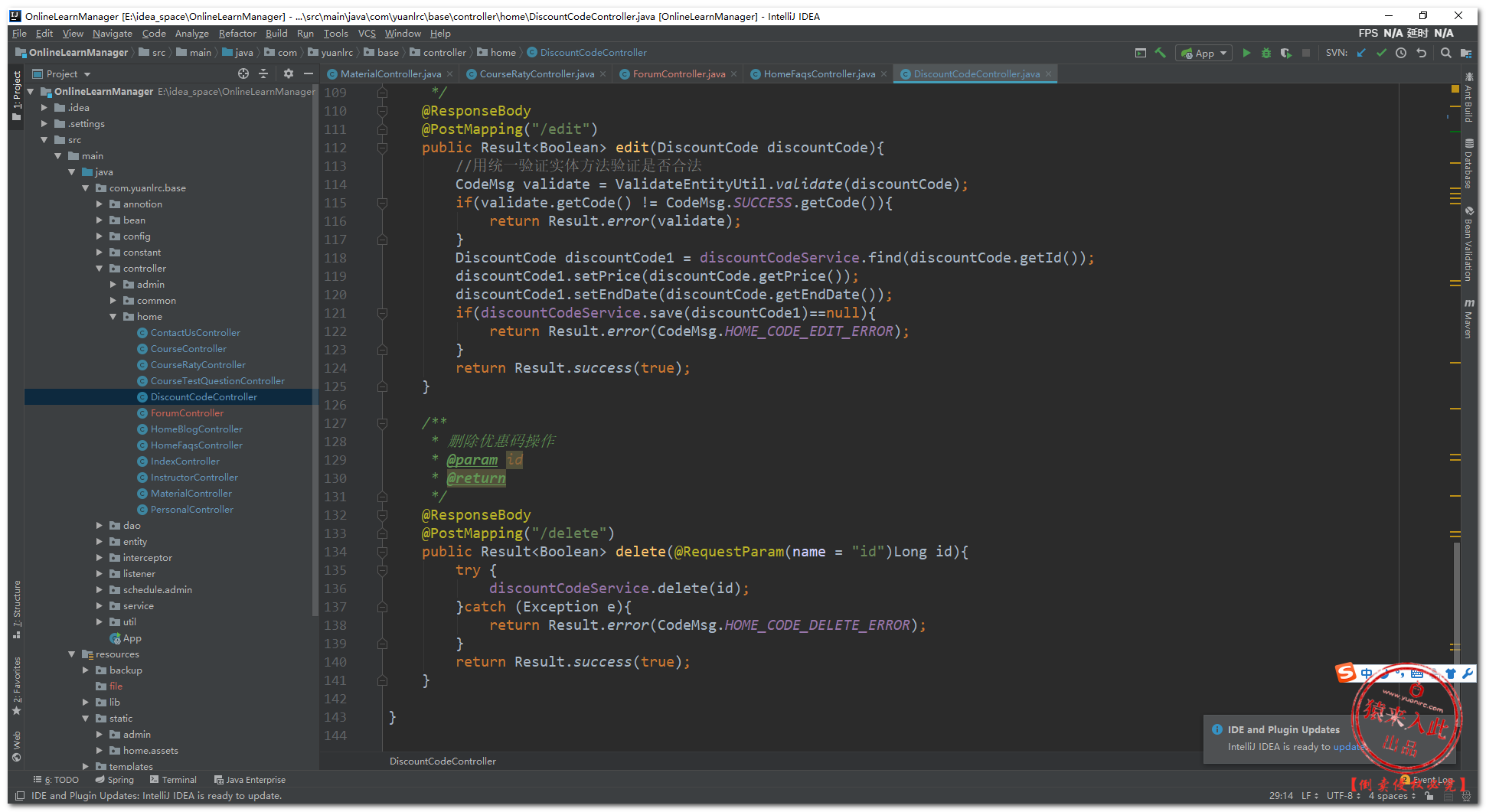

LOGIC ROUTER

Sub-agents are invoked through plain code logic.

https://github.com/ganeshnehru/RAG-Multi-Modal-Generative-AI-Agent

    import re
    import logging
    from chains.code_assistant import CodeAssistant
    from chains.language_assistant import LanguageAssistant
    from chains.vision_assistant import VisionAssistant


    class AssistantRouter:
        def __init__(self):
            self.code_assistant = CodeAssistant()
            self.language_assistant = LanguageAssistant()
            self.vision_assistant = VisionAssistant()

        def route_input(self, user_input='', image_path=None):
            """
            Route the input to the appropriate assistant based on the content of the user input.

            :param user_input: str, The input text from the user.
            :param image_path: str, Path to an image file if provided.
            :return: tuple, The response from the appropriate assistant and the assistant name.
            """
            try:
                if image_path:
                    # Process image and route to VisionAssistant
                    image_b64 = self.vision_assistant.process_image(image_path)
                    if image_b64 is None:
                        raise ValueError("Failed to process image.")
                    input_string = f"{user_input}|{image_b64}"
                    response = self.vision_assistant.invoke(input_string)
                    return response, 'VisionAssistant'

                if self.is_code_related(user_input):
                    response = self.code_assistant.invoke(user_input)
                    return response, 'CodeAssistant'
                else:
                    response = self.language_assistant.invoke(user_input)
                    return response, 'LanguageAssistant'
            except Exception as e:
                logging.error(f"Error in AssistantRouter.route_input: {e}")
                return {"content": f"Error: {str(e)}"}, 'Error'

        def is_code_related(self, text):
            """
            Determine if the text input is related to coding.

            :param text: str, The input text.
            :return: bool, True if the text is code related, False otherwise.
            """
            # Basic keyword-based detection
            code_keywords = [
                'function', 'class', 'def', 'import', 'print', 'variable',
                'loop', 'array', 'list', 'dictionary', 'exception', 'error', 'bug',
                'code', 'compile', 'execute', 'algorithm', 'data structure', 'java', 'python', 'javascript', 'c++',
                'c#', 'ruby', 'php', 'html', 'css', 'sql', 'swift', 'kotlin', 'go', 'rust', 'typescript', 'r', 'perl',
                'scala', 'shell', 'bash', 'powershell', 'objective-c', 'matlab', 'groovy', 'lua', 'dart', 'cobol',
                'fortran', 'haskell', 'lisp', 'pascal', 'prolog', 'scheme', 'smalltalk', 'verilog', 'vhdl',
                'assembly', 'coffeescript', 'f#', 'julia', 'racket', 'scratch', 'solidity', 'vba', 'abap', 'apex',
                'awk', 'clojure', 'd', 'elixir', 'erlang', 'forth', 'hack', 'idris', 'j', 'julia', 'kdb+', 'labview',
                'logtalk', 'lolcode', 'mumps', 'nim', 'ocaml', 'pl/i', 'postscript', 'powershell', 'rpg', 'sas', 'sml',
                'tcl', 'turing', 'unicon', 'x10', 'xquery', 'zsh'
            ]
            pattern = re.compile(
                r'\b(?:' + '|'.join(re.escape(word) for word in code_keywords) + r')\b',
                re.IGNORECASE,
            )
            return bool(pattern.search(text))
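One detail of the keyword check is worth a closer look: `\b` only marks a boundary next to a word character, so keywords that end in a symbol (`c++`, `c#`, `f#`, `kdb+`) will usually fail to match when followed by a space or punctuation. The sketch below is one possible alternative, not the repository's code. It swaps `\b` for lookarounds and returns only the assistant name. `build_keyword_pattern`, `route`, and the shortened keyword list are hypothetical names chosen for illustration.

```python
import re


def build_keyword_pattern(keywords):
    """Compile one case-insensitive pattern matching any keyword as a whole token."""
    # Longest first, so 'javascript' is tried before 'java' in the alternation.
    ordered = sorted(set(keywords), key=len, reverse=True)
    alternation = "|".join(re.escape(k) for k in ordered)
    # (?<!\w) and (?!\w) act like \b for plain words but also work for 'c++' or 'c#'.
    return re.compile(r"(?<!\w)(?:" + alternation + r")(?!\w)", re.IGNORECASE)


# Shortened, illustrative keyword list (assumption: the real list is much longer).
CODE_PATTERN = build_keyword_pattern(
    ["python", "java", "javascript", "c++", "c#", "bug", "compile"]
)


def route(user_input, image_path=None):
    """Return the name of the assistant that should handle the input."""
    if image_path:
        return "VisionAssistant"
    if CODE_PATTERN.search(user_input):
        return "CodeAssistant"
    return "LanguageAssistant"


if __name__ == "__main__":
    print(route("How do I sort a vector in C++?"))
    print(route("Tell me a story about a dragon"))
    print(route("Describe this picture", image_path="cat.png"))
```

Single-letter keywords such as `r`, `d`, and `j`, or common words such as `go`, would still match ordinary sentences under either boundary rule, so a rule-based router like this stays a coarse first filter.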

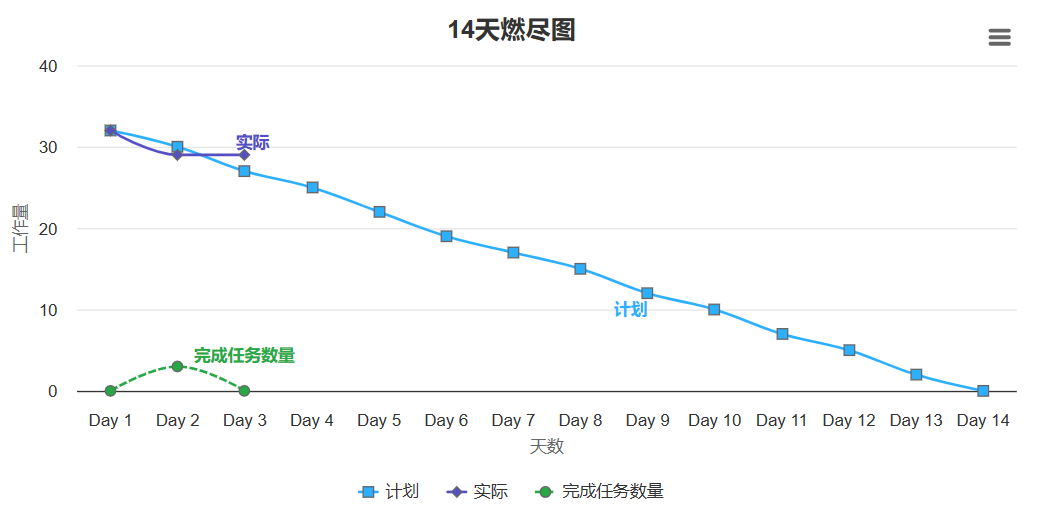

LANGCHAIN ROUTER

The output format is constrained with `with_structured_output`, so that LangChain (through the LLM's structured reply) decides which sub-route is called.

https://github.com/MadhanMohanReddy2301/SmartChainAgents/blob/master/router.py

    ### Router
    import os
    from typing import Literal

    from dotenv import load_dotenv
    load_dotenv()

    from langchain_core.prompts import ChatPromptTemplate
    from pydantic import BaseModel, Field


    # Data model
    class RouteQuery(BaseModel):
        """Route a user query to the most relevant datasource."""

        datasource: Literal["arxiv_search", "wiki_search", "llm_search"] = Field(
            ...,
            description="Given a user question choose to route it to wikipedia or arxiv_search or llm_search.",
        )


    # LLM with function call
    from langchain_groq import ChatGroq

    groq_api_key = os.getenv('GROQ_API_KEY')
    os.environ["GROQ_API_KEY"] = groq_api_key
    llm = ChatGroq(groq_api_key=groq_api_key, model_name="Gemma2-9b-It")
    structured_llm_router = llm.with_structured_output(RouteQuery)

    # Prompt
    system = """You are an expert at routing a user question to a arxiv_search or wikipedia or llm_search.
    The arxiv_search contains documents related to research papers about ai agents and LLMS.
    Use the wikipedia for questions on the human related infomation. Otherwise, use llm_search."""

    route_prompt = ChatPromptTemplate.from_messages(
        [
            ("system", system),
            ("human", "{question}"),
        ]
    )

    question_router = route_prompt | structured_llm_router

    # print(question_router.invoke({"question": "who is Sharukh Khan?"}))
    # print(question_router.invoke({"question": "What are the types of agent memory?"}))
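The pattern here is that the model may only answer with one label from a closed set, and ordinary code then dispatches on that label. The sketch below shows just that dispatch step, using only the standard library. `RouteDecision`, `fake_structured_llm`, `HANDLERS`, and `answer` are hypothetical stand-ins; in the real chain the decision would come from `question_router.invoke(...)` rather than from keyword rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict

ALLOWED_SOURCES = ("arxiv_search", "wiki_search", "llm_search")


@dataclass(frozen=True)
class RouteDecision:
    """Stand-in for RouteQuery: a single field restricted to a closed set of labels."""

    datasource: str

    def __post_init__(self):
        # Reject anything outside the closed set, as a Literal-typed field would.
        if self.datasource not in ALLOWED_SOURCES:
            raise ValueError(f"unknown datasource: {self.datasource!r}")


def fake_structured_llm(question: str) -> RouteDecision:
    """Hypothetical stub replacing the LLM call; real routing is decided by the model."""
    q = question.lower()
    if "paper" in q or "agent" in q:
        return RouteDecision("arxiv_search")
    if q.startswith("who is"):
        return RouteDecision("wiki_search")
    return RouteDecision("llm_search")


# One handler per label; each would wrap a retriever or chain in a real system.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "arxiv_search": lambda q: f"[arxiv] {q}",
    "wiki_search": lambda q: f"[wiki] {q}",
    "llm_search": lambda q: f"[llm] {q}",
}


def answer(question: str) -> str:
    """Ask the router for a label, then call the matching handler."""
    decision = fake_structured_llm(question)
    return HANDLERS[decision.datasource](question)


if __name__ == "__main__":
    print(answer("who is Sharukh Khan?"))
    print(answer("What are the types of agent memory?"))
```

Because the label set is closed, the dispatch table can be checked for completeness up front, which is the main practical benefit of constraining the output.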

RAG_SemanticRouting

https://github.com/UribeAlejandro/RAG_SemanticRouting/blob/main/src/main.py

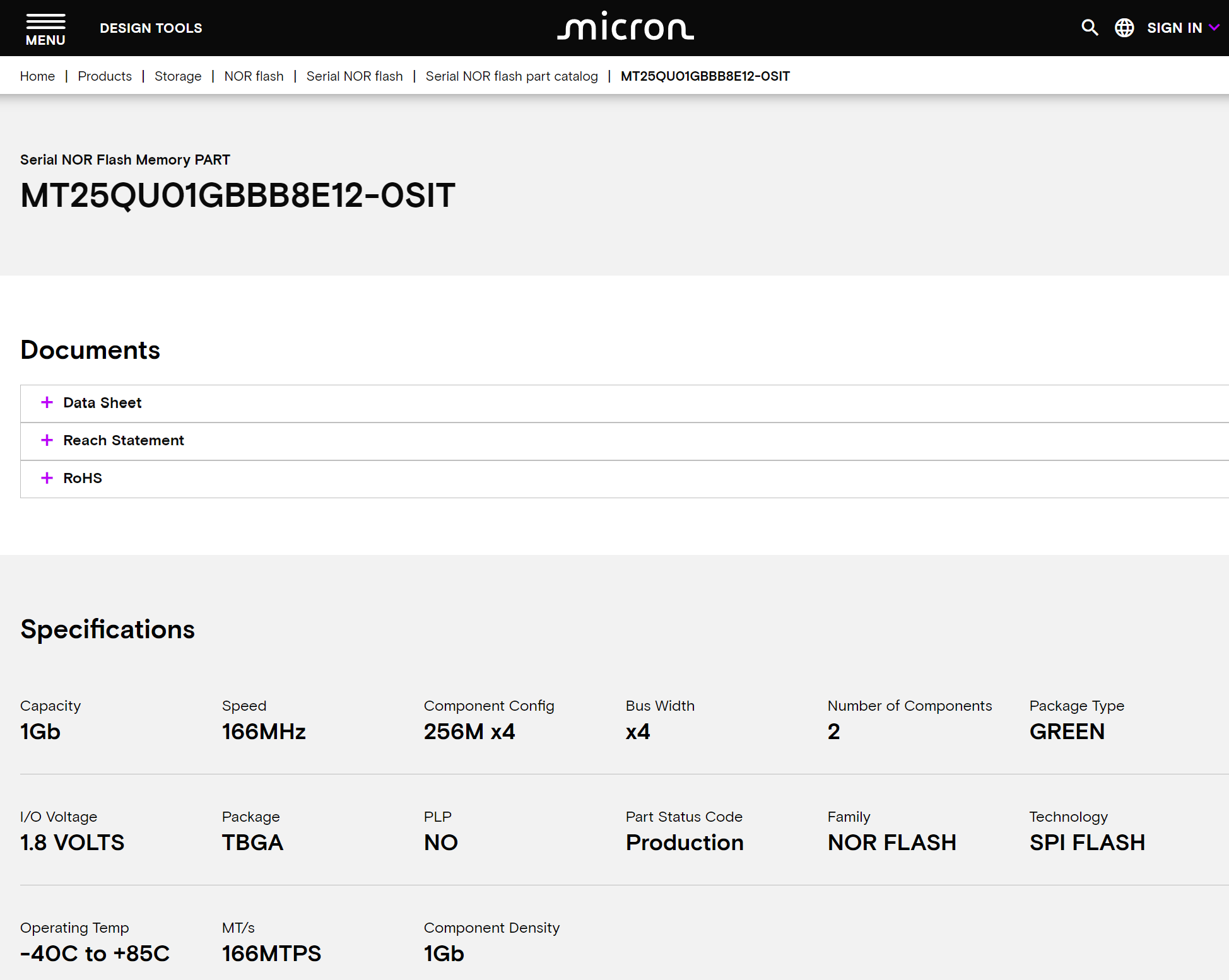

CSV-chat-and-code-Interpreter-agent

Sub-agents are invoked as tools.

https://github.com/FrankAffatigato/CSV-chat-and-code-Interpreter-agent/blob/master/main.py

    from typing import Any

    from dotenv import load_dotenv
    from langchain import hub
    from langchain_core.tools import Tool
    from langchain_openai import ChatOpenAI
    from langchain.agents import create_react_agent, AgentExecutor
    from langchain_experimental.tools import PythonREPLTool
    from langchain_experimental.agents.agent_toolkits import create_csv_agent

    load_dotenv()


    def main():
        print("Start")

        instructions = """You are an agent designed to write and execute python code to answer questions.
        You have access to a python REPL, which you can use to execute python code.
        If you get an error, debug your code and try again.
        Only use the output of your code to answer the question.
        You might know the answer without running any code, but you should still run the code to get the answer.
        If it does not seem like you can write code to answer the question, just return "I don't know" as the answer.
        """
        base_prompt = hub.pull("langchain-ai/react-agent-template")
        prompt = base_prompt.partial(instructions=instructions)

        tools = [PythonREPLTool()]
        python_agent = create_react_agent(
            prompt=prompt,
            llm=ChatOpenAI(temperature=0, model="gpt-4-turbo"),
            tools=tools,
        )

        python_agent_executor = AgentExecutor(agent=python_agent, tools=tools, verbose=True)

        # agent_executor.invoke(
        #     input={
        #         "input": """generate and save in current working directory 15 QRcodes
        #         that point to www.udemy.com/course/langchain, you have qrcode package installed already"""
        #     }
        # )

        csv_agent_executor = create_csv_agent(
            llm=ChatOpenAI(temperature=0, model="gpt-4"),
            path="episode_info.csv",
            verbose=True,
            # agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
            allow_dangerous_code=True,
        )

        # csv_agent.invoke(
        #     input={"input": "print the seasons by ascending order of the number of episodes they have?"
        #     }
        # )

        ################################ Router Grand Agent ########################################################

        def python_agent_executor_wrapper(original_prompt: str) -> dict[str, Any]:
            return python_agent_executor.invoke({"input": original_prompt})

        tools = [
            Tool(
                name="Python Agent",
                func=python_agent_executor_wrapper,
                description="""useful when you need to transform natural language to python and execute the python code,
                returning the results of the code execution
                DOES NOT ACCEPT CODE AS INPUT""",
            ),
            Tool(
                name="CSV Agent",
                func=csv_agent_executor.invoke,
                description="""useful when you need to answer question over episode_info.csv file,
                takes an input the entire question and returns the answer after running pandas calculations""",
            ),
        ]

        prompt = base_prompt.partial(instructions="")
        # Create router agent
        grand_agent = create_react_agent(
            prompt=prompt,
            llm=ChatOpenAI(temperature=0, model="gpt-4-turbo"),
            tools=tools,
        )
        grand_agent_executor = AgentExecutor(agent=grand_agent, tools=tools, verbose=True)

        print(
            grand_agent_executor.invoke(
                {
                    "input": "which season has the most episodes?",
                }
            )
        )

        # print(
        #     grand_agent_executor.invoke(
        #         {
        #             "input": "generate and save in current working directory 15 QR codes that point to www.udemy.com/course/langchain, you have qrcode package installed already",
        #         }
        #     )
        # )


    if __name__ == "__main__":
        main()
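Stripped of the LangChain machinery, the "sub-agent as tool" idea is that each sub-agent is wrapped in a record holding a name, a description, and a callable, and the router picks one record based on the descriptions. The sketch below is a minimal standard-library illustration. `SubAgentTool`, `choose_tool`, `run`, and the two stub agents are hypothetical, and the word-overlap chooser is only a stand-in for the ReAct LLM that makes this decision in the real program.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SubAgentTool:
    """A sub-agent exposed as a tool: the router only sees name and description."""

    name: str
    description: str
    func: Callable[[str], str]


def python_agent(prompt: str) -> str:
    # Stub: a real sub-agent would write and execute Python code here.
    return f"python agent ran: {prompt}"


def csv_agent(prompt: str) -> str:
    # Stub: a real sub-agent would run pandas calculations over the CSV here.
    return f"csv agent answered: {prompt}"


TOOLS: List[SubAgentTool] = [
    SubAgentTool("Python Agent", "write and execute python code", python_agent),
    SubAgentTool(
        "CSV Agent",
        "answer questions over episode_info.csv seasons and episodes",
        csv_agent,
    ),
]


def choose_tool(question: str, tools: List[SubAgentTool]) -> SubAgentTool:
    """Hypothetical stand-in for the router LLM: most description words shared wins."""
    words = set(question.lower().replace("?", "").split())
    return max(tools, key=lambda t: len(words & set(t.description.lower().split())))


def run(question: str) -> Tuple[str, str]:
    """Pick a tool, call its sub-agent, and report which one handled the question."""
    tool = choose_tool(question, TOOLS)
    return tool.name, tool.func(question)


if __name__ == "__main__":
    print(run("which season has the most episodes?"))
    print(run("generate 15 QR codes with python code"))
```

The design point carried over from the original is that the router never inspects a sub-agent's internals; the description string is the whole interface, so its wording largely determines routing quality.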

https://smith.langchain.com/hub/langchain-ai/react-agent-template

    {instructions}

    TOOLS:
    ------

    You have access to the following tools:

    {tools}

    To use a tool, please use the following format:

    ```
    Thought: Do I need to use a tool? Yes
    Action: the action to take, should be one of [{tool_names}]
    Action Input: the input to the action
    Observation: the result of the action
    ```

    When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

    ```
    Thought: Do I need to use a tool? No
    Final Answer: [your response here]
    ```

    Begin!

    Previous conversation history:
    {chat_history}

    New input: {input}
    {agent_scratchpad}
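The template fixes a text protocol: the model replies either with an `Action` / `Action Input` pair or with a `Final Answer`, and the executor has to tell the two apart. The sketch below shows one way such a reply could be parsed with the standard library. It is an illustration of the format, not LangChain's own parser, and `ToolCall`, `FinalAnswer`, and `parse_react_output` are hypothetical names.

```python
import re
from typing import NamedTuple, Union


class ToolCall(NamedTuple):
    """The model wants a tool to be run with the given input."""

    tool: str
    tool_input: str


class FinalAnswer(NamedTuple):
    """The model is done and has produced its reply to the user."""

    output: str


# 'Action:' line followed by an 'Action Input:' line, as laid out in the template.
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\n\s*Action Input:\s*(.*)", re.DOTALL)


def parse_react_output(text: str) -> Union[ToolCall, FinalAnswer]:
    """Classify one model reply written in the template's format."""
    if "Final Answer:" in text:
        return FinalAnswer(text.split("Final Answer:", 1)[1].strip())
    match = ACTION_RE.search(text)
    if match is None:
        raise ValueError(f"could not parse model output: {text!r}")
    return ToolCall(match.group(1).strip(), match.group(2).strip())


if __name__ == "__main__":
    step = (
        "Thought: Do I need to use a tool? Yes\n"
        "Action: CSV Agent\n"
        "Action Input: which season has the most episodes?"
    )
    print(parse_react_output(step))
    print(parse_react_output("Thought: Do I need to use a tool? No\nFinal Answer: done"))
```

In a full loop, a `ToolCall` result would be executed, its output appended to the scratchpad as an `Observation:` line, and the model called again until a `FinalAnswer` appears.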

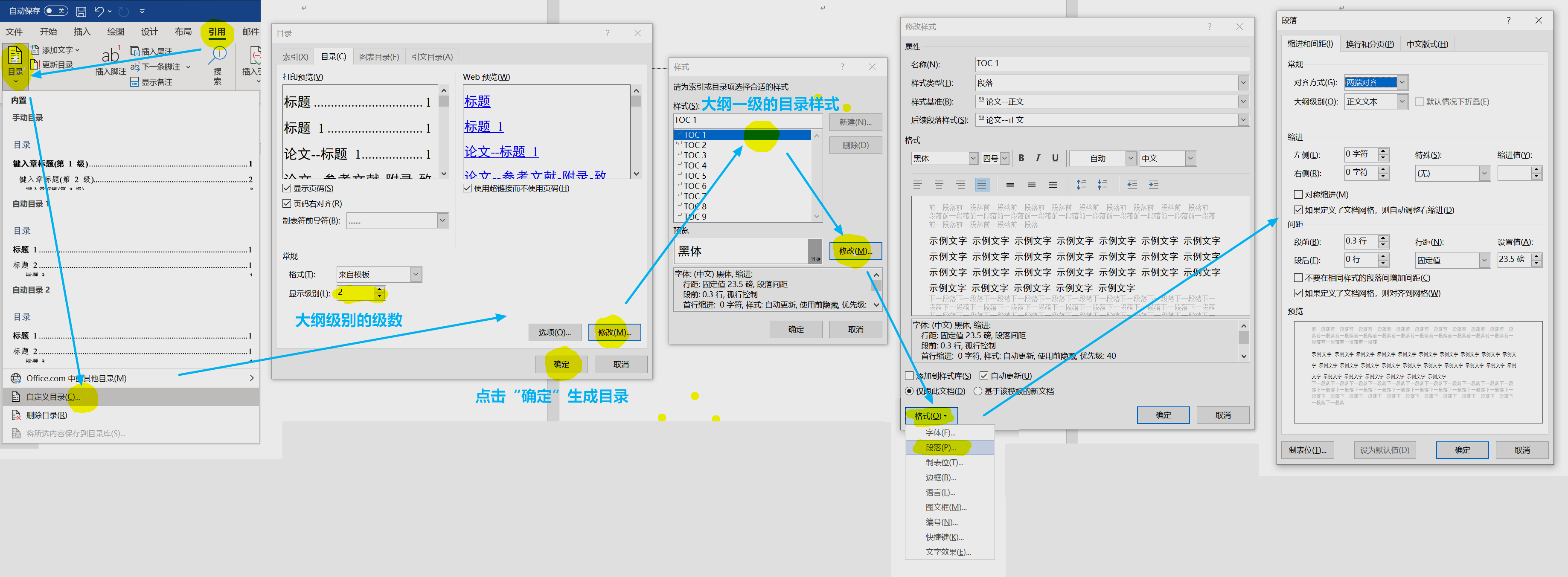

LangChain MultiPromptChain

https://github.com/hyder110/LangChain-Router-Chains-for-PDF-Q-A/blob/master/multi_prompt.py

    from langchain.chains.router import MultiPromptChain
    from langchain.llms import OpenAI

    physics_template = """You are a very smart physics professor. \
    You are great at answering questions about physics in a concise and easy to understand manner. \
    When you don't know the answer to a question you admit that you don't know.

    Here is a question:
    {input}"""

    math_template = """You are a very good mathematician. You are great at answering math questions. \
    You are so good because you are able to break down hard problems into their component parts, \
    answer the component parts, and then put them together to answer the broader question.

    Here is a question:
    {input}"""

    biology_template = """You are a skilled biology professor. \
    You are great at explaining complex biological concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    english_template = """You are a skilled english professor. \
    You are great at explaining complex literary concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    cs_template = """You are a proficient computer scientist. \
    You can explain complex algorithms and data structures in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    python_template = """You are a skilled python programmer. \
    You can explain complex algorithms and data structures in simple terms. \
    When you don't know the answer to a question, you admit it.

    here is a question:
    {input}"""

    accountant_template = """You are a skilled accountant. \
    You can explain complex accounting concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    lawyer_template = """You are a skilled lawyer. \
    You can explain complex legal concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    teacher_template = """You are a skilled teacher. \
    You can explain complex educational concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    engineer_template = """You are a skilled engineer. \
    You can explain complex engineering concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    psychologist_template = """You are a skilled psychologist. \
    You can explain complex psychological concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    scientist_template = """You are a skilled scientist. \
    You can explain complex scientific concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    economist_template = """You are a skilled economist. \
    You can explain complex economic concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    architect_template = """You are a skilled architect. \
    You can explain complex architectural concepts in simple terms. \
    When you don't know the answer to a question, you admit it.

    Here is a question:
    {input}"""

    prompt_infos = [
        ("physics", "Good for answering questions about physics", physics_template),
        ("math", "Good for answering math questions", math_template),
        ("biology", "Good for answering questions about biology", biology_template),
        ("english", "Good for answering questions about english", english_template),
        ("cs", "Good for answering questions about computer science", cs_template),
        ("python", "Good for answering questions about python", python_template),
        ("accountant", "Good for answering questions about accounting", accountant_template),
        ("lawyer", "Good for answering questions about law", lawyer_template),
        ("teacher", "Good for answering questions about education", teacher_template),
        ("engineer", "Good for answering questions about engineering", engineer_template),
        ("psychologist", "Good for answering questions about psychology", psychologist_template),
        ("scientist", "Good for answering questions about science", scientist_template),
        ("economist", "Good for answering questions about economics", economist_template),
        ("architect", "Good for answering questions about architecture", architect_template),
    ]

    chain = MultiPromptChain.from_prompts(OpenAI(), *zip(*prompt_infos), verbose=True)

    # get user question
    while True:
        question = input("Ask a question: ")
        print(chain.run(question))
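The call `MultiPromptChain.from_prompts(OpenAI(), *zip(*prompt_infos), verbose=True)` relies on `zip(*prompt_infos)` to transpose a list of `(name, description, template)` rows into three parallel tuples, which are then passed as three positional arguments. The short sketch below shows only that transposition, with two placeholder rows standing in for the full list.

```python
# Two shortened rows in the same (name, description, template) layout.
prompt_infos = [
    ("physics", "Good for answering questions about physics", "PHYSICS: {input}"),
    ("math", "Good for answering math questions", "MATH: {input}"),
]

# zip(*rows) turns N rows of 3 fields into 3 tuples of N fields each.
names, descriptions, templates = zip(*prompt_infos)

print(names)         # ('physics', 'math')
print(descriptions)  # ('Good for answering questions about physics', 'Good for answering math questions')
print(templates)     # ('PHYSICS: {input}', 'MATH: {input}')
```

Keeping each prompt's three fields together in one row makes the list easy to extend, at the cost of the slightly cryptic `*zip(*...)` at the call site.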

https://python.langchain.com.cn/docs/modules/chains/foundational/router

    from langchain.prompts import PromptTemplate
    from langchain.chains.router import MultiPromptChain
    from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
    from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE

    # Excerpt: `llm`, `destination_chains` and `default_chain` are defined earlier on the linked page.
    # Here `prompt_infos` is a list of dicts with "name" and "description" keys,
    # not the list of tuples used in the multi_prompt.py example.
    destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
    destinations_str = "\n".join(destinations)
    router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)
    router_prompt = PromptTemplate(
        template=router_template,
        input_variables=["input"],
        output_parser=RouterOutputParser(),
    )
    router_chain = LLMRouterChain.from_llm(llm, router_prompt)

    chain = MultiPromptChain(
        router_chain=router_chain,
        destination_chains=destination_chains,
        default_chain=default_chain,
        verbose=True,
    )

    print(chain.run("What is black body radiation?"))
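This excerpt indexes `prompt_infos` by key (`p['name']`, `p['description']`), whereas the multi_prompt.py example stores each entry as a tuple. The sketch below shows one way to bridge the two shapes, and how a destination name could be dispatched with a default fallback. It uses plain functions as stub chains. The `"prompt_template"` key, `make_stub_chain`, `default_chain`, and `dispatch` are assumptions made for illustration, and no LLM is involved.

```python
from typing import Callable, Dict

# Tuple-form entries, shortened to two rows.
prompt_info_tuples = [
    ("physics", "Good for answering questions about physics", "You are a physics professor. {input}"),
    ("math", "Good for answering math questions", "You are a mathematician. {input}"),
]

# Convert to the dict form that the router excerpt indexes by key.
prompt_infos = [
    {"name": name, "description": description, "prompt_template": template}
    for name, description, template in prompt_info_tuples
]

# Same construction as in the excerpt: one "name: description" line per destination.
destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
destinations_str = "\n".join(destinations)


def make_stub_chain(template: str) -> Callable[[str], str]:
    """Stub for a destination chain: it only fills in the prompt template."""
    return lambda question: template.format(input=question)


destination_chains: Dict[str, Callable[[str], str]] = {
    p["name"]: make_stub_chain(p["prompt_template"]) for p in prompt_infos
}


def default_chain(question: str) -> str:
    """Stub fallback used when no destination fits."""
    return f"(default) {question}"


def dispatch(destination: str, question: str) -> str:
    """Send the question to the named destination, or to the default chain."""
    chain = destination_chains.get(destination, default_chain)
    return chain(question)


if __name__ == "__main__":
    print(destinations_str)
    print(dispatch("physics", "What is black body radiation?"))
    print(dispatch("DEFAULT", "Hello there"))
```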

https://zhuanlan.zhihu.com/p/642971042
