Baseline, with only:

```python
import time

time.sleep(10)
```
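The `time.sleep(10)` keeps the process alive long enough to read its memory in an external tool. As an alternative, the process can report its own peak resident memory. The sketch below makes three assumptions. It needs a Unix-like system, because the standard-library `resource` module does not exist on Windows. It follows Linux's convention that `ru_maxrss` is in KiB (macOS reports bytes). The helper names `peak_rss_mib` and `import_cost_mib` are hypothetical.

```python
import importlib
import resource


def peak_rss_mib() -> float:
    """Peak resident set size of this process so far, in MiB.

    Assumes Linux, where ru_maxrss is reported in KiB (macOS uses bytes).
    """
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024


def import_cost_mib(module_name: str) -> float:
    """Growth of the peak RSS while importing module_name.

    ru_maxrss is a high-water mark, so the result is never negative. It is only
    meaningful for the first import of a module, measured in a fresh process.
    """
    before = peak_rss_mib()
    importlib.import_module(module_name)
    return peak_rss_mib() - before


print(f"peak RSS so far: {peak_rss_mib():.1f} MiB")
# In a fresh interpreter, import_cost_mib("torch") would measure the torch import.
print(f"import json: +{import_cost_mib('json'):.1f} MiB")
```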

Then add, in front of it:

```python
from torch.utils.data import Dataset
```

```python
import torch

# Check whether CUDA is available
if torch.cuda.is_available():
    print("CUDA is available!")
    print(f"Device count: {torch.cuda.device_count()}")
    print(f"Current device: {torch.cuda.current_device()}")
    print(f"Device name: {torch.cuda.get_device_name(torch.cuda.current_device())}")
else:
    print("CUDA is not available.")

import time

time.sleep(10)
```

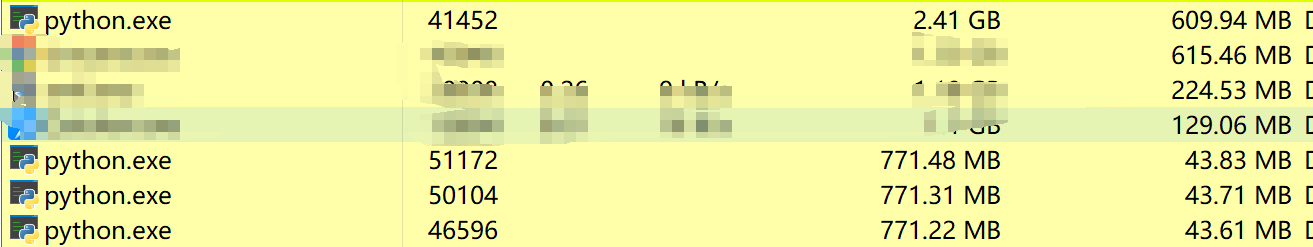

So `import torch` and `from torch.utils.data import Dataset` appear to use the same amount of memory.
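Importing a submodule also imports each of its parent packages, and Python registers every one of them in `sys.modules`. So `from torch.utils.data import Dataset` has to load the `torch` package itself. The sketch below is a stdlib-only illustration, with `xml.etree.ElementTree` standing in for `torch.utils.data`. The helper `loaded_parents` is hypothetical.

```python
import sys

# Import one class from a nested submodule, like `from torch.utils.data import Dataset`.
from xml.etree.ElementTree import Element


def loaded_parents(dotted_name: str) -> dict:
    """For each package along dotted_name, report whether it is in sys.modules."""
    parts = dotted_name.split(".")
    names = [".".join(parts[: i + 1]) for i in range(len(parts))]
    return {name: name in sys.modules for name in names}


# Every parent package was loaded too, not just the submodule.
print(loaded_parents("xml.etree.ElementTree"))
# → {'xml': True, 'xml.etree': True, 'xml.etree.ElementTree': True}
```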

Next, importing from `transformers`, with the `torch` block commented out:

```python
from transformers import AutoModelForZeroShotImageClassification, AutoProcessor
# import torch
#
# # Check whether CUDA is available
# if torch.cuda.is_available():
#     print("CUDA is available!")
#     print(f"Device count: {torch.cuda.device_count()}")
#     print(f"Current device: {torch.cuda.current_device()}")
#     print(f"Device name: {torch.cuda.get_device_name(torch.cuda.current_device())}")
# else:
#     print("CUDA is not available.")

import time

time.sleep(10)
```

Uncommenting the `torch` block in the middle leaves memory usage almost unchanged.
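A plausible explanation assumes that the `transformers` import above already pulls in `torch`. Once a module is in `sys.modules`, a later `import` of it is only a cache lookup, and the module's code does not run a second time. The stdlib sketch below shows that caching, with `json` standing in for `torch`.

```python
import importlib
import sys

import json  # first import: the module's code runs and the module is cached in sys.modules

cached = sys.modules["json"]

# Importing again (plain `import` or importlib) returns the cached module object
# instead of executing the module's code again.
import json as json_again  # noqa: E402
reloaded = importlib.import_module("json")

print(json_again is cached, reloaded is cached)  # → True True
```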

Multiprocessing test

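Variant 1, with `transformers` imported at module level:

```python
from transformers import AutoModelForZeroShotImageClassification, AutoProcessor

import time


def apply_test(s):
    time.sleep(3)
    print(s)


if __name__ == '__main__':
    import multiprocessing

    pool = multiprocessing.Pool(3)  # 3 worker processes
    for i in range(3):
        res = pool.apply_async(apply_test, [i])
    # Keep the parent alive while the workers run (and while memory is inspected)
    time.sleep(20)
```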

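Variant 2, with the `transformers` import moved inside the `if __name__ == '__main__':` guard:

```python
import time


def apply_test(s):
    time.sleep(3)
    print(s)


if __name__ == '__main__':
    from transformers import AutoModelForZeroShotImageClassification, AutoProcessor
    import multiprocessing

    pool = multiprocessing.Pool(3)  # 3 worker processes
    for i in range(3):
        res = pool.apply_async(apply_test, [i])
    # Keep the parent alive while the workers run (and while memory is inspected)
    time.sleep(20)
```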
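The two variants differ only in where `transformers` is imported. Under the `spawn` start method (the default on Windows and macOS), each worker starts a fresh interpreter and, roughly speaking, re-runs the parent's main script under the name `__mp_main__`. Top-level imports are therefore repeated in every worker, while code under `if __name__ == '__main__':` is skipped there. The sketch below imitates this with `runpy.run_path`. It is a simplified stand-in, not the actual code inside `multiprocessing`, and the script text and the helper `run_as` are hypothetical.

```python
import os
import runpy
import tempfile
import textwrap

# Hypothetical main script: one top-level import, one import under the main guard.
SCRIPT = textwrap.dedent(
    """
    import json                      # top level: runs in the parent and in spawned workers
    if __name__ == "__main__":
        import decimal               # guarded: runs only in the parent
        GUARDED_IMPORT = decimal
    TOP_LEVEL_IMPORT = json
    """
)


def run_as(run_name: str) -> dict:
    """Execute SCRIPT from a temporary file under the given __name__ and return its globals."""
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as f:
        f.write(SCRIPT)
        path = f.name
    try:
        return runpy.run_path(path, run_name=run_name)
    finally:
        os.remove(path)


parent = run_as("__main__")     # how the parent process runs the script
worker = run_as("__mp_main__")  # roughly how a spawned worker re-runs it
print("GUARDED_IMPORT" in parent, "GUARDED_IMPORT" in worker)      # → True False
print("TOP_LEVEL_IMPORT" in parent, "TOP_LEVEL_IMPORT" in worker)  # → True True
```

With the `fork` start method (long the default on Linux), workers are forked copies of the parent and do not re-run the script. The placement of the import therefore matters mostly under `spawn`.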