LVS (DR mode) + httpd + keepalived High-Availability Deployment

Reference: http://blog.csdn.net/m582445672/article/details/7670015

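a. keepalived started as an extension project of LVS, so the two are highly compatible. This is probably why deploying keepalived is simpler than deploying comparable tools.

b. Health checks on the members of the server pool isolate failed machines or services.

c. Failover between the load balancers is implemented with a VRRPv2 (Virtual Router Redundancy Protocol) stack.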

Part 1: Deploying LVS in DR mode

Setup steps:

1. Basic information

| Host | Interface: IP / OS | Hostname | Notes |
|------|--------------------|----------|-------|
| DR | ens33: 192.168.1.87 / CentOS 7.9 | dr1 | |
| RS1 | ens33: 192.168.1.82 / CentOS 7.9 | k8snode2 | httpd on port 80 |
| RS2 | ens33: 192.168.1.80 / CentOS 7.9 | k8smaster | httpd on port 80 |
| VIP | 192.168.1.100 | | |

2. Disable the firewall and SELinux, sync time, set the hostname

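# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config   # permanent (takes effect after a reboot)
setenforce 0                                          # temporary (current boot only)
# Sync time
yum install ntpdate -y
ntpdate time.windows.com
# Set the hostname (dr1 here; use each node's own name)
hostnamectl set-hostname dr1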
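The `sed` command above replaces the first `enforcing` on every matching line, so it also edits comment lines, and it changes nothing if the file says `SELINUX=permissive`. A more targeted sketch, assuming the stock CentOS 7 file layout; `disable_selinux_config` is a hypothetical helper name, and the path argument exists only so it can be tried on a copy first:

```bash
#!/bin/bash
# Set SELINUX=disabled on the directive line only.
# SELINUXTYPE= and the explanatory comment lines are left untouched.
# Default path is the stock CentOS 7 location; pass another file to test safely.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root):
#   disable_selinux_config && setenforce 0
```

As in the original, the file change only takes effect after a reboot; `setenforce 0` switches the running system to permissive mode in the meantime.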

3. Test the HTTP service

[root@k8snode2 ~]# yum install httpd
[root@k8snode2 ~]# systemctl start httpd
[root@k8snode2 ~]# systemctl status httpd
[root@k8snode2 ~]# cat /var/www/html/index.html
k8snode2 192.168.1.82

Verify:

[root@k8smaster ~]# curl 192.168.1.80

k8smaster 192.168.1.80

[root@k8smaster ~]# curl 192.168.1.82

k8snode2 192.168.1.82
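Each index page contains the RS hostname and IP so that curl output shows which backend answered. A small sketch that generates such a page, assuming the stock httpd docroot `/var/www/html` and that the first address printed by `hostname -I` is the ens33 address; `write_rs_index` is a hypothetical helper name:

```bash
#!/bin/bash
# Write "<hostname> <ip>" into the httpd index page.
# Arguments default to this host's name, its first IPv4 address and the stock docroot.
write_rs_index() {
    local name="${1:-$(hostname)}"
    local ip="${2:-$(hostname -I | awk '{print $1}')}"
    local docroot="${3:-/var/www/html}"
    echo "$name $ip" > "$docroot/index.html"
}

# Usage on each RS (as root):
#   write_rs_index
```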

4. Install ipvsadm

[root@dr1 ~]# yum install ipvsadm
[root@dr1 ~]# ipvsadm        # running ipvsadm once loads the ip_vs module into the kernel

Check that the module is loaded; any output means it loaded successfully:

[root@dr1 ~]# lsmod | grep ip_vs
ip_vs_wrr              12697  0
ip_vs_rr               12600  1
ip_vs                 145458  5 ip_vs_rr,ip_vs_wrr
nf_conntrack          139264  1 ip_vs
libcrc32c              12644  3 xfs,ip_vs,nf_conntrack
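`lsmod | grep ip_vs` also matches scheduler modules such as `ip_vs_rr`. A minimal check for the core module itself, assuming you prefer to load it explicitly with `modprobe` rather than relying on the first `ipvsadm` run; `is_ipvs_loaded` is a hypothetical helper that reads lsmod-style text on stdin:

```bash
#!/bin/bash
# Succeeds only if a module named exactly "ip_vs" appears in the lsmod output on stdin.
is_ipvs_loaded() {
    awk '$1 == "ip_vs" { found = 1 } END { exit !found }'
}

# Usage (as root):
#   modprobe ip_vs && lsmod | is_ipvs_loaded && echo "ip_vs loaded"
```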

5. Create and run the scripts

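DR host configuration:

[root@dr1 ~]# cat lvs_dr.sh
#!/bin/bash
# Enable IP forwarding
echo 1 > /proc/sys/net/ipv4/ip_forward
ipv=/sbin/ipvsadm
vip=192.168.1.100
rs1=192.168.1.82
rs2=192.168.1.80
# Bind the VIP to alias interface ens33:0 and add a host route for it
# (ifconfig and route come from the net-tools package)
ifconfig ens33:0 $vip broadcast $vip netmask 255.255.255.255 up
route add -host $vip dev ens33:0
# Clear the IPVS table, then create the virtual service with round-robin (rr) scheduling
$ipv -C
$ipv -A -t $vip:80 -s rr
# Add both real servers in DR mode (-g, gatewaying)
$ipv -a -t $vip:80 -r $rs1:80 -g
$ipv -a -t $vip:80 -r $rs2:80 -g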
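For hosts without net-tools, the same DR setup can be expressed with iproute2. This is a sketch, not the author's script: `setup_lvs_dr`, `run` and `DRY_RUN` are hypothetical names, the real-server list is taken from the arguments, and the separate `route add -host` step is omitted on the assumption that a /32 address on the interface already makes the VIP local (worth verifying in your environment). With `DRY_RUN=1` the commands are only printed:

```bash
#!/bin/bash
# Print the command instead of running it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# setup_lvs_dr VIP DEV SCHEDULER RS_IP...
setup_lvs_dr() {
    local vip=$1 dev=$2 sched=$3 rs
    shift 3
    # Same effect as echo 1 > /proc/sys/net/ipv4/ip_forward
    run sysctl -w net.ipv4.ip_forward=1
    # Bind the VIP as a /32 address on DEV, labelled DEV:0 like ens33:0 in lvs_dr.sh
    run ip addr add "$vip/32" brd "$vip" dev "$dev" label "$dev:0"
    # Flush the IPVS table, then create the virtual service on port 80
    run ipvsadm -C
    run ipvsadm -A -t "$vip:80" -s "$sched"
    # -g selects gatewaying, i.e. direct routing (DR) mode
    for rs in "$@"; do
        run ipvsadm -a -t "$vip:80" -r "$rs:80" -g
    done
}

# Usage (as root, values from the table above):
#   setup_lvs_dr 192.168.1.100 ens33 rr 192.168.1.82 192.168.1.80
```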

Real server configuration (identical on both real servers):

[root@k8snode2 ~]# cat lvs_dr_rs.sh
#!/bin/bash
vip=192.168.1.100
# Bind the VIP to loopback alias lo:0 so the RS accepts packets addressed to the VIP
ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up
route add -host $vip dev lo:0
# Keep the RS from answering or announcing ARP for the VIP, so only the DR owns it on the LAN
echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
echo 1 > /proc/sys/net/ipv4/conf/ens33/arp_ignore
echo 2 > /proc/sys/net/ipv4/conf/ens33/arp_announce
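The `echo > /proc/...` writes are lost on reboot. A sketch that prints equivalent sysctl settings so they can be saved under `/etc/sysctl.d/`; `rs_arp_sysctl` and the file name `90-lvs-rs.conf` are hypothetical choices. `arp_ignore=1` makes the kernel answer ARP only for addresses configured on the receiving interface (so not for the VIP on `lo`), and `arp_announce=2` makes it pick the best local address for the target as the ARP source instead of the VIP:

```bash
#!/bin/bash
# Print sysctl lines matching the four echo commands in lvs_dr_rs.sh.
rs_arp_sysctl() {
    local dev="${1:-ens33}" iface
    for iface in all "$dev"; do
        echo "net.ipv4.conf.$iface.arp_ignore = 1"
        echo "net.ipv4.conf.$iface.arp_announce = 2"
    done
}

# Usage on each RS (as root):
#   rs_arp_sysctl ens33 > /etc/sysctl.d/90-lvs-rs.conf && sysctl --system
```

The VIP on `lo:0` is not persistent either; it still needs to be re-added at boot, for example by running `lvs_dr_rs.sh` from a startup unit.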

Run the scripts on the real servers and on the DR:

[root@k8smaster ~]# sh lvs_dr_rs.sh

[root@k8smaster ~]# ip a |grep 192.168.1.100

inet 192.168.1.100/32 brd 192.168.1.100 scope global lo:0

[root@dr1 ~]# sh lvs_dr.sh

[root@dr1 ~]# ip a |grep 192.168.1.100

inet 192.168.1.100/32 brd 192.168.1.100 scope global ens33:0

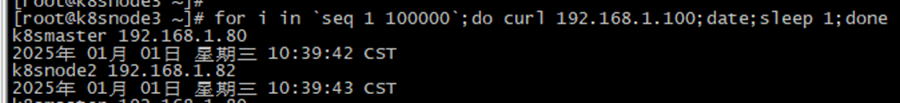
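To confirm where the VIP is bound (lo:0 on each RS, ens33:0 on the DR, as in the output above) without eyeballing `ip a`, a small filter can print the label of the matching address. `vip_label` is a hypothetical helper; it assumes the label or interface name is the last field of the `inet` line, as in the output shown:

```bash
#!/bin/bash
# Read `ip a` output on stdin and print the label carrying the given address.
vip_label() {
    local vip=$1
    # Match "inet VIP/prefix" exactly, then print the last field (the label)
    awk -v vip="$vip" '$1 == "inet" && index($2, vip "/") == 1 { print $NF }'
}

# Usage:
#   ip a | vip_label 192.168.1.100
```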

6. Test the result

[root@client ~]# for i in `seq 1 100000`;do curl 192.168.1.100;date;sleep 1;done

[root@dr1 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.1.100:80 rr persistent 50
  -> 192.168.1.80:80              Route   3      0          37
  -> 192.168.1.82:80              Route   3      0          18

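This completes Part 1: LVS load balancing is working. One tip: when testing from a Windows client, clear the ARP cache (arp -d) after each request so the round-robin rotation is easier to observe.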
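Counting responses per backend makes the distribution easier to judge than scrolling through the curl loop. A sketch; `tally_backends` is a hypothetical helper that reads one response line per request on stdin. Note that with persistence enabled (`persistent 50` in the ipvsadm output above), requests from one client stick to one RS until the timeout expires, so a lopsided tally from a single client is expected:

```bash
#!/bin/bash
# Print "count response" for each distinct response line, most frequent first.
tally_backends() {
    grep -v '^[[:space:]]*$' | sort | uniq -c | sort -rn |
        awk '{ count = $1; sub(/^[ \t]*[0-9]+[ \t]+/, ""); print count, $0 }'
}

# Usage from a client (20 requests is an arbitrary choice):
#   for i in $(seq 1 20); do curl -s --max-time 2 192.168.1.100; done | tally_backends
```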

Part 2: High-availability deployment

7. Install and verify the new node

Add a new node, dr2, so that LVS runs in a high-availability pair.

Its environment and configuration are identical to dr1's.

[root@k8snode3 ~]# for i in `seq 1 100000`;do curl 192.168.1.100;date;sleep 1;done

[root@dr1 ~]# systemctl restart network     # restart networking; the VIP on dr1 drops temporarily

[root@dr2 ~]# ipvsadm -Ln     # check the table; make sure iptables and firewalld are stopped
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.1.100:80 rr
  -> 192.168.1.80:80              Route   1      0          47
  -> 192.168.1.82:80              Route   1      0          47

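As shown above, dr2 can serve on its own after dr1 fails, but there is no automatic failover yet. This step only confirms that both DR nodes forward traffic correctly.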

8. Install keepalived

[root@dr1 ~]# yum install -y keepalived

[root@dr1 ~]# vim /etc/keepalived/keepalived.conf

[root@dr1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_HTTP_TEST
}
# VRRP: dr1 is MASTER (priority 100) and holds the VIP while healthy
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.100
    }
}
# IPVS virtual service managed by keepalived (takes the place of the ipvsadm rules in lvs_dr.sh)
virtual_server 192.168.1.100 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    persistence_timeout 50
    protocol TCP
    real_server 192.168.1.82 80 {
        weight 3
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
    real_server 192.168.1.80 80 {
        weight 3
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}

[root@dr2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_HTTP_TEST
}
vrrp_instance VI_1 {
    # Differs from dr1: state BACKUP and a lower priority
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 80
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.100
    }
}
virtual_server 192.168.1.100 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    persistence_timeout 50
    protocol TCP
    real_server 192.168.1.82 80 {
        weight 3
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
    real_server 192.168.1.80 80 {
        weight 3
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}

[root@dr1 ~]# systemctl start keepalived
[root@dr2 ~]# systemctl start keepalived

9. Testing

Start testing: access the VIP with curl and watch the output, while following the keepalived log (tail -f /var/log/messages).

To take a DR down and bring it back, simply restart its network; this can also be scripted.
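A minimal sketch of such a drill script, assuming you stop and start keepalived on the MASTER (as in test b) rather than restart the network. `failover_drill`, `run`, `DRY_RUN` and the default 30-second wait are hypothetical choices; with `DRY_RUN=1` the commands are only printed:

```bash
#!/bin/bash
# Print the command instead of running it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# failover_drill [WAIT_SECONDS]
failover_drill() {
    local wait_s="${1:-30}"
    run systemctl stop keepalived
    # Leave the BACKUP several advert_int periods (3s in these configs) to take over
    run sleep "$wait_s"
    run systemctl start keepalived
}

# Usage on dr1 (as root) while the curl loop runs on a client:
#   failover_drill 30
```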

a. Stop the httpd service on RS1 (k8snode2, 192.168.1.82), then start it again

[root@k8snode2 ~]# systemctl stop httpd

[root@k8snode2 ~]# systemctl start httpd

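[root@dr1 ~]# tail -f /var/log/messages

In the log below, the first probe failed at 11:20:12 and was retried 3 times; after 4 failed probes in total, the node was removed from the pool. At 11:20:51 the probe succeeded again and the node was added back to the virtual service.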

Jan 1 11:13:52 dr1 Keepalived_vrrp[2304]: Sending gratuitous ARP on ens33 for 192.168.1.100
Jan 1 11:20:12 dr1 Keepalived_healthcheckers[2303]: TCP connection to [192.168.1.82]:80 failed.
Jan 1 11:20:15 dr1 Keepalived_healthcheckers[2303]: TCP connection to [192.168.1.82]:80 failed.
Jan 1 11:20:18 dr1 Keepalived_healthcheckers[2303]: TCP connection to [192.168.1.82]:80 failed.
Jan 1 11:20:21 dr1 Keepalived_healthcheckers[2303]: TCP connection to [192.168.1.82]:80 failed.
Jan 1 11:20:21 dr1 Keepalived_healthcheckers[2303]: Check on service [192.168.1.82]:80 failed after 3 retry.
Jan 1 11:20:21 dr1 Keepalived_healthcheckers[2303]: Removing service [192.168.1.82]:80 from VS [192.168.1.100]:80
Jan 1 11:20:51 dr1 Keepalived_healthcheckers[2303]: TCP connection to [192.168.1.82]:80 success.
Jan 1 11:20:51 dr1 Keepalived_healthcheckers[2303]: Adding service [192.168.1.82]:80 to VS [192.168.1.100]:80

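Meanwhile, watch the VIP test: while RS1 is down, all requests go to the surviving RS2; once RS1 recovers, requests are distributed to both again.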

[root@k8snode3 ~]# for i in `seq 1 100000`;do curl 192.168.1.100;date;sleep 1;done

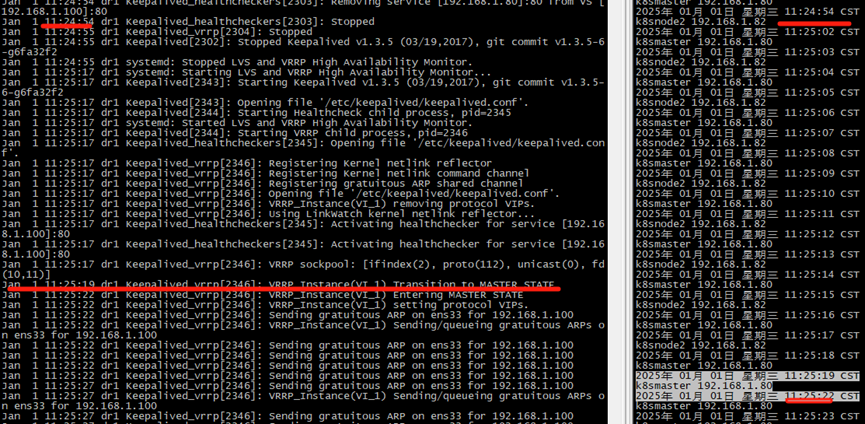
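The timing in the health-check log follows from the TCP_CHECK settings: the failures at 11:20:12, :15, :18 and :21 are 3 seconds apart (`delay_before_retry 3`), the RS is removed at 11:20:21 after the 3 retries, and it is re-added at 11:20:51, 30 seconds later. A sketch that extracts such out-of-pool windows from the log; `rs_downtime` is a hypothetical helper, and it assumes removal and re-add fall on the same day because the syslog timestamps carry only HH:MM:SS:

```bash
#!/bin/bash
# Read keepalived healthchecker log lines on stdin and print, for each real
# server, when it was removed, when it was re-added, and the gap in seconds.
rs_downtime() {
    awk '
    # Convert HH:MM:SS to seconds since midnight
    function secs(t,   p) { split(t, p, ":"); return p[1] * 3600 + p[2] * 60 + p[3] }
    # The first [ip]:port on the line is the real server; $3 is the time field
    /Removing service/ {
        match($0, /\[[0-9.]+\]:[0-9]+/)
        down[substr($0, RSTART, RLENGTH)] = $3
    }
    /Adding service/ {
        match($0, /\[[0-9.]+\]:[0-9]+/)
        rs = substr($0, RSTART, RLENGTH)
        if (rs in down) {
            printf "%s down %s -> %s (%ds)\n", rs, down[rs], $3, secs($3) - secs(down[rs])
            delete down[rs]
        }
    }'
}

# Usage on the MASTER:
#   grep Keepalived_healthcheckers /var/log/messages | rs_downtime
```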

b. Stop keepalived on the MASTER node, then start it again

[root@dr1 ~]# systemctl stop keepalived

[root@dr1 ~]# systemctl start keepalived

[root@dr2 ~]# tail -f /var/log/message…

Jan 1 11:20:51 localhost Keepalived_healthcheckers[2490]: Adding service [192.168.1.82]:80 to VS [192.168.1.100]:80

Jan 1 11:24:55 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: Sending gratuitous ARP on ens33 for 192.168.1.100

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens33 for 192.168.1.100

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: Sending gratuitous ARP on ens33 for 192.168.1.100

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: Sending gratuitous ARP on ens33 for 192.168.1.100

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: Sending gratuitous ARP on ens33 for 192.168.1.100

Jan 1 11:24:58 localhost Keepalived_vrrp[2491]: Sending gratuitous ARP on ens33 for 192.168.1.100

...

Jan 1 11:25:19 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) Received advert with higher priority 100, ours 80

Jan 1 11:25:19 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 1 11:25:19 localhost Keepalived_vrrp[2491]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 1 12:01:01 localhost systemd: Started Session 5 of user root.

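In the log above (dr2's view, "ours 80"), dr2 became MASTER when keepalived stopped on dr1, then returned to BACKUP on receiving dr1's higher-priority (100) advertisement. Because keepalived runs in preemptive mode, the VIP floats back to dr1 once it restarts.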
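When rerunning this test, a compact state timeline is easier to read than the full gratuitous-ARP noise. A sketch; `vrrp_timeline` is a hypothetical helper that reads keepalived log lines on stdin and prints time, host and the state entered:

```bash
#!/bin/bash
# Print "time host STATE" for each VRRP "Entering ... STATE" log line.
vrrp_timeline() {
    awk '/Keepalived_vrrp/ && /Entering (MASTER|BACKUP|FAULT) STATE/ {
        match($0, /Entering [A-Z]+ STATE/)
        # Strip the leading "Entering " (9 chars) and the trailing " STATE" (6 chars)
        print $3, $4, substr($0, RSTART + 9, RLENGTH - 15)
    }'
}

# Usage on either director:
#   grep Keepalived_vrrp /var/log/messages | vrrp_timeline
```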

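Key point: with a single vrrp_instance, the configuration files of the MASTER and BACKUP load balancers differ in only 3 places: the global router_id, the vrrp_instance state, and the vrrp_instance priority. (In the configs above, router_id is LVS_HTTP_TEST on both nodes, so only state and priority actually differ.)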
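One way to check this on a real pair is to diff the two files with indentation ignored. A sketch; `kad_diff` is a hypothetical helper, and it assumes dr2's file has first been copied to dr1 (for example `scp dr2:/etc/keepalived/keepalived.conf /tmp/dr2.conf`). For the configs above, only the `state` and `priority` lines should appear:

```bash
#!/bin/bash
# Show only the lines that differ between two keepalived.conf files,
# after stripping leading whitespace so indentation changes are ignored.
kad_diff() {
    diff <(sed 's/^[[:space:]]*//' "$1") <(sed 's/^[[:space:]]*//' "$2") | grep '^[<>]'
}

# Usage on dr1:
#   kad_diff /etc/keepalived/keepalived.conf /tmp/dr2.conf
```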

Notes:

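In this part, LVS is not configured with ipvsadm; it is controlled directly by keepalived's virtual_server section. ipvsadm is installed only to inspect the load-balancing state; keepalived alone is enough to build the load-balancing cluster.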
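Since keepalived populates the IPVS table itself, a quick way to confirm it applied the virtual_server section is to list the real servers for the VIP. A sketch; `ipvs_real_servers` is a hypothetical helper that parses `ipvsadm -Ln` output on stdin, assuming the column layout shown in the outputs above. If keepalived's settings took effect, both RSs should show weight 3:

```bash
#!/bin/bash
# Print "RS weight=W active=A inactive=I" for each real server of one virtual service.
ipvs_real_servers() {
    local vs=$1
    awk -v vs="$vs" '
        # A TCP/UDP line starts a virtual service block; track whether it is ours
        $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
        # Real-server lines start with "->" followed by an IP (skips the header line)
        in_vs && $1 == "->" && $2 ~ /^[0-9]/ { print $2, "weight=" $4, "active=" $5, "inactive=" $6 }
    '
}

# Usage on the MASTER:
#   ipvsadm -Ln | ipvs_real_servers 192.168.1.100:80
```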

Configuration complete.