Persistent Volumes and Dynamic Storage

Hands-On Example: NFS, PVC, and PV

1. Set up the backend NFS storage node

[root@web04 ~]# mkdir -pv /kubelet/pv/pv00{1..3}

mkdir: created directory ‘/kubelet/pv’

mkdir: created directory ‘/kubelet/pv/pv001’

mkdir: created directory ‘/kubelet/pv/pv002’

mkdir: created directory ‘/kubelet/pv/pv003’

[root@web04 ~]# tree /kubelet/pv/

/kubelet/pv/

├── pv001

├── pv002

└── pv003

[root@web04 ~]# tail -n3 /etc/exports

/kubelet/pv/pv001 10.0.0.0/24(rw,sync,no_root_squash,no_subtree_check)

/kubelet/pv/pv002 10.0.0.0/24(rw,sync,no_root_squash,no_subtree_check)

/kubelet/pv/pv003 10.0.0.0/24(rw,sync,no_root_squash,no_subtree_check)

[root@web04 ~]# exportfs

/kubelet/pv/pv001 10.0.0.0/24

/kubelet/pv/pv002 10.0.0.0/24

/kubelet/pv/pv003 10.0.0.0/24
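The three export entries differ only in the directory, so they can be generated rather than typed by hand. The following is a minimal sketch under that assumption; `EXPORT_ROOT`, `CLIENT_CIDR`, `EXPORT_OPTS` and `export_line` are illustrative names that do not appear in the original steps.

```shell
#!/bin/sh
# Sketch: print /etc/exports entries for the three PV directories.
# EXPORT_ROOT, CLIENT_CIDR, EXPORT_OPTS and export_line are illustrative names.
EXPORT_ROOT=/kubelet/pv
CLIENT_CIDR=10.0.0.0/24
EXPORT_OPTS=rw,sync,no_root_squash,no_subtree_check

export_line() {
    # $1 = exported directory, $2 = client network allowed to mount it
    printf '%s %s(%s)\n' "$1" "$2" "$EXPORT_OPTS"
}

# Only prints the entries; nothing is written to /etc/exports here.
for n in 1 2 3; do
    export_line "$EXPORT_ROOT/pv00$n" "$CLIENT_CIDR"
done
```

To use it, append the printed lines to /etc/exports as root and run `exportfs -ra` so the NFS server re-reads the file.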

2. Create the PersistentVolume

[root@k8smaster01 ~]# vim nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  # Reclaim policy (three options)
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 10.0.0.2
    path: /kubelet/pv/pv001
  # Access mode (four options)
  accessModes:
  - ReadWriteMany
  # Volume capacity
  capacity:
    storage: 5Gi

[root@k8smaster01 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv 5Gi RWX Retain Available 17s

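| Access mode | Abbreviation | Description |
|---|---|---|
| ReadWriteOnce | RWO | The volume can be mounted read-write by a single node. Multiple Pods running on that same node can share the PVC and access it read-write. |
| ReadOnlyMany | ROX | The volume can be mounted read-only by many nodes. Any number of Pods in the cluster can read the data at the same time, but no writes are allowed. |
| ReadWriteMany | RWX | The volume can be mounted read-write by many nodes, so Pods on different nodes can read and write the same PVC concurrently. This mode suits workloads that need heavily concurrent reads and writes. |
| ReadWriteOncePod | RWOP | Stable since Kubernetes v1.29. The volume can be mounted read-write by a single Pod. Whichever node that Pod runs on, it is the only Pod that can read or write the PVC. |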

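| Reclaim policy | Description |
|---|---|
| Delete | After the PVC releases the volume, Kubernetes deletes the PV together with its backing storage, so all data on the volume is permanently removed. The volume plugin must support deletion. |
| Recycle | After the PVC releases the volume, the volume is scrubbed (`rm -rf /thevolume/*`) and returned to the pool of unbound PVs so a later PVC can use it. The volume plugin must support recycling. This policy is deprecated in favor of dynamic provisioning. |
| Retain | After the PVC releases the volume, the PV stays in the `Released` state until an administrator reclaims or reassigns it manually. This is the default for manually created PVs (dynamically provisioned PVs default to Delete) and suits cases where the data must be kept or the volume is managed by hand. |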

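| Column | Meaning |
|---|---|
| NAME | Name of the PersistentVolume. It uniquely identifies the PV. |
| CAPACITY | Capacity of the volume, written as a Kubernetes quantity (for example, 10Gi means 10 gibibytes). It states the maximum amount of data the PV can hold. |
| ACCESS MODES | How the volume can be mounted and accessed: ReadWriteOnce (RWO), ReadOnlyMany (ROX), ReadWriteMany (RWX), or ReadWriteOncePod (RWOP). |
| RECLAIM POLICY | What Kubernetes does with the volume once its PersistentVolumeClaim (PVC) releases it. Possible values are Delete, Recycle, and Retain. |
| STATUS | Phase of the volume. Available: free and not yet bound to any PVC. Bound: bound to a PVC. Released: the PVC has been deleted, but the volume has not yet been reclaimed or deleted. Failed: automatic reclamation of the volume failed. |
| CLAIM | If the volume is bound, the namespace and name of the PVC (in the form `<namespace>/<claim>`). Empty if the volume is unbound. |
| STORAGECLASS | Name of the StorageClass the volume belongs to. Empty or `<none>` if no StorageClass is used. |
| VOLUMEATTRIBUTESCLASS | Class that describes additional attributes or requirements of the volume. Not every cluster uses this column. |
| REASON | If the volume is in an abnormal state (such as Failed), the reason for the problem. |
| AGE | Time elapsed since the volume was created, shown in days, hours, and so on. |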
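`kubectl get pv` prints access modes only in their short form, with `RWOP` standing for ReadWriteOncePod. As a small aid for reading that column, here is a sketch that expands the short forms; `expand_access_mode` is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/sh
# Sketch: expand the short forms shown in the ACCESS MODES column.
# expand_access_mode is a hypothetical helper, not part of kubectl.
expand_access_mode() {
    case "$1" in
        RWO)  echo ReadWriteOnce ;;
        ROX)  echo ReadOnlyMany ;;
        RWX)  echo ReadWriteMany ;;
        RWOP) echo ReadWriteOncePod ;;
        *)    echo "unknown access mode: $1" >&2; return 1 ;;
    esac
}

# The nfs-pv row above shows RWX.
expand_access_mode RWX
```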

3. Create the PersistentVolumeClaim

[root@k8smaster01 pvc]# cat pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    limits:
      storage: 2Gi
    requests:
      storage: 1Gi

[root@k8smaster01 ~]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/nfs-pv 5Gi RWX Retain Bound default/nfs-pvc 13m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/nfs-pvc Bound nfs-pv 5Gi RWX 5s
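The claim asks for 1Gi yet reports a CAPACITY of 5Gi, because a claim binds to a whole PV that is at least as large as the request and offers the requested access modes. The sketch below illustrates only that part of the check; `pv_satisfies_claim` is a hypothetical helper, and the real control plane also compares the storage class, volume mode and any selectors.

```shell
#!/bin/sh
# Sketch of the static-binding check: a PV can satisfy a claim when it is
# large enough and offers every access mode the claim asks for.
# pv_satisfies_claim is a hypothetical helper, not a kubectl feature.
pv_satisfies_claim() {
    pv_gi=$1        # PV capacity in Gi (integer)
    pv_modes=$2     # space-separated access modes offered by the PV
    claim_gi=$3     # storage requested by the claim in Gi (integer)
    claim_modes=$4  # space-separated access modes requested by the claim

    # The PV must be at least as large as the request.
    [ "$pv_gi" -ge "$claim_gi" ] || return 1

    # Every requested mode must be offered by the PV.
    for mode in $claim_modes; do
        case " $pv_modes " in
            *" $mode "*) ;;
            *) return 1 ;;
        esac
    done
    return 0
}

# Values from this walkthrough: a 5Gi RWX volume and a 1Gi RWX request.
if pv_satisfies_claim 5 "ReadWriteMany" 1 "ReadWriteMany"; then
    echo "nfs-pv can bind the claim"
fi
```

Once bound, the remaining 4Gi is not available to any other claim, since a PV binds to exactly one PVC.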

4. Create the Pod

[root@k8smaster01 ~]# cat nginx-deployment-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx-deployment-pv
  name: nginx-deployment-pv
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-deployment-pv
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-deployment-pv
    spec:
      volumes:
      - name: data-pvc
        persistentVolumeClaim:
          claimName: nfs-pvc
      containers:
      - image: nginx
        name: nginx
        volumeMounts:
        - name: data-pvc
          mountPath: /usr/share/nginx/html
        resources: {}
status: {}

[root@k8smaster01 ~]# curl 10.100.1.126 -I

HTTP/1.1 403 Forbidden

Server: nginx/1.27.3

Date: Thu, 23 Jan 2025 09:15:37 GMT

Content-Type: text/html

Content-Length: 153

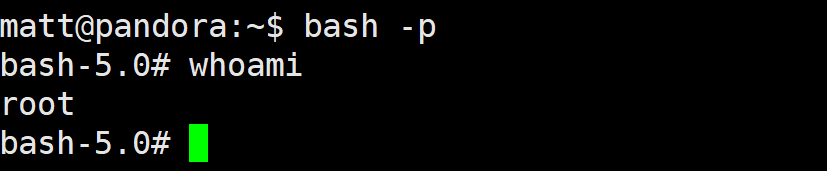

Connection: keep-alive[root@k8smaster01 pvc]# kubectl exec -it pods/ng-pvc-6867d44796-czxbt sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

# echo "HackMe~" > /usr/share/nginx/html/index.html

#

# exit

[root@k8smaster01 pvc]# curl -I 10.244.5.80

HTTP/1.1 200 OK

Server: nginx/1.27.3

Date: Wed, 11 Dec 2024 02:53:22 GMT

Content-Type: text/html

Content-Length: 8

Last-Modified: Wed, 11 Dec 2024 02:53:19 GMT

Connection: keep-alive

ETag: "6758fe9f-8"

Accept-Ranges: bytes

[root@k8smaster01 pvc]# curl 10.244.5.80

HackMe~

[root@web04 ~]# cat /kubelet/pv/pv001/index.html

HackMe~

Cleanup

- Retain -- manual reclamation

- Recycle -- basic scrub (rm -rf /thevolume/*)

- Delete -- delete the volume
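The three policies differ in what is left behind once the claim is deleted. The sketch below summarizes those outcomes; `pv_after_claim_deleted` is a hypothetical helper written for illustration and does not talk to a cluster.

```shell
#!/bin/sh
# Sketch: state of a PV after its claim is deleted, per reclaim policy.
# pv_after_claim_deleted is a hypothetical helper used only for illustration.
pv_after_claim_deleted() {
    case "$1" in
        Retain)  echo "Released: data kept, an admin must clean up and re-offer the PV" ;;
        Recycle) echo "Available: volume scrubbed (rm -rf /thevolume/*) and re-offered" ;;
        Delete)  echo "Removed: PV object and backing storage deleted" ;;
        *)       echo "unknown policy: $1" >&2; return 1 ;;
    esac
}

# nfs-pv in this walkthrough uses Retain.
pv_after_claim_deleted Retain
```

For the Retain volume used here, manual reclamation usually means deleting the claim, clearing the data on the NFS server, and then either recreating the PV or clearing its claim reference, for example with `kubectl patch pv nfs-pv -p '{"spec":{"claimRef":null}}'`.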

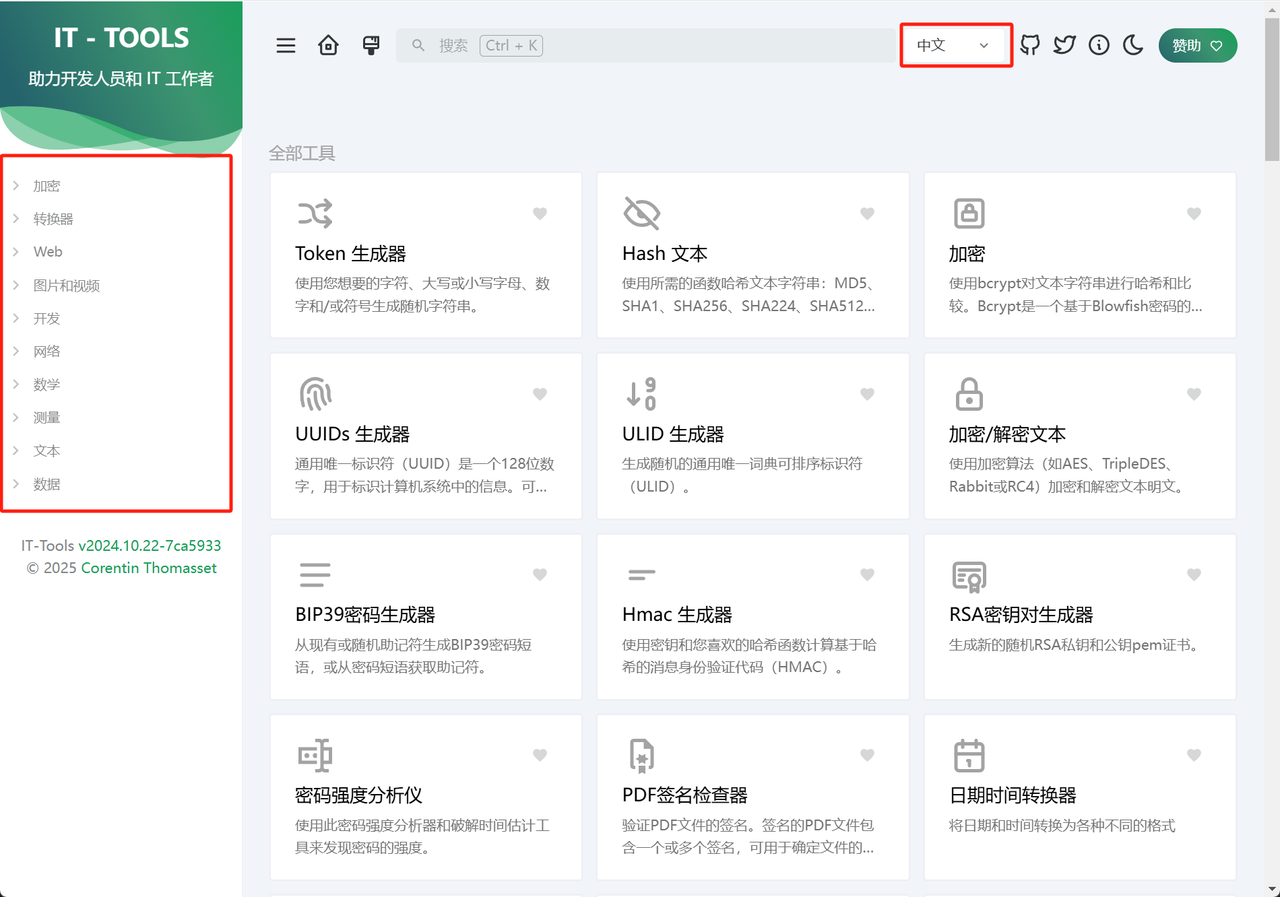

Hands-On Example: NFS Dynamic Provisioning

Kubernetes does not include an internal NFS provisioner. You need an external provisioner to create a StorageClass for NFS.

| Volume plugin | Internal provisioner | Config example |
|---|---|---|
| AzureFile | ✓ | Azure File |
| CephFS | - | - |
| FC | - | - |
| FlexVolume | - | - |
| iSCSI | - | - |
| Local | - | Local |
| NFS | - | NFS |
| PortworxVolume | ✓ | Portworx Volume |
| RBD | ✓ | Ceph RBD |
| VsphereVolume | ✓ | vSphere |

1. Export the backend NFS storage

[root@web04 ~]# mkdir -pv /kubelet/storage_class

[root@web04 ~]# tail -n1 /etc/exports

/kubelet/storage_class 10.0.0.0/24(rw,sync,no_root_squash,no_subtree_check)

[root@web04 ~]# systemctl restart nfs-server.service

[root@web04 ~]# exportfs

/kubelet/storage_class 10.0.0.0/24

2. Deploy the NFS provisioner to enable StorageClass support

[root@k8smaster01 ~]# git clone https://gitee.com/yinzhengjie/k8s-external-storage.git

[root@k8smaster01 ~]# cd k8s-external-storage/nfs-client/deploy/

# Edit the configuration file

[root@k8smaster01 deploy]# cat deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          # image: quay.io/external_storage/nfs-client-provisioner:latest
          image: registry.cn-hangzhou.aliyuncs.com/k8s_study_rfb/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 10.0.0.7
            - name: NFS_PATH
              value: /kubelet/storage_class
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.0.0.7
            path: /kubelet/storage_class

# Edit the StorageClass configuration file

[root@k8smaster01 deploy]# cat class.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  # archiveOnDelete: "false"
  archiveOnDelete: "true"

# Apply the StorageClass

[root@k8smaster01 deploy]# kubectl apply -f class.yaml && kubectl get sc

storageclass.storage.k8s.io/managed-nfs-storage created

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage fuseim.pri/ifs Delete Immediate false 0s

[root@k8smaster01 deploy]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage fuseim.pri/ifs Delete Immediate false 10m
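Claims select this StorageClass by name. The `spec.storageClassName` field is the current way to do that; the older `volume.beta.kubernetes.io/storage-class` annotation is deprecated. The sketch below writes such a claim to a file. The file name `claim-with-class.yaml` and the claim name `demo-claim` are placeholders rather than names from this walkthrough.

```shell
#!/bin/sh
# Sketch: write a claim that names the StorageClass through spec.storageClassName.
# claim-with-class.yaml and demo-claim are placeholder names.
cat > claim-with-class.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF

# Show the field that ties the claim to the class.
grep 'storageClassName' claim-with-class.yaml
```

The file can then be applied with `kubectl apply -f claim-with-class.yaml` once the provisioner is running.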

3. Apply the RBAC roles

[root@k8smaster01 deploy]# cat rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

# Apply the RBAC roles

[root@k8smaster01 deploy]# kubectl apply -f rbac.yaml

# Deploy the NFS dynamic provisioner

[root@k8smaster01 deploy]# kubectl apply -f deployment.yaml

[root@k8smaster01 deploy]# kubectl get pods,sc

NAME READY STATUS RESTARTS AGE

pod/nfs-client-provisioner-5db5f57976-ghs8l 1/1 Running 0 4s

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

storageclass.storage.k8s.io/managed-nfs-storage fuseim.pri/ifs Delete Immediate false 11m

4. Test the StorageClass

# PVC manifest

[root@k8smaster01 deploy]# cat test-claim.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:
  name: test-claim
  # Select the StorageClass through an annotation
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

# Pod manifest

[root@k8smaster01 deploy]# cat test-pod.yaml

kind: Pod

apiVersion: v1

metadata:
  name: test-pod
spec:
  containers:
  - name: test-pod
    image: busybox
    command:
      - "/bin/sh"
    args:
      - "-c"
      - "touch /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
      - name: nfs-pvc
        mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim

[root@k8smaster01 deploy]# kubectl apply -f test-claim.yaml -f test-pod.yaml

# Backend storage directory

[root@web04 ~]# ll /kubelet/storage_class/

total 0

drwxrwxrwx 2 root root 21 Dec 11 15:29 default-test-claim-pvc-72dca5dd-eb1e-4826-a18a-0773acfe55cf

[root@web04 ~]# ll /kubelet/storage_class/default-test-claim-pvc-72dca5dd-eb1e-4826-a18a-0773acfe55cf/

total 0

-rw-r--r-- 1 root root 0 Dec 11 15:29 SUCCESS
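The directory name in the listing above appears to follow the pattern namespace, claim name, PV name, joined with hyphens. The sketch below rebuilds the name under that assumption, which is inferred from this output rather than taken from the provisioner's source; `provisioned_dir` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: rebuild the per-claim directory name seen on the NFS server.
# The pattern is inferred from the listing above; provisioned_dir is a hypothetical helper.
provisioned_dir() {
    # $1 = namespace, $2 = PVC name, $3 = PV name
    printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Values taken from the listing above.
provisioned_dir default test-claim pvc-72dca5dd-eb1e-4826-a18a-0773acfe55cf
```

The PV name for a bound claim can be read with `kubectl get pvc test-claim -o jsonpath='{.spec.volumeName}'`, which makes it possible to locate a claim's data on the NFS server.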