Prerequisites

sysctl.conf
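The `echo ... >>` command below appends a new line every time it runs. If this step is repeated, `/etc/sysctl.conf` ends up with duplicate `kernel.threads-max` entries. When the step may be re-run, a guarded variant can make sure the key is set only once. This is a sketch: the helper name `set_sysctl_once` is hypothetical, and it relies on GNU `sed -i`.

```shell
#!/bin/sh
# Hypothetical helper: make FILE contain exactly one "KEY = VALUE" line.
# An existing assignment for KEY is rewritten in place (GNU sed -i);
# otherwise a new line is appended. Dots in KEY act as regex wildcards,
# which is harmless for sysctl names.
set_sysctl_once() {
    file=$1 key=$2 value=$3
    if grep -q "^[[:space:]]*${key}[[:space:]]*=" "$file" 2>/dev/null; then
        sed -i "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}
```

Usage, equivalent to the command below but safe to repeat: `set_sysctl_once /etc/sysctl.conf kernel.threads-max 262144 && sysctl -p`.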

echo "kernel.threads-max = 262144" >> /etc/sysctl.conf && sysctl -p

Transparent Huge Pages (adjust as needed)

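Edit /etc/default/grub and append transparent_hugepage=never to the GRUB_CMDLINE_LINUX parameter:

GRUB_CMDLINE_LINUX="... transparent_hugepage=never"

Then regenerate the GRUB configuration with the command for your distribution, and reboot:

grub2-mkconfig -o /boot/grub2/grub.cfg # CentOS/RHEL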

update-grub # Ubuntu/Debian

reboot

limits.conf

cat >> /etc/security/limits.conf <<EOF

clickhouse soft nproc 500000

clickhouse hard nproc 500000

EOF

Cluster planning

The ClickHouse cluster has 3 shards with 2 replicas each, and every node uses 3 data disks.

Node plan

| hostname | IP address | role | shard | replica |
| --- | --- | --- | --- | --- |
| clickhouse01 | 192.168.174.144 | clickhouse + keeper | 1 | 1 |
| clickhouse02 | 192.168.174.145 | clickhouse | 1 | 2 |
| clickhouse03 | 192.168.174.146 | clickhouse + keeper | 2 | 1 |
| clickhouse04 | 192.168.174.147 | clickhouse | 2 | 2 |
| clickhouse05 | 192.168.174.148 | clickhouse + keeper | 3 | 1 |
| clickhouse06 | 192.168.174.149 | clickhouse | 3 | 2 |
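The shard and replica columns follow a fixed pattern. Nodes are paired in order: the odd-numbered node of each pair is replica 1 and the even-numbered node is replica 2. The sketch below derives both values from the node number and prints the matching macros.xml body, using the same elements as the per-node files in the macros section further down. The function names are hypothetical.

```shell
#!/bin/sh
# Node N (clickhouse0N, N = 1..6) -> shard and replica, per the table above:
#   shard   = (N + 1) / 2  gives 1,1,2,2,3,3
#   replica = 2 - N % 2    gives 1,2,1,2,1,2
node_shard()   { echo $(( ($1 + 1) / 2 )); }
node_replica() { echo $(( 2 - $1 % 2 )); }

# Print the macros.xml body for node N.
node_macros() {
    shard=$(node_shard "$1")
    replica=$(node_replica "$1")
    cat <<XML
<clickhouse>
    <macros>
        <shard>${shard}</shard>
        <replica>${replica}</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
XML
}
```

For example, on clickhouse03 you would run `node_macros 3 > /etc/clickhouse-server/config.d/macros.xml`. If the hostnames follow the table, the node number can be taken from `hostname | sed 's/^clickhouse0*//'`. This assumes your naming matches the table.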

Package list

clickhouse-server-25.2.1.3085-1

clickhouse-client-25.2.1.3085-1

clickhouse-common-static-25.2.1.3085-1

Set up the RPM repository

sudo yum -y install yum-utils

sudo yum-config-manager --add-repo https://packages.clickhouse.com/rpm/clickhouse.repo

Install ClickHouse

sudo yum install -y clickhouse-server clickhouse-client

ClickHouse binary is already located at /usr/bin/clickhouse

Symlink /usr/bin/clickhouse-server already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-server to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-client already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-client to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-local already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-local to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-benchmark already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-benchmark to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-obfuscator already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-obfuscator to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-git-import to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-compressor already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-compressor to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-format already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-format to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-extract-from-config already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-extract-from-config to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-keeper already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-keeper to /usr/bin/clickhouse.

Symlink /usr/bin/clickhouse-keeper-converter already exists but it points to /clickhouse. Will replace the old symlink to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-keeper-converter to /usr/bin/clickhouse.

Creating symlink /usr/bin/clickhouse-disks to /usr/bin/clickhouse.

Symlink /usr/bin/ch already exists. Will keep it.

Symlink /usr/bin/chl already exists. Will keep it.

Symlink /usr/bin/chc already exists. Will keep it.

Creating clickhouse group if it does not exist.

groupadd -r clickhouse

Creating clickhouse user if it does not exist.

useradd -r --shell /bin/false --home-dir /nonexistent -g clickhouse clickhouse

Will set ulimits for clickhouse user in /etc/security/limits.d/clickhouse.conf.

Creating config directory /etc/clickhouse-server/config.d that is used for tweaks of main server configuration.

Creating config directory /etc/clickhouse-server/users.d that is used for tweaks of users configuration.

Config file /etc/clickhouse-server/config.xml already exists, will keep it and extract path info from it.

/etc/clickhouse-server/config.xml has /var/lib/clickhouse/ as data path.

/etc/clickhouse-server/config.xml has /var/log/clickhouse-server/ as log path.

Users config file /etc/clickhouse-server/users.xml already exists, will keep it and extract users info from it.

Creating log directory /var/log/clickhouse-server/.

Creating data directory /var/lib/clickhouse/.

Creating pid directory /var/run/clickhouse-server.

chown -R clickhouse:clickhouse '/var/log/clickhouse-server/'

chown -R clickhouse:clickhouse '/var/run/clickhouse-server'

chown clickhouse:clickhouse '/var/lib/clickhouse/'

Password for the default user is an empty string. See /etc/clickhouse-server/users.xml and /etc/clickhouse-server/users.d to change it.

Setting capabilities for clickhouse binary. This is optional.

chown -R clickhouse:clickhouse '/etc/clickhouse-server'

ClickHouse has been successfully installed.

Start clickhouse-server with:

sudo clickhouse start

Start clickhouse-client with:

clickhouse-client

Synchronizing state of clickhouse-server.service with SysV service script with /usr/lib/systemd/systemd-sysv-install.

Executing: /usr/lib/systemd/systemd-sysv-install enable clickhouse-server

Created symlink /etc/systemd/system/multi-user.target.wants/clickhouse-server.service → /usr/lib/systemd/system/clickhouse-server.service.

Data directory layout

mkdir -pv /data/apps/clickhouse/logs/clickhouse-{keeper,server} /data/apps/clickhouse/clickhouse-{keeper,server} /data/apps/{disk1,disk2,disk3}/clickhouse

chown -R clickhouse:clickhouse /data/apps/clickhouse/

chown -R clickhouse:clickhouse /data/apps/{disk1,disk2,disk3}/clickhouse/

Configure ClickHouse Keeper

clickhouse-server already bundles clickhouse-keeper.
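The three keeper_config.xml files in this section (for clickhouse01, clickhouse03 and clickhouse05) are identical except for `<server_id>` (1, 2, 3). Each node's `<server_id>` must match that node's `<id>` in `<raft_configuration>`. Instead of maintaining three copies, one option is to write the clickhouse01 file once and derive the other two from it. This is a sketch, and `keeper_config_for` is a hypothetical helper.

```shell
#!/bin/sh
# Print TEMPLATE with its <server_id> value replaced by ID.
# The <id> entries inside <raft_configuration> do not match the pattern,
# so the peer list stays identical on every node.
keeper_config_for() {
    template=$1 id=$2
    sed "s|<server_id>[0-9]*</server_id>|<server_id>${id}</server_id>|" "$template"
}
```

For example, on clickhouse03, after copying clickhouse01's file to /tmp/keeper_config.xml, run `keeper_config_for /tmp/keeper_config.xml 2 > /etc/clickhouse-keeper/keeper_config.xml`. Do not redirect into the template itself: the shell truncates the file before sed reads it.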

Config file path: /etc/clickhouse-keeper/keeper_config.xml

Back up the config file

mv /etc/clickhouse-keeper/keeper_config.xml /etc/clickhouse-keeper/keeper_config.xml.back

clickhouse01

cat > /etc/clickhouse-keeper/keeper_config.xml <<EOF

<clickhouse>
    <logger>
        <level>information</level>
        <log>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.log</log>
        <errorlog>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.err.log</errorlog>
        <size>1000M</size>
        <count>10</count>
    </logger>
    <listen_host>0.0.0.0</listen_host>
    <max_connections>40960</max_connections>
    <path>/data/apps/clickhouse/clickhouse-keeper</path>
    <keeper_server>
        <tcp_port>19181</tcp_port>
        <server_id>1</server_id>
        <log_storage_path>/data/apps/clickhouse/coordination/logs</log_storage_path>
        <snapshot_storage_path>/data/apps/clickhouse/coordination/snapshots</snapshot_storage_path>
        <coordination_settings>
            <operation_timeout_ms>10000</operation_timeout_ms>
            <min_session_timeout_ms>10000</min_session_timeout_ms>
            <session_timeout_ms>100000</session_timeout_ms>
            <election_timeout_lower_bound_ms>1000</election_timeout_lower_bound_ms>
            <election_timeout_upper_bound_ms>2000</election_timeout_upper_bound_ms>
            <heart_beat_interval_ms>500</heart_beat_interval_ms>
            <raft_logs_level>information</raft_logs_level>
            <compress_logs>false</compress_logs>
        </coordination_settings>
        <hostname_checks_enabled>true</hostname_checks_enabled>
        <raft_configuration>
            <server>
                <id>1</id>
                <hostname>192.168.174.144</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>2</id>
                <hostname>192.168.174.146</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>3</id>
                <hostname>192.168.174.148</hostname>
                <port>9234</port>
            </server>
        </raft_configuration>
    </keeper_server>
    <openSSL>
        <server>
            <verificationMode>none</verificationMode>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
        </server>
    </openSSL>
</clickhouse>
EOF

clickhouse03

cat > /etc/clickhouse-keeper/keeper_config.xml <<EOF

<clickhouse>
    <logger>
        <level>information</level>
        <log>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.log</log>
        <errorlog>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.err.log</errorlog>
        <size>1000M</size>
        <count>10</count>
    </logger>
    <listen_host>0.0.0.0</listen_host>
    <max_connections>40960</max_connections>
    <path>/data/apps/clickhouse/clickhouse-keeper</path>
    <keeper_server>
        <tcp_port>19181</tcp_port>
        <server_id>2</server_id>
        <log_storage_path>/data/apps/clickhouse/coordination/logs</log_storage_path>
        <snapshot_storage_path>/data/apps/clickhouse/coordination/snapshots</snapshot_storage_path>
        <coordination_settings>
            <operation_timeout_ms>10000</operation_timeout_ms>
            <min_session_timeout_ms>10000</min_session_timeout_ms>
            <session_timeout_ms>100000</session_timeout_ms>
            <election_timeout_lower_bound_ms>1000</election_timeout_lower_bound_ms>
            <election_timeout_upper_bound_ms>2000</election_timeout_upper_bound_ms>
            <heart_beat_interval_ms>500</heart_beat_interval_ms>
            <raft_logs_level>information</raft_logs_level>
            <compress_logs>false</compress_logs>
        </coordination_settings>
        <hostname_checks_enabled>true</hostname_checks_enabled>
        <raft_configuration>
            <server>
                <id>1</id>
                <hostname>192.168.174.144</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>2</id>
                <hostname>192.168.174.146</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>3</id>
                <hostname>192.168.174.148</hostname>
                <port>9234</port>
            </server>
        </raft_configuration>
    </keeper_server>
    <openSSL>
        <server>
            <verificationMode>none</verificationMode>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
        </server>
    </openSSL>
</clickhouse>
EOF

clickhouse05

cat > /etc/clickhouse-keeper/keeper_config.xml <<EOF

<clickhouse>
    <logger>
        <level>information</level>
        <log>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.log</log>
        <errorlog>/data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.err.log</errorlog>
        <size>1000M</size>
        <count>10</count>
    </logger>
    <listen_host>0.0.0.0</listen_host>
    <max_connections>40960</max_connections>
    <path>/data/apps/clickhouse/clickhouse-keeper</path>
    <keeper_server>
        <tcp_port>19181</tcp_port>
        <server_id>3</server_id>
        <log_storage_path>/data/apps/clickhouse/coordination/logs</log_storage_path>
        <snapshot_storage_path>/data/apps/clickhouse/coordination/snapshots</snapshot_storage_path>
        <coordination_settings>
            <operation_timeout_ms>10000</operation_timeout_ms>
            <min_session_timeout_ms>10000</min_session_timeout_ms>
            <session_timeout_ms>100000</session_timeout_ms>
            <election_timeout_lower_bound_ms>1000</election_timeout_lower_bound_ms>
            <election_timeout_upper_bound_ms>2000</election_timeout_upper_bound_ms>
            <heart_beat_interval_ms>500</heart_beat_interval_ms>
            <raft_logs_level>information</raft_logs_level>
            <compress_logs>false</compress_logs>
        </coordination_settings>
        <hostname_checks_enabled>true</hostname_checks_enabled>
        <raft_configuration>
            <server>
                <id>1</id>
                <hostname>192.168.174.144</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>2</id>
                <hostname>192.168.174.146</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>3</id>
                <hostname>192.168.174.148</hostname>
                <port>9234</port>
            </server>
        </raft_configuration>
    </keeper_server>
    <openSSL>
        <server>
            <verificationMode>none</verificationMode>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
        </server>
    </openSSL>
</clickhouse>
EOF

Configure ClickHouse

config.xml

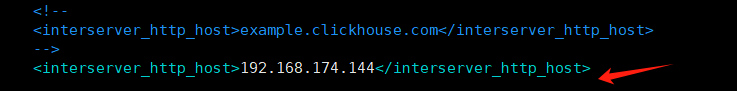

Update the following settings as needed (note the trailing slash on the data path, which ClickHouse expects for `<path>`):

sed -i -e 's@<path>/var/lib/clickhouse/</path>@<path>/data/apps/clickhouse/clickhouse-server/</path>@' \
    -e 's@<level>trace</level>@<level>information</level>@' \
    -e 's@<!-- <max_connections>4096</max_connections> -->@<max_connections>40960</max_connections>@' \
    -e 's@<!-- <listen_host>::</listen_host> -->@<listen_host>0.0.0.0</listen_host>@' \
    -e 's@/var/log@/data/apps/clickhouse/logs@' \
    -e 's@<http_port>8123</http_port>@<http_port>18123</http_port>@' \
    -e 's@<tcp_port>9000</tcp_port>@<tcp_port>19000</tcp_port>@' \
    /etc/clickhouse-server/config.xml

Set interserver_http_host to this node's hostname or IP address.
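sed exits successfully even when a pattern matches nothing, for example if the commented-out defaults in config.xml differ in your release. The edits above can therefore silently do nothing. The sketch below greps config.xml for the new values. `check_server_config` is a hypothetical helper, and `interserver_http_host` is left out because its value differs per node.

```shell
#!/bin/sh
# Print "ok: <value>" or "missing: <value>" for each value the sed command
# is meant to set, and return non-zero if any value is missing.
check_server_config() {
    cfg=$1 rc=0
    for want in \
        '<path>/data/apps/clickhouse/clickhouse-server' \
        '<listen_host>0.0.0.0</listen_host>' \
        '<max_connections>40960</max_connections>' \
        '<http_port>18123</http_port>' \
        '<tcp_port>19000</tcp_port>'
    do
        if grep -qF "$want" "$cfg"; then
            echo "ok: $want"
        else
            echo "missing: $want"
            rc=1
        fi
    done
    return $rc
}
```

Running `check_server_config /etc/clickhouse-server/config.xml` prints one line per value. Any "missing" line points to a sed pattern that needs adjusting by hand.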

storage.xml

cat > /etc/clickhouse-server/config.d/storage.xml <<EOF
<clickhouse>
    <storage_configuration>
        <disks>
            <disk1>
                <path>/data/apps/disk1/clickhouse/</path>
            </disk1>
            <disk2>
                <path>/data/apps/disk2/clickhouse/</path>
            </disk2>
            <disk3>
                <path>/data/apps/disk3/clickhouse/</path>
            </disk3>
        </disks>
        <policies>
            <triple_disk> <!-- custom policy name -->
                <volumes>
                    <triple_volume> <!-- volume name -->
                        <disk>disk1</disk>
                        <disk>disk2</disk>
                        <disk>disk3</disk>
                        <volume_type>JBOD</volume_type> <!-- default type -->
                        <load_balancing>round_robin</load_balancing>
                    </triple_volume>
                </volumes>
            </triple_disk>
        </policies>
    </storage_configuration>
</clickhouse>
EOF

remote_servers.xml

cat > /etc/clickhouse-server/config.d/remote_servers.xml <<EOF

<clickhouse>
    <remote_servers>
        <cluster_3s2r> <!-- cluster name -->
            <secret>12345678</secret>
            <shard>
                <internal_replication>true</internal_replication> <!-- ClickHouse syncs replicas itself: data written to one replica is replicated internally to the others -->
                <replica>
                    <host>192.168.174.144</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
                <replica>
                    <host>192.168.174.145</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
            </shard>
            <shard>
                <internal_replication>true</internal_replication> <!-- ClickHouse syncs replicas itself: data written to one replica is replicated internally to the others -->
                <replica>
                    <host>192.168.174.146</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
                <replica>
                    <host>192.168.174.147</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
            </shard>
            <shard>
                <internal_replication>true</internal_replication> <!-- ClickHouse syncs replicas itself: data written to one replica is replicated internally to the others -->
                <replica>
                    <host>192.168.174.148</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
                <replica>
                    <host>192.168.174.149</host>
                    <port>19000</port>
                    <user>default</user>
                </replica>
            </shard>
        </cluster_3s2r>
    </remote_servers>
</clickhouse>
EOF

use-keeper.xml

cat > /etc/clickhouse-server/config.d/use-keeper.xml <<EOF
<clickhouse>
    <zookeeper>
        <node>
            <host>192.168.174.144</host>
            <port>19181</port>
        </node>
        <node>
            <host>192.168.174.146</host>
            <port>19181</port>
        </node>
        <node>
            <host>192.168.174.148</host>
            <port>19181</port>
        </node>
    </zookeeper>
</clickhouse>
EOF

Configure macros

clickhouse01

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>1</shard>
        <replica>1</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 1</display_name>@' /etc/clickhouse-server/config.xml

clickhouse02

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>1</shard>
        <replica>2</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 2</display_name>@' /etc/clickhouse-server/config.xml

clickhouse03

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>2</shard>
        <replica>1</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 3</display_name>@' /etc/clickhouse-server/config.xml

clickhouse04

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>2</shard>
        <replica>2</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 4</display_name>@' /etc/clickhouse-server/config.xml

clickhouse05

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>3</shard>
        <replica>1</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 5</display_name>@' /etc/clickhouse-server/config.xml

clickhouse06

cat > /etc/clickhouse-server/config.d/macros.xml <<EOF
<clickhouse>
    <macros>
        <shard>3</shard>
        <replica>2</replica>
        <cluster>cluster_3s2r</cluster>
    </macros>
</clickhouse>
EOF

sed -i 's@<!--display_name>production</display_name-->@<display_name>cluster_3s2r node 6</display_name>@' /etc/clickhouse-server/config.xml

Run ClickHouse Keeper

Enable ClickHouse Keeper at boot and start it

systemctl enable clickhouse-keeper --now

Verify the ClickHouse Keeper ports

netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1069/sshd: /usr/sbi

tcp 0 0 0.0.0.0:19181 0.0.0.0:* LISTEN 6787/clickhouse-kee

tcp6 0 0 :::22 :::* LISTEN 1069/sshd: /usr/sbi

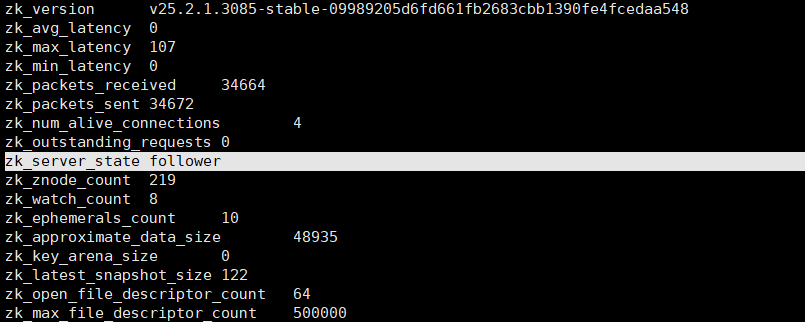

tcp6 0 0 :::9234 :::* LISTEN 6787/clickhouse-kee 验证 ClickHouse keeper 状态

echo mntr | nc localhost 19181
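`mntr` answers with one tab-separated key/value pair per line. The most useful key here is `zk_server_state` (`leader` or `follower`). On the leader, `zk_synced_followers` shows how many followers are in sync. The sketch below extracts a single key. `mntr_value` is a hypothetical helper.

```shell
#!/bin/sh
# Read `mntr` output on stdin and print the value of the key given as $1.
mntr_value() {
    awk -F '\t' -v k="$1" '$1 == k { print $2 }'
}
```

For example, the following prints the role of each Keeper node from any host: `for h in 192.168.174.144 192.168.174.146 192.168.174.148; do printf '%s ' "$h"; echo mntr | nc "$h" 19181 | mntr_value zk_server_state; done`. In a healthy three-node ensemble, exactly one node reports `leader`, and that node reports `zk_synced_followers` as 2.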

View the ClickHouse Keeper log

tail -f /data/apps/clickhouse/logs/clickhouse-keeper/clickhouse-keeper.log

2025.02.07 14:17:36.820184 [ 6852 ] {} <Information> SentryWriter: Sending crash reports is disabled

2025.02.07 14:17:36.876137 [ 6852 ] {} <Information> Application: Starting ClickHouse Keeper 25.1.3.23 (revision: 54496, git hash: 5fc2cf719913121725014154c1e698670763bf30, build id: 09637F5D3CC875EE906926933EF307B480571EC1), PID 6852

2025.02.07 14:17:36.876182 [ 6852 ] {} <Information> Application: starting up

2025.02.07 14:17:36.876197 [ 6852 ] {} <Information> Application: OS Name = Linux, OS Version = 4.19.90-2102.2.0.0062.ctl2.x86_64, OS Architecture = x86_64

2025.02.07 14:17:36.876325 [ 6852 ] {} <Information> Jemalloc: Value for background_thread set to true (from true)

2025.02.07 14:17:36.876525 [ 6852 ] {} <Information> Application: keeper_server.max_memory_usage_soft_limit is set to 2.99 GiB

2025.02.07 14:17:36.876590 [ 6852 ] {} <Information> CgroupsReader: Will create cgroup reader from '/sys/fs/cgroup/memory' (cgroups version: v1)

2025.02.07 14:17:36.876604 [ 6852 ] {} <Information> MemoryWorker: Starting background memory thread with period of 50ms, using Cgroups as source

2025.02.07 14:17:36.877518 [ 6852 ] {} <Information> Context: Cannot connect to ZooKeeper (or Keeper) before internal Keeper start, will wait for Keeper synchronously

2025.02.07 14:17:36.877593 [ 6852 ] {} <Information> KeeperContext: Keeper feature flag FILTERED_LIST: enabled

2025.02.07 14:17:36.877600 [ 6852 ] {} <Information> KeeperContext: Keeper feature flag MULTI_READ: enabled

2025.02.07 14:17:36.877607 [ 6852 ] {} <Information> KeeperContext: Keeper feature flag CHECK_NOT_EXISTS: disabled

2025.02.07 14:17:36.877620 [ 6852 ] {} <Information> KeeperContext: Keeper feature flag CREATE_IF_NOT_EXISTS: disabled

2025.02.07 14:17:36.877628 [ 6852 ] {} <Information> KeeperContext: Keeper feature flag REMOVE_RECURSIVE: disabled

2025.02.07 14:17:36.880148 [ 6852 ] {} <Information> KeeperLogStore: force_sync enabled

2025.02.07 14:17:36.880690 [ 6852 ] {} <Information> KeeperServer: Will use config from log store with log index 6

2025.02.07 14:17:36.884176 [ 6852 ] {} <Information> RaftInstance: Raft ASIO listener initiated on :::9234, unsecured

2025.02.07 14:17:36.884458 [ 6852 ] {} <Information> KeeperStateManager: Read state from /data/apps/clickhouse/coordination/state

2025.02.07 14:17:36.884496 [ 6852 ] {} <Information> RaftInstance: parameters: election timeout range 1000 - 2000, heartbeat 500, leadership expiry 10000, max batch 100, backoff 50, snapshot distance 100000, enable randomized snapshot creation NO, log sync stop gap 99999, reserved logs 100000, client timeout 10000, auto forwarding on, API call type async, custom commit quorum size 0, custom election quorum size 0, snapshot receiver included, leadership transfer wait time 0, grace period of lagging state machine 0, snapshot IO: blocking, parallel log appending: on

2025.02.07 14:17:36.884505 [ 6852 ] {} <Information> RaftInstance: new election timeout range: 1000 - 2000

2025.02.07 14:17:36.884517 [ 6852 ] {} <Information> RaftInstance: === INIT RAFT SERVER ===

commit index 6

term 6

election timer allowed

log store start 1, end 6

config log idx 6, prev log idx 5

2025.02.07 14:17:36.884622 [ 6852 ] {} <Information> RaftInstance: peer 1: DC ID 0, 192.168.174.144:9234, voting member, 1

peer 2: DC ID 0, 192.168.174.146:9234, voting member, 1

peer 3: DC ID 0, 192.168.174.148:9234, voting member, 1

my id: 3, voting_member

num peers: 2

2025.02.07 14:17:36.884646 [ 6852 ] {} <Information> RaftInstance: global manager does not exist. will use local thread for commit and append

2025.02.07 14:17:36.884771 [ 6852 ] {} <Information> RaftInstance: wait for HB, for 50 + [1000, 2000] ms

2025.02.07 14:17:36.884923 [ 6887 ] {} <Information> RaftInstance: bg append_entries thread initiated

2025.02.07 14:17:36.930238 [ 6856 ] {} <Information> MemoryTracker: Correcting the value of global memory tracker from 227.50 KiB to 43.29 MiB

2025.02.07 14:17:37.092975 [ 6870 ] {} <Information> RaftInstance: receive a incoming rpc connection

2025.02.07 14:17:37.093025 [ 6870 ] {} <Information> RaftInstance: session 1 got connection from ::ffff:192.168.174.146:45504 (as a server)

2025.02.07 14:17:37.093482 [ 6852 ] {} <Information> Application: Listening for Keeper (tcp): 0.0.0.0:19181

2025.02.07 14:17:37.095148 [ 6852 ] {} <Information> Application: keeper_server.max_memory_usage_soft_limit is set to 2.99 GiB

2025.02.07 14:17:37.095214 [ 6852 ] {} <Information> CertificateReloader: One of paths is empty. Cannot apply new configuration for certificates. Fill all paths and try again.

2025.02.07 14:17:37.095221 [ 6852 ] {} <Information> CertificateReloader: One of paths is empty. Cannot apply new configuration for certificates. Fill all paths and try again.

2025.02.07 14:17:37.095644 [ 6852 ] {} <Information> CgroupsMemoryUsageObserver: Started cgroup current memory usage observer thread

2025.02.07 14:17:37.095654 [ 6852 ] {} <Information> Application: Ready for connections.

2025.02.07 14:17:37.095898 [ 6893 ] {} <Information> CgroupsMemoryUsageObserver: Memory amount initially available to the process is 3.33 GiB

Run ClickHouse server

Raise the process and open-file limits for the service
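Files under /lib/systemd/system belong to the clickhouse-server package and may be replaced when the package is upgraded, which would drop the added limits. A systemd drop-in keeps the same settings under /etc instead; this is the kind of file `systemctl edit clickhouse-server` creates interactively. This is a sketch: `write_limits_dropin` is a hypothetical helper, and the directory argument exists only so the helper can be tried outside /etc.

```shell
#!/bin/sh
# Write the LimitNOFILE/LimitNPROC settings used in this guide as a drop-in.
# DIR defaults to the standard drop-in directory for clickhouse-server.service.
write_limits_dropin() {
    dir=${1:-/etc/systemd/system/clickhouse-server.service.d}
    mkdir -p "$dir"
    cat > "$dir/override.conf" <<'UNIT'
[Service]
LimitNOFILE=500000
LimitNPROC=500000
UNIT
}
```

After writing the drop-in, run `systemctl daemon-reload && systemctl restart clickhouse-server`. Then confirm the effective values with `systemctl show clickhouse-server -p LimitNOFILE -p LimitNPROC`.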

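Add the following lines to the [Service] section of /lib/systemd/system/clickhouse-server.service, then run systemctl daemon-reload so that systemd picks up the change:

LimitNOFILE=500000

LimitNPROC=500000

Enable ClickHouse server at boot and start it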

systemctl enable clickhouse-server --now

Verify the ClickHouse server ports

netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:18123 0.0.0.0:* LISTEN 7327/clickhouse-ser

tcp 0 0 0.0.0.0:9004 0.0.0.0:* LISTEN 7327/clickhouse-ser

tcp 0 0 0.0.0.0:9005 0.0.0.0:* LISTEN 7327/clickhouse-ser

tcp 0 0 0.0.0.0:9009 0.0.0.0:* LISTEN 7327/clickhouse-ser

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1096/sshd: /usr/sbi

tcp 0 0 0.0.0.0:19000 0.0.0.0:* LISTEN 7327/clickhouse-ser

tcp6 0 0 :::22 :::* LISTEN 1096/sshd: /usr/sbi

View the ClickHouse server log

tail -f /data/apps/clickhouse/logs/clickhouse-server/clickhouse-server.log

2025.02.07 15:37:08.788772 [ 11992 ] {} <Information> Application: Will watch for the process with pid 11994

2025.02.07 15:37:08.788870 [ 11994 ] {} <Information> Application: Forked a child process to watch

2025.02.07 15:37:08.789579 [ 11994 ] {} <Information> SentryWriter: Sending crash reports is disabled

2025.02.07 15:37:08.875038 [ 11994 ] {} <Information> Application: Starting ClickHouse 25.1.3.23 (revision: 54496, git hash: 5fc2cf719913121725014154c1e698670763bf30, build id: 09637F5D3CC875EE906926933EF307B480571EC1), PID 11994

2025.02.07 15:37:08.875182 [ 11994 ] {} <Information> Application: starting up

2025.02.07 15:37:08.875205 [ 11994 ] {} <Information> Application: OS name: Linux, version: 4.19.90-2102.2.0.0062.ctl2.x86_64, architecture: x86_64

2025.02.07 15:37:08.875545 [ 11994 ] {} <Information> Jemalloc: Value for background_thread set to true (from false)

2025.02.07 15:37:08.882405 [ 11994 ] {} <Information> Application: Available RAM: 3.33 GiB; logical cores: 2; used cores: 2.

2025.02.07 15:37:08.882463 [ 11994 ] {} <Information> Application: Available CPU instruction sets: SSE, SSE2, SSE3, SSSE3, SSE41, SSE42, F16C, POPCNT, BMI1, BMI2, PCLMUL, AES, AVX, FMA, AVX2, ADX, RDRAND, RDSEED, RDTSCP, CLFLUSHOPT, XSAVE, OSXSAVE

2025.02.07 15:37:08.890057 [ 11994 ] {} <Information> CgroupsReader: Will create cgroup reader from '/sys/fs/cgroup/memory' (cgroups version: v1)

2025.02.07 15:37:09.169521 [ 11994 ] {} <Information> Application: Integrity check of the executable successfully passed (checksum: 18EC9701B010BF2E39B70C7E920E88BD)

2025.02.07 15:37:09.206895 [ 11994 ] {} <Information> MemoryWorker: Starting background memory thread with period of 50ms, using Cgroups as source

2025.02.07 15:37:09.207093 [ 11994 ] {} <Information> BackgroundSchedulePool/BgSchPool: Create BackgroundSchedulePool with 512 threads

2025.02.07 15:37:09.258552 [ 12002 ] {} <Information> MemoryTracker: Correcting the value of global memory tracker from 910.00 KiB to 60.72 MiB

2025.02.07 15:37:09.362132 [ 11994 ] {} <Warning> Context: Maximum number of threads is lower than 30000. There could be problems with handling a lot of simultaneous queries.

2025.02.07 15:37:09.362718 [ 11994 ] {} <Information> Application: Lowered uncompressed cache size to 1.66 GiB because the system has limited RAM

2025.02.07 15:37:09.362743 [ 11994 ] {} <Information> Application: Lowered mark cache size to 1.66 GiB because the system has limited RAM

2025.02.07 15:37:09.362751 [ 11994 ] {} <Information> Application: Lowered primary index cache size to 1.66 GiB because the system has limited RAM

2025.02.07 15:37:09.362768 [ 11994 ] {} <Information> Application: Lowered index mark cache size to 1.66 GiB because the system has limited RAM

2025.02.07 15:37:09.362776 [ 11994 ] {} <Information> Application: Lowered skipping index cache size to 1.66 GiB because the system has limited RAM

2025.02.07 15:37:09.382961 [ 11994 ] {} <Information> Application: Setting max_server_memory_usage was set to 2.99 GiB (3.33 GiB available * 0.90 max_server_memory_usage_to_ram_ratio)

2025.02.07 15:37:09.383019 [ 11994 ] {} <Information> Application: Setting merges_mutations_memory_usage_soft_limit was set to 1.66 GiB (3.33 GiB available * 0.50 merges_mutations_memory_usage_to_ram_ratio)

2025.02.07 15:37:09.383030 [ 11994 ] {} <Information> Application: Merges and mutations memory limit is set to 1.66 GiB

2025.02.07 15:37:09.384334 [ 11994 ] {} <Information> Application: ConcurrencyControl limit is set to 4

2025.02.07 15:37:09.384381 [ 11994 ] {} <Information> BackgroundSchedulePool/BgBufSchPool: Create BackgroundSchedulePool with 16 threads

2025.02.07 15:37:09.388312 [ 11994 ] {} <Information> BackgroundSchedulePool/BgMBSchPool: Create BackgroundSchedulePool with 16 threads

2025.02.07 15:37:09.392757 [ 11994 ] {} <Information> BackgroundSchedulePool/BgDistSchPool: Create BackgroundSchedulePool with 16 threads

2025.02.07 15:37:09.400838 [ 11994 ] {} <Information> Application: Listening for replica communication (interserver): http://0.0.0.0:9009

2025.02.07 15:37:09.407870 [ 11994 ] {} <Warning> Access(local_directory): File /data/apps/clickhouse/access/users.list doesn't exist

2025.02.07 15:37:09.407957 [ 11994 ] {} <Warning> Access(local_directory): Recovering lists in directory /data/apps/clickhouse/access/

2025.02.07 15:37:09.408427 [ 11994 ] {} <Information> CgroupsMemoryUsageObserver: Started cgroup current memory usage observer thread

2025.02.07 15:37:09.408918 [ 12568 ] {} <Information> CgroupsMemoryUsageObserver: Memory amount initially available to the process is 3.33 GiB

2025.02.07 15:37:09.414757 [ 11994 ] {} <Information> Context: Initialized background executor for merges and mutations with num_threads=16, num_tasks=32, scheduling_policy=round_robin

2025.02.07 15:37:09.417523 [ 11994 ] {} <Information> Context: Initialized background executor for move operations with num_threads=8, num_tasks=8

2025.02.07 15:37:09.426401 [ 11994 ] {} <Information> Context: Initialized background executor for fetches with num_threads=16, num_tasks=16

2025.02.07 15:37:09.429648 [ 11994 ] {} <Information> Context: Initialized background executor for common operations (e.g. clearing old parts) with num_threads=8, num_tasks=8

2025.02.07 15:37:09.434244 [ 11994 ] {} <Warning> Context: Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

2025.02.07 15:37:09.434374 [ 11994 ] {} <Warning> Context: Linux threads max count is too low. Check /proc/sys/kernel/threads-max

2025.02.07 15:37:09.434957 [ 11994 ] {} <Information> DNSCacheUpdater: Update period 15 seconds

2025.02.07 15:37:09.435013 [ 11994 ] {} <Information> Application: Loading metadata from /var/lib/clickhouse/

2025.02.07 15:37:09.439904 [ 11994 ] {} <Information> Context: Database disk name: default

2025.02.07 15:37:09.439931 [ 11994 ] {} <Information> Context: Database disk name: default, path: /var/lib/clickhouse/

2025.02.07 15:37:09.445390 [ 11994 ] {} <Information> DatabaseAtomic (system): Metadata processed, database system has 0 tables and 0 dictionaries in total.

2025.02.07 15:37:09.445432 [ 11994 ] {} <Information> TablesLoader: Parsed metadata of 0 tables in 1 databases in 9.9468e-05 sec

2025.02.07 15:37:09.513918 [ 11994 ] {} <Information> DatabaseCatalog: Found 0 partially dropped tables. Will load them and retry removal.

2025.02.07 15:37:09.515131 [ 11994 ] {} <Information> DatabaseAtomic (default): Metadata processed, database default has 0 tables and 0 dictionaries in total.

2025.02.07 15:37:09.515179 [ 11994 ] {} <Information> TablesLoader: Parsed metadata of 0 tables in 1 databases in 6.1594e-05 sec

2025.02.07 15:37:09.515247 [ 11994 ] {} <Information> loadMetadata: Start asynchronous loading of databases

2025.02.07 15:37:09.515524 [ 11994 ] {} <Information> UserDefinedSQLObjectsLoaderFromDisk: Loading user defined objects from /data/apps/clickhouse/user_defined/

2025.02.07 15:37:09.515600 [ 11994 ] {} <Information> WorkloadEntityDiskStorage: Loading workload entities from /data/apps/clickhouse/workload/

2025.02.07 15:37:09.528817 [ 11994 ] {} <Information> ZooKeeperClient: Connected to ZooKeeper at 192.168.174.146:19181 with session_id 5

2025.02.07 15:37:09.530591 [ 11994 ] {} <Information> ZooKeeperClient: Keeper feature flag FILTERED_LIST: enabled

2025.02.07 15:37:09.530636 [ 11994 ] {} <Information> ZooKeeperClient: Keeper feature flag MULTI_READ: enabled

2025.02.07 15:37:09.530647 [ 11994 ] {} <Information> ZooKeeperClient: Keeper feature flag CHECK_NOT_EXISTS: disabled

2025.02.07 15:37:09.530655 [ 11994 ] {} <Information> ZooKeeperClient: Keeper feature flag CREATE_IF_NOT_EXISTS: disabled

2025.02.07 15:37:09.530680 [ 11994 ] {} <Information> ZooKeeperClient: Keeper feature flag REMOVE_RECURSIVE: disabled

2025.02.07 15:37:09.561808 [ 11994 ] {} <Information> Application: Tasks stats provider: netlink

2025.02.07 15:37:09.611429 [ 11994 ] {} <Information> CertificateReloader: One of paths is empty. Cannot apply new configuration for certificates. Fill all paths and try again.

2025.02.07 15:37:09.611465 [ 11994 ] {} <Information> CertificateReloader: One of paths is empty. Cannot apply new configuration for certificates. Fill all paths and try again.

2025.02.07 15:37:09.614711 [ 11994 ] {} <Information> Application: Listening for http://0.0.0.0:18123

2025.02.07 15:37:09.614779 [ 11994 ] {} <Information> Application: Listening for native protocol (tcp): 0.0.0.0:19000

2025.02.07 15:37:09.614822 [ 11994 ] {} <Information> Application: Listening for MySQL compatibility protocol: 0.0.0.0:9004

2025.02.07 15:37:09.614886 [ 11994 ] {} <Information> Application: Listening for PostgreSQL compatibility protocol: 0.0.0.0:9005

2025.02.07 15:37:09.614899 [ 11994 ] {} <Information> Application: Ready for connections.

2025.02.07 15:37:16.490627 [ 12645 ] {} <Information> StoragePolicySelector: Storage policy `triple_disk` loaded

2025.02.07 15:37:51.516798 [ 12179 ] {} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Selected 6 parts from 202502_1_1_0 to 202502_6_6_0. Merge selecting phase took: 0ms

2025.02.07 15:37:51.524227 [ 12569 ] {c039ceaf-6920-4be1-83be-4c1d7b887a04::202502_1_6_1} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Merged 6 parts: [202502_1_1_0, 202502_6_6_0] -> 202502_1_6_1

2025.02.07 15:37:55.072060 [ 12192 ] {} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Selected 6 parts from 202502_1_1_0 to 202502_6_6_0. Merge selecting phase took: 0ms

2025.02.07 15:37:55.378443 [ 12581 ] {5eee69d4-03c3-4613-8420-f0d611c898ed::202502_1_6_1} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Merged 6 parts: [202502_1_1_0, 202502_6_6_0] -> 202502_1_6_1

2025.02.07 15:38:17.177227 [ 12281 ] {} <Information> system.trace_log (1f024209-efb5-42f8-b691-dee7f3ce96bb) (MergerMutator): Selected 5 parts from 202502_1_1_0 to 202502_5_5_0. Merge selecting phase took: 0ms

2025.02.07 15:38:17.180871 [ 12571 ] {1f024209-efb5-42f8-b691-dee7f3ce96bb::202502_1_5_1} <Information> system.trace_log (1f024209-efb5-42f8-b691-dee7f3ce96bb) (MergerMutator): Merged 5 parts: [202502_1_1_0, 202502_5_5_0] -> 202502_1_5_1

2025.02.07 15:38:26.533564 [ 12320 ] {} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Selected 6 parts from 202502_1_6_1 to 202502_11_11_0. Merge selecting phase took: 0ms

2025.02.07 15:38:26.539759 [ 12576 ] {c039ceaf-6920-4be1-83be-4c1d7b887a04::202502_1_11_2} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Merged 6 parts: [202502_1_6_1, 202502_11_11_0] -> 202502_1_11_2

2025.02.07 15:38:32.987208 [ 12346 ] {} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Selected 6 parts from 202502_1_6_1 to 202502_11_11_0. Merge selecting phase took: 0ms

2025.02.07 15:38:33.284665 [ 12570 ] {5eee69d4-03c3-4613-8420-f0d611c898ed::202502_1_11_2} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Merged 6 parts: [202502_1_6_1, 202502_11_11_0] -> 202502_1_11_2

2025.02.07 15:38:39.544778 [ 12378 ] {} <Information> system.text_log (caaafd97-98b8-4a17-96cb-85848ef51533) (MergerMutator): Selected 6 parts from 202502_1_1_0 to 202502_6_6_0. Merge selecting phase took: 0ms

2025.02.07 15:38:39.557569 [ 12569 ] {caaafd97-98b8-4a17-96cb-85848ef51533::202502_1_6_1} <Information> system.text_log (caaafd97-98b8-4a17-96cb-85848ef51533) (MergerMutator): Merged 6 parts: [202502_1_1_0, 202502_6_6_0] -> 202502_1_6_1

2025.02.07 15:39:01.557615 [ 12469 ] {} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Selected 6 parts from 202502_1_11_2 to 202502_16_16_0. Merge selecting phase took: 0ms

2025.02.07 15:39:01.567211 [ 12574 ] {c039ceaf-6920-4be1-83be-4c1d7b887a04::202502_1_16_3} <Information> system.asynchronous_metric_log (c039ceaf-6920-4be1-83be-4c1d7b887a04) (MergerMutator): Merged 6 parts: [202502_1_11_2, 202502_16_16_0] -> 202502_1_16_3

2025.02.07 15:39:10.898016 [ 12004 ] {} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Selected 6 parts from 202502_1_11_2 to 202502_16_16_0. Merge selecting phase took: 0ms

2025.02.07 15:39:11.123129 [ 12582 ] {5eee69d4-03c3-4613-8420-f0d611c898ed::202502_1_16_3} <Information> system.metric_log (5eee69d4-03c3-4613-8420-f0d611c898ed) (MergerMutator): Merged 6 parts: [202502_1_11_2, 202502_16_16_0] -> 202502_1_16_3

2025.02.07 15:39:17.057435 [ 12027 ] {} <Information> system.trace_log (1f024209-efb5-42f8-b691-dee7f3ce96bb) (MergerMutator): Selected 6 parts from 202502_1_5_1 to 202502_10_10_0. Merge selecting phase took: 0ms

2025.02.07 15:39:17.061390 [ 12569 ] {1f024209-efb5-42f8-b691-dee7f3ce96bb::202502_1_10_2} <Information> system.trace_log (1f024209-efb5-42f8-b691-dee7f3ce96bb) (MergerMutator): Merged 6 parts: [202502_1_5_1, 202502_10_10_0] -> 202502_1_10_2

Verify the ClickHouse cluster

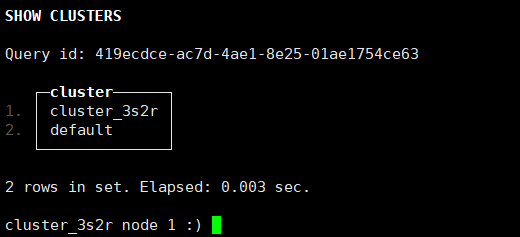

View the clusters

SHOW clusters;

View the cluster nodes

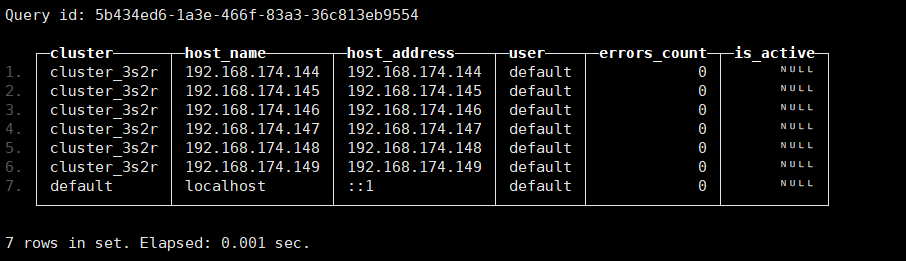

select cluster,host_name,host_address,user, errors_count, is_active from system.clusters;
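You can also confirm the planned 3-shard, 2-replica layout by counting the hosts for each shard_num in system.clusters. The function below is a minimal sketch. It assumes the query result is piped in with one shard_num per line. `check_topology` is a hypothetical helper name and is not part of ClickHouse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks the shard/replica layout of a cluster definition.
# Input on stdin: one shard_num per line (e.g. TSV output of system.clusters).
# Arguments: expected number of shards, expected replicas per shard.
check_topology() {
  local want_shards=$1 want_replicas=$2
  awk -v s="$want_shards" -v r="$want_replicas" '
    { count[$1]++ }                      # one row per host, grouped by shard_num
    END {
      n = 0
      for (k in count) {
        n++
        if (count[k] != r) {
          printf "shard %s has %d replicas, expected %d\n", k, count[k], r
          bad = 1
        }
      }
      if (n != s) { printf "found %d shards, expected %d\n", n, s; bad = 1 }
      exit bad                           # 0 when the layout matches
    }'
}
```

For example, run `clickhouse-client --host 192.168.174.144 --port 19000 --query "SELECT shard_num FROM system.clusters WHERE cluster = 'cluster_3s2r' FORMAT TSV" | check_topology 3 2`. A non-zero exit status means the shard or replica count does not match the node plan.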

Create the local tables

Connect to the ClickHouse server

clickhouse-client --host 192.168.174.144 --port 19000

ClickHouse client version 25.1.3.23 (official build).

Connecting to 192.168.174.144:19000 as user default.

Connected to ClickHouse server version 25.1.3.

cluster_3s2r node 1 :)

Check the volume types of the storage policies

SELECT policy_name, volume_name, volume_type, load_balancing, disks

FROM system.storage_policies;

Query id: 809a745e-b0fe-4123-bc30-a0e7bd188d24

| | policy_name | volume_name | volume_type | load_balancing | disks |
|---|---|---|---|---|---|
| 1. | default | default | JBOD | ROUND_ROBIN | ['default'] |
| 2. | triple_disk | triple_volume | JBOD | ROUND_ROBIN | ['disk1','disk2','disk3'] |

2 rows in set. Elapsed: 0.001 sec.

Create wgs_db

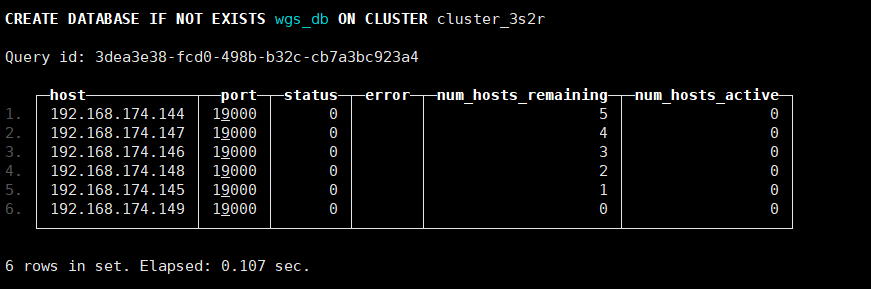

Use ON CLUSTER to create the database on every node of the cluster in one statement:

CREATE DATABASE IF NOT EXISTS wgs_db ON CLUSTER cluster_3s2r;

Create the local table

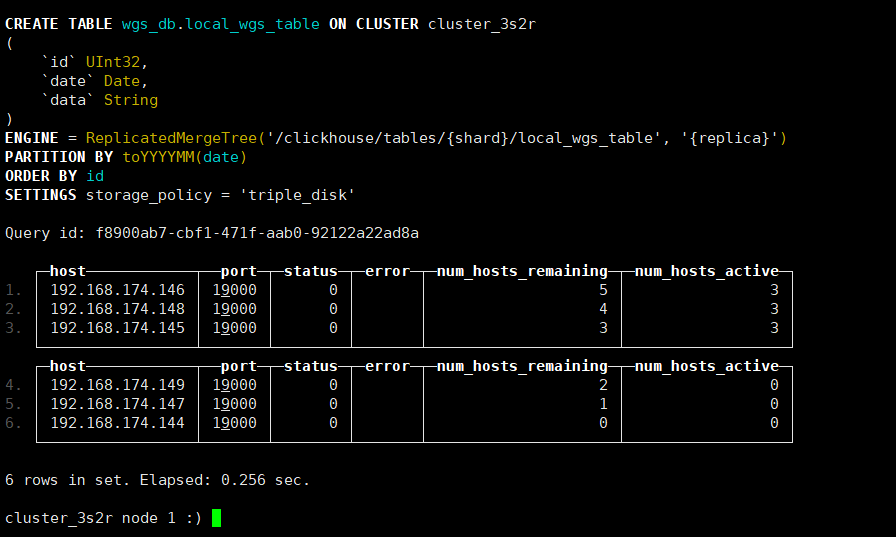

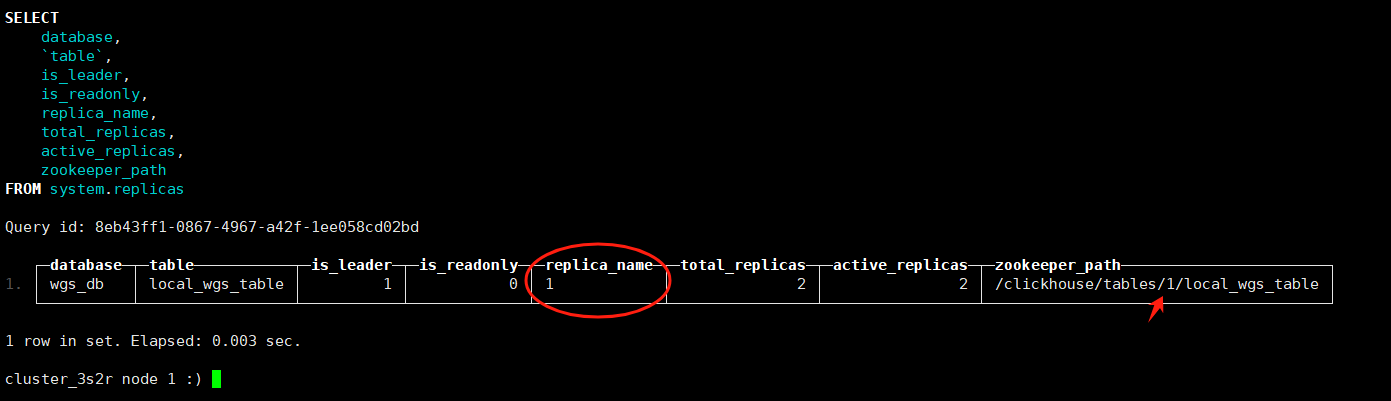

Use ON CLUSTER to create the local table on every node of the cluster:

CREATE TABLE wgs_db.local_wgs_table ON CLUSTER cluster_3s2r

(

id UInt32,

date Date,

data String

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/local_wgs_table', '{replica}')

PARTITION BY toYYYYMM(date)

ORDER BY id

SETTINGS storage_policy = 'triple_disk'; -- use the predefined storage policy
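With storage_policy = 'triple_disk', new parts of this table are written to the JBOD volume triple_volume. The volume uses ROUND_ROBIN load balancing, so it rotates over disk1, disk2 and disk3. The toy model below only illustrates that rotation. It assumes every disk always has enough free space, and it ignores ClickHouse's space reservation, merges and part moves, so real placement (including which disk comes first) can differ. `assign_disks` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Toy model of ROUND_ROBIN part placement on a JBOD volume (hypothetical helper).
# Assumption: every disk has free space, so the rotation never skips a disk.
assign_disks() {
  local disks=(disk1 disk2 disk3)   # disks of triple_volume, in policy order
  local n=${#disks[@]} i=0 part
  for part in "$@"; do
    # Each new part goes to the next disk in turn, wrapping around after disk3.
    printf '%s -> %s\n' "$part" "${disks[i % n]}"
    i=$((i + 1))
  done
}
```

In this model, `assign_disks a b c d` places a, b and c on disk1, disk2 and disk3, then wraps around and places d on disk1.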

Verify that the table was created

SHOW TABLES FROM wgs_db;

View the table structure

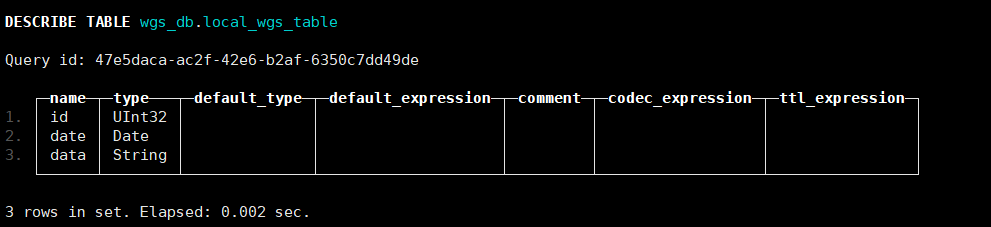

DESCRIBE TABLE wgs_db.local_wgs_table;

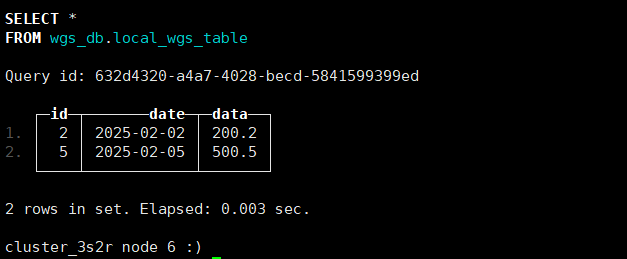

Verify the replica status

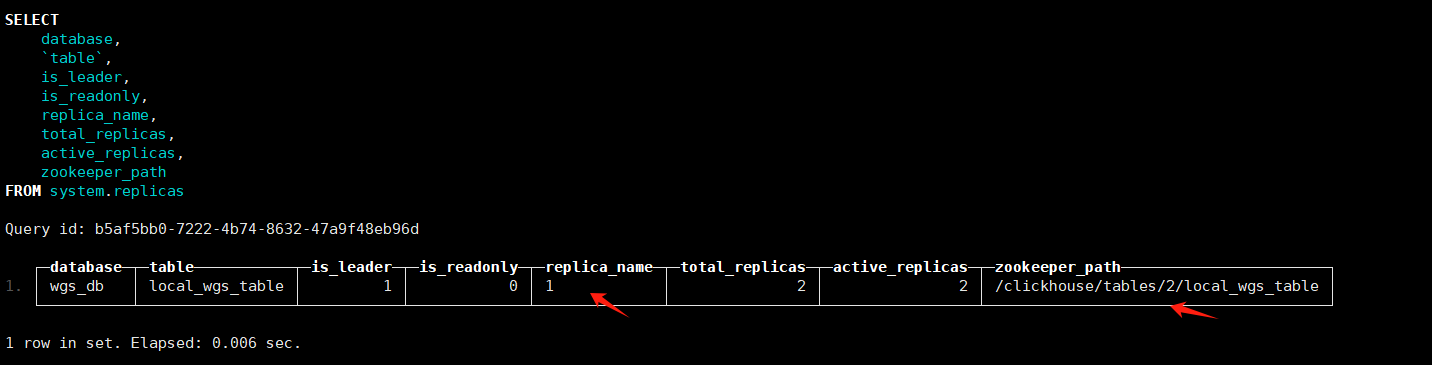

The output should show every replica as active and writable (is_readonly = 0), with active_replicas equal to total_replicas.

shard 1

shard 2

SELECT database, table, is_leader, is_readonly, replica_name, total_replicas, active_replicas, zookeeper_path

FROM system.replicas;

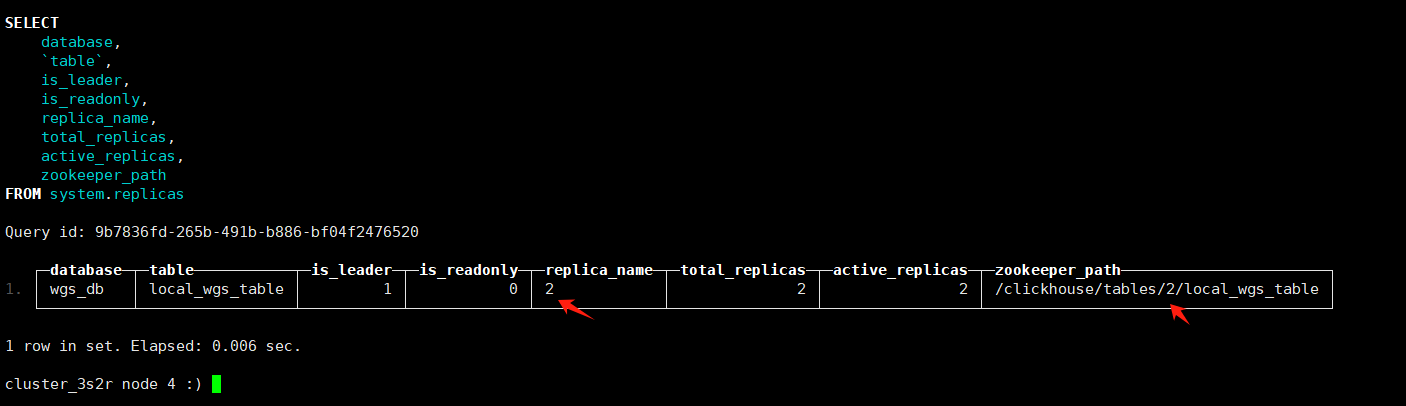

shard 3

SELECT database, table, is_leader, is_readonly, replica_name, total_replicas, active_replicas, zookeeper_path

FROM system.replicas;

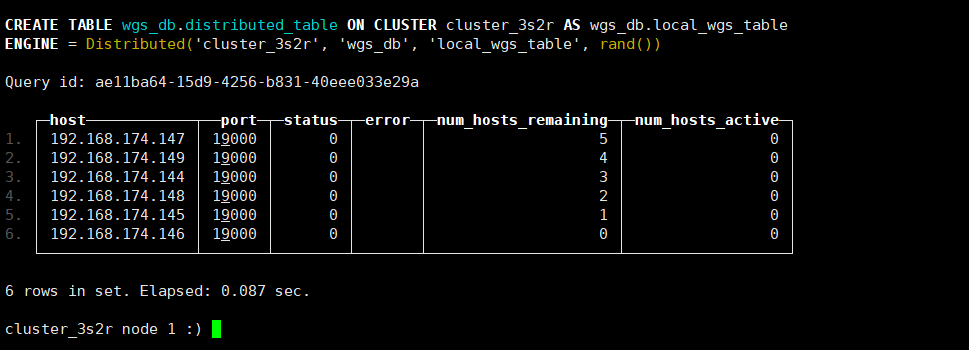
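The replica check above can also be scripted. This is a minimal sketch. It assumes the rows of system.replicas are exported as TSV with the columns database, table, is_readonly, total_replicas and active_replicas. `check_replicas` is a hypothetical helper. It reports any replica that is read-only, or whose shard has fewer active replicas than configured.

```shell
#!/usr/bin/env bash
# Hypothetical helper. Input on stdin: TSV rows of
#   database, table, is_readonly, total_replicas, active_replicas
# Prints every unhealthy row and exits non-zero if there is at least one.
check_replicas() {
  awk -F'\t' '
    $3 != 0 || $4 != $5 {
      printf "unhealthy: %s.%s (is_readonly=%s, active %s of %s)\n", $1, $2, $3, $5, $4
      bad = 1
    }
    END { exit bad }'
}
```

On each node, pipe the query into the function: `clickhouse-client --host 192.168.174.144 --port 19000 --query "SELECT database, table, is_readonly, total_replicas, active_replicas FROM system.replicas FORMAT TSV" | check_replicas`.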

Create the distributed table

Create the table

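Use ON CLUSTER to create the table on every node of the cluster:

CREATE TABLE wgs_db.distributed_table ON CLUSTER cluster_3s2r AS wgs_db.local_wgs_table

ENGINE = Distributed(

'cluster_3s2r',    -- cluster name

'wgs_db',          -- database of the local table

'local_wgs_table', -- local table name

rand()             -- sharding key (random distribution)

);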
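The Distributed engine routes each inserted row as follows. It divides the sharding-key value (here rand()) by the total weight of the shards and sends the row to the shard whose weight interval contains the remainder. The sketch below reproduces that arithmetic. It assumes cluster_3s2r gives each of its three shards the default weight of 1, since the shard weights are not shown in this post. `shard_for_key` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Maps a sharding-key value to a 1-based shard number: remainder of
# key / total weight, then the shard whose weight interval contains it.
# Assumption: three shards, each with the default weight 1.
SHARD_WEIGHTS=(1 1 1)

shard_for_key() {
  local key=$1 total=0 acc=0 w i rem
  for w in "${SHARD_WEIGHTS[@]}"; do
    total=$((total + w))
  done
  rem=$((key % total))
  for i in "${!SHARD_WEIGHTS[@]}"; do
    acc=$((acc + SHARD_WEIGHTS[i]))
    # Shard i+1 owns the remainders in [acc - weight, acc).
    if ((rem < acc)); then
      echo $((i + 1))
      return 0
    fi
  done
}
```

With equal weights and a uniformly random key, each shard receives about a third of the rows on average. A small insert such as the six test rows can still split unevenly.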

Insert test data

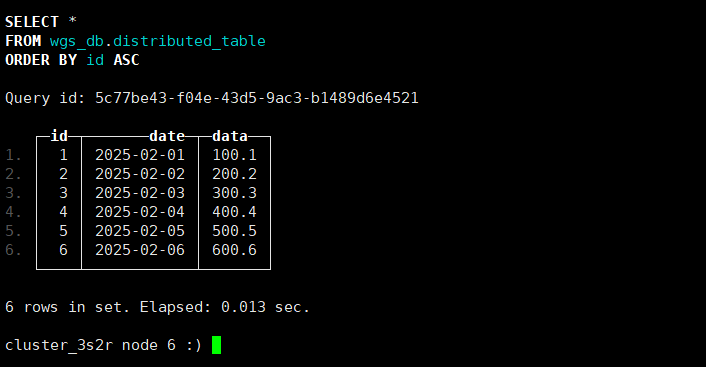

INSERT INTO wgs_db.distributed_table VALUES (1, '2025-02-01', '100.1'), (2, '2025-02-02', '200.2'), (3, '2025-02-03', '300.3'), (4, '2025-02-04', '400.4'), (5, '2025-02-05', '500.5'), (6, '2025-02-06', '600.6');

INSERT INTO wgs_db.distributed_table FORMAT Values

Query id: 2d51614c-a7ba-4250-a3c4-d41f64454354

Ok.

6 rows in set. Elapsed: 0.022 sec.

cluster_3s2r node 1 :)

Query the distributed table

SELECT * FROM wgs_db.distributed_table ORDER BY id;

Check where the tables in wgs_db are stored

SELECT *

FROM system.tables

WHERE database = 'wgs_db';

Row 1:

──────

database: wgs_db

name: distributed_table

uuid: ca16b902-bcae-46d3-880a-08906e45c733

engine: Distributed

is_temporary: 0

data_paths: ['/data/apps/clickhouse/clickhouse-server/store/ca1/ca16b902-bcae-46d3-880a-08906e45c733/']

metadata_path: store/b96/b9699d72-3b0c-4699-a1a0-916b2f3c63cb/distributed_table.sql

metadata_modification_time: 2025-03-11 18:32:22

metadata_version: 0

dependencies_database: []

dependencies_table: []

create_table_query: CREATE TABLE wgs_db.distributed_table (`id` UInt32, `date` Date, `data` String) ENGINE = Distributed('cluster_3s2r', 'wgs_db', 'local_wgs_table', rand())

engine_full: Distributed('cluster_3s2r', 'wgs_db', 'local_wgs_table', rand())

as_select:

partition_key:

sorting_key:

primary_key:

sampling_key:

storage_policy: default

total_rows: ᴺᵁᴸᴸ

total_bytes: 0

total_bytes_uncompressed: ᴺᵁᴸᴸ

parts: ᴺᵁᴸᴸ

active_parts: ᴺᵁᴸᴸ

total_marks: ᴺᵁᴸᴸ

active_on_fly_data_mutations: 0

active_on_fly_metadata_mutations: 0

lifetime_rows: ᴺᵁᴸᴸ

lifetime_bytes: ᴺᵁᴸᴸ

comment:

has_own_data: 1

loading_dependencies_database: []

loading_dependencies_table: []

loading_dependent_database: []

loading_dependent_table: []

Row 2:

──────

database: wgs_db

name: local_wgs_table

uuid: a6cd7c93-0e9d-408b-9d46-0f0179351ff8

engine: ReplicatedMergeTree

is_temporary: 0

data_paths: ['/data/apps/disk1/clickhouse/store/a6c/a6cd7c93-0e9d-408b-9d46-0f0179351ff8/','/data/apps/disk2/clickhouse/store/a6c/a6cd7c93-0e9d-408b-9d46-0f0179351ff8/','/data/apps/disk3/clickhouse/store/a6c/a6cd7c93-0e9d-408b-9d46-0f0179351ff8/']

metadata_path: store/b96/b9699d72-3b0c-4699-a1a0-916b2f3c63cb/local_wgs_table.sql

metadata_modification_time: 2025-03-11 18:31:10

metadata_version: 0

dependencies_database: []

dependencies_table: []

create_table_query: CREATE TABLE wgs_db.local_wgs_table (`id` UInt32, `date` Date, `data` String) ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/local_wgs_table', '{replica}') PARTITION BY toYYYYMM(date) ORDER BY id SETTINGS storage_policy = 'triple_disk', index_granularity = 8192

engine_full: ReplicatedMergeTree('/clickhouse/tables/{shard}/local_wgs_table', '{replica}') PARTITION BY toYYYYMM(date) ORDER BY id SETTINGS storage_policy = 'triple_disk', index_granularity = 8192

as_select:

partition_key: toYYYYMM(date)

sorting_key: id

primary_key: id

sampling_key:

storage_policy: triple_disk

total_rows: 2

total_bytes: 427

total_bytes_uncompressed: 144

parts: 1

active_parts: 1

total_marks: 2

active_on_fly_data_mutations: 0

active_on_fly_metadata_mutations: 0

lifetime_rows: ᴺᵁᴸᴸ

lifetime_bytes: ᴺᵁᴸᴸ

comment:

has_own_data: 1

loading_dependencies_database: []

loading_dependencies_table: []

loading_dependent_database: []

loading_dependent_table: []

2 rows in set. Elapsed: 0.004 sec.

Query the local tables

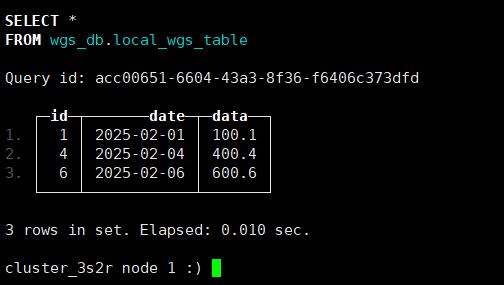

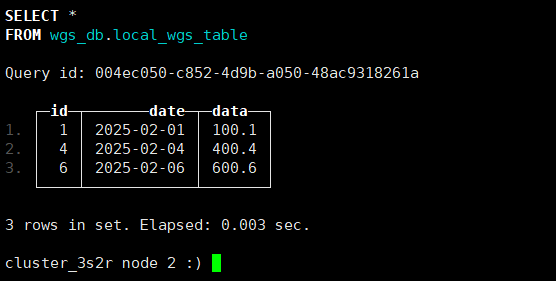

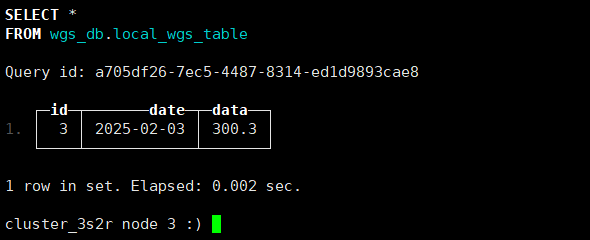

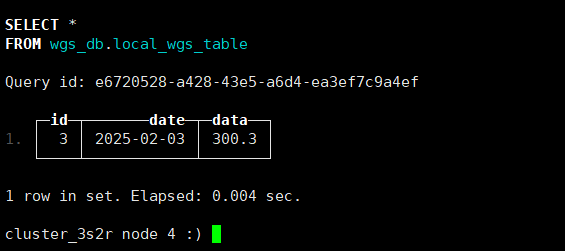

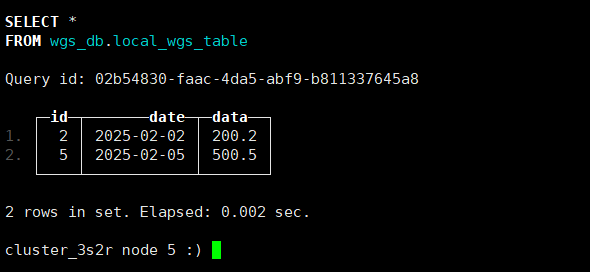

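The data is spread across the 3 shards, and the two replicas of each shard hold identical data. Query the local table on each node:

select * from wgs_db.local_wgs_table;

shard1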

shard2

shard3
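To confirm that no rows were lost while spreading them over the shards, compare the sum of the per-shard local-table counts with count() on the distributed table. The sketch below queries one replica per shard, namely the replica-1 hosts from the node plan. It assumes clickhouse-client can reach them on port 19000 as the default user. `SHARD_HOSTS`, `run_query` and `check_row_totals` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical: one replica per shard (replica 1 of shards 1-3 in the node plan).
SHARD_HOSTS=(192.168.174.144 192.168.174.146 192.168.174.148)

# Runs one query against one node; port 19000 as configured in this deployment.
run_query() {
  clickhouse-client --host "$1" --port 19000 --query "$2"
}

# Sums count() of the local table over the shards and compares the result
# with count() of the distributed table. Returns non-zero on a mismatch.
check_row_totals() {
  local sum=0 host n total
  for host in "${SHARD_HOSTS[@]}"; do
    n=$(run_query "$host" "SELECT count() FROM wgs_db.local_wgs_table")
    echo "$host: $n rows"
    sum=$((sum + n))
  done
  total=$(run_query "${SHARD_HOSTS[0]}" "SELECT count() FROM wgs_db.distributed_table")
  echo "sum of shards: $sum, distributed table: $total"
  [ "$sum" -eq "$total" ]
}
```

Both replicas of a shard hold the same data, so querying one of them per shard is enough. `run_query` is kept separate so that it can be pointed at other hosts or credentials.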

Check disk storage

View the table storage

ls -l /data/apps/clickhouse/clickhouse-server/data/

total 8

drwxr-x--- 2 clickhouse clickhouse 4096 Mar 11 18:32 system

drwxr-x--- 2 clickhouse clickhouse 4096 Mar 11 18:32 wgs_db

View the data storage

ls -l /data/apps/clickhouse/clickhouse-server/data/wgs_db/

total 8

lrwxrwxrwx 1 clickhouse clickhouse 86 Mar 11 18:32 distributed_table -> /data/apps/clickhouse/clickhouse-server/store/ca1/ca16b902-bcae-46d3-880a-08906e45c733

lrwxrwxrwx 1 clickhouse clickhouse 74 Mar 11 18:31 local_wgs_table -> /data/apps/disk1/clickhouse/store/a6c/a6cd7c93-0e9d-408b-9d46-0f0179351ff8

Remove the ClickHouse cluster

systemctl stop clickhouse-server clickhouse-keeper

systemctl disable clickhouse-server clickhouse-keeper

yum remove -y clickhouse-server clickhouse-client

rm -rf /var/lib/clickhouse* /data/apps/clickhouse* /data/apps/disk* /etc/clickhouse*

References

https://clickhouse.com/docs/en/architecture/cluster-deployment

https://clickhouse.com/docs/en/install#available-installation-options
