Reference: https://www.computervision.zone/topic/volumehandcontrol-py/

Main script:

VolumeHandControl.py

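```python
import cv2
import time
import numpy as np
import HandTrackingModule as htm  # the helper module listed below
import math
from ctypes import cast, POINTER
from comtypes import CLSCTX_ALL
from pycaw.pycaw import AudioUtilities, IAudioEndpointVolume  # Windows-only audio control

################################
wCam, hCam = 640, 480  # requested camera resolution
################################

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, wCam)   # property id 3
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, hCam)  # property id 4
pTime = 0  # time of the previous frame, used for the FPS counter

detector = htm.handDetector(min_detection_confidence=0.7)
```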

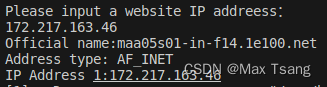

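```python
# Get the default speaker and its endpoint-volume interface (Windows only)
devices = AudioUtilities.GetSpeakers()
interface = devices.Activate(IAudioEndpointVolume._iid_, CLSCTX_ALL, None)
volume = cast(interface, POINTER(IAudioEndpointVolume))
# volume.GetMute()
# volume.GetMasterVolumeLevel()

# GetVolumeRange() returns (min dB, max dB, step dB); the range depends on the device
volRange = volume.GetVolumeRange()
minVol = volRange[0]
maxVol = volRange[1]

vol = 0
volBar = 400  # y coordinate of the top of the filled volume bar (400 = empty)
volPer = 0    # volume as a percentage, drawn under the bar
```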

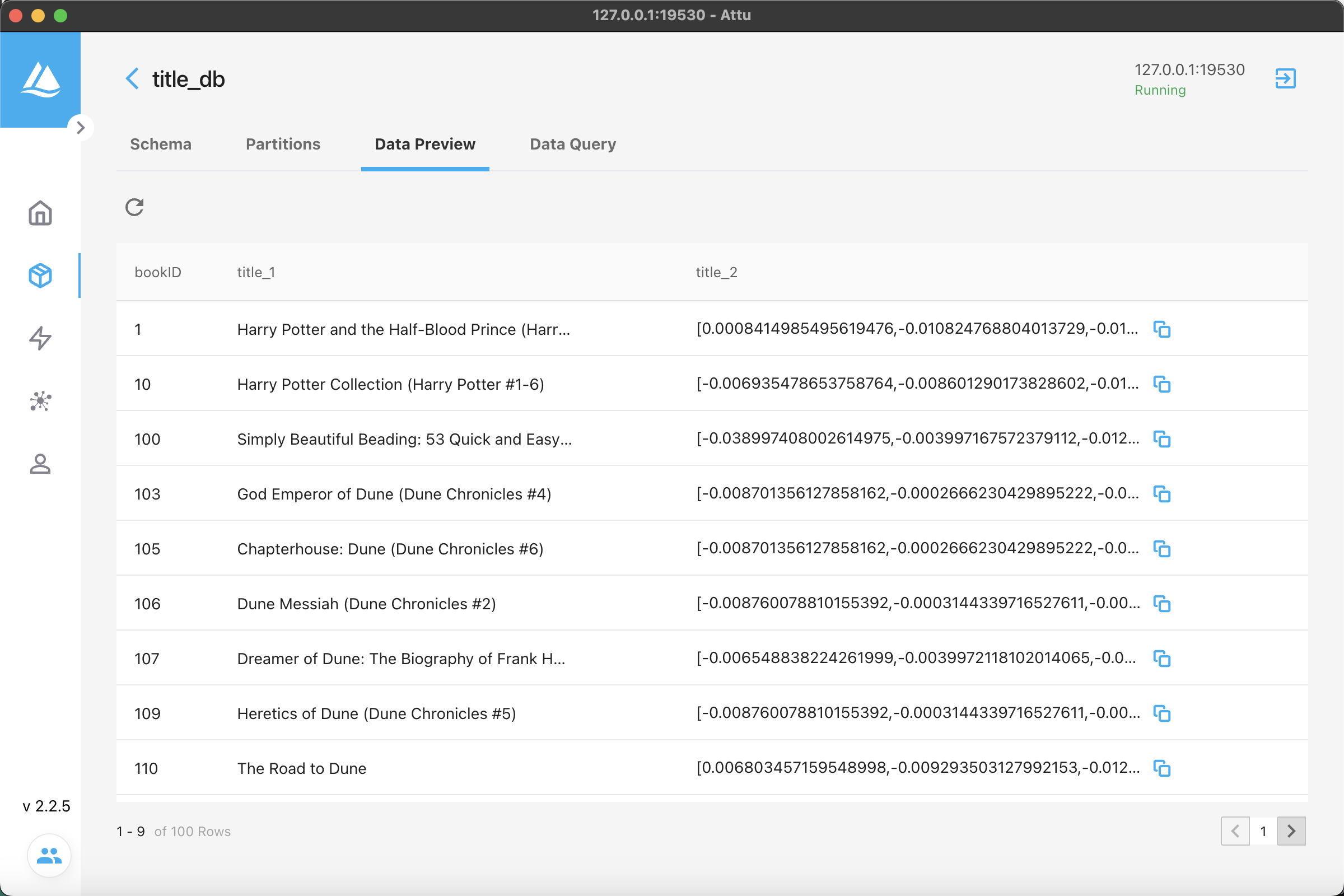

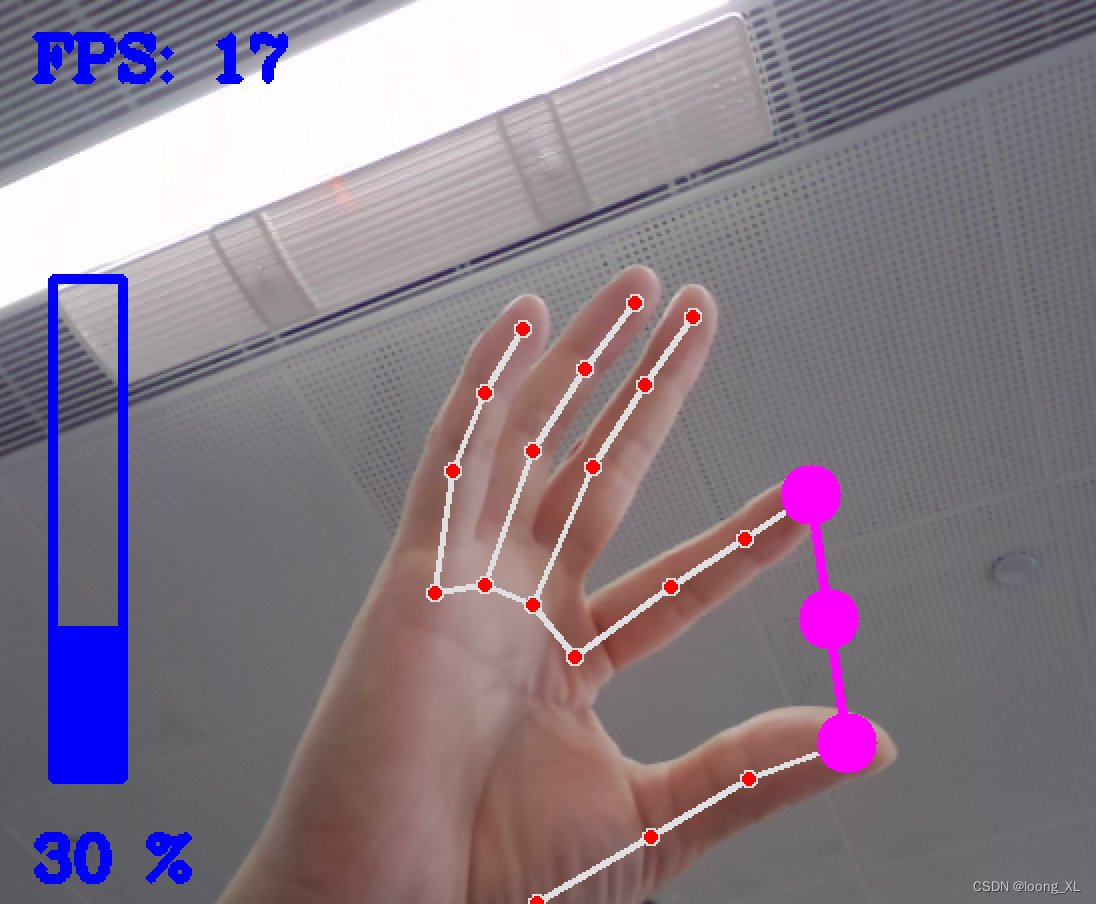

```python
while True:
    success, img = cap.read()
    if not success:  # no frame from the camera
        break
    img = detector.findHands(img)
    # findPosition returns (landmark list, bounding box)
    lmList, bbox = detector.findPosition(img, draw=False)
    # print(lmList)
    if len(lmList) != 0:
        # Landmark 4 is the thumb tip, landmark 8 the index fingertip
        x1, y1 = lmList[4][1], lmList[4][2]
        x2, y2 = lmList[8][1], lmList[8][2]
        cx, cy = (x1 + x2) // 2, (y1 + y2) // 2

        cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)
        cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)
        cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)
        cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)

        length = math.hypot(x2 - x1, y2 - y1)
        # print(x2 - x1, y2 - y1)

        # Hand range 50 - 300 px
        # Volume range -65 - 0 dB
        vol = np.interp(length, [50, 300], [minVol, maxVol])
        volBar = np.interp(length, [50, 300], [400, 150])
        volPer = np.interp(length, [50, 300], [0, 100])
        # print(int(length), vol)
        volume.SetMasterVolumeLevel(vol, None)

        if length < 50:
            # Fingers pinched together: turn the midpoint green
            cv2.circle(img, (cx, cy), 15, (0, 255, 0), cv2.FILLED)

    # Volume bar (outline and filled part) with the percentage below it
    cv2.rectangle(img, (50, 150), (85, 400), (255, 0, 0), 3)
    cv2.rectangle(img, (50, int(volBar)), (85, 400), (255, 0, 0), cv2.FILLED)
    cv2.putText(img, f'{int(volPer)} %', (40, 450), cv2.FONT_HERSHEY_COMPLEX,
                1, (255, 0, 0), 3)

    # Frames per second from the time between consecutive frames
    cTime = time.time()
    fps = 1 / (cTime - pTime)
    pTime = cTime
    cv2.putText(img, f'FPS: {int(fps)}', (40, 50), cv2.FONT_HERSHEY_COMPLEX,
                1, (255, 0, 0), 3)

    cv2.imshow("Img", img)
    # A single waitKey call per frame; press q to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```
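The loop maps the thumb-to-index distance linearly onto three ranges with `np.interp`, which also clamps: a distance below 50 px gives the first value and anything above 300 px gives the last. The sketch below pulls that arithmetic out of the OpenCV and pycaw code so it can be checked without a camera or Windows. `interp_clamped` and `pinch_to_volume` are hypothetical helper names; the 50 to 300 px hand range comes from the script, while the -65.25 dB minimum in the usage line is only an assumed example value (the real one comes from `volume.GetVolumeRange()`).

```python
import math


def interp_clamped(x, x0, x1, y0, y1):
    """Linear interpolation from [x0, x1] to [y0, y1], clamped at both ends.
    Matches np.interp(x, [x0, x1], [y0, y1]) when x0 < x1."""
    if x <= x0:
        return y0
    if x >= x1:
        return y1
    return y0 + (x - x0) * (y1 - y0) / (x1 - x0)


def pinch_to_volume(lmList, min_vol, max_vol, hand_range=(50, 300)):
    """Hypothetical helper: map the thumb-tip (4) to index-tip (8) distance
    to (length, volume in dB, bar top y, percent), as the main loop does."""
    x1, y1 = lmList[4][1], lmList[4][2]
    x2, y2 = lmList[8][1], lmList[8][2]
    length = math.hypot(x2 - x1, y2 - y1)
    lo, hi = hand_range
    vol = interp_clamped(length, lo, hi, min_vol, max_vol)
    vol_bar = interp_clamped(length, lo, hi, 400, 150)  # bar top moves up as volume rises
    vol_per = interp_clamped(length, lo, hi, 0, 100)
    return length, vol, vol_bar, vol_per


if __name__ == "__main__":
    # Synthetic landmark list in the [id, x, y] format; only ids 4 and 8 matter here
    lm = [[i, 0, 0] for i in range(21)]
    lm[4] = [4, 100, 200]
    lm[8] = [8, 100, 25]  # 175 px straight above the thumb tip
    print(pinch_to_volume(lm, -65.25, 0.0))  # (175.0, -32.625, 275.0, 50.0)
```

A distance of 175 px sits halfway through the 50 to 300 px range, so every output lands halfway through its own range.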

HandTrackingModule.py

```python
import cv2
import mediapipe as mp
import time
import math


class handDetector():
    def __init__(self, mode=False, max_num_hands=2, min_detection_confidence=0.5, min_tracking_confidence=0.5):
        self.mode = mode
        self.max_num_hands = max_num_hands
        self.min_detection_confidence = min_detection_confidence
        self.min_tracking_confidence = min_tracking_confidence

        self.mpHands = mp.solutions.hands
        self.hands = self.mpHands.Hands(static_image_mode=self.mode,
                                        max_num_hands=self.max_num_hands,
                                        min_detection_confidence=self.min_detection_confidence,
                                        min_tracking_confidence=self.min_tracking_confidence)
        self.mpDraw = mp.solutions.drawing_utils
        self.tipIds = [4, 8, 12, 16, 20]  # thumb, index, middle, ring, pinky tips

    def findHands(self, img, draw=True):
        # MediaPipe expects RGB; OpenCV delivers BGR
        imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        self.results = self.hands.process(imgRGB)
        # print(self.results.multi_hand_landmarks)
        if self.results.multi_hand_landmarks:
            for handLms in self.results.multi_hand_landmarks:
                if draw:
                    self.mpDraw.draw_landmarks(img, handLms, self.mpHands.HAND_CONNECTIONS)
        return img

    def findPosition(self, img, handNo=0, draw=True):
        """Return ([[id, x, y], ...], bbox) in pixels for hand handNo. Call after findHands."""
        xList = []
        yList = []
        bbox = []
        self.lmList = []
        if self.results.multi_hand_landmarks:
            myHand = self.results.multi_hand_landmarks[handNo]
            h, w, c = img.shape
            for id, lm in enumerate(myHand.landmark):
                # Landmarks are normalized to [0, 1]; convert to pixel coordinates
                cx, cy = int(lm.x * w), int(lm.y * h)
                xList.append(cx)
                yList.append(cy)
                self.lmList.append([id, cx, cy])
                if draw:
                    cv2.circle(img, (cx, cy), 5, (255, 0, 255), cv2.FILLED)
            xmin, xmax = min(xList), max(xList)
            ymin, ymax = min(yList), max(yList)
            bbox = xmin, ymin, xmax, ymax
            if draw:
                cv2.rectangle(img, (bbox[0] - 20, bbox[1] - 20),
                              (bbox[2] + 20, bbox[3] + 20), (0, 255, 0), 2)
        return self.lmList, bbox

    def fingersUp(self):
        """Return [thumb, index, middle, ring, pinky], 1 = raised. Assumes lmList is not empty."""
        fingers = []
        # Thumb: compare x of the tip (4) with the joint below it (3)
        if self.lmList[self.tipIds[0]][1] > self.lmList[self.tipIds[0] - 1][1]:
            fingers.append(1)
        else:
            fingers.append(0)
        # 4 fingers: tip above (smaller y than) the joint two landmarks down
        for id in range(1, 5):
            if self.lmList[self.tipIds[id]][2] < self.lmList[self.tipIds[id] - 2][2]:
                fingers.append(1)
            else:
                fingers.append(0)
        return fingers

    def findDistance(self, p1, p2, img, draw=True):
        """Pixel distance between landmarks p1 and p2, the image, and the points used."""
        x1, y1 = self.lmList[p1][1], self.lmList[p1][2]
        x2, y2 = self.lmList[p2][1], self.lmList[p2][2]
        cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
        if draw:
            cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)
            cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)
            cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)
            cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)
        length = math.hypot(x2 - x1, y2 - y1)
        return length, img, [x1, y1, x2, y2, cx, cy]


def main():
    pTime = 0
    cap = cv2.VideoCapture(0)
    detector = handDetector()
    while True:
        success, img = cap.read()
        if not success:
            break
        img = detector.findHands(img)
        lmList, bbox = detector.findPosition(img)  # unpack the (list, bbox) tuple
        if len(lmList) != 0:
            print(lmList[4])  # thumb tip as [id, x, y]
        cTime = time.time()
        fps = 1 / (cTime - pTime)
        pTime = cTime
        cv2.putText(img, str(int(fps)), (10, 70), cv2.FONT_HERSHEY_PLAIN, 3,
                    (255, 0, 255), 3)
        cv2.imshow("Image", img)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```
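`fingersUp` relies on MediaPipe's hand landmark numbering: the fingertips are 4, 8, 12, 16 and 20, the thumb's IP joint is tip - 1 (3), and each other finger's PIP joint is tip - 2 (6, 10, 14, 18). Because image y grows downward, a fingertip with a smaller y than its PIP joint counts as raised. The thumb is tested on x instead, so its result will likely flip between left and right hands (and between mirrored and unmirrored frames). The standalone sketch below reproduces the same rule on a plain `[[id, x, y], ...]` list so it can be tried without a camera; `fingers_up`, `TIP_IDS` and the synthetic landmarks are hypothetical, for illustration only.

```python
# Hypothetical standalone version of handDetector.fingersUp
TIP_IDS = [4, 8, 12, 16, 20]  # thumb, index, middle, ring, pinky tips (MediaPipe numbering)


def fingers_up(lmList):
    """Return [thumb, index, middle, ring, pinky] with 1 = raised,
    using the same rule as handDetector.fingersUp."""
    fingers = []
    # Thumb: tip (4) to the right of its IP joint (3) in image x
    fingers.append(1 if lmList[TIP_IDS[0]][1] > lmList[TIP_IDS[0] - 1][1] else 0)
    # Other fingers: tip above (smaller y than) the PIP joint two ids earlier
    for tip in TIP_IDS[1:]:
        fingers.append(1 if lmList[tip][2] < lmList[tip - 2][2] else 0)
    return fingers


if __name__ == "__main__":
    # Synthetic hand: every landmark at (100, 300), then raise index and middle
    lm = [[i, 100, 300] for i in range(21)]
    lm[8] = [8, 100, 150]    # index tip well above its PIP joint (6)
    lm[12] = [12, 110, 140]  # middle tip above its PIP joint (10)
    print(fingers_up(lm))    # [0, 1, 1, 0, 0]
```

Counting `sum(fingers_up(lm))` then gives the number of raised fingers, which is a common way to use this kind of list.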