1. Write the Dockerfile

root@ubuntu:~# mkdir /data/docker-compose/trx -p

root@ubuntu:~# cd /data/docker-compose/trx/

root@ubuntu:/data/docker-compose/trx# ls

root@ubuntu:/data/docker-compose/trx# vim Dockerfile

root@ubuntu:/data/docker-compose/trx# cat Dockerfile

FROM openjdk:8

LABEL maintainer=yunson

WORKDIR /data

# Install the required system libraries and tools
RUN apt-get update && \
    apt-get install -y curl && \
    apt-get clean

# Download FullNode.jar
RUN curl -Lo ./FullNode.jar https://github.com/tronprotocol/java-tron/releases/download/GreatVoyage-v4.7.4/FullNode.jar

# Copy the config file
COPY data/mainnet.conf mainnet.conf

# Command to run when the container starts

ENTRYPOINT ["java","-jar","FullNode.jar","--witness","-c","mainnet.conf" ]
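The Dockerfile pins java-tron to GreatVoyage-v4.7.4 by hard-coding the release URL. To move to another version, the URL can be rebuilt from the version number. This is a minimal sketch. It assumes later releases keep the same `GreatVoyage-v<version>/FullNode.jar` naming as the v4.7.4 URL above, so check the release page before relying on it. `fullnode_jar_url` and `download_fullnode` are hypothetical helpers and are not part of java-tron.

```python
import re
import urllib.request
from pathlib import Path

# Base of the GitHub release download URL used in the Dockerfile above.
RELEASE_BASE = "https://github.com/tronprotocol/java-tron/releases/download"


def fullnode_jar_url(version: str) -> str:
    """Build the FullNode.jar URL for a release such as "4.7.4".

    Assumption: tags follow the "GreatVoyage-v<version>" pattern seen above.
    """
    if not re.fullmatch(r"\d+\.\d+\.\d+(\.\d+)?", version):
        raise ValueError(f"unexpected version format: {version!r}")
    return f"{RELEASE_BASE}/GreatVoyage-v{version}/FullNode.jar"


def download_fullnode(version: str, dest_dir: Path) -> Path:
    """Download FullNode.jar into dest_dir (same effect as `curl -Lo` above)."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / "FullNode.jar"
    # urllib's default opener follows the HTTP redirects GitHub uses for assets
    urllib.request.urlretrieve(fullnode_jar_url(version), target)
    return target


if __name__ == "__main__":
    print(fullnode_jar_url("4.7.4"))
```

For example, `download_fullnode("4.7.4", Path("data"))` puts the jar in ./data. That is also the directory the docker-compose setup mounts into the container.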

2. Write docker-compose.yaml

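The compose file bind-mounts ./data onto /data, which is also the image's WORKDIR. The mount therefore hides the FullNode.jar and mainnet.conf baked into the image, so FullNode.jar must be downloaded into ./data on the host alongside mainnet.conf. Otherwise the node will not start.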

version: '3.8'
services:
  tronprotocol:
    build:
      context: .
      dockerfile: Dockerfile
    container_name: tronprotocol
    volumes:
      - ./data:/data/
    ports:
      - "8090:8090"
      - "8091:8091"
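The bind mount hides the image's own /data, so a quick check before `docker-compose up -d` avoids a container that exits on start. This is a minimal sketch. It assumes it is run from the directory that holds docker-compose.yaml and only checks for the two files named above. `check_data_dir` is a hypothetical helper.

```python
import sys
from pathlib import Path

# ENTRYPOINT runs "java -jar FullNode.jar ... -c mainnet.conf" in WORKDIR /data,
# and /data is replaced by the host's ./data mount.
REQUIRED_FILES = ("FullNode.jar", "mainnet.conf")


def check_data_dir(data_dir: Path) -> list[str]:
    """Return the required files that are missing or empty in data_dir."""
    missing = []
    for name in REQUIRED_FILES:
        path = data_dir / name
        # An empty file usually means an interrupted or failed download.
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    problems = check_data_dir(Path("./data"))
    if problems:
        print("missing or empty in ./data:", ", ".join(problems))
        sys.exit(1)
    print("./data contains FullNode.jar and mainnet.conf")
```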

Alternatively, we can build the image first (see step 5) and point docker-compose.yaml at the prebuilt image:

version: '3.8'
services:
  tronprotocol:
    image: devocenter/tronprotocol:latest
    container_name: tronprotocol
    volumes:
      - ./data:/data/
    ports:
      - "8090:8090"
      - "8091:8091"

mainnet.conf

net {
  type = mainnet
  # type = testnet
}

storage {
  # Directory for storing persistent data
  db.engine = "LEVELDB",
  db.sync = false,
  db.directory = "database",
  index.directory = "index",
  transHistory.switch = "on",
  # You can custom these 14 databases' configs:

  # account, account-index, asset-issue, block, block-index,
  # block_KDB, peers, properties, recent-block, trans,
  # utxo, votes, witness, witness_schedule.

  # Otherwise, db configs will remain default and data will be stored in
  # the path of "output-directory" or which is set by "-d" ("--output-directory").

  # setting can impove leveldb performance .... start
  # node: if this will increase process fds,you may be check your ulimit if 'too many open files' error occurs
  # see https://github.com/tronprotocol/tips/blob/master/tip-343.md for detail
  # if you find block sync has lower performance,you can try this settings
  #default = {
  #  maxOpenFiles = 100
  #}
  #defaultM = {
  #  maxOpenFiles = 500
  #}
  #defaultL = {
  #  maxOpenFiles = 1000
  #}
  # setting can impove leveldb performance .... end

  # Attention: name is a required field that must be set !!!
  properties = [
    //  {
    //    name = "account",
    //    path = "storage_directory_test",
    //    createIfMissing = true,
    //    paranoidChecks = true,
    //    verifyChecksums = true,
    //    compressionType = 1, // compressed with snappy
    //    blockSize = 4096, // 4 KB = 4 * 1024 B
    //    writeBufferSize = 10485760, // 10 MB = 10 * 1024 * 1024 B
    //    cacheSize = 10485760, // 10 MB = 10 * 1024 * 1024 B
    //    maxOpenFiles = 100
    //  },
    //  {
    //    name = "account-index",
    //    path = "storage_directory_test",
    //    createIfMissing = true,
    //    paranoidChecks = true,
    //    verifyChecksums = true,
    //    compressionType = 1, // compressed with snappy
    //    blockSize = 4096, // 4 KB = 4 * 1024 B
    //    writeBufferSize = 10485760, // 10 MB = 10 * 1024 * 1024 B
    //    cacheSize = 10485760, // 10 MB = 10 * 1024 * 1024 B
    //    maxOpenFiles = 100
    //  },
  ]

  needToUpdateAsset = true

  //dbsettings is needed when using rocksdb as the storage implement (db.engine="ROCKSDB").
  //we'd strongly recommend that do not modify it unless you know every item's meaning clearly.
  dbSettings = {
    levelNumber = 7
    //compactThreads = 32
    blocksize = 64 // n * KB
    maxBytesForLevelBase = 256 // n * MB
    maxBytesForLevelMultiplier = 10
    level0FileNumCompactionTrigger = 4
    targetFileSizeBase = 256 // n * MB
    targetFileSizeMultiplier = 1
  }

  //backup settings when using rocks db as the storage implement (db.engine="ROCKSDB").
  //if you want to use the backup plugin, please confirm set the db.engine="ROCKSDB" above.
  backup = {
    enable = false // indicate whether enable the backup plugin
    propPath = "prop.properties" // record which bak directory is valid
    bak1path = "bak1/database" // you must set two backup directories to prevent application halt unexpected(e.g. kill -9).
    bak2path = "bak2/database"
    frequency = 10000 // indicate backup db once every 10000 blocks processed.
  }

  balance.history.lookup = false

  # checkpoint.version = 2
  # checkpoint.sync = true

  # the estimated number of block transactions (default 1000, min 100, max 10000).
  # so the total number of cached transactions is 65536 * txCache.estimatedTransactions
  # txCache.estimatedTransactions = 1000

  # data root setting, for check data, currently, only reward-vi is used.
  # merkleRoot = {
  # reward-vi = 9debcb9924055500aaae98cdee10501c5c39d4daa75800a996f4bdda73dbccd8 // main-net, Sha256Hash, hexString
  # }
}

node.discovery = {
  enable = true
  persist = true
}

# custom stop condition

#node.shutdown = {

# BlockTime = "54 59 08 * * ?" # if block header time in persistent db matched.

# BlockHeight = 33350800 # if block header height in persistent db matched.

# BlockCount = 12 # block sync count after node start.

#}

node.backup {
  # udp listen port, each member should have the same configuration
  port = 10001

  # my priority, each member should use different priority
  priority = 8

  # time interval to send keepAlive message, each member should have the same configuration
  keepAliveInterval = 3000

  # peer's ip list, can't contain mine
  members = [
    # "ip",
    # "ip"
  ]
}

crypto {
  engine = "eckey"

}

# prometheus metrics start

# node.metrics = {

# prometheus{

# enable=true

# port="9527"

# }

# }
# prometheus metrics end

node {
  # trust node for solidity node
  # trustNode = "ip:port"
  trustNode = "127.0.0.1:50051"

  # expose extension api to public or not
  walletExtensionApi = true

  listen.port = 18888
  connection.timeout = 2
  fetchBlock.timeout = 200
  tcpNettyWorkThreadNum = 0
  udpNettyWorkThreadNum = 1

  # Number of validate sign thread, default availableProcessors / 2
  # validateSignThreadNum = 16

  maxConnections = 30
  minConnections = 8
  minActiveConnections = 3
  maxConnectionsWithSameIp = 2
  maxHttpConnectNumber = 50
  minParticipationRate = 15
  isOpenFullTcpDisconnect = false

  p2p {
    version = 11111 # 11111: mainnet; 20180622: testnet
  }

  active = [
    # Active establish connection in any case
    # Sample entries:
    # "ip:port",
    # "ip:port"
  ]

  passive = [
    # Passive accept connection in any case
    # Sample entries:
    # "ip:port",
    # "ip:port"
  ]

  fastForward = [
    "100.26.245.209:18888",
    "15.188.6.125:18888"
  ]

  http {
    fullNodeEnable = true
    fullNodePort = 8090
    solidityEnable = true
    solidityPort = 8091
  }

  rpc {
    port = 50051
    #solidityPort = 50061
    # Number of gRPC thread, default availableProcessors / 2
    # thread = 16

    # The maximum number of concurrent calls permitted for each incoming connection
    # maxConcurrentCallsPerConnection =

    # The HTTP/2 flow control window, default 1MB
    # flowControlWindow =

    # Connection being idle for longer than which will be gracefully terminated
    maxConnectionIdleInMillis = 60000

    # Connection lasting longer than which will be gracefully terminated
    # maxConnectionAgeInMillis =

    # The maximum message size allowed to be received on the server, default 4MB
    # maxMessageSize =

    # The maximum size of header list allowed to be received, default 8192
    # maxHeaderListSize =

    # Transactions can only be broadcast if the number of effective connections is reached.
    minEffectiveConnection = 1

    # The switch of the reflection service, effective for all gRPC services
    # reflectionService = true
  }

  # number of solidity thread in the FullNode.
  # If accessing solidity rpc and http interface timeout, could increase the number of threads,
  # The default value is the number of cpu cores of the machine.
  #solidity.threads = 8

  # Limits the maximum percentage (default 75%) of producing block interval
  # to provide sufficient time to perform other operations e.g. broadcast block
  # blockProducedTimeOut = 75

  # Limits the maximum number (default 700) of transaction from network layer
  # netMaxTrxPerSecond = 700

  # Whether to enable the node detection function, default false
  # nodeDetectEnable = false

  # use your ipv6 address for node discovery and tcp connection, default false
  # enableIpv6 = false

  # if your node's highest block num is below than all your pees', try to acquire new connection. default false
  # effectiveCheckEnable = false

  # Dynamic loading configuration function, disabled by default
  # dynamicConfig = {
  # enable = false
  # Configuration file change check interval, default is 600 seconds
  # checkInterval = 600
  # }

  dns {
    # dns urls to get nodes, url format tree://{pubkey}@{domain}, default empty
    treeUrls = [
      #"tree://AKMQMNAJJBL73LXWPXDI4I5ZWWIZ4AWO34DWQ636QOBBXNFXH3LQS@main.trondisco.net", //offical dns tree
    ]

    # enable or disable dns publish, default false
    # publish = false

    # dns domain to publish nodes, required if publish is true
    # dnsDomain = "nodes1.example.org"

    # dns private key used to publish, required if publish is true, hex string of length 64
    # dnsPrivate = "b71c71a67e1177ad4e901695e1b4b9ee17ae16c6668d313eac2f96dbcda3f291"

    # known dns urls to publish if publish is true, url format tree://{pubkey}@{domain}, default empty
    # knownUrls = [
    #"tree://APFGGTFOBVE2ZNAB3CSMNNX6RRK3ODIRLP2AA5U4YFAA6MSYZUYTQ@nodes2.example.org",
    # ]

    # staticNodes = [
    # static nodes to published on dns
    # Sample entries:
    # "ip:port",
    # "ip:port"
    # ]

    # merge several nodes into a leaf of tree, should be 1~5
    # maxMergeSize = 5

    # only nodes change percent is bigger then the threshold, we update data on dns
    # changeThreshold = 0.1

    # dns server to publish, required if publish is true, only aws or aliyun is support
    # serverType = "aws"

    # access key id of aws or aliyun api, required if publish is true, string
    # accessKeyId = "your-key-id"

    # access key secret of aws or aliyun api, required if publish is true, string
    # accessKeySecret = "your-key-secret"

    # if publish is true and serverType is aliyun, it's endpoint of aws dns server, string
    # aliyunDnsEndpoint = "alidns.aliyuncs.com"

    # if publish is true and serverType is aws, it's region of aws api, such as "eu-south-1", string
    # awsRegion = "us-east-1"

    # if publish is true and server-type is aws, it's host zone id of aws's domain, string
    # awsHostZoneId = "your-host-zone-id"
  }

  # open the history query APIs(http&GRPC) when node is a lite fullNode,
  # like {getBlockByNum, getBlockByID, getTransactionByID...}.
  # default: false.
  # note: above APIs may return null even if blocks and transactions actually are on the blockchain
  # when opening on a lite fullnode. only open it if the consequences being clearly known
  # openHistoryQueryWhenLiteFN = false

  jsonrpc {
    # Note: If you turn on jsonrpc and run it for a while and then turn it off, you will not
    # be able to get the data from eth_getLogs for that period of time.

    # httpFullNodeEnable = true
    # httpFullNodePort = 8545
    # httpSolidityEnable = true
    # httpSolidityPort = 8555
    # httpPBFTEnable = true
    # httpPBFTPort = 8565
  }

  # Disabled api list, it will work for http, rpc and pbft, both fullnode and soliditynode,
  # but not jsonrpc.
  # Sample: The setting is case insensitive, GetNowBlock2 is equal to getnowblock2
  #
  # disabledApi = [
  #   "getaccount",
  #   "getnowblock2"
  # ]
}

## rate limiter config

rate.limiter = {
  # Every api could be set a specific rate limit strategy. Three strategy are supported:GlobalPreemptibleAdapter、IPQPSRateLimiterAdapte、QpsRateLimiterAdapter
  # GlobalPreemptibleAdapter: permit is the number of preemptible resource, every client must apply one resourse
  #   before do the request and release the resource after got the reponse automaticlly. permit should be a Integer.
  # QpsRateLimiterAdapter: qps is the average request count in one second supported by the server, it could be a Double or a Integer.
  # IPQPSRateLimiterAdapter: similar to the QpsRateLimiterAdapter, qps could be a Double or a Integer.
  # If do not set, the "default strategy" is set.The "default startegy" is based on QpsRateLimiterAdapter, the qps is set as 10000.
  #
  # Sample entries:
  #
  http = [
    #  {
    #    component = "GetNowBlockServlet",
    #    strategy = "GlobalPreemptibleAdapter",
    #    paramString = "permit=1"
    #  },

    #  {
    #    component = "GetAccountServlet",
    #    strategy = "IPQPSRateLimiterAdapter",
    #    paramString = "qps=1"
    #  },

    #  {
    #    component = "ListWitnessesServlet",
    #    strategy = "QpsRateLimiterAdapter",
    #    paramString = "qps=1"
    #  }
  ],

  rpc = [
    #  {
    #    component = "protocol.Wallet/GetBlockByLatestNum2",
    #    strategy = "GlobalPreemptibleAdapter",
    #    paramString = "permit=1"
    #  },

    #  {
    #    component = "protocol.Wallet/GetAccount",
    #    strategy = "IPQPSRateLimiterAdapter",
    #    paramString = "qps=1"
    #  },

    #  {
    #    component = "protocol.Wallet/ListWitnesses",
    #    strategy = "QpsRateLimiterAdapter",
    #    paramString = "qps=1"
    #  },
  ]

  # global qps, default 50000
  # global.qps = 50000
  # IP-based global qps, default 10000
  # global.ip.qps = 10000
}

seed.node = {
  # List of the seed nodes
  # Seed nodes are stable full nodes
  # example:
  # ip.list = [
  #   "ip:port",
  #   "ip:port"
  # ]
  ip.list = [
    "3.225.171.164:18888",
    "52.53.189.99:18888",
    "18.196.99.16:18888",
    "34.253.187.192:18888",
    "18.133.82.227:18888",
    "35.180.51.163:18888",
    "54.252.224.209:18888",
    "18.231.27.82:18888",
    "52.15.93.92:18888",
    "34.220.77.106:18888",
    "15.207.144.3:18888",
    "13.124.62.58:18888",
    "54.151.226.240:18888",
    "35.174.93.198:18888",
    "18.210.241.149:18888",
    "54.177.115.127:18888",
    "54.254.131.82:18888",
    "18.167.171.167:18888",
    "54.167.11.177:18888",
    "35.74.7.196:18888",
    "52.196.244.176:18888",
    "54.248.129.19:18888",
    "43.198.142.160:18888",
    "3.0.214.7:18888",
    "54.153.59.116:18888",
    "54.153.94.160:18888",
    "54.82.161.39:18888",
    "54.179.207.68:18888",
    "18.142.82.44:18888",
    "18.163.230.203:18888",
    # "[2a05:d014:1f2f:2600:1b15:921:d60b:4c60]:18888", // use this if support ipv6
    # "[2600:1f18:7260:f400:8947:ebf3:78a0:282b]:18888", // use this if support ipv6
  ]

}

genesis.block = {
  # Reserve balance
  assets = [
    {
      accountName = "Zion"
      accountType = "AssetIssue"
      address = "TLLM21wteSPs4hKjbxgmH1L6poyMjeTbHm"
      balance = "99000000000000000"
    },
    {
      accountName = "Sun"
      accountType = "AssetIssue"
      address = "TXmVpin5vq5gdZsciyyjdZgKRUju4st1wM"
      balance = "0"
    },
    {
      accountName = "Blackhole"
      accountType = "AssetIssue"
      address = "TLsV52sRDL79HXGGm9yzwKibb6BeruhUzy"
      balance = "-9223372036854775808"
    }
  ]

  witnesses = [
    {address: THKJYuUmMKKARNf7s2VT51g5uPY6KEqnat, url = "http://GR1.com", voteCount = 100000026},
    {address: TVDmPWGYxgi5DNeW8hXrzrhY8Y6zgxPNg4, url = "http://GR2.com", voteCount = 100000025},
    {address: TWKZN1JJPFydd5rMgMCV5aZTSiwmoksSZv, url = "http://GR3.com", voteCount = 100000024},
    {address: TDarXEG2rAD57oa7JTK785Yb2Et32UzY32, url = "http://GR4.com", voteCount = 100000023},
    {address: TAmFfS4Tmm8yKeoqZN8x51ASwdQBdnVizt, url = "http://GR5.com", voteCount = 100000022},
    {address: TK6V5Pw2UWQWpySnZyCDZaAvu1y48oRgXN, url = "http://GR6.com", voteCount = 100000021},
    {address: TGqFJPFiEqdZx52ZR4QcKHz4Zr3QXA24VL, url = "http://GR7.com", voteCount = 100000020},
    {address: TC1ZCj9Ne3j5v3TLx5ZCDLD55MU9g3XqQW, url = "http://GR8.com", voteCount = 100000019},
    {address: TWm3id3mrQ42guf7c4oVpYExyTYnEGy3JL, url = "http://GR9.com", voteCount = 100000018},
    {address: TCvwc3FV3ssq2rD82rMmjhT4PVXYTsFcKV, url = "http://GR10.com", voteCount = 100000017},
    {address: TFuC2Qge4GxA2U9abKxk1pw3YZvGM5XRir, url = "http://GR11.com", voteCount = 100000016},
    {address: TNGoca1VHC6Y5Jd2B1VFpFEhizVk92Rz85, url = "http://GR12.com", voteCount = 100000015},
    {address: TLCjmH6SqGK8twZ9XrBDWpBbfyvEXihhNS, url = "http://GR13.com", voteCount = 100000014},
    {address: TEEzguTtCihbRPfjf1CvW8Euxz1kKuvtR9, url = "http://GR14.com", voteCount = 100000013},
    {address: TZHvwiw9cehbMxrtTbmAexm9oPo4eFFvLS, url = "http://GR15.com", voteCount = 100000012},
    {address: TGK6iAKgBmHeQyp5hn3imB71EDnFPkXiPR, url = "http://GR16.com", voteCount = 100000011},
    {address: TLaqfGrxZ3dykAFps7M2B4gETTX1yixPgN, url = "http://GR17.com", voteCount = 100000010},
    {address: TX3ZceVew6yLC5hWTXnjrUFtiFfUDGKGty, url = "http://GR18.com", voteCount = 100000009},
    {address: TYednHaV9zXpnPchSywVpnseQxY9Pxw4do, url = "http://GR19.com", voteCount = 100000008},
    {address: TCf5cqLffPccEY7hcsabiFnMfdipfyryvr, url = "http://GR20.com", voteCount = 100000007},
    {address: TAa14iLEKPAetX49mzaxZmH6saRxcX7dT5, url = "http://GR21.com", voteCount = 100000006},
    {address: TBYsHxDmFaRmfCF3jZNmgeJE8sDnTNKHbz, url = "http://GR22.com", voteCount = 100000005},
    {address: TEVAq8dmSQyTYK7uP1ZnZpa6MBVR83GsV6, url = "http://GR23.com", voteCount = 100000004},
    {address: TRKJzrZxN34YyB8aBqqPDt7g4fv6sieemz, url = "http://GR24.com", voteCount = 100000003},
    {address: TRMP6SKeFUt5NtMLzJv8kdpYuHRnEGjGfe, url = "http://GR25.com", voteCount = 100000002},
    {address: TDbNE1VajxjpgM5p7FyGNDASt3UVoFbiD3, url = "http://GR26.com", voteCount = 100000001},
    {address: TLTDZBcPoJ8tZ6TTEeEqEvwYFk2wgotSfD, url = "http://GR27.com", voteCount = 100000000}
  ]

  timestamp = "0" #2017-8-26 12:00:00

  parentHash = "0xe58f33f9baf9305dc6f82b9f1934ea8f0ade2defb951258d50167028c780351f"
}

// Optional.The default is empty.

// It is used when the witness account has set the witnessPermission.

// When it is not empty, the localWitnessAccountAddress represents the address of the witness account,

// and the localwitness is configured with the private key of the witnessPermissionAddress in the witness account.

// When it is empty,the localwitness is configured with the private key of the witness account.

//localWitnessAccountAddress =

localwitness = [
]

#localwitnesskeystore = [

# "localwitnesskeystore.json"

#]

block = {
  needSyncCheck = true
  maintenanceTimeInterval = 21600000
  proposalExpireTime = 259200000 // 3 day: 259200000(ms)
}

# Transaction reference block, default is "solid", configure to "head" may incur TaPos error
# trx.reference.block = "solid" // head;solid;

# This property sets the number of milliseconds after the creation of the transaction that is expired, default value is 60000.
# trx.expiration.timeInMilliseconds = 60000

vm = {
  supportConstant = false
  maxEnergyLimitForConstant = 100000000
  minTimeRatio = 0.0
  maxTimeRatio = 5.0
  saveInternalTx = false

  # Indicates whether the node stores featured internal transactions, such as freeze, vote and so on
  # saveFeaturedInternalTx = false

  # In rare cases, transactions that will be within the specified maximum execution time (default 10(ms)) are re-executed and packaged
  # longRunningTime = 10

  # Indicates whether the node support estimate energy API.
  # estimateEnergy = false

  # Indicates the max retry time for executing transaction in estimating energy.
  # estimateEnergyMaxRetry = 3
}

committee = {
  allowCreationOfContracts = 0 //mainnet:0 (reset by committee),test:1
  allowAdaptiveEnergy = 0 //mainnet:0 (reset by committee),test:1
}

event.subscribe = {
  native = {
    useNativeQueue = true // if true, use native message queue, else use event plugin.
    bindport = 5555 // bind port
    sendqueuelength = 1000 //max length of send queue
  }

  path = "" // absolute path of plugin
  server = "" // target server address to receive event triggers
  // dbname|username|password, if you want to create indexes for collections when the collections
  // are not exist, you can add version and set it to 2, as dbname|username|password|version
  // if you use version 2 and one collection not exists, it will create index automaticaly;
  // if you use version 2 and one collection exists, it will not create index, you must create index manually;
  dbconfig = ""
  contractParse = true
  topics = [
    {
      triggerName = "block" // block trigger, the value can't be modified
      enable = false
      topic = "block" // plugin topic, the value could be modified
      solidified = false // if set true, just need solidified block, default is false
    },
    {
      triggerName = "transaction"
      enable = false
      topic = "transaction"
      solidified = false
      ethCompatible = false // if set true, add transactionIndex, cumulativeEnergyUsed, preCumulativeLogCount, logList, energyUnitPrice, default is false
    },
    {
      triggerName = "contractevent"
      enable = false
      topic = "contractevent"
    },
    {
      triggerName = "contractlog"
      enable = false
      topic = "contractlog"
      redundancy = false // if set true, contractevent will also be regarded as contractlog
    },
    {
      triggerName = "solidity" // solidity block trigger(just include solidity block number and timestamp), the value can't be modified
      enable = true // the default value is true
      topic = "solidity"
    },
    {
      triggerName = "solidityevent"
      enable = false
      topic = "solidityevent"
    },
    {
      triggerName = "soliditylog"
      enable = false
      topic = "soliditylog"
      redundancy = false // if set true, solidityevent will also be regarded as soliditylog
    }
  ]

  filter = {
    fromblock = "" // the value could be "", "earliest" or a specified block number as the beginning of the queried range
    toblock = "" // the value could be "", "latest" or a specified block number as end of the queried range
    contractAddress = [
      "" // contract address you want to subscribe, if it's set to "", you will receive contract logs/events with any contract address.
    ]

    contractTopic = [
      "" // contract topic you want to subscribe, if it's set to "", you will receive contract logs/events with any contract topic.
    ]
  }

}
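The ports published in docker-compose.yaml (8090 and 8091) need to match `fullNodePort` and `solidityPort` in the `node.http` block of mainnet.conf. The sketch below reads those two values with a regular expression rather than a real HOCON parser. It assumes the one-setting-per-line `key = value` layout used above and skips lines commented out with `#` or `//`. `read_http_ports` and `compose_port_mappings` are hypothetical helpers.

```python
import re
from pathlib import Path

# Only uncommented settings match: the key may be preceded by whitespace only,
# so "#solidityPort = 50061" in the rpc block is ignored.
_SETTING = re.compile(r"^\s*(fullNodePort|solidityPort)\s*=\s*(\d+)", re.MULTILINE)


def read_http_ports(conf_text: str) -> dict[str, int]:
    """Return the first uncommented fullNodePort/solidityPort values found."""
    ports: dict[str, int] = {}
    for key, value in _SETTING.findall(conf_text):
        ports.setdefault(key, int(value))
    return ports


def compose_port_mappings(ports: dict[str, int]) -> list[str]:
    """Render "host:container" strings like the compose "ports" entries."""
    return [f"{p}:{p}" for p in sorted(ports.values())]


if __name__ == "__main__":
    text = Path("data/mainnet.conf").read_text(encoding="utf-8")
    ports = read_http_ports(text)
    print(ports)
    print(compose_port_mappings(ports))
```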

3. Download the snapshot data

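Run this from /data/docker-compose/trx. data/ may already exist because it holds mainnet.conf, so use -p:

mkdir -p data && cd data/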

wget http://52.77.31.45/saveInternalTx/backup20240422/FullNode_output-directory.tgz

tar -xf FullNode_output-directory.tgz
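`wget` followed by `tar` keeps the whole .tgz on disk next to the extracted database, so it briefly needs room for both. As an alternative sketch (not what the steps above do), Python's `tarfile` can extract while the download streams in, so the archive itself is never stored. This assumes the snapshot URL above is reachable and that the target directory is ./data. `extract_stream` and `download_and_extract` are hypothetical helper names. Unlike a saved file fetched with `wget -c`, a streamed download cannot be resumed if the connection drops.

```python
import sys
import tarfile
import urllib.request
from pathlib import Path
from typing import BinaryIO

SNAPSHOT_URL = (
    "http://52.77.31.45/saveInternalTx/backup20240422/FullNode_output-directory.tgz"
)


def extract_stream(fileobj: BinaryIO, dest: Path) -> None:
    """Extract a gzip-compressed tar read sequentially from fileobj into dest."""
    dest.mkdir(parents=True, exist_ok=True)
    # "r|gz" is tarfile's stream mode: members are read front to back with no
    # seeking, so an HTTP response object can be passed in directly.
    with tarfile.open(fileobj=fileobj, mode="r|gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons support extraction filters; "data" rejects unsafe paths.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)


def download_and_extract(url: str, dest: Path) -> None:
    with urllib.request.urlopen(url) as resp:
        extract_stream(resp, dest)


if __name__ == "__main__":
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("data")
    download_and_extract(SNAPSHOT_URL, target)
```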

4. Start the container

root@ubuntu:/data/docker-compose/trx# docker-compose up -d

[+] Building 3.5s (11/11) FINISHED
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 508B 0.0s
=> [internal] load metadata for docker.io/library/openjdk:8 3.4s
=> [auth] library/openjdk:pull token for registry-1.docker.io 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [1/5] FROM docker.io/library/openjdk:8@sha256:86e863cc57215cfb181bd319736d0baf625fe8f150577f9eb58bd937f5452cb8 0.0s
=> [internal] load build context 0.0s
=> => transferring context: 22.52kB 0.0s
=> CACHED [2/5] WORKDIR /data 0.0s
=> CACHED [3/5] RUN apt-get update && apt-get install -y curl && apt-get clean 0.0s
=> CACHED [4/5] RUN curl -Lo ./FullNode.jar https://github.com/tronprotocol/java-tron/releases/download/GreatVoyage-v4.7.4/FullNode.jar 0.0s
=> [5/5] COPY data/mainnet.conf mainnet.conf 0.0s
=> exporting to image 0.0s
=> => exporting layers 0.0s
=> => writing image sha256:3b4e1f7694e0089a11996fc1881ffff91a1a3cc4a94109fe635f1e96b3e086a7 0.0s
=> => naming to docker.io/library/trx_tronprotocol 0.0s

[+] Running 2/2
⠿ Network trx_default Created 0.1s
⠿ Container tronprotocol Started

root@ubuntu:/data/docker-compose/trx# docker-compose ps

NAME COMMAND SERVICE STATUS PORTS

tronprotocol "java -jar FullNode.…" tronprotocol running 0.0.0.0:8090-8091->8090-8091/tcp, :::8090-8091->8090-8091/tcp
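`docker-compose ps` only shows that the container is running. The published HTTP ports can confirm that the node is actually serving requests and syncing. This sketch calls the FullNode HTTP API endpoint `/wallet/getnowblock` on port 8090 and `/walletsolidity/getnowblock` on port 8091 (the solidified block). It reads the height from `block_header.raw_data.number`. It assumes the node is reachable on 127.0.0.1 through the port mapping above. `latest_block` and `block_height` are hypothetical helper names.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Ports from node.http in mainnet.conf, published by docker-compose.yaml.
FULLNODE_URL = "http://127.0.0.1:8090/wallet/getnowblock"
SOLIDITY_URL = "http://127.0.0.1:8091/walletsolidity/getnowblock"


def block_height(block: dict) -> tuple[int, datetime]:
    """Extract block number and block time from a getnowblock JSON response."""
    raw = block["block_header"]["raw_data"]
    # Assumption: zero-valued fields may be omitted in the JSON, so default to 0.
    number = raw.get("number", 0)
    # Block timestamps are milliseconds since the Unix epoch.
    ts = datetime.fromtimestamp(raw.get("timestamp", 0) / 1000, tz=timezone.utc)
    return number, ts


def latest_block(url: str, timeout: float = 10.0) -> dict:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    for name, url in (("fullnode", FULLNODE_URL), ("solidity", SOLIDITY_URL)):
        number, ts = block_height(latest_block(url))
        print(f"{name}: block {number} produced at {ts.isoformat()}")
```

While the node is still catching up, the reported block time lags well behind the current time, as in the 2018 blocks in the log below.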

Check the logs

Logs are written to logs/ under the data directory, i.e. data/logs/ on the host:
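Besides `tail -f`, sync progress can be extracted from tron.log with a script. This sketch only matches the `Update latest block header number = N.` message that appears in the log excerpt below. It assumes that message format stays the same across java-tron versions. `latest_synced_block` and `tail_lines` are hypothetical helpers.

```python
import re
from collections import deque
from pathlib import Path
from typing import Iterable, Optional

# Matches e.g. "... Update latest block header number = 5744."
_HEADER_NUMBER = re.compile(r"Update latest block header number = (\d+)\.")


def latest_synced_block(lines: Iterable[str]) -> Optional[int]:
    """Return the highest block number reported in the given log lines, if any."""
    latest = None
    for line in lines:
        match = _HEADER_NUMBER.search(line)
        if match:
            number = int(match.group(1))
            latest = number if latest is None else max(latest, number)
    return latest


def tail_lines(path: Path, count: int = 5000) -> deque:
    """Keep only the last `count` lines so a large log is not held in memory."""
    with path.open(encoding="utf-8", errors="replace") as fh:
        return deque(fh, maxlen=count)


if __name__ == "__main__":
    log = Path("data/logs/tron.log")
    print("latest synced block:", latest_synced_block(tail_lines(log)))
```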

root@ubuntu:/data/docker-compose/trx# tail -f data/logs/tron.log

generated by myself=false

generate time=2018-06-25 06:39:33.0

account root=0000000000000000000000000000000000000000000000000000000000000000

txs are empty

]

11:59:58.969 INFO [sync-handle-block] [DB](PendingManager.java:57) Pending tx size: 0.

11:59:58.969 INFO [sync-handle-block] [DB](Manager.java:1346) PushBlock block number: 5743, cost/txs: 35/0 false.

11:59:58.969 INFO [sync-handle-block] [net](TronNetDelegate.java:269) Success process block Num:5743,ID:000000000000166fd5cac4ece68bb5a16fe7256a9162d4c7a0e9270aefdd726d

11:59:58.970 INFO [sync-handle-block] [DB](Manager.java:1219) Block num: 5744, re-push-size: 0, pending-size: 0, block-tx-size: 0, verify-tx-size: 0

11:59:58.971 INFO [sync-handle-block] [DB](KhaosDatabase.java:331) Remove from khaosDatabase: 000000000000165be2a1e27c8773eddf2daaff5d0f923819c17e066e3e4adaa4.

11:59:59.007 INFO [sync-handle-block] [DB](SnapshotManager.java:362) Flush cost: 36 ms, create checkpoint cost: 33 ms, refresh cost: 3 ms.

11:59:59.011 INFO [sync-handle-block] [DB](DynamicPropertiesStore.java:2193) Update state flag = 0.

11:59:59.011 INFO [sync-handle-block] [consensus](DposService.java:162) Update solid block number to 5726

11:59:59.011 INFO [sync-handle-block] [DB](DynamicPropertiesStore.java:2188) Update latest block header id = 00000000000016704446a426e3a20044488ee203f5286967fe856cf9b1f507f2.

11:59:59.011 INFO [sync-handle-block] [DB](DynamicPropertiesStore.java:2180) Update latest block header number = 5744.

11:59:59.011 INFO [sync-handle-block] [DB](DynamicPropertiesStore.java:2172) Update latest block header timestamp = 1529908776000.

11:59:59.011 INFO [sync-handle-block] [DB](Manager.java:1328) Save block: BlockCapsule

[ hash=00000000000016704446a426e3a20044488ee203f5286967fe856cf9b1f507f2

number=5744

parentId=000000000000166fd5cac4ece68bb5a16fe7256a9162d4c7a0e9270aefdd726d

witness address=41f8c7acc4c08cf36ca08fc2a61b1f5a7c8dea7bec

generated by myself=false

generate time=2018-06-25 06:39:36.0

account root=0000000000000000000000000000000000000000000000000000000000000000

txs are empty

]

5. Build the image

root@ubuntu:/data/docker-compose/trx# docker build -t tronprotocol .

[+] Building 44.1s (9/9) FINISHED docker:default
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 585B 0.0s
=> [internal] load metadata for docker.io/library/openjdk:8 7.2s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [1/5] FROM docker.io/library/openjdk:8@sha256:86e863cc57215cfb181bd3 16.6s
=> => resolve docker.io/library/openjdk:8@sha256:86e863cc57215cfb181bd31 0.0s
=> => sha256:b273004037cc3af245d8e08cfbfa672b93ee7dcb289 7.81kB / 7.81kB 0.0s
=> => sha256:001c52e26ad57e3b25b439ee0052f6692e5c0f2d5 55.00MB / 55.00MB 9.7s
=> => sha256:2068746827ec1b043b571e4788693eab7e9b2a953 10.88MB / 10.88MB 2.5s
=> => sha256:86e863cc57215cfb181bd319736d0baf625fe8f1505 1.04kB / 1.04kB 0.0s
=> => sha256:3af2ac94130765b73fc8f1b42ffc04f77996ed8210c 1.79kB / 1.79kB 0.0s
=> => sha256:d9d4b9b6e964657da49910b495173d6c4f0d9bc47b3 5.16MB / 5.16MB 3.1s
=> => sha256:9daef329d35093868ef75ac8b7c6eb407fa53abbc 54.58MB / 54.58MB 6.6s
=> => sha256:d85151f15b6683b98f21c3827ac545188b1849efb14 5.42MB / 5.42MB 4.8s
=> => sha256:52a8c426d30b691c4f7e8c4b438901ddeb82ff80d4540d5 210B / 210B 5.8s
=> => sha256:8754a66e005039a091c5ad0319f055be393c712 105.92MB / 105.92MB 9.0s
=> => extracting sha256:001c52e26ad57e3b25b439ee0052f6692e5c0f2d5d982a00 1.9s
=> => extracting sha256:d9d4b9b6e964657da49910b495173d6c4f0d9bc47b3b4427 0.2s
=> => extracting sha256:2068746827ec1b043b571e4788693eab7e9b2a9530117651 0.2s
=> => extracting sha256:9daef329d35093868ef75ac8b7c6eb407fa53abbcb3a264c 2.1s
=> => extracting sha256:d85151f15b6683b98f21c3827ac545188b1849efb14a1049 0.2s
=> => extracting sha256:52a8c426d30b691c4f7e8c4b438901ddeb82ff80d4540d5b 0.0s
=> => extracting sha256:8754a66e005039a091c5ad0319f055be393c7123717b1f6f 2.0s
=> [2/5] WORKDIR /data 1.1s
=> [3/5] RUN apt-get update && apt-get install -y curl && apt-ge 7.6s
=> [4/5] RUN curl -Lo ./FullNode.jar https://github.com/tronprotocol/jav 9.5s
=> [5/5] RUN curl -Lo ./mainnet.conf https://github.com/tronprotocol/tro 1.4s
=> exporting to image 0.5s
=> => exporting layers 0.3s
=> => writing image sha256:7de082bb163cb878f315ec2fc6f72216832805e2eae07 0.0s
=> => naming to docker.io/library/tronprotocol 0.1s

root@ubuntu:/data/docker-compose/trx# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tronprotocol latest 7de082bb163c 6 seconds ago 689MB

Save the image (tag it and push it to Docker Hub)

root@ubuntu:/data/docker-compose/trx# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

trx_tronprotocol latest 3b4e1f7694e0 38 seconds ago 689MB

root@ubuntu:/data/docker-compose/trx# docker tag trx_tronprotocol:latest devocenter/tronprotocol:v4.7.4

root@ubuntu:/data/docker-compose/trx# docker tag trx_tronprotocol:latest devocenter/tronprotocol

root@ubuntu:/data/docker-compose/trx# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

trx_tronprotocol latest 3b4e1f7694e0 About a minute ago 689MB

devocenter/tronprotocol latest 3b4e1f7694e0 About a minute ago 689MB

devocenter/tronprotocol v4.7.4 3b4e1f7694e0 About a minute ago 689MB

root@ubuntu:/data/docker-compose/trx# docker login docker.io

Log in with your Docker ID or email address to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com/ to create one.

You can log in with your password or a Personal Access Token (PAT). Using a limited-scope PAT grants better security and is required for organizations using SSO. Learn more at https://docs.docker.com/go/access-tokens/

Username: devocenter

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@ubuntu:/data/docker-compose/trx# docker push devocenter/tronprotocol:v4.7.4

The push refers to repository [docker.io/devocenter/tronprotocol]

c8883b956af0: Pushed

14b9852dbb22: Pushed

2652b2be035c: Pushed

79e65205e03f: Pushed

6b5aaff44254: Mounted from library/openjdk

53a0b163e995: Mounted from library/openjdk

b626401ef603: Mounted from library/openjdk

9b55156abf26: Mounted from library/openjdk

293d5db30c9f: Mounted from library/openjdk

03127cdb479b: Mounted from library/openjdk

9c742cd6c7a5: Mounted from library/openjdk

v4.7.4: digest: sha256:fbe40944dda0d8c67798aeb192a2881283865d1ba0692f7b9d8008af80150289 size: 2635

root@ubuntu:/data/docker-compose/trx# docker push devocenter/tronprotocol:latest

The push refers to repository [docker.io/devocenter/tronprotocol]

c8883b956af0: Layer already exists

14b9852dbb22: Layer already exists

2652b2be035c: Layer already exists

79e65205e03f: Layer already exists

6b5aaff44254: Layer already exists

53a0b163e995: Layer already exists

b626401ef603: Layer already exists

9b55156abf26: Layer already exists

293d5db30c9f: Layer already exists

03127cdb479b: Layer already exists

9c742cd6c7a5: Layer already exists

latest: digest: sha256:fbe40944dda0d8c67798aeb192a2881283865d1ba0692f7b9d8008af80150289 size: 2635

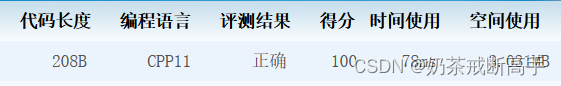

Viewing the pushed image in the registry (Docker Hub)
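The build, tag and push steps above can be wrapped in a small script so that both tags always point at the same image. This sketch shells out to the same `docker build`, `docker tag` and `docker push` commands shown above. The repository `devocenter/tronprotocol` and the tag `v4.7.4` come from this walkthrough, and `docker login` is assumed to have been run already. `release_commands` is a hypothetical helper. Unlike the manual steps, it builds directly under the Docker Hub name instead of retagging `trx_tronprotocol`.

```python
import subprocess
import sys

REPO = "devocenter/tronprotocol"  # Docker Hub repository used above
VERSION = "v4.7.4"               # matches the FullNode.jar release in the image


def release_commands(repo: str, version: str, context: str = ".") -> list[list[str]]:
    """Build once with the version tag, alias it as latest, then push both."""
    versioned = f"{repo}:{version}"
    latest = f"{repo}:latest"
    return [
        ["docker", "build", "-t", versioned, context],
        ["docker", "tag", versioned, latest],
        ["docker", "push", versioned],
        ["docker", "push", latest],
    ]


if __name__ == "__main__":
    dry_run = "--dry-run" in sys.argv
    for cmd in release_commands(REPO, VERSION):
        print("+", " ".join(cmd))
        if not dry_run:
            # check=True stops at the first failing step (e.g. not logged in)
            subprocess.run(cmd, check=True)
```

Running it with `--dry-run` only prints the four commands. This is a cheap way to confirm the tags before anything is pushed.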