Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted and violations will be pursued legally.

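Contents

- I.Enabling the Cilium Ingress Controller

- 1.Cilium Ingress Controller overview

- 2.Enabling the Cilium Ingress Controller

- 3.Deploying MetalLB

- 4.Testing Ingress rules in dedicated mode

- 5.Testing Ingress rules in shared mode

- II.Cilium advanced features and tuning options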

- 1.kubernetes without kube-proxy

- 2.Client Source IP Preservation

- 3.Maglev Consistent Hashing

- 4.Direct Server Return(DSR)

- 5.Hybrid DSR and SNAT Mode

- 6.Socket LoadBalancer Bypass

- 7.LoadBalancer & NodePort XDP Acceleration

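I.Enabling the Cilium Ingress Controller

1.Cilium Ingress Controller overview

Cilium ships with a built-in Ingress Controller for Kubernetes clusters.

- 1.It reconciles Ingress resources whose ingressClassName is set to "cilium";
- 2.It also accepts the "kubernetes.io/ingress.class" annotation;
- 3.It supports all kinds of Ingress, including TLS;
- 4.Environment requirements:
  - 4.1 If kube-proxy is running, "nodePort.enabled=true" must be set;
  - 4.2 If kube-proxy is not running, Cilium takes over its functions.

The Cilium Ingress load balancer has two modes, dedicated and shared.

- dedicated: Cilium creates a dedicated LoadBalancer Service for each Ingress resource, in the same namespace as that Ingress.
- shared: all Ingress resources share the LoadBalancer Service that Cilium creates by default (it lives in Cilium's namespace, "kube-system" by default).

Notes on configuring an Ingress:

- 1.An Ingress resource selects Cilium either through its IngressClass or through an annotation:
  - "Ingress.spec.ingressClassName: cilium"
  - or the annotation "kubernetes.io/ingress.class: cilium".
- 2.The load balancer mode of an Ingress resource:
  - by default it inherits Cilium's global setting;
  - it can be set per Ingress with the annotation "ingress.cilium.io/loadbalancer-mode";
  - in dedicated mode, the annotation "ingress.cilium.io/service-type" additionally selects the Service type; valid values are "LoadBalancer" and "NodePort".
- 3.For the remaining annotations, see the official Cilium documentation.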
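The selection and mode rules above can be sketched in a few lines of Python. This is only an illustration of the rules as described in this article, not Cilium's implementation: the function names are made up for the example, the Ingress is a plain dict, and "LoadBalancer" as the fallback Service type in dedicated mode is an assumption based on the description above. It also ignores the case where Cilium is installed as the default IngressClass.

```python
MODE_KEY = "ingress.cilium.io/loadbalancer-mode"
TYPE_KEY = "ingress.cilium.io/service-type"
CLASS_ANNOTATION = "kubernetes.io/ingress.class"


def handled_by_cilium(ingress: dict) -> bool:
    """True if the Ingress selects Cilium by class name or by annotation."""
    spec_class = ingress.get("spec", {}).get("ingressClassName")
    annotations = ingress.get("metadata", {}).get("annotations", {})
    return spec_class == "cilium" or annotations.get(CLASS_ANNOTATION) == "cilium"


def effective_settings(ingress: dict, global_mode: str = "dedicated"):
    """Return (mode, service_type); service_type is None in shared mode."""
    annotations = ingress.get("metadata", {}).get("annotations", {})
    # The per-Ingress annotation wins over the global setting.
    mode = annotations.get(MODE_KEY, global_mode)
    if mode not in ("dedicated", "shared"):
        raise ValueError(f"unknown load balancer mode: {mode!r}")
    if mode == "shared":
        # The shared Service already exists; the service-type annotation is not used.
        return mode, None
    service_type = annotations.get(TYPE_KEY, "LoadBalancer")  # assumed fallback
    if service_type not in ("LoadBalancer", "NodePort"):
        raise ValueError(f"unsupported service type: {service_type!r}")
    return mode, service_type


# Hypothetical Ingress objects, reduced to the fields that matter here.
plain = {"metadata": {"name": "demo"}, "spec": {"ingressClassName": "cilium"}}
shared = {
    "metadata": {"name": "demo-shared", "annotations": {MODE_KEY: "shared"}},
    "spec": {"ingressClassName": "cilium"},
}

print(handled_by_cilium(plain), effective_settings(plain))
print(handled_by_cilium(shared), effective_settings(shared))
```

With the global mode set to dedicated, the first Ingress gets its own Service, while the second falls back to the shared one because its annotation overrides the global setting.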

2.Enabling the Cilium Ingress Controller

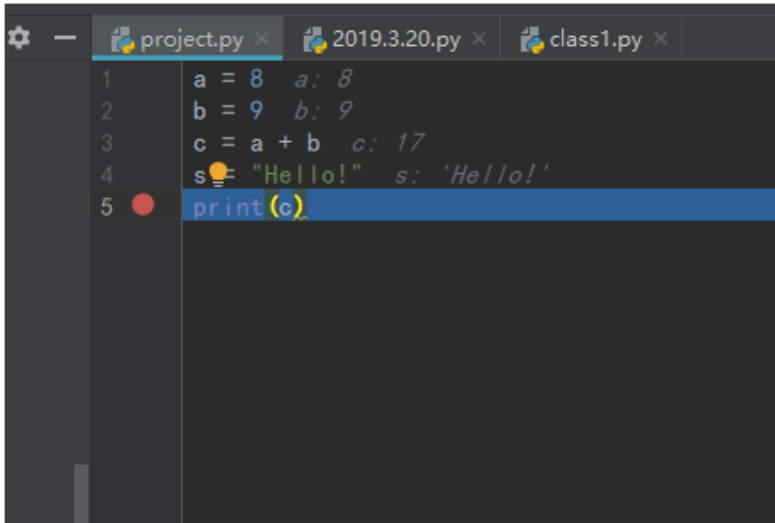

1.When deploying Cilium, a few options are enough to enable its Ingress Controller

[root@master241 ~]# cilium install \
  --version 1.17.0 \
  --set kubeProxyReplacement=true \
  --set ipam.mode=kubernetes \
  --set routingMode=native \
  --set autoDirectNodeRoutes=true \
  --set ipam.operator.clusterPoolIPv4PodCIDRList=10.100.0.0/16 \
  --set ipam.operator.clusterPoolIPv4MaskSize=24 \
  --set ipv4NativeRoutingCIDR=10.100.0.0/16 \
  --set bpf.masquerade=true \
  --set hubble.enabled="true" \
  --set hubble.listenAddress=":4244" \
  --set hubble.relay.enabled="true" \
  --set hubble.ui.enabled="true" \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set hubble.metrics.port=9665 \
  --set hubble.metrics.enableOpenMetrics=true \
  --set hubble.metrics.enable="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}" \
  --set ingressController.enabled=true \
  --set ingressController.loadbalancerMode=dedicated \
  --set ingressController.default=true

... # The output looks like this

ℹ️ Using Cilium version 1.17.0

🔮 Auto-detected cluster name: kubernetes

🔮 Auto-detected kube-proxy has not been installed

ℹ️ Cilium will fully replace all functionalities of kube-proxy

[root@master241 ~]#

2.Check the deployed IngressClass

[root@master241 ~]# kubectl get ingressclasses.networking.k8s.io -n kube-system

NAME CONTROLLER PARAMETERS AGE

cilium cilium.io/ingress-controller <none> 9m3s

[root@master241 ~]#

3.Deploying MetalLB

1.Without MetalLB, nothing in this cluster assigns addresses to LoadBalancer Services, so the EXTERNAL-IP of Cilium's Service stays at <pending>

[root@master241 ~]# kubectl get svc -n kube-system cilium-ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress LoadBalancer 10.206.109.188 <pending> 80:30498/TCP,443:30875/TCP 12m

[root@master241 ~]#

2.Deploy MetalLB

[root@master241 ~]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.9/config/manifests/metallb-native.yaml

3.Check that the MetalLB Pods are ready

[root@master241 ~]# kubectl get pods -o wide -n metallb-system

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

controller-74b6dc8f85-rhck5 1/1 Running 0 58s 10.100.2.191 worker243 <none> <none>

speaker-8r4fk 1/1 Running 0 58s 10.0.0.243 worker243 <none> <none>

speaker-99xr6 1/1 Running 0 58s 10.0.0.241 master241 <none> <none>

speaker-xjm5x 1/1 Running 0 58s 10.0.0.242 worker242 <none> <none>

[root@master241 ~]#

4.Create the address pool

[root@master241 ~]# cat metallb-ip-pool.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: yinzhengjie-lb-pool
  namespace: metallb-system
spec:
  addresses:
  - 10.0.0.150-10.0.0.180
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: yinzhengjie-enable-metallb
  namespace: metallb-system
spec:
  ipAddressPools:
  - yinzhengjie-lb-pool

[root@master241 ~]#

[root@master241 ~]# kubectl apply -f metallb-ip-pool.yaml

ipaddresspool.metallb.io/yinzhengjie-lb-pool created

l2advertisement.metallb.io/yinzhengjie-enable-metallb created
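Before relying on a pool like this one, it helps to know how many addresses it really contains and whether they sit on the node network, since L2 advertisement only works for addresses reachable on the same segment. The sketch below uses only the Python standard library; the node subnet 10.0.0.0/24 is an assumption inferred from the node addresses in this article, and `expand_pool` is a helper name invented for the example.

```python
import ipaddress


def expand_pool(spec: str) -> list:
    """Expand an inclusive 'start-end' range into a list of IPv4 addresses."""
    start_text, end_text = spec.split("-")
    start = ipaddress.IPv4Address(start_text.strip())
    end = ipaddress.IPv4Address(end_text.strip())
    if end < start:
        raise ValueError(f"range end {end} is before range start {start}")
    return [ipaddress.IPv4Address(n) for n in range(int(start), int(end) + 1)]


pool = expand_pool("10.0.0.150-10.0.0.180")
node_subnet = ipaddress.ip_network("10.0.0.0/24")  # assumed node network

print("addresses in pool:", len(pool))
print("all inside node subnet:", all(ip in node_subnet for ip in pool))
```

Each LoadBalancer Service consumes one address from the pool, which matters in dedicated mode because every Ingress gets a Service of its own.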

[root@master241 ~]#

5.Check the EXTERNAL-IP of cilium-ingress again (an address has now been assigned automatically)

[root@master241 ~]# kubectl get svc -n kube-system cilium-ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress LoadBalancer 10.206.109.188 10.0.0.150 80:30498/TCP,443:30875/TCP 18m

[root@master241 ~]#

References:

https://metallb.universe.tf/installation/

https://www.cnblogs.com/yinzhengjie/p/17811466.html

4.Testing Ingress rules in dedicated mode

1.Check the IngressClass and the Service

[root@master241 ~]# kubectl -n kube-system get svc hubble-ui

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hubble-ui ClusterIP 10.198.179.31 <none> 80/TCP 23m

[root@master241 ~]#

[root@master241 ~]# kubectl -n kube-system get ingressclasses

NAME CONTROLLER PARAMETERS AGE

cilium cilium.io/ingress-controller <none> 24m

[root@master241 ~]#

2.Preview the Ingress manifest

[root@master241 ~]# kubectl -n kube-system create ingress hubble-ui --rule='hubble.yinzhengjie.com/*=hubble-ui:80' --class='cilium' --dry-run=client -o yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  creationTimestamp: null
  name: hubble-ui
  namespace: kube-system
spec:
  ingressClassName: cilium
  rules:
  - host: hubble.yinzhengjie.com
    http:
      paths:
      - backend:
          service:
            name: hubble-ui
            port:
              number: 80
        path: /
        pathType: Prefix
status:
  loadBalancer: {}

[root@master241 ~]#

3.Create the Ingress rule

[root@master241 ~]# kubectl -n kube-system create ingress hubble-ui --rule='hubble.yinzhengjie.com/*=hubble-ui:80' --class='cilium'

ingress.networking.k8s.io/hubble-ui created

[root@master241 ~]#

[root@master241 ~]# kubectl -n kube-system get ing

NAME CLASS HOSTS ADDRESS PORTS AGE

hubble-ui cilium hubble.yinzhengjie.com 80 7s

[root@master241 ~]#

[root@master241 ~]# kubectl -n kube-system describe ing

Name: hubble-ui

Labels: <none>

Namespace: kube-system

Address:

Ingress Class: cilium

Default backend: <default>

Rules:
  Host                    Path  Backends
  ----                    ----  --------
  hubble.yinzhengjie.com
                          /   hubble-ui:80 (10.100.1.118:8081)

Annotations: <none>

Events: <none>

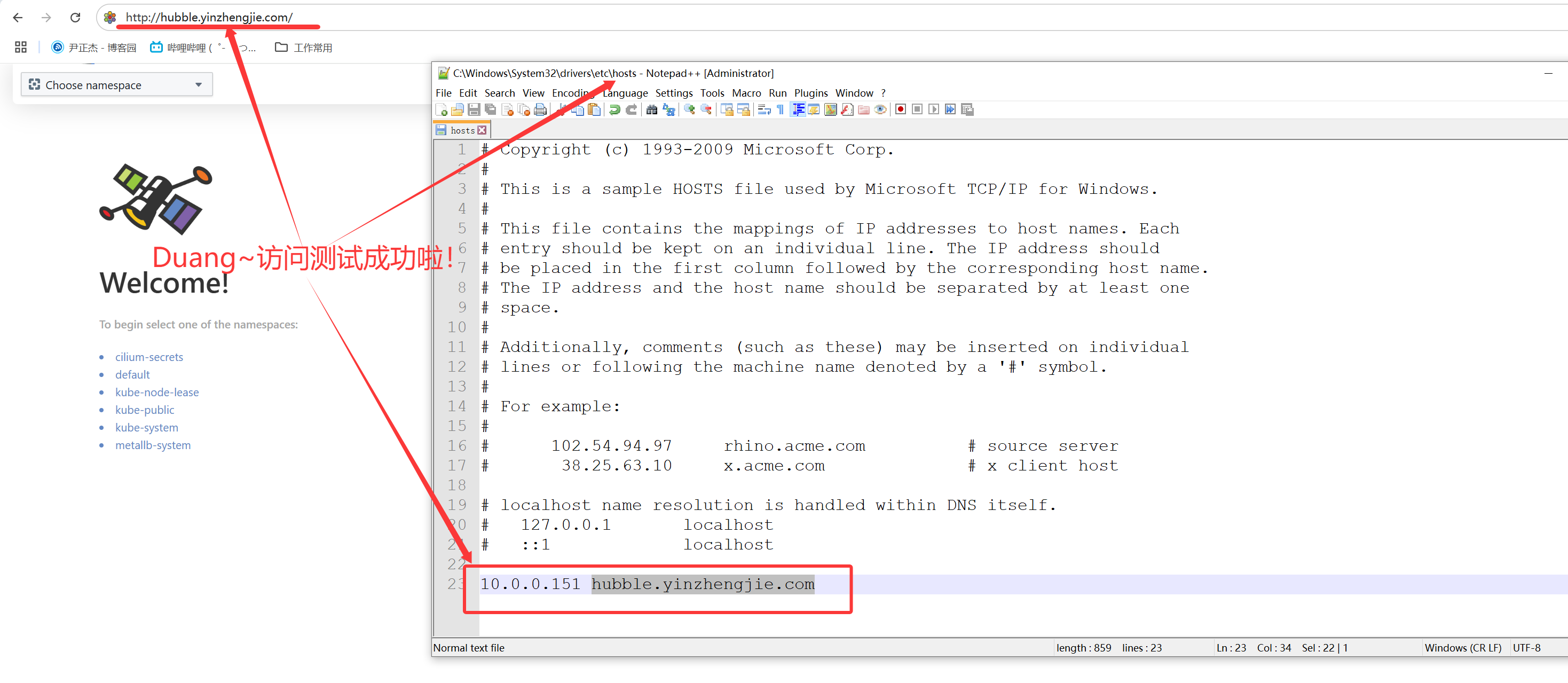

[root@master241 ~]#

4.The hallmark of dedicated mode: exposing a service through an Ingress creates a new LoadBalancer Service for it

[root@master241 ~]# kubectl get svc -n kube-system -l cilium.io/ingress=true

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress LoadBalancer 10.206.109.188 10.0.0.150 80:30498/TCP,443:30875/TCP 36m

cilium-ingress-hubble-ui LoadBalancer 10.193.158.11 10.0.0.151 80:30353/TCP,443:31984/TCP 8m51s

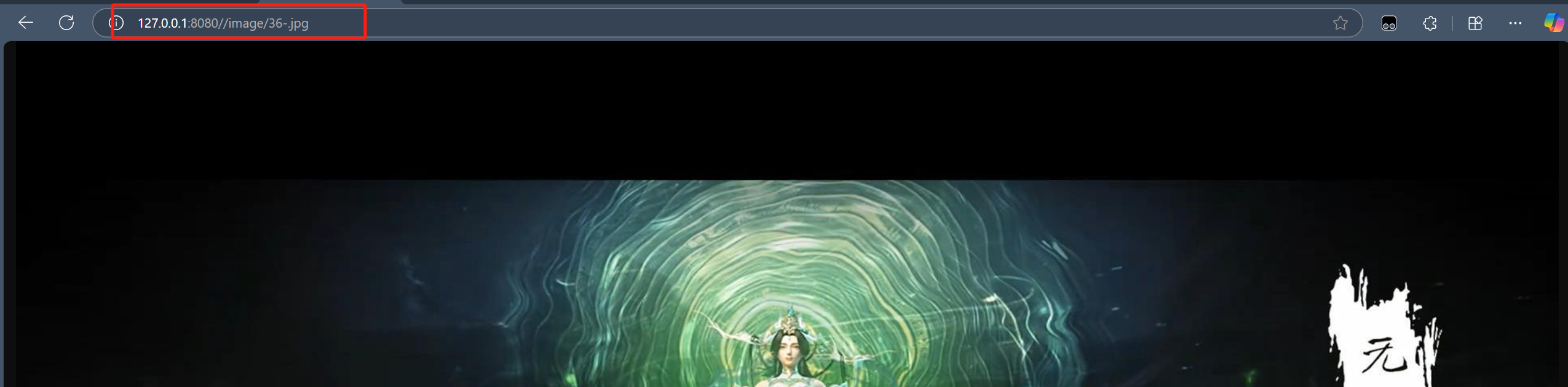

[root@master241 ~]#

5.Test from a client (a browser works too, as in the screenshot above)

[root@master241 ~]# curl -s -H 'host: hubble.yinzhengjie.com' 10.0.0.151 | more

<!doctype html><html><head><meta charset="utf-8"/><title>Hubble UI</title><base href="/"/><meta name="color-scheme" content="only light"/><meta http-equiv

="X-UA-Compatible" content="IE=edge"/><meta name="viewport" content="width=device-width,user-scalable=0,initial-scale=1,minimum-scale=1,maximum-scale=1"/>

<link rel="icon" type="image/png" sizes="32x32" href="favicon-32x32.png"/><link rel="icon" type="image/png" sizes="16x16" href="favicon-16x16.png"/><link

rel="shortcut icon" href="favicon.ico"/><script defer="defer" src="bundle.main.eae50800ddcd18c25e9e.js"></script><link href="bundle.main.1d051ccbd0f5cd578

32e.css" rel="stylesheet"></head><body><div id="app" class="test"></div></body></html>

[root@master241 ~]#

5.Testing Ingress rules in shared mode

1.Prepare the test environment

[root@master241 ~]# kubectl get pods --show-labels -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS

xiuxian-v1 1/1 Running 0 4h34m 10.100.1.42 worker242 <none> <none> apps=xiuxian

xiuxian-v2 1/1 Running 0 4h34m 10.100.2.195 worker243 <none> <none> apps=xiuxian

[root@master241 ~]#

[root@master241 ~]# kubectl describe svc svc-xiuxian | grep Endpoints

Endpoints: 10.100.1.42:80,10.100.2.195:80

[root@master241 ~]#

[root@master241 ~]# kubectl get svc svc-xiuxian

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-xiuxian ClusterIP 10.204.89.73 <none> 80/TCP 3h58m

[root@master241 ~]#

2.Create an Ingress rule that selects shared mode

[root@master241 ~]# cat ing-xiuxain-cilium.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ing-xiuxian
  annotations:
    # Cilium was installed with loadbalancerMode=dedicated; this Ingress overrides it to test shared mode
    # ingress.cilium.io/loadbalancer-mode: 'dedicated'
    ingress.cilium.io/loadbalancer-mode: 'shared'
    ingress.cilium.io/service-type: 'LoadBalancer'
spec:
  ingressClassName: cilium
  rules:
  - host: xiuxian.yinzhengjie.com
    http:
      paths:
      - backend:
          service:
            name: svc-xiuxian
            port:
              number: 80
        path: /
        pathType: Prefix

[root@master241 ~]#

[root@master241 ~]# kubectl apply -f ing-xiuxain-cilium.yaml

ingress.networking.k8s.io/ing-xiuxian created

[root@master241 ~]#

[root@master241 ~]# kubectl get ing ing-xiuxian # Note that ADDRESS is the same IP as the cilium-ingress Service in the kube-system namespace.

NAME CLASS HOSTS ADDRESS PORTS AGE

ing-xiuxian cilium xiuxian.yinzhengjie.com 10.0.0.150 80 6s

[root@master241 ~]#

[root@master241 ~]# kubectl get svc -n kube-system cilium-ingress

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress LoadBalancer 10.206.109.188 10.0.0.150 80:30498/TCP,443:30875/TCP 53m

[root@master241 ~]#

[root@master241 ~]# kubectl describe ingress ing-xiuxian

Name: ing-xiuxian

Labels: <none>

Namespace: default

Address: 10.0.0.150

Ingress Class: cilium

Default backend: <default>

Rules:
  Host                     Path  Backends
  ----                     ----  --------
  xiuxian.yinzhengjie.com
                           /   svc-xiuxian:80 (10.100.1.42:80,10.100.2.195:80)

Annotations:      ingress.cilium.io/loadbalancer-mode: shared
                  ingress.cilium.io/service-type: LoadBalancer

Events: <none>

[root@master241 ~]#

3.Verify (as in the screenshots above, you can also test from Windows; remember to add a hosts entry for the name)

[root@master241 ~]# curl -s -H 'host: xiuxian.yinzhengjie.com' 10.0.0.150

<!DOCTYPE html>

<html><head><meta charset="utf-8"/><title>yinzhengjie apps v1</title><style>div img {width: 900px;height: 600px;margin: 0;}</style></head><body><h1 style="color: green">凡人修仙传 v1 </h1><div><img src="1.jpg"><div></body></html>

[root@master241 ~]#

[root@master241 ~]# curl -s -H 'host: xiuxian.yinzhengjie.com' 10.0.0.150

<!DOCTYPE html>

<html><head><meta charset="utf-8"/><title>yinzhengjie apps v2</title><style>div img {width: 900px;height: 600px;margin: 0;}</style></head><body><h1 style="color: red">凡人修仙传 v2 </h1><div><img src="2.jpg"><div></body></html>

[root@master241 ~]#

II.Cilium advanced features and tuning options

1.kubernetes without kube-proxy

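Cilium can act as the CNI plugin and, at the same time, replace kube-proxy.

- 1.The kube-proxy replacement is called "eBPF kube-proxy replacement";
- 2.It handles Services of type ClusterIP, NodePort and LoadBalancer, as well as Services that use externalIPs;
- 3.It supports hostPort port mapping for containers;
- 4.Note that when a node has several network interfaces, the kubelet on each node must be told the cluster-internal IP explicitly with "--node-ip", otherwise Cilium may misbehave.

In "eBPF kube-proxy replacement" mode, Cilium supports several advanced features:

- Client Source IP Preservation
- Maglev Consistent Hashing
- Direct Server Return(DSR)
- Hybrid DSR and SNAT Mode
- Socket LoadBalancer Bypass
- LoadBalancer & NodePort XDP Acceleration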

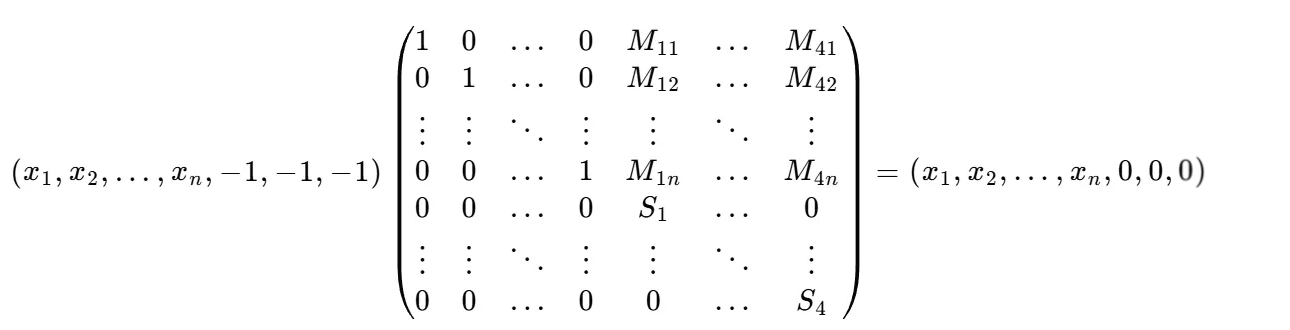

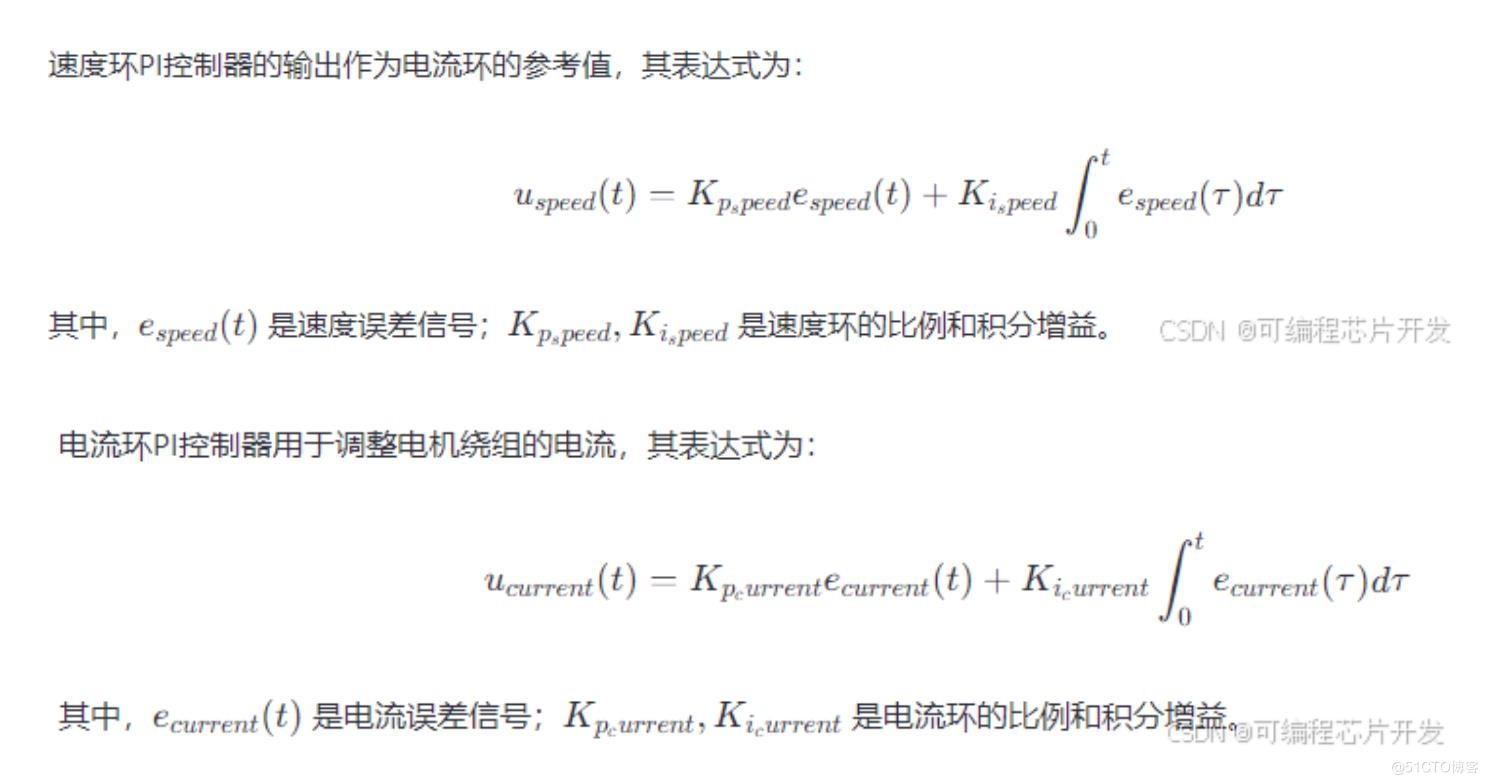

2.Client Source IP Preservation

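| Internal traffic policy | External traffic policy | Service backends used for North-South traffic | Service backends used for East-West traffic |
|---|---|---|---|
| Cluster | Cluster | ALL(default) | ALL(default) |
| Cluster | Local | Node-local only | ALL(default) |
| Local | Cluster | ALL(default) | Node-local only |
| Local | Local | Node-local only | Node-local only |

"Client Source IP Preservation" keeps the client's IP address intact:

- 1.When a client requests a NodePort Service, Kubernetes may apply SNAT; Cilium can help avoid that SNAT so the real client IP is preserved;
- 2.SNAT behaviour for external requests under the external traffic policy (externalTrafficPolicy):
  - Local: the request does not need SNAT; both Cilium and kube-proxy support this.
  - Cluster: the request needs SNAT, but when Cilium runs in DSR or Hybrid mode it preserves the source address of TCP traffic.
- 3.The internal traffic policy (internalTrafficPolicy) only affects east-west (E-W) traffic, and such requests do not need SNAT by default.

With both policies in play, the table above shows which backend Pods of a Service are eligible to receive traffic.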

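The traffic policy table can be read as a small lookup: north-south traffic follows the external policy, east-west traffic follows the internal policy, and "Local" narrows the candidates to backends on the node that received the packet. The Python sketch below only illustrates that reading; the function name is invented for the example and the backend list reuses the two xiuxian Pods from the earlier test.

```python
# (pod IP, node) pairs taken from the xiuxian test Pods shown earlier.
BACKENDS = [("10.100.1.42", "worker242"), ("10.100.2.195", "worker243")]


def eligible_backends(direction, receiving_node,
                      internal="Cluster", external="Cluster",
                      backends=BACKENDS):
    """Return the backend IPs a request may be sent to, per the policy table."""
    if direction == "north-south":
        policy = external      # externalTrafficPolicy governs N-S traffic
    elif direction == "east-west":
        policy = internal      # internalTrafficPolicy governs E-W traffic
    else:
        raise ValueError(f"unknown direction: {direction!r}")

    if policy == "Cluster":
        return [ip for ip, _ in backends]
    if policy == "Local":
        return [ip for ip, node in backends if node == receiving_node]
    raise ValueError(f"unknown traffic policy: {policy!r}")


print(eligible_backends("north-south", "worker242", external="Local"))
print(eligible_backends("north-south", "master241", external="Local"))
print(eligible_backends("east-west", "worker243", external="Local"))
```

The second call returns an empty list: with the Local policy, a node without a local backend has nowhere to send the request, which is the usual trade-off for keeping the client address without SNAT.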
3.Maglev Consistent Hashing

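Cilium can apply Maglev scheduling to external requests on north-south (N-S) traffic.

- 1.The default load-balancing algorithm is Random;
- 2.Maglev is a variant of consistent hashing that copes better with failures;
- 3.Backend selection hashes the packet's 5-tuple, so every node picks the same backend for a given flow without synchronizing state with other nodes;
- 4.After a node failure, rebalancing affects only a small share of flows.

Configuring Cilium to use the Maglev algorithm:

- 1.Maglev lets the user choose the size of the lookup table;
- 2.The table must be significantly larger than the expected number of backend Pods of a Service;
- 3.In practice, the table size should be at least 100 times the maximum number of backend Pods.

Example enabling Maglev consistent hashing:

[root@master241 ~]# cilium install \
  --set kubeProxyReplacement=true \
  ...
  --set loadBalancer.algorithm=maglev \
  --set maglev.tableSize=65521 \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT}

Parameters:

loadBalancer.algorithm=maglev enables the Maglev load-balancing algorithm.

maglev.tableSize=65521 sets the size of the hash lookup table; it must be significantly larger than the number of backend Pods of a Service.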
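To make the lookup-table idea concrete, here is a small Python sketch of the table population scheme from the published Maglev design: each backend gets its own permutation of the table slots, and backends take turns claiming their next free slot until the table is full. It is an illustration only, not Cilium's eBPF implementation. The hash function (SHA-256), the table size 509, the third backend address and the sample flow are all assumptions chosen for the example.

```python
import hashlib


def _hash(value: str, salt: str) -> int:
    """Stable 64-bit hash; SHA-256 is an arbitrary choice for this sketch."""
    digest = hashlib.sha256(f"{salt}:{value}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def _is_prime(n: int) -> bool:
    return n >= 2 and all(n % d for d in range(2, int(n ** 0.5) + 1))


def build_table(backends: list, size: int) -> list:
    """Fill a table of prime `size`; backends claim free slots in turn."""
    if not backends:
        raise ValueError("at least one backend is required")
    if not _is_prime(size):
        raise ValueError("table size must be prime so each permutation covers all slots")
    offsets = [_hash(b, "offset") % size for b in backends]
    skips = [_hash(b, "skip") % (size - 1) + 1 for b in backends]
    steps = [0] * len(backends)   # how far each backend has walked its permutation
    table = [None] * size
    filled = 0
    while True:
        for i, backend in enumerate(backends):
            slot = (offsets[i] + steps[i] * skips[i]) % size
            while table[slot] is not None:      # skip slots already claimed
                steps[i] += 1
                slot = (offsets[i] + steps[i] * skips[i]) % size
            table[slot] = backend
            steps[i] += 1
            filled += 1
            if filled == size:
                return table


def pick_backend(table: list, five_tuple: tuple) -> str:
    """Map a flow's 5-tuple to a table slot; needs no state shared between nodes."""
    key = "|".join(str(field) for field in five_tuple)
    return table[_hash(key, "flow") % len(table)]


def table_large_enough(size: int, max_backends: int) -> bool:
    """Rule of thumb from the text: at least 100 slots per backend."""
    return size >= 100 * max_backends


backends = ["10.100.1.42:80", "10.100.2.195:80", "10.100.3.7:80"]  # third one is hypothetical
before = build_table(backends, 509)
after = build_table(backends[:2], 509)   # the hypothetical backend disappears

survivors = set(backends[:2])
owned = sum(1 for b in before if b in survivors)
moved = sum(1 for old, new in zip(before, after) if old in survivors and old != new)
flow = ("203.0.113.7", 40000, "10.0.0.150", 80, "TCP")  # made-up client flow

print("slots per backend:", {b: before.count(b) for b in backends})
print(f"slots of surviving backends that changed owner: {moved} of {owned}")
print("backend for sample flow:", pick_backend(before, flow))
```

Because every backend claims exactly one slot per round, slot counts differ by at most one, and the printed "changed owner" figure gives a feel for how little traffic moves when a backend disappears. Check the Cilium documentation for the table sizes that maglev.tableSize actually accepts before choosing a value.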

4.Direct Server Return(DSR)

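DSR is what makes "Client Source IP Preservation" possible for north-south traffic. With DSR, an external request that is forwarded from host A to host B is not SNATed; host B sends the response straight back to the external client, which also improves performance. Example enabling DSR:

[root@master241 ~]# cilium install \
  --set kubeProxyReplacement=true \
  ...
  --set loadBalancer.mode=dsr \
  --set loadBalancer.dsrDispatch=opt

Tip: in some setups a backend belongs to several Services at once. In DSR mode the Service IP and port can be carried in the options field of the IPv4 header, or in the IPv6 "Destination Option extension header", to tell the backend which Service to answer as. For TCP only the SYN packet carries this extra information, which keeps the impact on the MTU small. This option-based dispatch is available only with native routing; encapsulation mode does not support it.

5.Hybrid DSR and SNAT Mode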

Cilium can also mix DSR and SNAT: DSR is applied to TCP traffic and SNAT to UDP traffic. The valid values of the loadBalancer.mode parameter are therefore snat (the default), dsr and hybrid. Example enabling hybrid load balancing:

[root@master241 ~]# cilium install \
  --set kubeProxyReplacement=true \
  --set routingMode=native \
  ...
  --set loadBalancer.mode=hybrid \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT}
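The three values of loadBalancer.mode boil down to one question per packet: is the request forwarded with SNAT or with DSR? The Python sketch below illustrates the rule as described in this section, for a request that arrives on one node and is forwarded to a backend on another. The function names and the client address 203.0.113.7 are made up for the example.

```python
def forwarding_method(lb_mode: str, protocol: str) -> str:
    """Return 'dsr' or 'snat' for a request that must cross nodes."""
    protocol = protocol.upper()
    if protocol not in ("TCP", "UDP"):
        raise ValueError(f"unsupported protocol: {protocol!r}")
    if lb_mode in ("snat", "dsr"):
        return lb_mode                       # one method for all traffic
    if lb_mode == "hybrid":
        return "dsr" if protocol == "TCP" else "snat"
    raise ValueError(f"unknown loadBalancer.mode: {lb_mode!r}")


def source_seen_by_backend(client_ip, forwarding_node_ip, lb_mode, protocol):
    """With DSR the client address survives; with SNAT the backend sees the node."""
    if forwarding_method(lb_mode, protocol) == "dsr":
        return client_ip
    return forwarding_node_ip


for mode in ("snat", "dsr", "hybrid"):
    for proto in ("TCP", "UDP"):
        seen = source_seen_by_backend("203.0.113.7", "10.0.0.241", mode, proto)
        print(f"{mode:6} {proto}: backend sees {seen}")
```

In hybrid mode the TCP rows keep the client address while the UDP rows show the forwarding node's address, which matches the split described above.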

6.Socket LoadBalancer Bypass

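The socket-level load balancer:

- The socket-level load balancer is transparent to Cilium's lower-layer datapath;
- The application believes it is connected to the Service, but the corresponding socket in the kernel is connected directly to a backend address, and no NAT is needed for this.

Cilium supports bypassing the socket LB:

- Bypassing the socket LB means that, for client requests, the socket in the kernel keeps the ClusterIP and its port:
  - 1.Some setups depend on the ClusterIP the client originally requested, for example an Istio sidecar;
  - 2.Some environments do not support the socket LB at all, for example container runtimes such as KubeVirt, Kata Containers and gVisor;
- The feature is configured with the "socketLB.hostNamespaceOnly" parameter.

Test example:

[root@master241 ~]# cilium install \
  --set kubeProxyReplacement=true \
  --set routingMode=native \
  ...
  --set socketLB.hostNamespaceOnly=true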
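The effect of socketLB.hostNamespaceOnly can be pictured as a decision made at connect() time. The Python sketch below is a simplified model of the behaviour described above, not Cilium code: the function names are invented, the Service map reuses svc-xiuxian and its two endpoints from the earlier test, and always choosing the first backend is a simplification to keep the output deterministic.

```python
# ClusterIP:port -> backends, taken from svc-xiuxian in the shared-mode test.
SERVICES = {
    ("10.204.89.73", 80): [("10.100.1.42", 80), ("10.100.2.195", 80)],
}


def socket_lb_applies(in_host_namespace: bool, host_namespace_only: bool) -> bool:
    """Socket LB acts everywhere unless it is restricted to the host namespace."""
    return in_host_namespace or not host_namespace_only


def connected_peer(dst, in_host_namespace, host_namespace_only, services=SERVICES):
    """Address the kernel socket ends up connected to after connect()."""
    if dst in services and socket_lb_applies(in_host_namespace, host_namespace_only):
        # Translated once at connect(); a real balancer would pick by its algorithm.
        return services[dst][0]
    # Bypassed: the socket keeps the ClusterIP, so a sidecar can still see it.
    return dst


cluster_ip = ("10.204.89.73", 80)
print("pod, socket LB everywhere:   ", connected_peer(cluster_ip, False, False))
print("pod, hostNamespaceOnly=true: ", connected_peer(cluster_ip, False, True))
print("host, hostNamespaceOnly=true:", connected_peer(cluster_ip, True, True))
```

Only the middle case leaves the ClusterIP on the socket, which is what tools that inspect the original destination rely on.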

7.LoadBalancer & NodePort XDP Acceleration

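When an external request is sent to a NodePort or LoadBalancer Service, or to a Service with externalIPs, and has to be forwarded to another node, Cilium can accelerate it with XDP. The feature is disabled by default; set the parameter "loadBalancer.acceleration" to "native" to enable it. It can be combined with LoadBalancer implementations for Kubernetes such as MetalLB. It was introduced in Cilium 1.8 and only works on a single device with direct routing enabled. It can be used together with any of the dsr, snat or hybrid load-balancing modes. Example enabling XDP acceleration with hybrid load balancing:

[root@master241 ~]# cilium install \
  --set kubeProxyReplacement=true \
  --set routingMode=native \
  ...
  --set loadBalancer.acceleration=native \
  --set loadBalancer.mode=hybrid \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT}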
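Long chains of `--set` flags are easy to mistype, and a misspelled key can go unnoticed until the feature turns out not to be active. The Python sketch below is a hypothetical pre-flight check, not part of Cilium or Helm: it only knows the keys and values used in this section, and the rule that native acceleration expects native routing is taken from the statement above about direct routing.

```python
import difflib

# Keys that appear in this section's `cilium install --set ...` examples.
KNOWN_KEYS = sorted({
    "kubeProxyReplacement", "routingMode",
    "loadBalancer.mode", "loadBalancer.acceleration",
    "loadBalancer.algorithm", "loadBalancer.dsrDispatch",
    "maglev.tableSize", "socketLB.hostNamespaceOnly",
    "k8sServiceHost", "k8sServicePort",
})


def check_values(values: dict) -> list:
    """Return a list of human-readable problems; an empty list means no findings."""
    problems = []
    for key in values:
        if key not in KNOWN_KEYS:
            hint = difflib.get_close_matches(key, KNOWN_KEYS, n=1)
            suffix = f" (did you mean {hint[0]}?)" if hint else ""
            problems.append(f"unknown key {key}{suffix}")

    mode = values.get("loadBalancer.mode", "snat")
    if mode not in ("snat", "dsr", "hybrid"):
        problems.append(f"loadBalancer.mode={mode} is not one of snat, dsr, hybrid")

    acceleration = values.get("loadBalancer.acceleration", "disabled")
    if acceleration == "native" and values.get("routingMode") != "native":
        problems.append("loadBalancer.acceleration=native expects routingMode=native")
    return problems


good = {
    "kubeProxyReplacement": "true",
    "routingMode": "native",
    "loadBalancer.acceleration": "native",
    "loadBalancer.mode": "hybrid",
}
typo = dict(good)
typo["loadBalacner.mode"] = typo.pop("loadBalancer.mode")  # deliberately misspelled

print(check_values(good))
print(check_values(typo))
```

The second call flags the misspelled key and suggests the intended one; without such a check the install would fall back to the default snat mode.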