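HOG stands for Histogram of Oriented Gradients. It is a descriptor used to extract features that represent an image, and the result is essentially a single high-dimensional feature vector.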

HOG feature extraction steps

-

图像预处理(gamma校正和灰度化)【option】

-

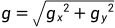

计算每一个像素点的梯度值,得到梯度图(尺寸与原图一致)

sobel计算水平和竖直梯度,并通过公式求得梯度的方向(边缘方向与梯度方向垂直)

梯度方向取绝对值,梯度方向取值范围为[0,180]

-

统计每个cell的梯度直方图(不同梯度的个数),形成每个cell的descriptor

-

将每几个cell组成一个block(3*3),一个block内所有cell的特征串联起来得到该block的HOG特征descripitor

-

将图像image内所有block的HOG特征descripitor串联起来得到该image的HOG特征descripitor,即最终分类的特征向量
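To make the steps above concrete, here is a minimal pure-Python sketch of steps 2 to 5. It is a simplification under stated assumptions, not the implementation used later in this post: it uses central differences instead of Sobel, puts each pixel's whole magnitude into a single bin (no interpolation), and assumes 9 unsigned bins over [0°, 180°), 8*8-pixel cells and 2*2-cell blocks with L2 normalization. The function names (`gradients`, `cell_histogram`, `hog_features`) are made up for illustration.

```python
import math


def gradients(img):
    """Return (magnitude, unsigned angle in degrees) for every pixel."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences; indices are clamped at the image border.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag[y][x] = math.hypot(gx, gy)
            # Fold the signed direction into [0, 180).
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, ang


def cell_histogram(mag, ang, y0, x0, cell, bins):
    """Magnitude-weighted orientation histogram of one cell."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            hist[int(ang[y][x] // bin_width) % bins] += mag[y][x]
    return hist


def hog_features(img, cell=8, block=2, bins=9):
    """Concatenate L2-normalized block descriptors into one feature vector."""
    mag, ang = gradients(img)
    rows, cols = len(img) // cell, len(img[0]) // cell
    cells = [[cell_histogram(mag, ang, r * cell, c * cell, cell, bins)
              for c in range(cols)] for r in range(rows)]
    feature = []
    # Blocks overlap: the block window moves one cell at a time.
    for r in range(rows - block + 1):
        for c in range(cols - block + 1):
            vec = [v for dr in range(block) for dc in range(block)
                   for v in cells[r + dr][c + dc]]
            norm = math.sqrt(sum(v * v for v in vec))
            feature.extend((v / norm if norm > 0 else 0.0) for v in vec)
    return feature


# Synthetic 32x64 image (width x height) with one vertical edge in the middle.
img = [[0.0] * 16 + [255.0] * 16 for _ in range(64)]
feature = hog_features(img)
print(len(feature))  # 7 * 3 blocks * 4 cells * 9 bins = 756
```

With the same settings, a 64*128 window gives 15 * 7 blocks of 36 values each, i.e. 3780 features, which is why the descriptor is described as a high-dimensional vector.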

HOG feature extraction in Python
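One detail of the implementation in this section is easy to miss: each pixel does not vote for a single bin. Its gradient magnitude is split between the two nearest orientation bins in proportion to how close the angle is to each. Below is a small standalone sketch of that voting rule, assuming signed angles in [0°, 360°) and 8 bins of 45° as in the class; the function name `vote` is hypothetical.

```python
def vote(angle, magnitude, bin_size=8):
    """Split one pixel's magnitude between its two nearest orientation bins."""
    angle_unit = 360 // bin_size                  # bin width in degrees
    idx = int(angle // angle_unit) % bin_size     # lower bin
    frac = (angle % angle_unit) / angle_unit      # 0.0 exactly on the lower bin
    hist = [0.0] * bin_size
    hist[idx] += magnitude * (1 - frac)
    hist[(idx + 1) % bin_size] += magnitude * frac  # wraps from the last bin to bin 0
    return hist


# 67.5 degrees lies halfway between bin 1 (45 degrees) and bin 2 (90 degrees).
print(vote(67.5, 10.0))  # [0.0, 5.0, 5.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

The split keeps the total vote equal to the magnitude, and it avoids abrupt histogram changes when an angle sits near a bin boundary.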

    import cv2
    import numpy as np
    import math
    import matplotlib.pyplot as plt


    class Hog_descriptor():
        def __init__(self, img, cell_size=16, bin_size=8):
            self.img = img
            # Gamma correction (square root), then rescale to [0, 255]
            self.img = np.sqrt(img / float(np.max(img)))
            self.img = self.img * 255
            self.cell_size = cell_size
            self.bin_size = bin_size
            assert type(self.bin_size) == int, "bin_size should be integer,"
            assert type(self.cell_size) == int, "cell_size should be integer,"
            assert 360 % self.bin_size == 0, "360 should be divisible by bin_size"
            # Integer division: in Python 3, 360 / bin_size would be a float
            self.angle_unit = 360 // self.bin_size

        def extract(self):
            height, width = self.img.shape
            gradient_magnitude, gradient_angle = self.global_gradient()
            # One histogram of bin_size values per cell (// keeps the shape integral)
            cell_gradient_vector = np.zeros((height // self.cell_size, width // self.cell_size, self.bin_size))
            for i in range(cell_gradient_vector.shape[0]):
                for j in range(cell_gradient_vector.shape[1]):
                    cell_magnitude = gradient_magnitude[i * self.cell_size:(i + 1) * self.cell_size,
                                                        j * self.cell_size:(j + 1) * self.cell_size]
                    cell_angle = gradient_angle[i * self.cell_size:(i + 1) * self.cell_size,
                                                j * self.cell_size:(j + 1) * self.cell_size]
                    cell_gradient_vector[i][j] = self.cell_gradient(cell_magnitude, cell_angle)

            hog_image = self.render_gradient(np.zeros([height, width]), cell_gradient_vector)

            # Slide a 2x2-cell block over the cell grid and L2-normalize each block
            hog_vector = []
            for i in range(cell_gradient_vector.shape[0] - 1):
                for j in range(cell_gradient_vector.shape[1] - 1):
                    block_vector = []
                    block_vector.extend(cell_gradient_vector[i][j])
                    block_vector.extend(cell_gradient_vector[i][j + 1])
                    block_vector.extend(cell_gradient_vector[i + 1][j])
                    block_vector.extend(cell_gradient_vector[i + 1][j + 1])
                    magnitude = math.sqrt(sum(v ** 2 for v in block_vector))
                    if magnitude != 0:
                        block_vector = [v / magnitude for v in block_vector]
                    hog_vector.append(block_vector)
            return hog_vector, hog_image

        def global_gradient(self):
            gradient_values_x = cv2.Sobel(self.img, cv2.CV_64F, 1, 0, ksize=5)
            gradient_values_y = cv2.Sobel(self.img, cv2.CV_64F, 0, 1, ksize=5)
            # Gradient magnitude sqrt(gx^2 + gy^2). The referenced code averaged gx and gy
            # with cv2.addWeighted, which lets derivatives of opposite sign cancel out.
            gradient_magnitude = cv2.magnitude(gradient_values_x, gradient_values_y)
            gradient_angle = cv2.phase(gradient_values_x, gradient_values_y, angleInDegrees=True)
            return gradient_magnitude, gradient_angle

        def cell_gradient(self, cell_magnitude, cell_angle):
            # Each pixel splits its magnitude between the two nearest bins
            orientation_centers = [0] * self.bin_size
            for i in range(cell_magnitude.shape[0]):
                for j in range(cell_magnitude.shape[1]):
                    gradient_strength = cell_magnitude[i][j]
                    gradient_angle = cell_angle[i][j]
                    min_angle, max_angle, mod = self.get_closest_bins(gradient_angle)
                    orientation_centers[min_angle] += (gradient_strength * (1 - (mod / self.angle_unit)))
                    orientation_centers[max_angle] += (gradient_strength * (mod / self.angle_unit))
            return orientation_centers

        def get_closest_bins(self, gradient_angle):
            idx = int(gradient_angle / self.angle_unit)
            mod = gradient_angle % self.angle_unit
            if idx == self.bin_size:
                return idx - 1, (idx) % self.bin_size, mod
            return idx, (idx + 1) % self.bin_size, mod

        def render_gradient(self, image, cell_gradient):
            cell_width = self.cell_size / 2
            max_mag = np.array(cell_gradient).max()
            for x in range(cell_gradient.shape[0]):
                for y in range(cell_gradient.shape[1]):
                    # Normalize a copy so the histograms used for the feature vector stay untouched
                    cell_grad = cell_gradient[x][y] / max_mag
                    angle = 0
                    angle_gap = self.angle_unit
                    for magnitude in cell_grad:
                        angle_radian = math.radians(angle)
                        x1 = int(x * self.cell_size + magnitude * cell_width * math.cos(angle_radian))
                        y1 = int(y * self.cell_size + magnitude * cell_width * math.sin(angle_radian))
                        x2 = int(x * self.cell_size - magnitude * cell_width * math.cos(angle_radian))
                        y2 = int(y * self.cell_size - magnitude * cell_width * math.sin(angle_radian))
                        cv2.line(image, (y1, x1), (y2, x2), int(255 * math.sqrt(magnitude)))
                        angle += angle_gap
            return image


    img = cv2.imread('data/picture1.png', cv2.IMREAD_GRAYSCALE)
    hog = Hog_descriptor(img, cell_size=8, bin_size=8)
    vector, image = hog.extract()
    plt.imshow(image, cmap=plt.cm.gray)
    plt.show()

HOG + SVM pedestrian detection

Dataset: ftp://ftp.inrialpes.fr/pub/lear/douze/data/INRIAPerson.ta
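Before the training script, it may help to see what the trained detector looks like numerically. As far as I understand it, a linear SVM reduces to one weight vector `w` and an offset `rho`, and the `get_svm_detector` helper in the script below appends `-rho` to the weights so that the bias travels with them. A window is then scored as `w·x - rho`. The sketch below reproduces only that arithmetic with made-up numbers; `to_detector` and `window_score` are hypothetical names, not OpenCV functions.

```python
def to_detector(weights, rho):
    """Append -rho so the bias is stored as the last element of the vector."""
    return list(weights) + [-rho]


def window_score(detector, hog_vector):
    """Linear score w.x + b, where b is the detector's last element."""
    *w, b = detector
    return sum(wi * xi for wi, xi in zip(w, hog_vector)) + b


# Made-up 3-dimensional example; a real HOG vector has thousands of entries.
detector = to_detector([0.5, -0.25, 1.0], rho=0.2)
score = window_score(detector, [1.0, 2.0, 0.5])
print(score > 0)  # a positive score would count as a detection at threshold 0
```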

    import cv2
    import numpy as np
    import random


    def load_images(dirname, amout=9999):
        """Read up to `amout` images listed in the .lst file `dirname`."""
        img_list = []
        with open(dirname) as file:
            for img_name in file:
                # Replace the first path component of the listed name with the
                # directory that holds the .lst file
                img_name = dirname.rsplit(r'/', 1)[0] + r'/' + img_name.split('/', 1)[1].strip('\n')
                img_list.append(cv2.imread(img_name))
                amout -= 1
                if amout <= 0:  # limit the number of images read
                    break
        return img_list


    # Randomly crop ten 64*128 patches from each person-free image as negative samples
    def sample_neg(full_neg_lst, neg_list, size):
        random.seed(1)
        width, height = size[1], size[0]
        for i in range(len(full_neg_lst)):
            for j in range(10):
                y = int(random.random() * (len(full_neg_lst[i]) - height))
                x = int(random.random() * (len(full_neg_lst[i][0]) - width))
                neg_list.append(full_neg_lst[i][y:y + height, x:x + width])
        return neg_list


    # wsize: size of the processed window as (height, width), usually 64*128;
    # input images must be at least as large as wsize
    def computeHOGs(img_lst, gradient_lst, wsize=(128, 64)):
        hog = cv2.HOGDescriptor()
        # hog.winSize = wsize
        for i in range(len(img_lst)):
            if img_lst[i].shape[1] >= wsize[1] and img_lst[i].shape[0] >= wsize[0]:
                # Center-crop the image to wsize
                top = (img_lst[i].shape[0] - wsize[0]) // 2
                left = (img_lst[i].shape[1] - wsize[1]) // 2
                roi = img_lst[i][top:top + wsize[0], left:left + wsize[1]]
                gray = cv2.cvtColor(roi, cv2.COLOR_BGR2GRAY)
                gradient_lst.append(hog.compute(gray))
        # return gradient_lst


    def get_svm_detector(svm):
        # Pack the linear SVM into one vector for hog.setSVMDetector:
        # the weights followed by the bias -rho
        sv = svm.getSupportVectors()
        rho, _, _ = svm.getDecisionFunction(0)
        sv = np.transpose(sv)
        return np.append(sv, [[-rho]], 0)


    # Main program

    # Step 1: compute HOG features
    neg_list = []
    pos_list = []
    gradient_lst = []
    labels = []
    hard_neg_list = []
    svm = cv2.ml.SVM_create()
    pos_list = load_images(r'G:/python_project/INRIAPerson/96X160H96/Train/pos.lst')
    full_neg_lst = load_images(r'G:/python_project/INRIAPerson/train_64x128_H96/neg.lst')
    sample_neg(full_neg_lst, neg_list, [128, 64])
    print(len(neg_list))
    computeHOGs(pos_list, gradient_lst)
    labels.extend([+1] * len(pos_list))
    computeHOGs(neg_list, gradient_lst)
    labels.extend([-1] * len(neg_list))

    # Step 2: train the SVM

    svm.setCoef0(0.0)
    svm.setDegree(3)
    criteria = (cv2.TERM_CRITERIA_MAX_ITER + cv2.TERM_CRITERIA_EPS, 1000, 1e-3)
    svm.setTermCriteria(criteria)
    svm.setGamma(0)
    svm.setKernel(cv2.ml.SVM_LINEAR)
    svm.setNu(0.5)
    svm.setP(0.1)  # for EPSILON_SVR, epsilon in loss function?
    svm.setC(0.01)  # From paper, soft classifier
    svm.setType(cv2.ml.SVM_EPS_SVR)  # C_SVC # EPSILON_SVR # may be also NU_SVR # do regression task
    svm.train(np.array(gradient_lst), cv2.ml.ROW_SAMPLE, np.array(labels))

    # Step 3: add the misclassified samples and train a second round

    # Reference: http://masikkk.com/article/SVM-HOG-HardExample/
    hog = cv2.HOGDescriptor()
    hard_neg_list.clear()
    hog.setSVMDetector(get_svm_detector(svm))
    for i in range(len(full_neg_lst)):
        # Any detection on a person-free image is a false positive (a hard example)
        rects, wei = hog.detectMultiScale(full_neg_lst[i], winStride=(4, 4), padding=(8, 8), scale=1.05)
        for (x, y, w, h) in rects:
            # Clamp in case a detection extends past the image border
            x, y = max(x, 0), max(y, 0)
            hardExample = full_neg_lst[i][y:y + h, x:x + w]
            if hardExample.size == 0:
                continue
            hard_neg_list.append(cv2.resize(hardExample, (64, 128)))
    computeHOGs(hard_neg_list, gradient_lst)
    labels.extend([-1] * len(hard_neg_list))
    svm.train(np.array(gradient_lst), cv2.ml.ROW_SAMPLE, np.array(labels))

    # Step 4: save the training result
    hog.setSVMDetector(get_svm_detector(svm))
    hog.save('myHogDector.bin')

Test code

    import cv2
    import numpy as np

    hog = cv2.HOGDescriptor()
    hog.load('myHogDector.bin')
    cap = cv2.VideoCapture(0)
    while True:
        ok, img = cap.read()
        if not ok:  # stop when no frame can be read
            break
        rects, wei = hog.detectMultiScale(img, winStride=(4, 4), padding=(8, 8), scale=1.05)
        for (x, y, w, h) in rects:
            cv2.rectangle(img, (x, y), (x + w, y + h), (0, 0, 255), 2)
        cv2.imshow('a', img)
        if cv2.waitKey(1) & 0xff == 27:  # Esc key
            break
    cap.release()
    cv2.destroyAllWindows()

Code reference:

https://github.com/PENGZhaoqing/Hog-feature/blob/master/hog.py

Blog references:

HOG gradient feature extraction in 80 lines of Python (CSDN blog)

HOG features (Zhihu)

[Computer Vision] INRIA Person Dataset (CSDN blog)
