Flannel WireGuard Mode

1. Environment

| Host | IP |
|---|---|
| ubuntu | 172.16.94.141 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| clab | 0.54.2 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 20.04.6 LTS |
| kernel | 5.11.5 (see the kernel upgrade guide) |

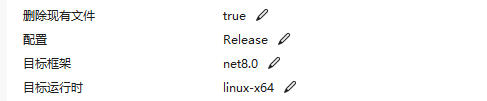
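WireGuard mode depends on WireGuard support in the Linux kernel, which entered mainline in Linux 5.6. kind nodes are containers that share the host kernel, so the host kernel (5.11.5 here) is the one that matters. The function below is a minimal sketch of that version check. The name kernel_has_wireguard is hypothetical, and the check does not detect distribution kernels that backport WireGuard (for example through a DKMS module), so a failed check is not conclusive.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a kernel release string such as "5.11.5"
# or "5.4.0-150-generic" is 5.6 or newer, the first mainline release that
# ships WireGuard. Backported WireGuard modules are NOT detected.
kernel_has_wireguard() {
  local release=$1 major minor
  major=${release%%.*}          # "5.11.5" -> "5"
  minor=${release#*.}           # "5.11.5" -> "11.5"
  minor=${minor%%[!0-9]*}       # "11.5"   -> "11"
  (( major > 5 || (major == 5 && minor >= 6) ))
}
```

On the host, kernel_has_wireguard "$(uname -r)" && echo "in-tree WireGuard" reports whether the running kernel meets the 5.6 minimum.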

2. Install the Services

kind configuration

$ cat install.sh
#!/bin/bash
date
set -v

# 1. prep noCNI env
cat <<EOF | kind create cluster --name=flannel-wireguard --image=kindest/node:v1.23.4 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  # kind installs its own default CNI (kindnet); disable it because we install flannel ourselves
  disableDefaultCNI: true
  # Pod CIDR used by the nodes
  podSubnet: "10.244.0.0/16"
nodes:
  - role: control-plane
  - role: worker
  - role: worker
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.evescn.com"]
    endpoint = ["https://harbor.evescn.com"]
EOF

# 2. install cni
kubectl apply -f ./flannel.yaml

# 3. install necessary tools
# cd /opt/
# curl -L -O "https://gh.api.99988866.xyz/https://github.com/containernetworking/plugins/releases/download/v0.9.0/cni-plugins-linux-amd64-v0.9.0.tgz"
# tar -zxvf cni-plugins-linux-amd64-v0.9.0.tgz
for i in $(docker ps -a --format "table {{.Names}}" | grep flannel)
do
    echo "$i"
    docker cp /opt/bridge "$i":/opt/cni/bin/
    docker cp /usr/bin/ping "$i":/usr/bin/ping
    docker exec -it "$i" bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
    docker exec -it "$i" bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done

flannel.yaml configuration file

# flannel.yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "wireguard"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: harbor.dayuan1997.com/devops/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
        - name: tun
          mountPath: /dev/net/tun
      volumes:
      - name: tun
        hostPath:
          path: /dev/net/tun
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

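flannel.yaml parameters

- Backend.Type
  - Meaning: selects the backend (working mode) that flannel uses.
  - wireguard: flannel runs in WireGuard mode, encrypting cross-node Pod traffic with the kernel WireGuard module.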
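To confirm which backend a running cluster actually uses, the net-conf.json key can be read back from the kube-flannel-cfg ConfigMap. The sed filter below is a minimal sketch that prints the value of "Type". backend_type is a hypothetical helper, and it assumes a single "Type" key per document, which holds for the ConfigMap above; a real JSON parser such as jq would be more robust.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the flannel backend type from a net-conf.json
# document on stdin. Assumes exactly one "Type" key (true for this ConfigMap).
backend_type() {
  sed -n 's/.*"Type"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

Usage: kubectl -n kube-system get configmap kube-flannel-cfg -o jsonpath='{.data.net-conf\.json}' | backend_type should print wireguard for the configuration above.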

- Install the k8s cluster and the flannel service

# ./install.sh
Creating cluster "flannel-wireguard" ...
 ✓ Ensuring node image (kindest/node:v1.23.4) 🖼
 ✓ Preparing nodes 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-flannel-wireguard"
You can now use your cluster with:

kubectl cluster-info --context kind-flannel-wireguard

Thanks for using kind! 😊

- Check the installed services

root@kind:~# kubectl get pods -A
NAMESPACE            NAME                                                      READY   STATUS    RESTARTS   AGE
kube-system          coredns-64897985d-dwbzg                                   1/1     Running   0          7m
kube-system          coredns-64897985d-wxhwb                                   1/1     Running   0          7m
kube-system          etcd-flannel-wireguard-control-plane                      1/1     Running   0          7m15s
kube-system          kube-apiserver-flannel-wireguard-control-plane            1/1     Running   0          7m13s
kube-system          kube-controller-manager-flannel-wireguard-control-plane   1/1     Running   0          7m13s
kube-system          kube-flannel-ds-4pn8v                                     1/1     Running   0          6m43s
kube-system          kube-flannel-ds-62dvf                                     1/1     Running   0          6m43s
kube-system          kube-flannel-ds-dnvbq                                     1/1     Running   0          6m43s
kube-system          kube-proxy-hnww9                                          1/1     Running   0          6m44s
kube-system          kube-proxy-lr88h                                          1/1     Running   0          6m44s
kube-system          kube-proxy-vbhrl                                          1/1     Running   0          7m1s
kube-system          kube-scheduler-flannel-wireguard-control-plane            1/1     Running   0          7m13s
local-path-storage   local-path-provisioner-5ddd94ff66-l9hxt                   1/1     Running   0          7m
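With disableDefaultCNI: true, the nodes stay NotReady and CoreDNS stays Pending until a CNI is installed, so the three kube-flannel-ds Pods (one per node) are the first thing to check. The awk filter below is a sketch that counts the flannel Pods that are Running with all containers ready in kubectl get pods -A output. count_ready_flannel is a hypothetical name; kubectl -n kube-system rollout status ds/kube-flannel-ds is the built-in way to wait for the DaemonSet.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# how many kube-flannel-ds Pods are Running with READY "n/n".
count_ready_flannel() {
  awk '$2 ~ /^kube-flannel-ds-/ {
         split($3, ready, "/")                 # READY column, e.g. "1/1"
         if ($4 == "Running" && ready[1] == ready[2]) n++
       }
       END { print n + 0 }'
}
```

For the output above, kubectl get pods -A | count_ready_flannel prints 3.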

Deploy test Pods in the k8s cluster to test the network

root@kind:~# cat cni.yaml
apiVersion: apps/v1
kind: DaemonSet
#kind: Deployment
metadata:
  labels:
    app: cni
  name: cni
spec:
  #replicas: 1
  selector:
    matchLabels:
      app: cni
  template:
    metadata:
      labels:
        app: cni
    spec:
      containers:
      - image: harbor.dayuan1997.com/devops/nettool:0.9
        name: nettoolbox
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: serversvc
spec:
  type: NodePort
  selector:
    app: cni
  ports:
  - name: cni
    port: 80
    targetPort: 80
    nodePort: 32000

root@kind:~# kubectl apply -f cni.yaml
daemonset.apps/cni created
service/serversvc created
root@kind:~# kubectl run net --image=harbor.dayuan1997.com/devops/nettool:0.9
pod/net created

- Check the deployed Pods and Service

root@kind:~# kubectl get pods -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP           NODE                        NOMINATED NODE   READINESS GATES
cni-2zz99   1/1     Running   0          13s   10.244.1.2   flannel-wireguard-worker    <none>           <none>
cni-8c9np   1/1     Running   0          13s   10.244.2.5   flannel-wireguard-worker2   <none>           <none>
net         1/1     Running   0          10s   10.244.1.3   flannel-wireguard-worker    <none>           <none>
root@kind:~# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        7m49s
serversvc    NodePort    10.96.105.87   <none>        80:32000/TCP   11s

3. Network Tests

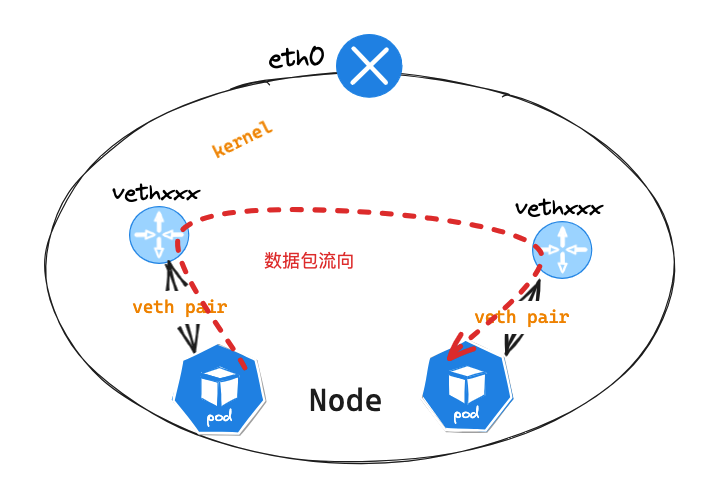

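Pod-to-Pod communication on the same node

The packet forwarding flow is the same as for same-node communication in the Flannel UDP mode document.

Flannel handles same-node traffic at layer 2: the cni0 bridge acts as a layer-2 switch between the Pods' veth interfaces.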

Pod-to-Pod communication across nodes

Pod information

## IP information
root@kind:~# kubectl exec -it net -- ip a l
3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1420 qdisc noqueue state UP group default
    link/ether fa:f0:48:65:c5:28 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.244.1.3/24 brd 10.244.1.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::f8f0:48ff:fe65:c528/64 scope link
       valid_lft forever preferred_lft forever

## Routing information
root@kind:~# kubectl exec -it net -- ip r s
default via 10.244.1.1 dev eth0
10.244.0.0/16 via 10.244.1.1 dev eth0
10.244.1.0/24 dev eth0 proto kernel scope link src 10.244.1.3

Information on the node that hosts the Pod

root@kind:~# docker exec -it flannel-wireguard-worker bash

## IP information
root@flannel-wireguard-worker:/# ip a l
2: flannel-wg: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN group default
    link/none
    inet 10.244.1.0/32 scope global flannel-wg
       valid_lft forever preferred_lft forever
3: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1420 qdisc noqueue state UP group default qlen 1000
    link/ether 3e:37:f3:a0:8e:a0 brd ff:ff:ff:ff:ff:ff
    inet 10.244.1.1/24 brd 10.244.1.255 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::3c37:f3ff:fea0:8ea0/64 scope link
       valid_lft forever preferred_lft forever
4: veth7ff69c99@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1420 qdisc noqueue master cni0 state UP group default
    link/ether 06:f8:d3:64:ef:e4 brd ff:ff:ff:ff:ff:ff link-netns cni-ebbfc658-feb1-eca0-91aa-ac815a1a8504
    inet6 fe80::4f8:d3ff:fe64:efe4/64 scope link
       valid_lft forever preferred_lft forever
5: vethd38faacd@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1420 qdisc noqueue master cni0 state UP group default
    link/ether ea:cf:56:fa:c2:da brd ff:ff:ff:ff:ff:ff link-netns cni-e938b0d2-ea2f-f1cf-0823-af51a9ec0f7b
    inet6 fe80::e8cf:56ff:fefa:c2da/64 scope link
       valid_lft forever preferred_lft forever
9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::4/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:4/64 scope link
       valid_lft forever preferred_lft forever

## Routing information
root@flannel-wireguard-worker:/# ip r s
default via 172.18.0.1 dev eth0
10.244.0.0/16 dev flannel-wg scope link
10.244.1.0/24 dev cni0 proto kernel scope link src 10.244.1.1
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.4
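The node's forwarding decision follows longest-prefix matching: 10.244.2.5 matches both default (/0) and 10.244.0.0/16 but not 10.244.1.0/24, so the /16 route through flannel-wg wins, while a local Pod address such as 10.244.1.3 matches the more specific /24 on cni0. The script below is a sketch of that lookup over ip route show output. ip2int and route_lookup are hypothetical helpers; they handle IPv4 only and ignore metrics, route types and policy routing. On a real node, ip route get 10.244.2.5 returns the kernel's own answer.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: longest-prefix match over `ip route show` output.
# IPv4 only; route metrics, route types and policy rules are ignored.

ip2int() {                     # "a.b.c.d" -> 32-bit integer
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

route_lookup() {               # usage: route_lookup DEST < routes
  local dst line prefix net len mask best="" best_len=-1
  dst=$(ip2int "$1")
  while read -r line; do
    [ -n "$line" ] || continue
    prefix=${line%% *}         # "default" or "a.b.c.d[/len]"
    if [ "$prefix" = default ]; then
      net=0.0.0.0 len=0
    else
      net=${prefix%/*} len=${prefix#*/}
      if [ "$len" = "$prefix" ]; then len=32; fi   # host route without "/len"
    fi
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    # Keep the matching route with the longest prefix seen so far
    if (( (dst & mask) == ($(ip2int "$net") & mask) && len > best_len )); then
      best=$line best_len=$len
    fi
  done
  printf '%s\n' "$best"
}
```

Inside the node, ip route show | route_lookup 10.244.2.5 prints 10.244.0.0/16 dev flannel-wg scope link for the routing table above.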

Ping the cni-8c9np Pod from the net Pod

root@kind:~# kubectl exec -it net -- ping 10.244.2.5 -c 1
PING 10.244.2.5 (10.244.2.5): 56 data bytes
64 bytes from 10.244.2.5: seq=0 ttl=62 time=2.926 ms

--- 10.244.2.5 ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max = 2.926/2.926/2.926 ms

Capture on eth0 of the net Pod

net~$ tcpdump -pne -i eth0
10:20:20.910458 fa:f0:48:65:c5:28 > 3e:37:f3:a0:8e:a0, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.5: ICMP echo request, id 74, seq 0, length 64
10:20:20.910777 3e:37:f3:a0:8e:a0 > fa:f0:48:65:c5:28, ethertype IPv4 (0x0800), length 98: 10.244.2.5 > 10.244.1.3: ICMP echo reply, id 74, seq 0, length 64

The source MAC fa:f0:48:65:c5:28 is the MAC of the Pod's eth0. The destination MAC 3e:37:f3:a0:8e:a0 is the MAC of cni0 on the node hosting the net Pod, and cni0's IP address, 10.244.1.1, is the Pod's gateway. Because 10.244.2.5 lies outside the Pod's local subnet 10.244.1.0/24, the Pod routes the packet to the gateway, so at layer 2 the frame is addressed to cni0. The Pod's ARP table confirms the mapping:

net~$ arp -n
Address                  HWtype  HWaddress           Flags Mask            Iface
10.244.1.1               ether   3e:37:f3:a0:8e:a0   C                     eth0
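The two checks above, reading the destination MAC from the capture and matching it against the Pod's ARP table, can be scripted. This is a sketch with hypothetical helper names (frame_macs, arp_ip_for_mac) that parse the tcpdump -e and arp -n text formats shown above; applied to this capture, it shows that the frame is addressed to the gateway 10.244.1.1 even though the IP destination is 10.244.2.5.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the tcpdump -e and arp -n formats shown above.

frame_macs() {                 # one `tcpdump -e` line on stdin -> "SRC DST"
  awk '{ sub(/,$/, "", $4); print $2, $4 }'   # $4 carries a trailing comma
}

arp_ip_for_mac() {             # usage: arp_ip_for_mac MAC < arp-output
  awk -v mac="$1" 'tolower($3) == tolower(mac) { print $1 }'
}
```

For example, piping the first capture line into frame_macs yields the destination 3e:37:f3:a0:8e:a0, and arp -n | arp_ip_for_mac 3e:37:f3:a0:8e:a0 inside the Pod prints 10.244.1.1.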

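The Pod's eth0 appears as eth0@if5, which means its veth peer has interface index 5 on the node. On flannel-wireguard-worker, index 5 is vethd38faacd@if3, so vethd38faacd is the host end of the Pod's veth pair.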
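The index lookup can also be done mechanically. In ip link output, the @ifN suffix of a veth interface is the ifindex of its peer. The sketch below uses two hypothetical helpers: peer_ifindex extracts N from a line such as 3: eth0@if5:, and ifname_by_index finds the interface with that index in ip -o link output. Reading /sys/class/net/eth0/iflink inside the Pod gives the same peer index.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for locating the host end of a Pod's veth pair.

peer_ifindex() {               # "3: eth0@if5: <...>" on stdin -> "5"
  sed -n 's/^[0-9]*: [^@:]*@if\([0-9]*\):.*/\1/p'
}

ifname_by_index() {            # usage: ifname_by_index IDX < `ip -o link` output
  awk -F': ' -v idx="$1" '$1 == idx { split($2, name, "@"); print name[1] }'
}
```

Usage: kubectl exec net -- ip -o link show eth0 | peer_ifindex prints 5, and docker exec flannel-wireguard-worker ip -o link | ifname_by_index 5 then prints vethd38faacd.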

Capture on vethd38faacd on flannel-wireguard-worker

root@flannel-wireguard-worker:/# tcpdump -pne -i vethd38faacd
10:21:30.939970 fa:f0:48:65:c5:28 > 3e:37:f3:a0:8e:a0, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.5: ICMP echo request, id 90, seq 0, length 64
10:21:30.940112 3e:37:f3:a0:8e:a0 > fa:f0:48:65:c5:28, ethertype IPv4 (0x0800), length 98: 10.244.2.5 > 10.244.1.3: ICMP echo reply, id 90, seq 0, length 64

The capture shows the same frames as the one on the Pod's eth0 (apart from the timestamps and the ICMP id of this separate ping run), because the two interfaces are the two ends of the same veth pair.

Capture on cni0 on flannel-wireguard-worker

root@flannel-wireguard-worker:/# tcpdump -pne -i cni0
10:21:30.939970 fa:f0:48:65:c5:28 > 3e:37:f3:a0:8e:a0, ethertype IPv4 (0x0800), length 98: 10.244.1.3 > 10.244.2.5: ICMP echo request, id 90, seq 0, length 64
10:21:30.940112 3e:37:f3:a0:8e:a0 > fa:f0:48:65:c5:28, ethertype IPv4 (0x0800), length 98: 10.244.2.5 > 10.244.1.3: ICMP echo reply, id 90, seq 0, length 64

The source MAC fa:f0:48:65:c5:28 is the MAC of eth0 in the net Pod, and the destination MAC 3e:37:f3:a0:8e:a0 is the MAC of cni0.

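The routing table of flannel-wireguard-worker shows that the packet, destined for 10.244.2.5, matches the route 10.244.0.0/16 dev flannel-wg scope link and is therefore forwarded out through the flannel-wg interface.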

Capture on flannel-wg on flannel-wireguard-worker

root@flannel-wireguard-worker:/# tcpdump -pne -i flannel-wg
listening on flannel-wg, link-type RAW (Raw IP), snapshot length 262144 bytes
10:22:36.317228 ip: 10.244.1.3 > 10.244.2.5: ICMP echo request, id 98, seq 0, length 64
10:22:36.317538 ip: 10.244.2.5 > 10.244.1.3: ICMP echo reply, id 98, seq 0, length 64

Packets on flannel-wg carry no link-layer header and therefore no MAC addresses; the capture starts at the IP header. This is because flannel-wg is a layer-3 point-to-point WireGuard interface, whose link type tcpdump reports as RAW (Raw IP).

Install the wireguard-tools package on flannel-wireguard-worker and inspect the WireGuard configuration

root@flannel-wireguard-worker:/# apt install -y wireguard-tools
# Inspect the WireGuard interface
root@flannel-wireguard-worker:/# ip -d link show
2: flannel-wg: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN mode DEFAULT group default
    link/none  promiscuity 0 minmtu 0 maxmtu 2147483552
    wireguard addrgenmode none numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535
root@flannel-wireguard-worker:/# wg
interface: flannel-wg
  public key: 1umq7/lWVbly2PKshmKxLOYq+NI3MAsCYr3OiIVuSG8=
  private key: (hidden)
  listening port: 51820

peer: 8UiTuiJtms7jN89M45aRg/RHu69CSheMM5JLmO30RxM=
  endpoint: 172.18.0.2:51820
  allowed ips: 10.244.2.0/24
  latest handshake: 8 seconds ago
  transfer: 916 B received, 1.18 KiB sent

peer: D67/74RE+5kgAGYJdU7MFgbOZRJ0kNntKoMK9u8dFA4=
  endpoint: 172.18.0.3:51820
  allowed ips: 10.244.0.0/24

For the destination 10.244.2.5, the matching peer is the one whose allowed ips is 10.244.2.0/24, so the encrypted packet is sent to that peer's endpoint 172.18.0.2:51820, the node that owns 10.244.2.0/24 (flannel-wireguard-worker2).
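This is WireGuard's cryptokey routing on the sending side: the peer whose allowed ips contain the inner destination is selected, and that peer's endpoint becomes the outer UDP destination. The sketch below performs that selection over wg show flannel-wg dump output, which is tab-separated: the first line describes the interface, and each following line describes a peer (public key, preshared key, endpoint, comma-separated allowed ips, and further counters). wg_peer_for and ip2int are hypothetical helpers; only IPv4 allowed ips are considered, and the most specific match wins.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: pick the WireGuard peer for an IPv4 destination from
# `wg show <iface> dump` output. Prints "PUBLIC_KEY ENDPOINT", or nothing.

ip2int() {                     # "a.b.c.d" -> 32-bit integer
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

wg_peer_for() {                # usage: wg_peer_for DEST < dump
  local dst pub psk ep allowed rest cidr net len mask best="" best_len=-1
  local -a cidrs
  dst=$(ip2int "$1")
  read -r _                    # first line describes the interface itself
  while IFS=$'\t' read -r pub psk ep allowed rest; do
    IFS=, read -ra cidrs <<< "$allowed"            # allowed ips are comma-separated
    for cidr in "${cidrs[@]}"; do
      [[ $cidr =~ ^[0-9.]+/[0-9]+$ ]] || continue  # skip IPv6 and "(none)"
      net=${cidr%/*} len=${cidr#*/}
      mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
      if (( (dst & mask) == ($(ip2int "$net") & mask) && len > best_len )); then
        best="$pub $ep" best_len=$len
      fi
    done
  done
  printf '%s\n' "$best"
}
```

On the node, wg_peer_for 10.244.2.5 < <(wg show flannel-wg dump) should print the 8UiTuiJt... peer with endpoint 172.18.0.2:51820. A local Pod address such as 10.244.1.3 matches no peer, which is consistent with the node routing it to cni0 instead.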

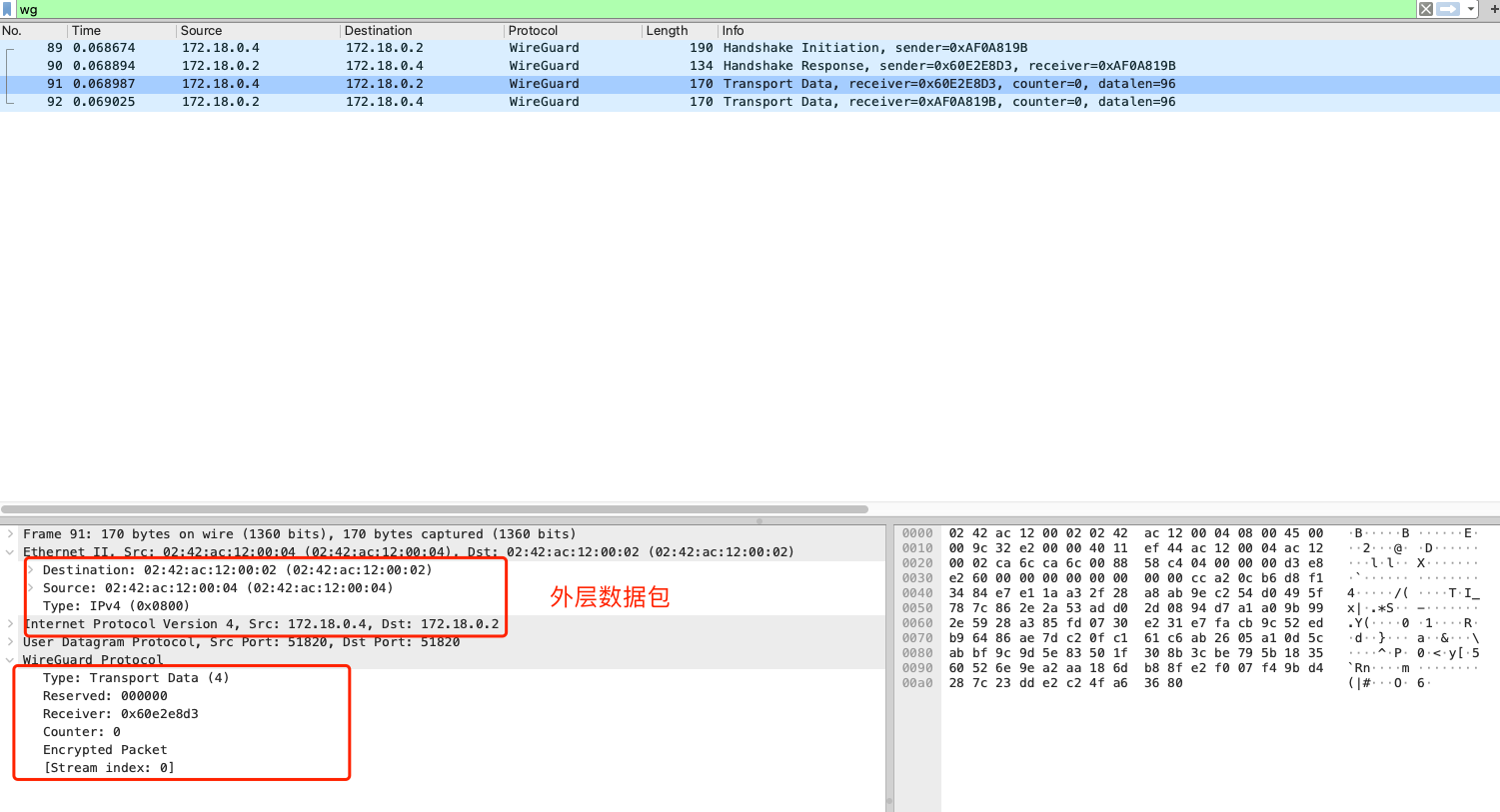

Capture on eth0 of flannel-wireguard-worker

The outer source MAC 02:42:ac:12:00:04 is the MAC of this node's eth0, and the outer destination MAC 02:42:ac:12:00:02 is the MAC of the peer node's eth0 (172.18.0.2), as the node's ARP table shows:

root@flannel-wireguard-worker:/# arp -n
Address                  HWtype  HWaddress           Flags Mask            Iface
172.18.0.2               ether   02:42:ac:12:00:02   C                     eth0

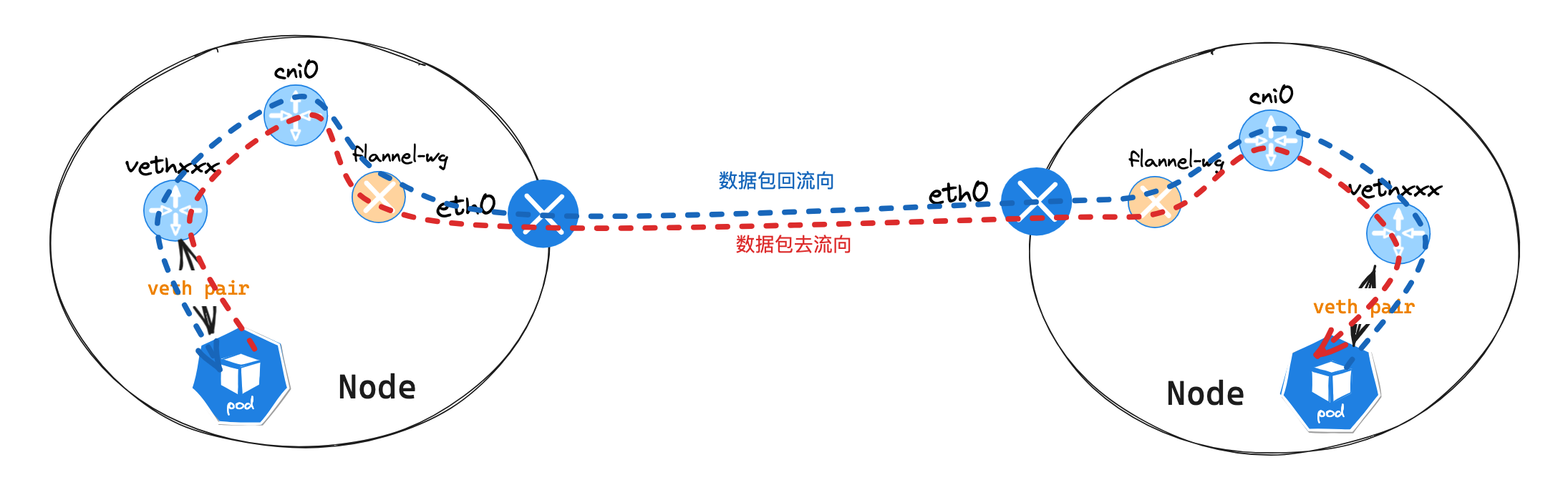

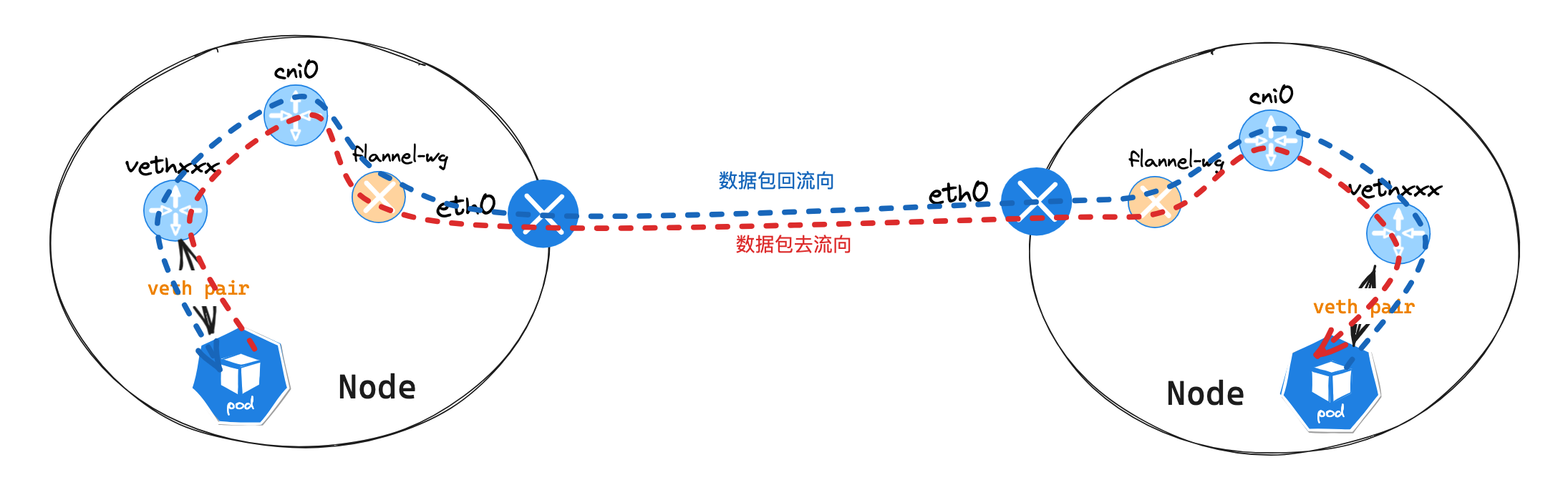
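Although the traffic on eth0 is encrypted, the outer packets can still be classified. The first byte of every WireGuard UDP payload is the message type (1 handshake initiation, 2 handshake response, 3 cookie reply, 4 transport data), and a transport data message consists of a 16-byte header, the inner packet zero-padded to a multiple of 16 bytes, and a 16-byte authentication tag. The helpers below (wg_msg_type and wg_data_udp_len, both hypothetical) are a sketch of these two rules; the length calculation ignores the case where padding is capped by the interface MTU. Under these assumptions, the 84-byte inner ICMP packet from the ping test (20-byte IP header, 8-byte ICMP header, 56 data bytes) would appear on eth0 as a UDP payload of 128 bytes.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for reading encrypted WireGuard traffic on eth0.

wg_msg_type() {                # first UDP payload byte -> message type
  case $1 in
    1|01|0x01) echo handshake-initiation ;;
    2|02|0x02) echo handshake-response ;;
    3|03|0x03) echo cookie-reply ;;
    4|04|0x04) echo transport-data ;;
    *)         echo unknown ;;
  esac
}

wg_data_udp_len() {            # inner IP packet length -> UDP payload length
  local inner=$1 padded
  padded=$(( (inner + 15) / 16 * 16 ))   # plaintext zero-padded to 16 bytes
  # 4 type/reserved + 4 receiver index + 8 counter = 16-byte header,
  # then the ciphertext and a 16-byte Poly1305 tag
  echo $(( 16 + padded + 16 ))
}
```

With tcpdump -pne -i eth0 -X udp port 51820, the byte right after the 8-byte UDP header is the one to pass to wg_msg_type.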

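Packet flow

- The packet leaves the net Pod. The Pod's routing table (10.244.0.0/16 via 10.244.1.1 dev eth0) sends it to the gateway 10.244.1.1.
- It crosses the veth pair to vethd38faacd on the flannel-wireguard-worker host and enters the cni0 bridge (10.244.1.1).
- flannel-wireguard-worker looks up its own routing table. The destination 10.244.2.5 matches 10.244.0.0/16 dev flannel-wg scope link, so the packet is handed to the flannel-wg interface.
- flannel-wg encrypts the packet, wraps it in a WireGuard UDP packet addressed to the peer endpoint 172.18.0.2:51820, and sends it out through eth0.
- The peer host flannel-wireguard-worker2 receives the packet, recognizes it as WireGuard traffic, and its kernel WireGuard module decrypts and decapsulates it.
- The decapsulated packet is addressed to 10.244.2.5; the local route 10.244.2.0/24 dev cni0 proto kernel scope link src 10.244.2.1 sends it to cni0.
- cni0 looks up its MAC table (see brctl showmacs cni0) and finally delivers the frame to the cni-8c9np Pod.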
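The last step, the bridge choosing the port that leads to the destination Pod, is a lookup in cni0's forwarding database. brctl showmacs cni0 lists port numbers, while bridge fdb show br cni0 prints the interface name next to each MAC, which is easier to act on. The sketch below (fdb_port, a hypothetical helper) returns the port for a MAC from bridge fdb output. The MAC of cni-8c9np's eth0 does not appear in this document, so the usage example uses the net Pod on flannel-wireguard-worker instead, whose eth0 MAC fa:f0:48:65:c5:28 sits behind vethd38faacd.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the bridge port (interface) behind a MAC address,
# reading `bridge fdb show br <bridge>` output on stdin.
fdb_port() {                   # usage: fdb_port MAC < fdb-output
  awk -v mac="$1" 'tolower($1) == tolower(mac) {
    for (i = 2; i < NF; i++)
      if ($i == "dev") { print $(i + 1); exit }
  }'
}
```

Usage: docker exec flannel-wireguard-worker bridge fdb show br cni0 | fdb_port fa:f0:48:65:c5:28 should print vethd38faacd once the bridge has learned that address.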

Service communication

The packet forwarding flow is the same as for Service communication in the Flannel UDP mode document.
